Fábio Vital

Implicit Repair with Reinforcement Learning in Emergent Communication

Feb 18, 2025

Abstract: Conversational repair is a mechanism used to detect and resolve miscommunication and misinformation problems when two or more agents interact. One particular and underexplored form of repair in emergent communication is implicit repair, where the interlocutor deliberately conveys the desired information in a way that prevents the other interlocutors from being misinformed. This work explores how redundancy can modify the emergent communication protocol so that it continues to convey the information needed to complete the underlying task, even under additional external environmental pressures such as noise. We extend the signaling game known as the Lewis Game by adding noise to the communication channel and to the inputs received by the agents. Our analysis shows that agents add redundancy to the transmitted messages to counteract the negative impact of noise on task success. We also observe that the generalization capabilities of the emerging communication protocol remain equivalent to those of architectures employed in simpler, entirely deterministic games. Moreover, our method is the only one suited to producing robust communication protocols that handle cases both with and without noise while maintaining increased generalization performance.
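To illustrate why redundancy helps under channel noise, here is a minimal sketch of a one-shot signaling game with a hand-coded repetition protocol. It is not the reinforcement-learning method from the paper: the noise model (each token independently replaced by a random token), the majority-vote receiver, and all names (`noisy_channel`, `send`, `receive`, `success_rate`) are assumptions made for illustration only.

```python
import random

def noisy_channel(message, noise_prob, vocab_size, rng):
    """Assumed noise model: each token is independently replaced by a
    uniformly random token with probability noise_prob."""
    return [
        rng.randrange(vocab_size) if rng.random() < noise_prob else tok
        for tok in message
    ]

def send(target, repeats):
    """Hand-coded sender: repeat the target's token (redundancy)."""
    return [target] * repeats

def receive(message):
    """Hand-coded receiver: pick the most frequent token in the message."""
    return max(set(message), key=message.count)

def success_rate(repeats, noise_prob=0.3, vocab_size=10, trials=5000, seed=0):
    """Fraction of rounds in which the receiver recovers the target."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        target = rng.randrange(vocab_size)
        heard = noisy_channel(send(target, repeats), noise_prob, vocab_size, rng)
        wins += receive(heard) == target
    return wins / trials

if __name__ == "__main__":
    for repeats in (1, 3, 5):
        print(repeats, success_rate(repeats))
```

Under these assumptions, longer (more redundant) messages are recovered more often than single-token messages, which is the qualitative effect the abstract attributes to the learned protocols.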

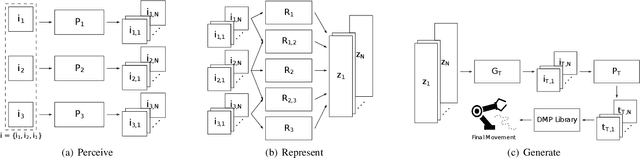

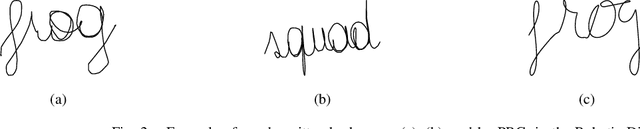

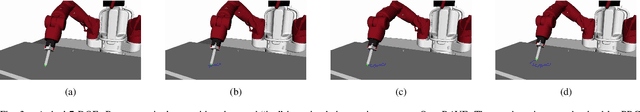

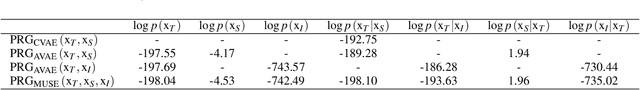

Perceive, Represent, Generate: Translating Multimodal Information to Robotic Motion Trajectories

Apr 06, 2022

Abstract: We present Perceive-Represent-Generate (PRG), a novel three-stage framework that maps perceptual information of different modalities (e.g., visual or sound), corresponding to a sequence of instructions, to an adequate sequence of movements to be executed by a robot. In the first stage, we perceive and pre-process the given inputs, isolating individual commands from the complete instruction provided by a human user. In the second stage, we encode the individual commands into a multimodal latent space using a deep generative model. Finally, in the third stage, we convert the multimodal latent values into individual trajectories and combine them into a single dynamic movement primitive that can be executed on a robotic platform. We evaluate our pipeline on a novel robotic handwriting task, in which the robot receives a word as input through different perceptual modalities (e.g., image, sound) and generates the corresponding motion trajectory to write it, producing coherent and readable handwritten words.
