Francisco Melo

Perceive, Represent, Generate: Translating Multimodal Information to Robotic Motion Trajectories

Apr 06, 2022

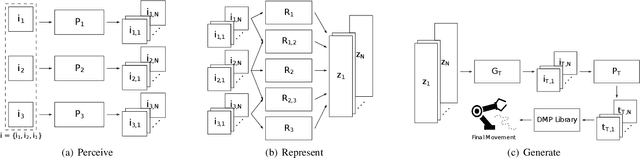

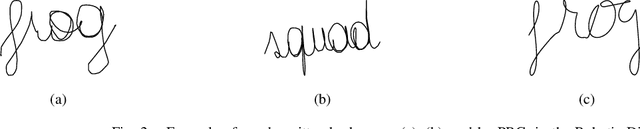

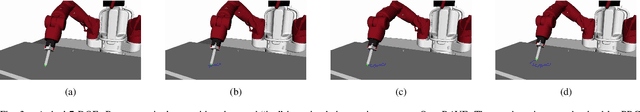

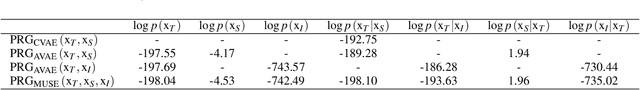

Abstract: We present Perceive-Represent-Generate (PRG), a novel three-stage framework that maps perceptual information of different modalities (e.g., vision or sound), corresponding to a sequence of instructions, to an adequate sequence of movements to be executed by a robot. In the first stage, we perceive and pre-process the given inputs, isolating individual commands from the complete instruction provided by a human user. In the second stage, we encode the individual commands into a multimodal latent space, employing a deep generative model. Finally, in the third stage, we convert the multimodal latent values into individual trajectories and combine them into a single dynamic movement primitive, allowing its execution on a robotic platform. We evaluate our pipeline in the context of a novel robotic handwriting task, where the robot receives as input a word through different perceptual modalities (e.g., image, sound), and generates the corresponding motion trajectory to write it, creating coherent and readable handwritten words.

Class Teaching for Inverse Reinforcement Learners

Nov 29, 2019

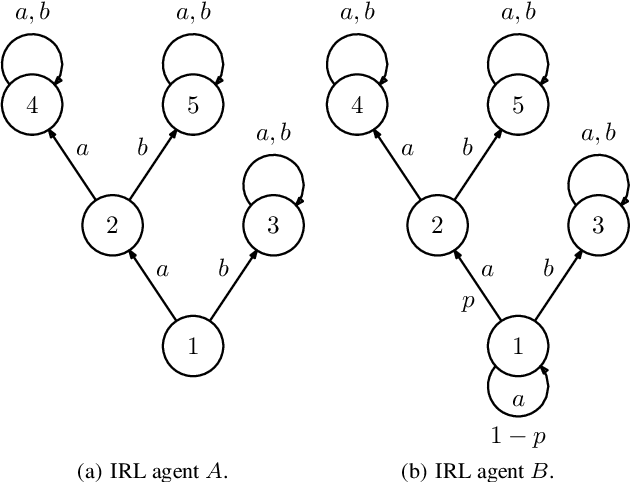

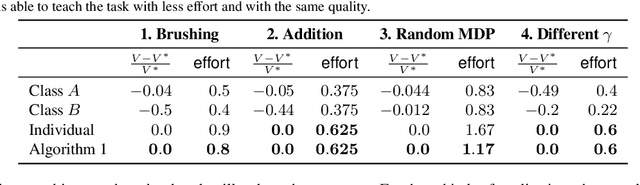

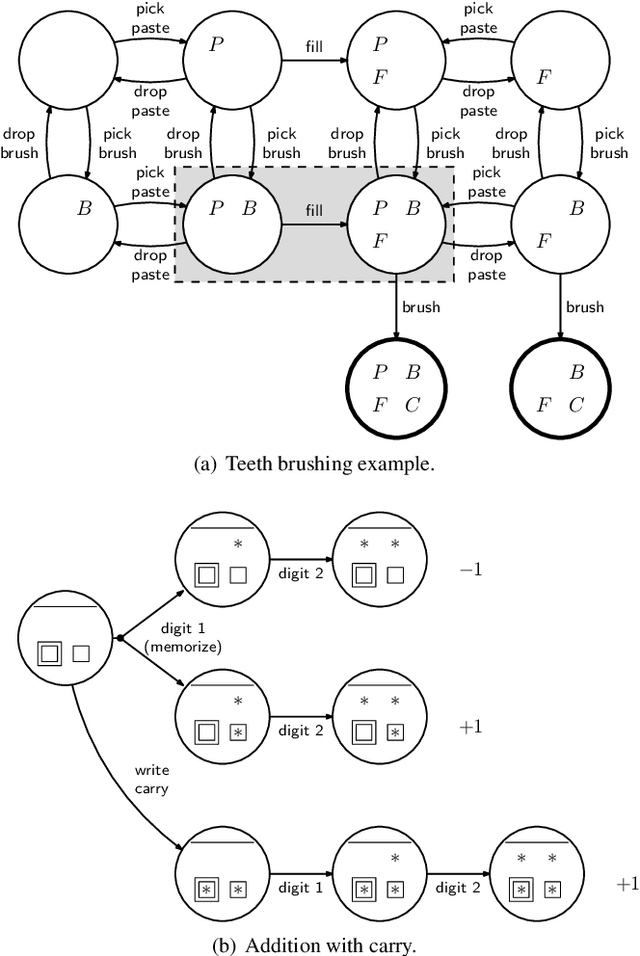

Abstract: In this paper, we propose the first machine teaching algorithm for multiple inverse reinforcement learners. Specifically, our contributions are: (i) we formally introduce the problem of teaching a sequential task to a heterogeneous group of learners; (ii) we identify conditions under which it is possible to conduct such teaching using the same demonstration for all learners; and (iii) we propose and evaluate a simple algorithm that computes one or more demonstrations ensuring that all agents in a heterogeneous class learn a task description that is compatible with the target task. Our analysis shows that, in contrast to other teaching problems, teaching a heterogeneous class with a single demonstration may not be possible as the differences between agents increase. We also showcase the advantages of our proposed machine teaching approach against several possible alternatives.

Multi-class Generalized Binary Search for Active Inverse Reinforcement Learning

Jan 23, 2013

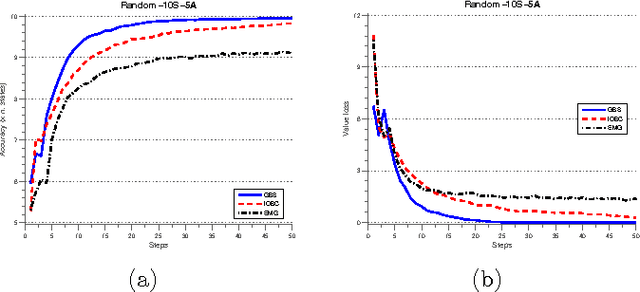

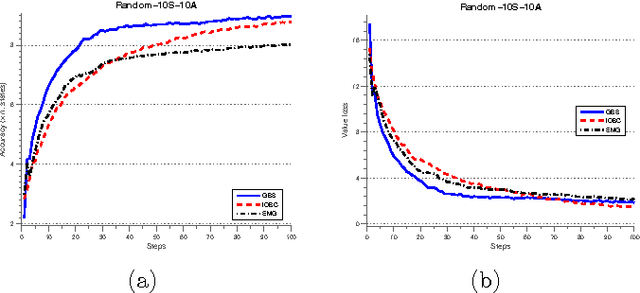

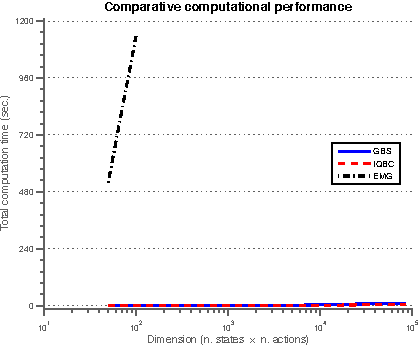

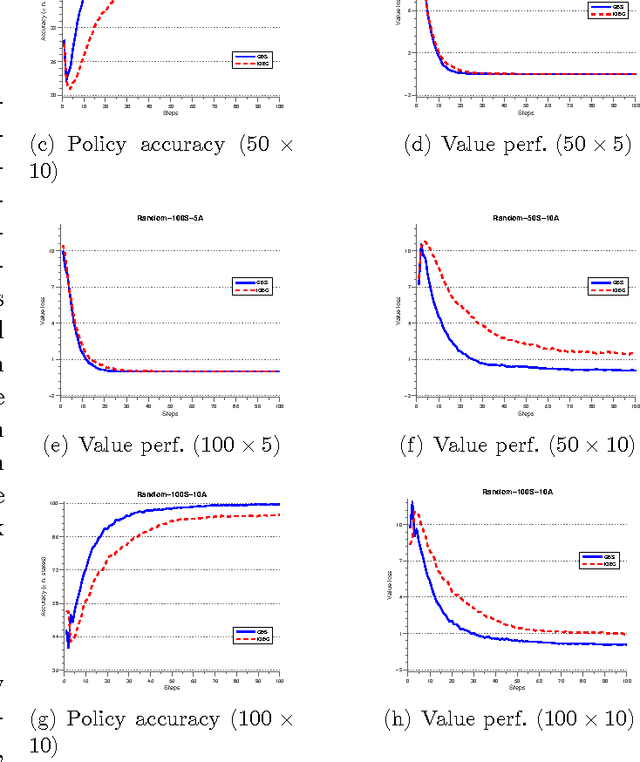

Abstract: This paper addresses the problem of learning a task from demonstration. We adopt the framework of inverse reinforcement learning, where tasks are represented in the form of a reward function. Our contribution is a novel active learning algorithm that enables the learning agent to query the expert for more informative demonstrations, thus leading to more sample-efficient learning. For this novel algorithm (Generalized Binary Search for Inverse Reinforcement Learning, or GBS-IRL), we provide a theoretical bound on sample complexity and illustrate its applicability on several different tasks. To our knowledge, GBS-IRL is the first active IRL algorithm with provable sample complexity bounds. We also discuss our method in light of other existing methods in the literature and its general applicability in multi-class classification problems. Finally, motivated by recent work on learning from demonstration in robots, we also discuss how different forms of human feedback can be integrated in a transparent manner in our learning framework.
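As a rough illustration of the querying idea behind the algorithm's name, the sketch below shows classic noise-free generalized binary search over a finite set of binary-labelled hypotheses: at each step it asks the query on which the surviving hypotheses disagree most evenly, then discards those inconsistent with the answer. This is not GBS-IRL itself, which the abstract describes as a multi-class method operating on demonstrations in an IRL setting. The function name, the dictionary representation of hypotheses, and the threshold example are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Hypothesis = Dict[int, int]  # maps a query to a label in {-1, +1} (illustrative encoding)


def generalized_binary_search(
    hypotheses: Sequence[Hypothesis],
    queries: Sequence[int],
    oracle: Callable[[int], int],
) -> Tuple[List[int], List[int]]:
    """Return (indices of surviving hypotheses, queries asked in order)."""
    alive = list(range(len(hypotheses)))
    asked: List[int] = []
    while len(alive) > 1:
        best, best_score = None, None
        for x in queries:
            if x in asked:
                continue
            # |sum of predictions| is small when the survivors split evenly on x.
            score = abs(sum(hypotheses[i][x] for i in alive))
            if score == len(alive):
                continue  # all survivors agree on x, so asking it tells us nothing
            if best_score is None or score < best_score:
                best, best_score = x, score
        if best is None:
            break  # the survivors cannot be told apart by any remaining query
        label = oracle(best)
        asked.append(best)
        # Noise-free assumption: drop every hypothesis that contradicts the answer.
        alive = [i for i in alive if hypotheses[i][best] == label]
    return alive, asked


# Hypothetical example: threshold hypotheses h_t(x) = +1 if x >= t else -1.
queries = list(range(8))
hypotheses = [{x: (1 if x >= t else -1) for x in queries} for t in range(9)]
true_index = 5
remaining, asked = generalized_binary_search(
    hypotheses, queries, oracle=lambda x: hypotheses[true_index][x]
)
```

On threshold hypotheses like these, the selection rule behaves like ordinary bisection, which is why the number of queries grows roughly logarithmically with the number of hypotheses in this toy case.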
