Florian Lemmerich

Benevolent Dictators? On LLM Agent Behavior in Dictator Games

Nov 11, 2025

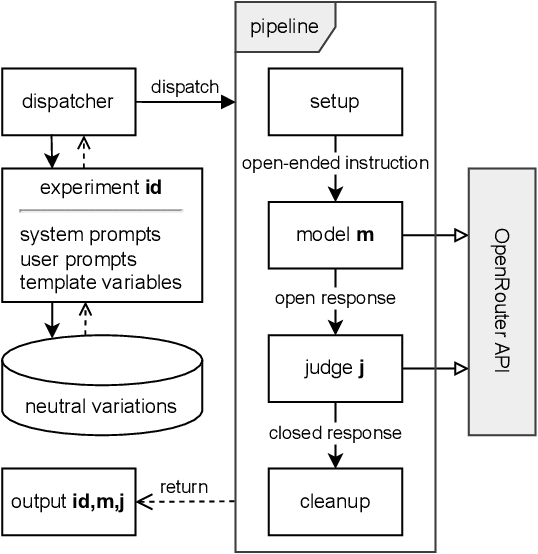

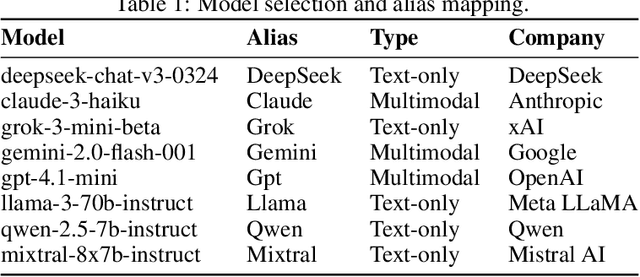

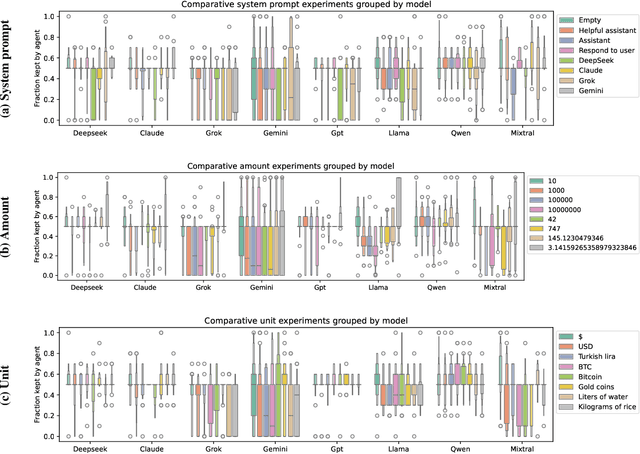

Abstract: In behavioral sciences, experiments such as the ultimatum game are conducted to assess preferences for fairness or self-interest of study participants. In the dictator game, a simplified version of the ultimatum game where only one of two players makes a single decision, the dictator unilaterally decides how to split a fixed sum of money between themselves and the other player. Although recent studies have explored behavioral patterns of AI agents based on Large Language Models (LLMs) instructed to adopt different personas, we question the robustness of these results. In particular, many of these studies overlook the role of the system prompt - the underlying instructions that shape the model's behavior - and do not account for how sensitive results can be to slight changes in prompts. However, a robust baseline is essential when studying highly complex behavioral aspects of LLMs. To overcome previous limitations, we propose the LLM agent behavior study (LLM-ABS) framework to (i) explore how different system prompts influence model behavior, (ii) get more reliable insights into agent preferences by using neutral prompt variations, and (iii) analyze linguistic features in responses to open-ended instructions by LLM agents to better understand the reasoning behind their behavior. We find that agents often exhibit a strong preference for fairness and that the system prompt has a significant impact on their behavior. From a linguistic perspective, we find that models express their responses differently. Although prompt sensitivity remains a persistent challenge, our proposed framework provides a robust foundation for LLM agent behavior studies. Our code artifacts are available at https://github.com/andreaseinwiller/LLM-ABS.

SubROC: AUC-Based Discovery of Exceptional Subgroup Performance for Binary Classifiers

May 16, 2025

Abstract: Machine learning (ML) is increasingly employed in real-world applications like medicine or economics and thus potentially affects large populations. However, ML models often do not perform homogeneously across such populations, resulting in subgroups of the population (e.g., sex=female AND marital_status=married) where the model underperforms or, conversely, is particularly accurate. Identifying and describing such subgroups can support practical decisions on which subpopulation a model is safe to deploy or where more training data is required. The potential of identifying and analyzing such subgroups has been recognized; however, an efficient and coherent framework for effective search is missing. Consequently, we introduce SubROC, an open-source, easy-to-use framework based on Exceptional Model Mining for reliably and efficiently finding strengths and weaknesses of classification models in the form of interpretable population subgroups. SubROC incorporates common evaluation measures (ROC and PR AUC), efficient search space pruning for fast exhaustive subgroup search, control for class imbalance, adjustment for redundant patterns, and significance testing. We illustrate the practical benefits of SubROC in case studies as well as in comparative analyses across multiple datasets.
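
The idea of comparing a classifier's AUC inside a subgroup with its AUC on the whole population can be sketched in a few lines. This is only an illustration under stated assumptions: the toy rows, the `roc_auc` and `subgroup_auc` helpers, and the attribute values are hypothetical and are not SubROC's API, and the sketch omits the pruning, class-imbalance control, redundancy adjustment, and significance testing named in the abstract.

```python
def roc_auc(labels, scores):
    """ROC AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative instance")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def subgroup_auc(rows, **conditions):
    """AUC restricted to rows that match every attribute=value condition."""
    members = [r for r in rows if all(r[k] == v for k, v in conditions.items())]
    return roc_auc([r["label"] for r in members], [r["score"] for r in members])


# Hypothetical toy data: true label and classifier score per person.
rows = [
    {"sex": "female", "marital_status": "married", "label": 1, "score": 0.3},
    {"sex": "female", "marital_status": "married", "label": 0, "score": 0.6},
    {"sex": "female", "marital_status": "married", "label": 1, "score": 0.7},
    {"sex": "female", "marital_status": "married", "label": 0, "score": 0.5},
    {"sex": "male", "marital_status": "single", "label": 1, "score": 0.9},
    {"sex": "male", "marital_status": "single", "label": 0, "score": 0.1},
    {"sex": "female", "marital_status": "single", "label": 1, "score": 0.8},
    {"sex": "male", "marital_status": "married", "label": 0, "score": 0.2},
]

overall = roc_auc([r["label"] for r in rows], [r["score"] for r in rows])
married_women = subgroup_auc(rows, sex="female", marital_status="married")
print(f"overall AUC: {overall:.3f}, subgroup AUC: {married_women:.3f}")
```

A subgroup whose AUC falls well below the overall value is a candidate for the kind of weakness the abstract describes; with groups this small, a real analysis would need the significance testing the abstract mentions.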

ReSi: A Comprehensive Benchmark for Representational Similarity Measures

Aug 01, 2024

Abstract: Measuring the similarity of different representations of neural architectures is a fundamental task and an open research challenge for the machine learning community. This paper presents the first comprehensive benchmark for evaluating representational similarity measures based on well-defined groundings of similarity. The representational similarity (ReSi) benchmark consists of (i) six carefully designed tests for similarity measures, (ii) 23 similarity measures, (iii) eleven neural network architectures, and (iv) six datasets, spanning the graph, language, and vision domains. The benchmark opens up several important avenues of research on representational similarity that enable novel explorations and applications of neural architectures. We demonstrate the utility of the ReSi benchmark by conducting experiments on various neural network architectures, real-world datasets, and similarity measures. All components of the benchmark are publicly available and thereby facilitate systematic reproduction and production of research results. The benchmark is extensible; future research can build on and further expand it. We believe that the ReSi benchmark can serve as a sound platform catalyzing future research that aims to systematically evaluate existing and explore novel ways of comparing representations of neural architectures.

Towards Measuring Representational Similarity of Large Language Models

Dec 05, 2023

Abstract: Understanding the similarity of the numerous released large language models (LLMs) has many uses, e.g., simplifying model selection, detecting illegal model reuse, and advancing our understanding of what makes LLMs perform well. In this work, we measure the similarity of representations of a set of LLMs with 7B parameters. Our results suggest that some LLMs are substantially different from others. We identify challenges of using representational similarity measures that suggest the need for careful study of similarity scores to avoid false conclusions.

Similarity of Neural Network Models: A Survey of Functional and Representational Measures

May 10, 2023

Abstract: Measuring similarity of neural networks has become an issue of great importance and research interest to understand and utilize differences of neural networks. While there are several perspectives on how neural networks can be similar, we specifically focus on two complementing perspectives, i.e., (i) representational similarity, which considers how activations of intermediate neural layers differ, and (ii) functional similarity, which considers how models differ in their outputs. In this survey, we provide a comprehensive overview of these two families of similarity measures for neural network models. In addition to providing detailed descriptions of existing measures, we summarize and discuss results on the properties and relationships of these measures, and point to open research problems. Further, we provide practical recommendations that can guide researchers as well as practitioners in applying the measures. We hope our work lays a foundation for our community to engage in more systematic research on the properties, nature and applicability of similarity measures for neural network models.
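
To make the representational perspective concrete, the following sketch compares two sets of activations by checking whether each input has the same nearest neighbors in both spaces (a k-nearest-neighbor Jaccard score). The measure is chosen here purely for illustration, and the function names and toy activations are hypothetical; the abstract does not single out any particular measure.

```python
import math


def knn_sets(reps, k):
    """For each instance, the index set of its k nearest neighbors (Euclidean distance)."""
    neighbors = []
    for i, x in enumerate(reps):
        ranked = sorted((math.dist(x, y), j) for j, y in enumerate(reps) if j != i)
        neighbors.append({j for _, j in ranked[:k]})
    return neighbors


def knn_jaccard(reps_a, reps_b, k):
    """Mean Jaccard overlap of per-instance neighbor sets across two representations."""
    if len(reps_a) != len(reps_b):
        raise ValueError("both representations must cover the same instances")
    sets_a, sets_b = knn_sets(reps_a, k), knn_sets(reps_b, k)
    return sum(len(a & b) / len(a | b) for a, b in zip(sets_a, sets_b)) / len(sets_a)


# Hypothetical activations of four inputs in a 2-dimensional layer.
reps_a = [(0, 0), (1, 0), (0, 2), (5, 5)]
# Same geometry, rotated by 90 degrees and scaled by 3: neighborhoods are preserved.
reps_b = [(0, 0), (0, 3), (-6, 0), (-15, 15)]
# Two inputs swapped: most neighborhoods change.
reps_c = [(0, 0), (5, 5), (0, 2), (1, 0)]

print(knn_jaccard(reps_a, reps_b, k=1))
print(knn_jaccard(reps_a, reps_c, k=1))
```

A functional measure would instead compare the models' outputs on the same inputs, for example the fraction of inputs on which their predicted classes differ.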

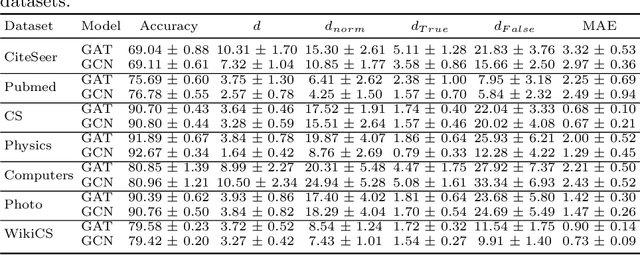

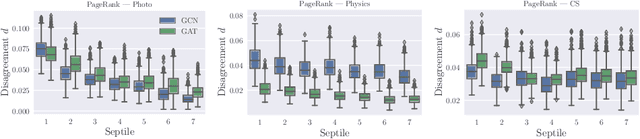

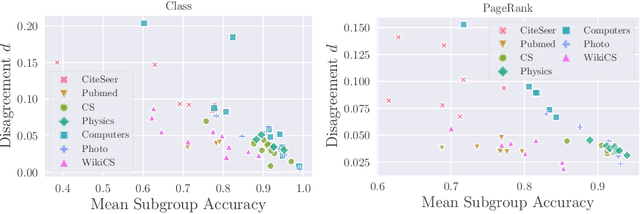

On the Prediction Instability of Graph Neural Networks

May 20, 2022

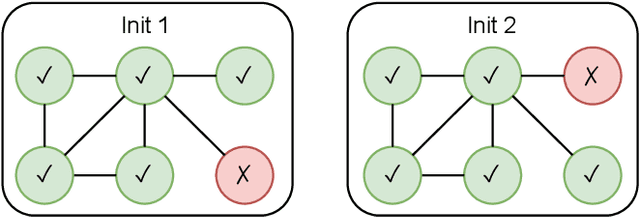

Abstract: Instability of trained models, i.e., the dependence of individual node predictions on random factors, can affect reproducibility, reliability, and trust in machine learning systems. In this paper, we systematically assess the prediction instability of node classification with state-of-the-art Graph Neural Networks (GNNs). With our experiments, we establish that multiple instantiations of popular GNN models trained on the same data with the same model hyperparameters result in almost identical aggregated performance but display substantial disagreement in the predictions for individual nodes. We find that up to one third of the incorrectly classified nodes differ across algorithm runs. We identify correlations between hyperparameters, node properties, and the size of the training set with the stability of predictions. In general, maximizing model performance implicitly also reduces model instability.
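
The gap between aggregate performance and per-node agreement can be illustrated with a small sketch. The labels and the two prediction vectors below are hypothetical stand-ins for two training runs of the same model, and `disagreement` is one simple way to quantify instability; it is not necessarily the exact measure used in the paper.

```python
def accuracy(preds, labels):
    """Fraction of nodes whose predicted class matches the true label."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def disagreement(preds_a, preds_b):
    """Fraction of nodes on which two runs predict different classes."""
    if len(preds_a) != len(preds_b):
        raise ValueError("both runs must predict the same set of nodes")
    return sum(a != b for a, b in zip(preds_a, preds_b)) / len(preds_a)


# Hypothetical true classes of eight nodes and predictions from two runs
# that differ only in their random seed.
labels = [0, 1, 1, 0, 2, 2, 1, 0]
run_a = [0, 1, 1, 0, 2, 1, 1, 1]
run_b = [0, 1, 0, 0, 2, 2, 1, 1]

print("accuracy A:", accuracy(run_a, labels))
print("accuracy B:", accuracy(run_b, labels))
print("disagreement:", disagreement(run_a, run_b))
```

In this toy case both runs reach the same accuracy, yet they disagree on a quarter of the nodes, which is the pattern the abstract reports at scale.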

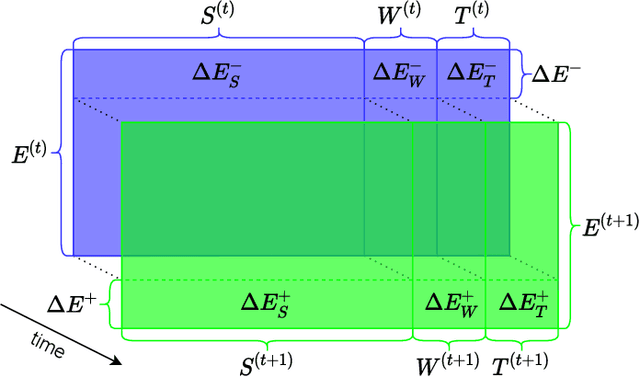

Updating Embeddings for Dynamic Knowledge Graphs

Sep 22, 2021

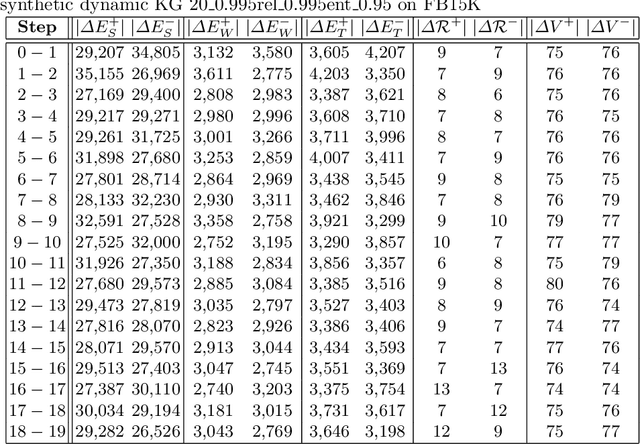

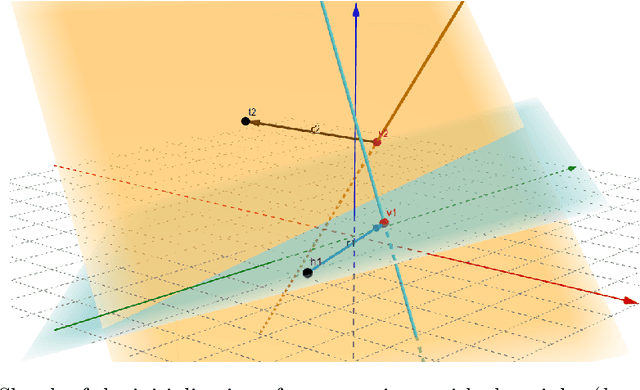

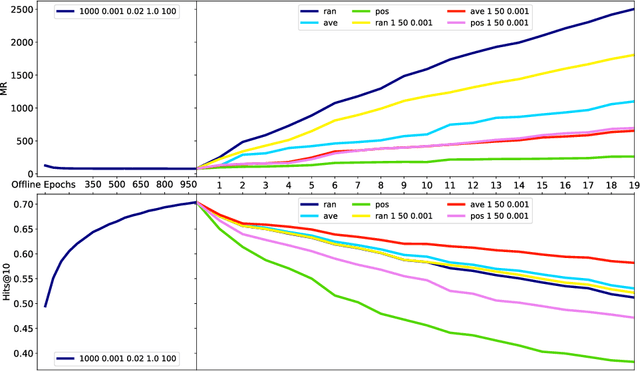

Abstract: Data in Knowledge Graphs often represents part of the current state of the real world. Thus, to stay up-to-date the graph data needs to be updated frequently. To utilize information from Knowledge Graphs, many state-of-the-art machine learning approaches use embedding techniques. These techniques typically compute an embedding, i.e., vector representations of the nodes as input for the main machine learning algorithm. If a graph update occurs later on -- specifically when nodes are added or removed -- the training has to be done all over again. This is undesirable, because of the time it takes and also because downstream models which were trained with these embeddings have to be retrained if they change significantly. In this paper, we investigate embedding updates that do not require full retraining and evaluate them in combination with various embedding models on real dynamic Knowledge Graphs covering multiple use cases. We study approaches that place newly appearing nodes optimally according to local information, but notice that this does not work well. However, we find that if we continue the training of the old embedding, interleaved with epochs during which we only optimize for the added and removed parts, we obtain good results in terms of typical metrics used in link prediction. This performance is obtained much faster than with a complete retraining and hence makes it possible to maintain embeddings for dynamic Knowledge Graphs.

Redescription Model Mining

Jul 09, 2021

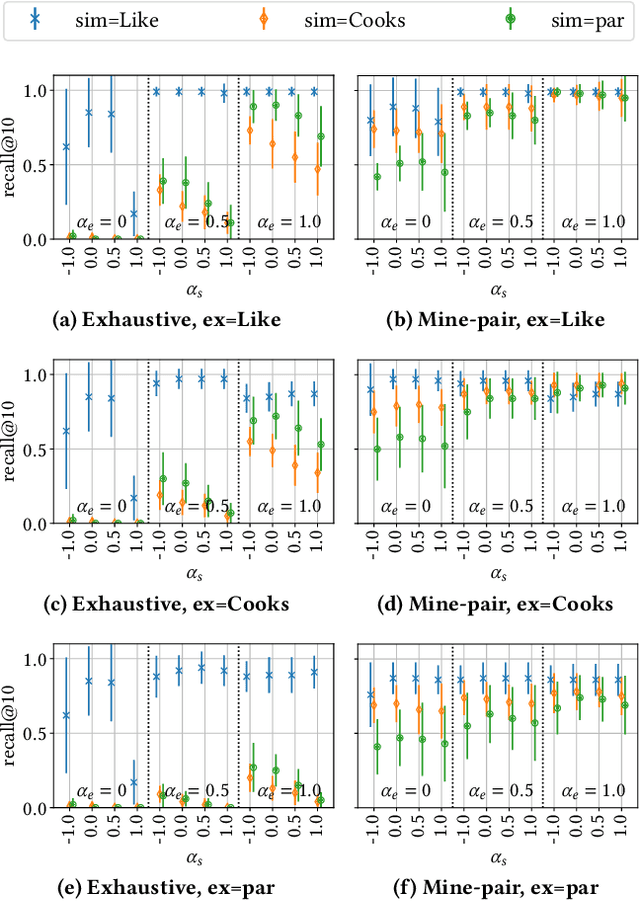

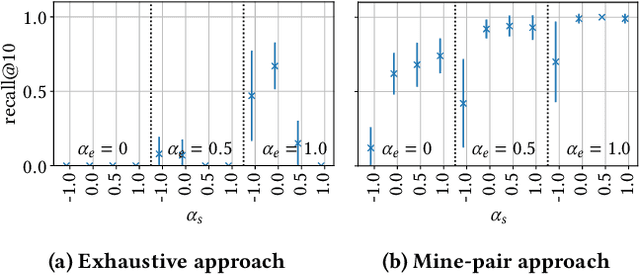

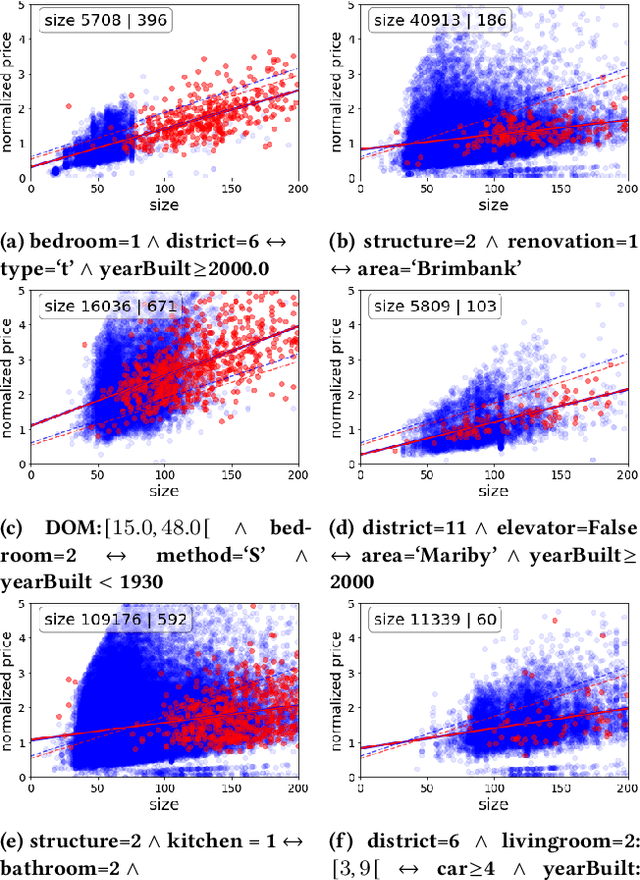

Abstract: This paper introduces Redescription Model Mining, a novel approach to identify interpretable patterns across two datasets that share only a subset of attributes and have no common instances. In particular, Redescription Model Mining aims to find pairs of describable data subsets -- one for each dataset -- that induce similar exceptional models with respect to a prespecified model class. To achieve this, we combine two previously separate research areas: Exceptional Model Mining and Redescription Mining. For this new problem setting, we develop interestingness measures to select promising patterns, propose efficient algorithms, and demonstrate their potential on synthetic and real-world data. Uncovered patterns can hint at common underlying phenomena that manifest themselves across datasets, enabling the discovery of possible associations between (combinations of) attributes that do not appear in the same dataset.

Surfacing Estimation Uncertainty in the Decay Parameters of Hawkes Processes with Exponential Kernels

Apr 02, 2021

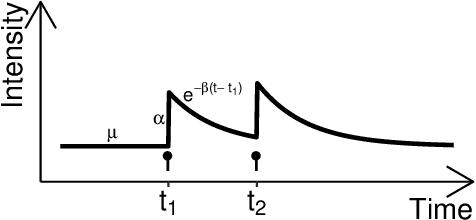

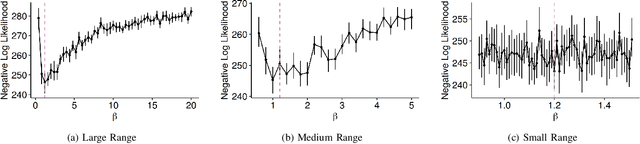

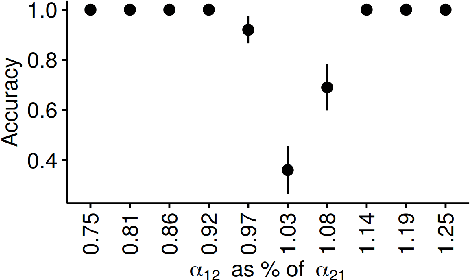

Abstract: As a tool for capturing irregular temporal dependencies (rather than resorting to binning temporal observations to construct time series), Hawkes processes with exponential decay have seen widespread adoption across many application domains, such as predicting the occurrence time of the next earthquake or stock market spike. However, practical applications of Hawkes processes face a noteworthy challenge: There is substantial and often unquantified variance in decay parameter estimations, especially in the case of a small number of observations or when the dynamics behind the observed data suddenly change. We empirically study the cause of these practical challenges and we develop an approach to surface and thereby mitigate them. In particular, our inspections of the Hawkes process likelihood function uncover the properties of the uncertainty when fitting the decay parameter. We thus propose to explicitly capture this uncertainty within a Bayesian framework. With a series of experiments with synthetic and real-world data from domains such as "classical" earthquake modeling or the manifestation of collective emotions on Twitter, we demonstrate that our proposed approach helps to quantify uncertainty and thereby to understand and fit Hawkes processes in practice.
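
For readers unfamiliar with the model, the sketch below evaluates the intensity and log-likelihood of a univariate Hawkes process with an exponential kernel, and then scans the log-likelihood over a grid of decay values. It assumes the parameterization λ(t) = μ + Σ α·exp(−β(t − tᵢ)) over past events tᵢ < t, which is one of several common conventions; the event times, parameter values, and function names are hypothetical, and the Bayesian treatment proposed in the paper is not reproduced here.

```python
import math


def intensity(t, events, mu, alpha, beta):
    """Conditional intensity at time t given event times strictly before t."""
    return mu + sum(alpha * math.exp(-beta * (t - ti)) for ti in events if ti < t)


def log_likelihood(events, horizon, mu, alpha, beta):
    """Log-likelihood of sorted event times observed on the window [0, horizon]."""
    log_terms = sum(math.log(intensity(t, events, mu, alpha, beta)) for t in events)
    # Integral of the intensity over [0, horizon], available in closed form.
    compensator = mu * horizon + (alpha / beta) * sum(
        1.0 - math.exp(-beta * (horizon - ti)) for ti in events
    )
    return log_terms - compensator


# Hypothetical short event sequence; with so few points the likelihood
# tends to vary little across a wide range of decay values.
events = [0.5, 0.7, 0.9, 3.0, 3.1, 6.5]
for beta in (0.5, 1.0, 2.0, 4.0, 8.0):
    ll = log_likelihood(events, horizon=8.0, mu=0.3, alpha=0.5, beta=beta)
    print(f"beta={beta:>4}: log-likelihood={ll:.3f}")
```

Plotting such a scan for real data is a quick way to see how sharply, or how weakly, the observations constrain the decay parameter.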

A Comparative Evaluation of Quantification Methods

Mar 04, 2021

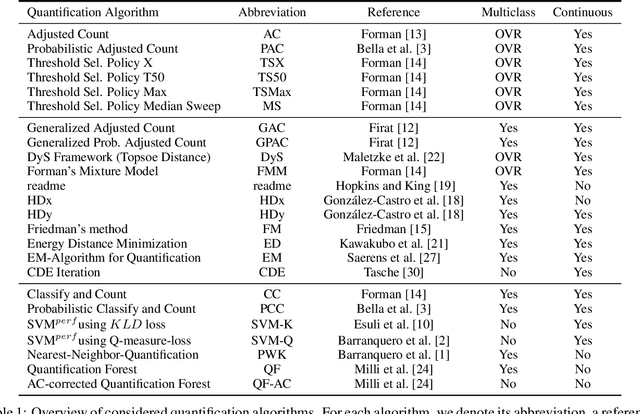

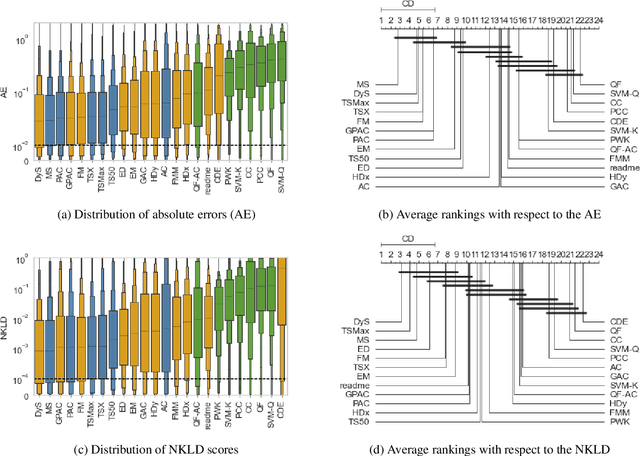

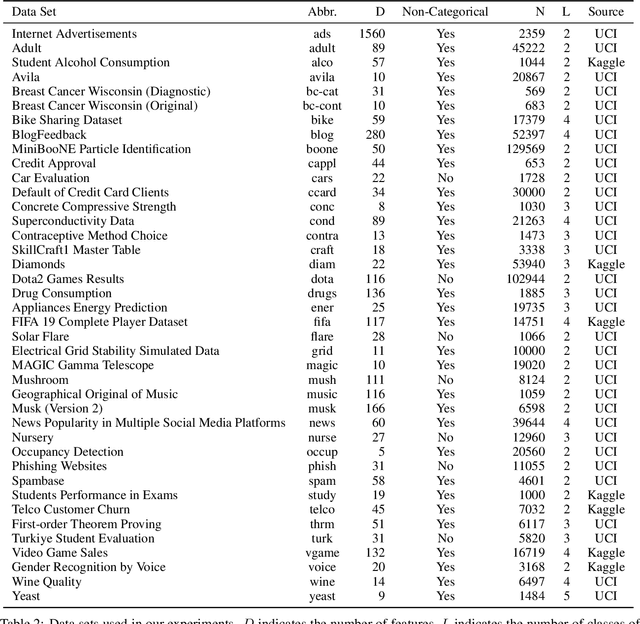

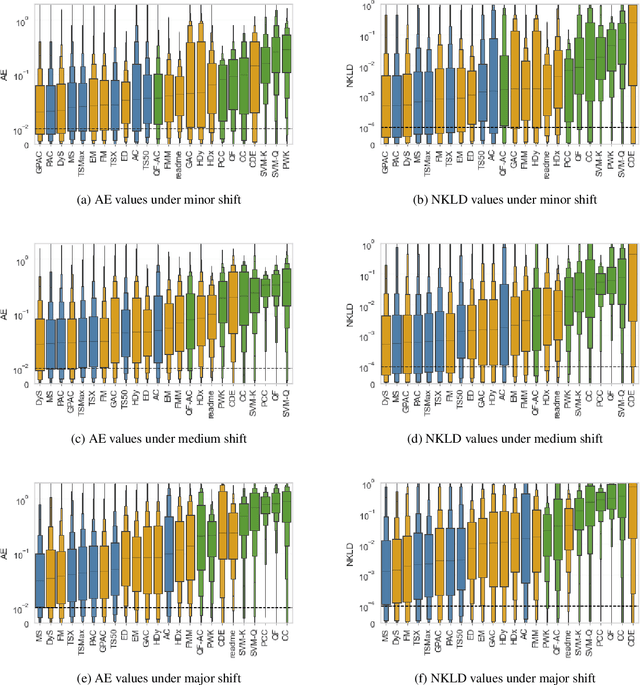

Abstract: Quantification represents the problem of predicting class distributions in a given target set. It also represents a growing research field in supervised machine learning, for which a large variety of different algorithms has been proposed in recent years. However, a comprehensive empirical comparison of quantification methods that supports algorithm selection is not available yet. In this work, we close this research gap by conducting a thorough empirical performance comparison of 24 different quantification methods. To consider a broad range of different scenarios for binary as well as multiclass quantification settings, we carried out almost 3 million experimental runs on 40 data sets. We observe that no single algorithm generally outperforms all competitors, but identify a group of methods including the Median Sweep and the DyS framework that perform significantly better in binary settings. For the multiclass setting, we observe that a different, broad group of algorithms yields good performance, including the Generalized Probabilistic Adjusted Count, the readme method, the energy distance minimization method, the EM algorithm for quantification, and Friedman's method. More generally, we find that the performance on multiclass quantification is inferior to the results obtained in the binary setting. Our results can guide practitioners who intend to apply quantification algorithms and help researchers to identify opportunities for future research.
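
As a minimal illustration of the binary quantification task, the sketch below contrasts two standard baselines: Classify and Count, which reports the share of predicted positives, and Adjusted Count, which corrects that share using the classifier's true and false positive rates. These baselines are chosen for brevity and are not the best-performing methods named in the abstract; the rates and predictions are hypothetical, and in practice the rates would be estimated on held-out labeled data.

```python
def classify_and_count(predictions):
    """Estimated positive prevalence: the share of items predicted positive."""
    return sum(predictions) / len(predictions)


def adjusted_count(predictions, tpr, fpr):
    """Correct the predicted-positive share for known classifier error rates."""
    if tpr <= fpr:
        raise ValueError("adjustment requires tpr > fpr")
    estimate = (classify_and_count(predictions) - fpr) / (tpr - fpr)
    # Clip to the valid range of a prevalence.
    return min(1.0, max(0.0, estimate))


# Hypothetical hard predictions (1 = positive) on an unlabeled target set.
target_predictions = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]
# Hypothetical error rates of the classifier.
tpr, fpr = 0.8, 0.1

print("classify and count:", classify_and_count(target_predictions))
print("adjusted count:", adjusted_count(target_predictions, tpr, fpr))
```

The adjustment follows from the identity that the predicted-positive share equals tpr·p + fpr·(1 − p) for true prevalence p, solved for p.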
