Florence Tupin

IMAGES, IDS

Just Project! Multi-Channel Despeckling, the Easy Way

Aug 21, 2024

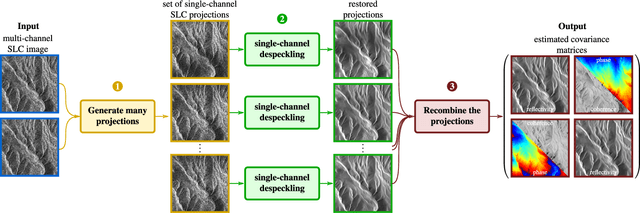

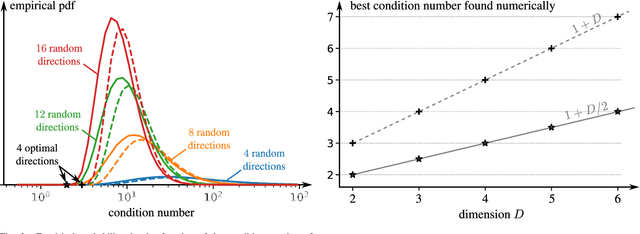

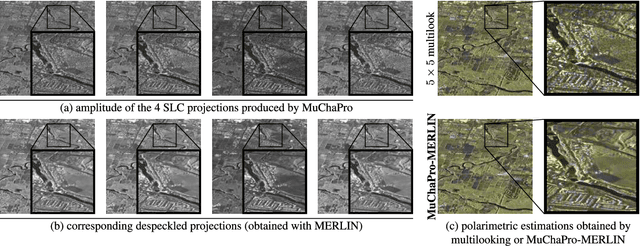

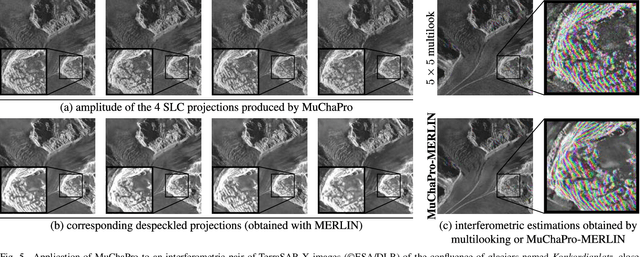

Abstract: Reducing speckle fluctuations in multi-channel SAR images is essential in many applications of SAR imaging such as polarimetric classification or interferometric height estimation. While single-channel despeckling has widely benefited from the application of deep learning techniques, extensions to multi-channel SAR images are much more challenging. This paper introduces MuChaPro, a generic framework that exploits existing single-channel despeckling methods. The key idea is to generate numerous single-channel projections, restore these projections, and recombine them into the final multi-channel estimate. This simple approach is shown to be effective in polarimetric and/or interferometric modalities. A special appeal of MuChaPro is the possibility to apply a self-supervised training strategy to learn sensor-specific networks for single-channel despeckling.
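To make the project/restore/recombine idea concrete, here is a minimal Python sketch for the two-channel case. It is an illustration under stated assumptions, not the MuChaPro implementation: the four projection vectors, the `despeckle` placeholder (a plain average standing in for a single-channel despeckling network) and all function names are hypothetical. It relies only on the fact that the expected intensity of a projection $a^H k$ equals $a^H C a$, which is linear in the covariance matrix $C = E[k k^H]$.

```python
S = 2 ** -0.5

# Four hypothetical projection vectors: enough to identify a 2x2 covariance.
# A practical method would likely use many more and solve a least-squares fit.
PROJECTIONS = [
    (1 + 0j, 0j),
    (0j, 1 + 0j),
    (S + 0j, S + 0j),
    (S + 0j, S * 1j),
]


def project(k, a):
    """Single-channel complex value a^H k for one two-channel pixel k."""
    return sum(ai.conjugate() * ki for ai, ki in zip(a, k))


def despeckle(intensities):
    """Placeholder single-channel despeckler: a plain average (assumption)."""
    return sum(intensities) / len(intensities)


def recombine(p):
    """Recover C from restored projection intensities p_i ~ a_i^H C a_i."""
    p1, p2, p3, p4 = p
    half_trace = 0.5 * (p1 + p2)
    # p3 = half_trace + Re(C12) and p4 = half_trace - Im(C12)
    c12 = complex(p3 - half_trace, half_trace - p4)
    return [[p1, c12], [c12.conjugate(), p2]]


def estimate_covariance(pixels):
    """Project each pixel, restore each projection, recombine into C."""
    restored = []
    for a in PROJECTIONS:
        intensities = [abs(project(k, a)) ** 2 for k in pixels]
        restored.append(despeckle(intensities))
    return recombine(restored)
```

Calling `estimate_covariance` on a list of two-channel complex pixels from a homogeneous area returns a 2x2 Hermitian matrix as nested lists. With a single pixel `k` and no averaging, it reproduces `k k^H` exactly, which is a convenient sanity check of the recombination step.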

Patch-based adaptive temporal filter and residual evaluation

Feb 14, 2024

Abstract: In coherent imaging systems, speckle is a signal-dependent noise that strongly degrades the visual appearance of images. A huge amount of SAR data has been acquired from different sensors with different wavelengths, resolutions, incidences and polarizations. We extend the nonlocal filtering strategy to the temporal domain and propose a patch-based adaptive temporal filter (PATF) to take advantage of well-registered multi-temporal SAR images. A patch-based generalised likelihood ratio test is applied to suppress the effects of changed objects on the multitemporal denoising results. Then, the similarities are transformed into corresponding weights with an exponential function. The denoised value is calculated as a temporal weighted average. Spatial adaptive denoising methods can improve the patch-based weighted temporal average image when the time series is short; this spatial step is optional when the time series is long enough. In the absence of a reference image, we propose a patch-based auto-covariance residual evaluation method that examines the ratio image between the noisy and denoised images and looks for possible remaining structural content. It runs automatically and does not rely on a supervised selection of homogeneous regions. It also provides a global score for the whole image. Numerous results demonstrate the effectiveness of the proposed time series denoising method and the usefulness of the residual evaluation method.
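The similarity-to-weight-to-average chain described above can be sketched in a few lines of Python. This is a simplified illustration, not the PATF code: the dissimilarity used here is the classical two-sample gamma GLR criterion, and the smoothing parameter `h`, the `looks` argument and all function names are assumptions made for the example.

```python
import math


def glr_distance(patch_a, patch_b, looks=1.0):
    """Patch dissimilarity from the two-sample gamma GLR (0 for equal patches)."""
    return sum(
        2.0 * looks * math.log((a + b) / (2.0 * math.sqrt(a * b)))
        for a, b in zip(patch_a, patch_b)
    )


def temporal_weights(patches, ref_index, h=1.0, looks=1.0):
    """Exponential weights of every date relative to the reference date."""
    ref = patches[ref_index]
    return [math.exp(-glr_distance(ref, p, looks) / h) for p in patches]


def denoise_pixel(values, patches, ref_index, h=1.0, looks=1.0):
    """Temporal weighted average of the pixel values at one location.

    values[t] is the intensity at date t, patches[t] the surrounding patch
    (a flat list of positive intensities) at the same date.
    """
    w = temporal_weights(patches, ref_index, h, looks)
    return sum(wi * vi for wi, vi in zip(w, values)) / sum(w)
```

Dates whose patch differs strongly from the reference patch (for example after a change on the ground) receive a weight close to zero, so they contribute little to the average.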

Multitemporal SAR images change detection and visualization using RABASAR and simplified GLR

Jul 15, 2023

Abstract: Understanding the state of changed areas requires that precise information be given about the changes. Thus, detecting different kinds of changes is important for land surface monitoring. SAR sensors are ideal to fulfil this task, because of their all-time and all-weather capabilities, with good accuracy of the acquisition geometry and without effects of atmospheric constituents for amplitude data. In this study, we propose a simplified generalized likelihood ratio ($S_{GLR}$) method assuming that corresponding temporal pixels have the same equivalent number of looks (ENL). Thanks to the denoised data provided by a ratio-based multitemporal SAR image denoising method (RABASAR), we successfully applied this similarity test approach to compute the change areas. A new change magnitude index method and an improved spectral clustering-based change classification method are also developed. In addition, we apply the simplified generalized likelihood ratio to detect the maximum change magnitude time, and the change starting and ending times. Then, we propose to use an adaptation of the REACTIV method to visualize the detection results vividly. The effectiveness of the proposed methods is demonstrated through the processing of simulated and SAR images, and the comparison with classical techniques. In particular, numerical experiments show that the developed method performs well in detecting farmland area changes, building area changes, harbour area changes and flooding area changes.
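As background for why a shared ENL simplifies the test, the classical two-sample likelihood ratio for gamma-distributed intensities can be worked out as follows. This is the textbook form under the assumption of a common number of looks $L$; the exact $S_{GLR}$ expression used in the paper, which is applied to denoised data, may differ.

```latex
% Gamma speckle model with L looks and reflectivity R
p(I \mid R) = \frac{L^{L} I^{L-1}}{\Gamma(L)\, R^{L}} \exp\!\left(-\frac{L I}{R}\right)

% H0: I_1 and I_2 share one reflectivity, ML estimate \hat{R} = (I_1 + I_2)/2
% H1: separate reflectivities, ML estimates \hat{R}_1 = I_1, \hat{R}_2 = I_2
\lambda
  = \frac{\sup_{R} \, p(I_1 \mid R)\, p(I_2 \mid R)}
         {\sup_{R_1, R_2} \, p(I_1 \mid R_1)\, p(I_2 \mid R_2)}
  = \left( \frac{4\, I_1 I_2}{(I_1 + I_2)^{2}} \right)^{L}

% Change criterion: large values indicate a change between the two dates
-\log \lambda = 2L \, \log \frac{I_1 + I_2}{2 \sqrt{I_1 I_2}}
```

With a common $L$, the normalisation terms cancel and the statistic depends only on the ratio of arithmetic to geometric mean of the two intensities.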

A Deep Learning Approach for SAR Tomographic Imaging of Forested Areas

Jan 20, 2023

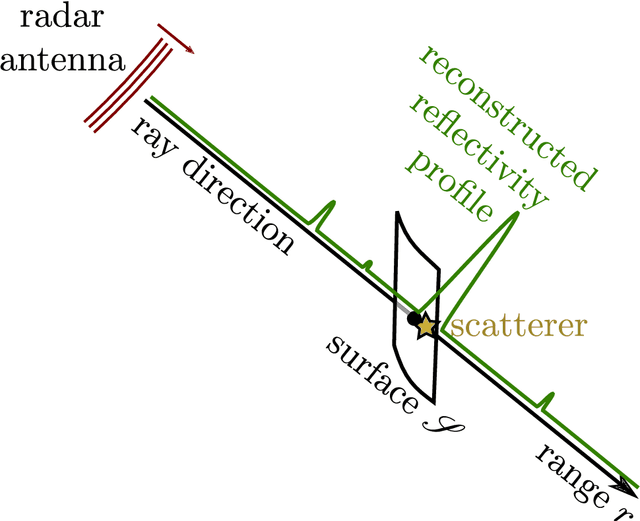

Abstract: Synthetic aperture radar tomographic imaging reconstructs the three-dimensional reflectivity of a scene from a set of coherent acquisitions performed in an interferometric configuration. In forest areas, a large number of elements backscatter the radar signal within each resolution cell. To reconstruct the vertical reflectivity profile, state-of-the-art techniques perform a regularized inversion implemented in the form of iterative minimization algorithms. We show that light-weight neural networks can be trained to perform the tomographic inversion with a single feed-forward pass, leading to fast reconstructions that could better scale to the amount of data provided by the future BIOMASS mission. We train our encoder-decoder network using simulated data and validate our technique on real L-band and P-band data.

Multi-temporal speckle reduction with self-supervised deep neural networks

Jul 25, 2022

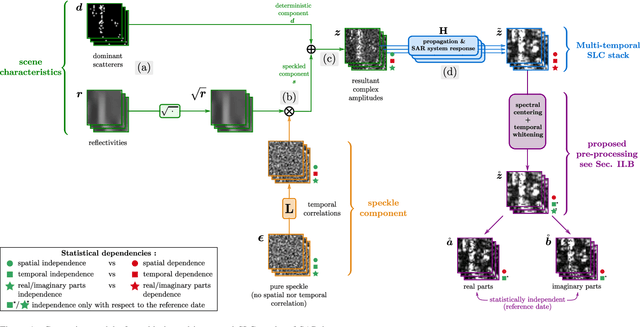

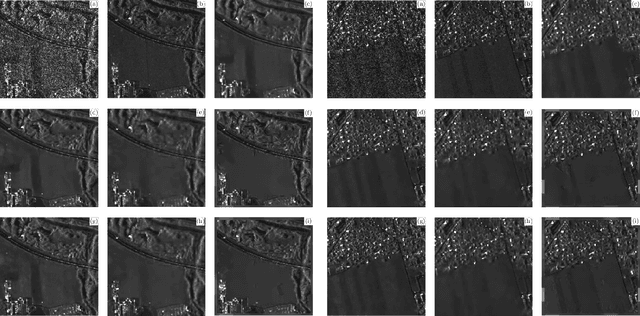

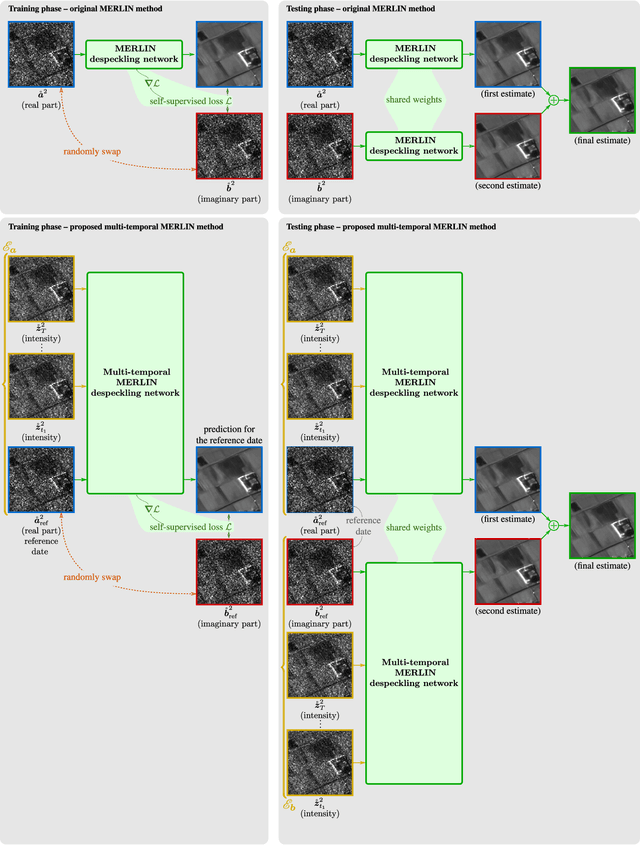

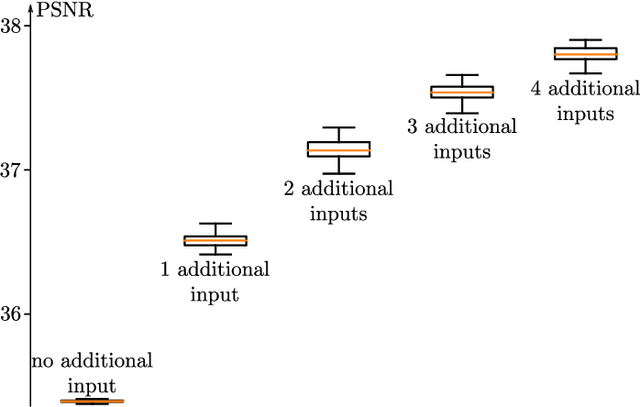

Abstract: Speckle filtering is generally a prerequisite to the analysis of synthetic aperture radar (SAR) images. Tremendous progress has been achieved in the domain of single-image despeckling. Latest techniques rely on deep neural networks to restore the various structures and textures peculiar to SAR images. The availability of time series of SAR images offers the possibility of improving speckle filtering by combining different speckle realizations over the same area. The supervised training of deep neural networks requires ground-truth speckle-free images. Such images can only be obtained indirectly through some form of averaging, by spatial or temporal integration, and are imperfect. Given the potential of very high quality restoration reachable by multi-temporal speckle filtering, the limitations of ground-truth images need to be circumvented. We extend a recent self-supervised training strategy for single-look complex SAR images, called MERLIN, to the case of multi-temporal filtering. This requires modeling the sources of statistical dependencies in the spatial and temporal dimensions as well as between the real and imaginary components of the complex amplitudes. Quantitative analysis on datasets with simulated speckle indicates a clear improvement of speckle reduction when additional SAR images are included. Our method is then applied to stacks of TerraSAR-X images and shown to outperform competing multi-temporal speckle filtering approaches. The code of the trained models is made freely available on the Gitlab of the IMAGES team of the LTCI Lab, Télécom Paris, Institut Polytechnique de Paris (https://gitlab.telecom-paris.fr/ring/multi-temporal-merlin/).

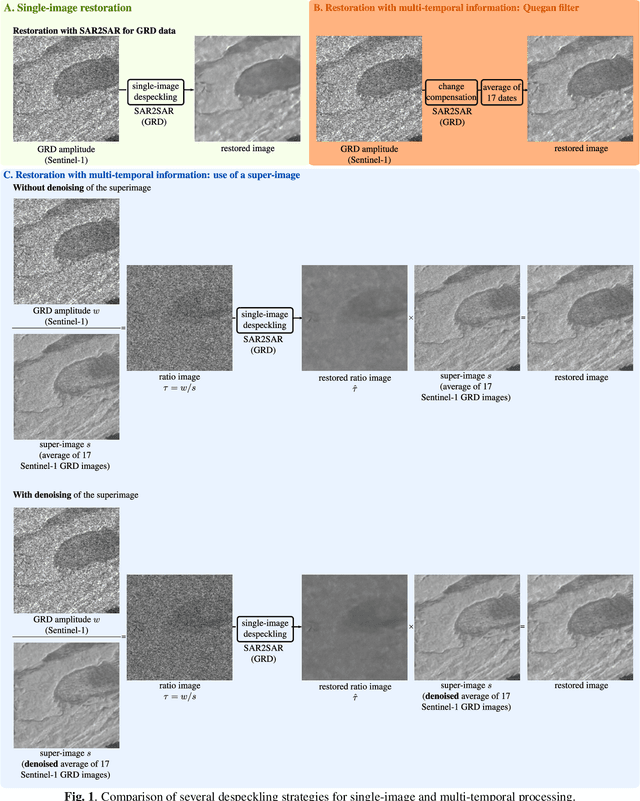

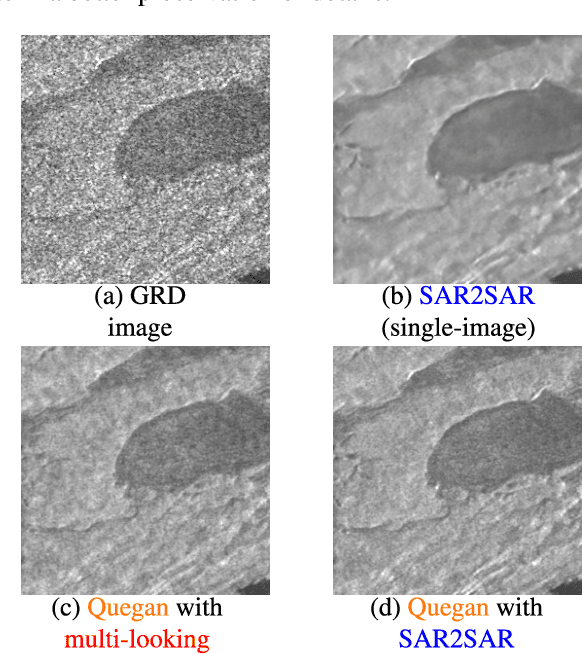

Fast strategies for multi-temporal speckle reduction of Sentinel-1 GRD images

Jul 22, 2022

Abstract: Reducing speckle and limiting the variations of the physical parameters in Synthetic Aperture Radar (SAR) images is often a key step to fully exploit the potential of such data. Nowadays, deep learning approaches produce state-of-the-art results in single-image SAR restoration. Nevertheless, huge multi-temporal stacks are now often available and could be efficiently exploited to further improve image quality. This paper explores two fast strategies employing a single-image despeckling algorithm, namely SAR2SAR, in a multi-temporal framework. The first one is based on the Quegan filter and replaces the local reflectivity pre-estimation by SAR2SAR. The second one uses SAR2SAR to suppress speckle from a ratio image encoding the multi-temporal information in the form of a "super-image", i.e. the temporal arithmetic mean of a time series. Experimental results on Sentinel-1 GRD data show that these two multi-temporal strategies provide improved filtering results while adding a limited computational cost.
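The two strategies can be sketched as follows in plain Python, with images stored as flat lists of intensities. This is a hedged illustration rather than the paper's code: `mean_despeckler` is a hypothetical stand-in for SAR2SAR (it is only meaningful on a homogeneous area), the function names are invented for the example, and the ratio strategy is shown in its simplest form, multiplying the restored ratio back by the unfiltered super-image.

```python
def mean_despeckler(image):
    """Stand-in for a single-image despeckler such as SAR2SAR (assumption).

    Replaces every pixel by the image mean, which only makes sense on a
    homogeneous area; a real despeckler would preserve structures.
    """
    m = sum(image) / len(image)
    return [m] * len(image)


def quegan(stack, despeckler):
    """Quegan-style filter: J_k = E[I_k] / M * sum_i I_i / E[I_i].

    The local reflectivity pre-estimates E[I_i] come from the despeckler.
    """
    pre = [despeckler(img) for img in stack]
    n_dates, n_pix = len(stack), len(stack[0])
    return [
        [
            pre[k][p] / n_dates
            * sum(stack[i][p] / pre[i][p] for i in range(n_dates))
            for p in range(n_pix)
        ]
        for k in range(n_dates)
    ]


def ratio_with_super_image(stack, despeckler):
    """Despeckle the ratio between each date and the temporal mean image."""
    n_dates, n_pix = len(stack), len(stack[0])
    super_image = [sum(img[p] for img in stack) / n_dates for p in range(n_pix)]
    out = []
    for img in stack:
        ratio = [img[p] / super_image[p] for p in range(n_pix)]
        clean_ratio = despeckler(ratio)
        out.append([super_image[p] * clean_ratio[p] for p in range(n_pix)])
    return out
```

Both functions call the despeckler once per date, which is consistent with the limited extra cost claimed for these strategies.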

Unrolling PALM for sparse semi-blind source separation

Dec 10, 2021

Abstract: Sparse Blind Source Separation (BSS) has become a well established tool for a wide range of applications - for instance, in astrophysics and remote sensing. Classical sparse BSS methods, such as the Proximal Alternating Linearized Minimization (PALM) algorithm, nevertheless often suffer from a difficult hyperparameter choice, which undermines their results. To bypass this pitfall, we propose in this work to build on the thriving field of algorithm unfolding/unrolling. Unrolling PALM makes it possible to leverage the data-driven knowledge stemming from realistic simulations or ground-truth data by learning both PALM hyperparameters and variables. In contrast to most existing unrolled algorithms, which assume a fixed known dictionary during the training and testing phases, this article further emphasizes the ability to deal with variable mixing matrices (a.k.a. dictionaries). The proposed Learned PALM (LPALM) algorithm thus makes it possible to perform semi-blind source separation, which is key to increasing the generalization of the learnt model in real-world applications. We illustrate the relevance of LPALM in astrophysical multispectral imaging: the algorithm not only needs up to $10^4-10^5$ times fewer iterations than PALM, but also improves the separation quality, while avoiding the cumbersome hyperparameter and initialization choice of PALM. We further show that LPALM outperforms other unrolled source separation methods in the semi-blind setting.
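For readers unfamiliar with PALM, the source update of a sparse BSS iteration can be sketched as a proximal gradient step. The sketch below assumes the common model $X \approx AS$ with an $\ell_1$ penalty on $S$; the function names, the objective and the pure-Python matrix helpers are illustrative assumptions, not the LPALM code. The step size `step` and threshold `lam` are the kind of hand-tuned hyperparameters that an unrolled network would instead learn layer by layer.

```python
def soft_threshold(x, t):
    """Proximal operator of t * |x| (promotes sparsity)."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def palm_source_update(X, A, S, step, lam):
    """One proximal gradient step on S for 0.5*||X - A S||_F^2 + lam*||S||_1."""
    AS = matmul(A, S)
    residual = [[v - x for v, x in zip(r_as, r_x)] for r_as, r_x in zip(AS, X)]
    grad = matmul(transpose(A), residual)
    return [
        [soft_threshold(s - step * g, step * lam) for s, g in zip(s_row, g_row)]
        for s_row, g_row in zip(S, grad)
    ]
```

A full PALM iteration would alternate this step with a similar gradient step on the mixing matrix `A`, followed by a projection of its columns (for example onto the unit ball); that part is omitted here.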

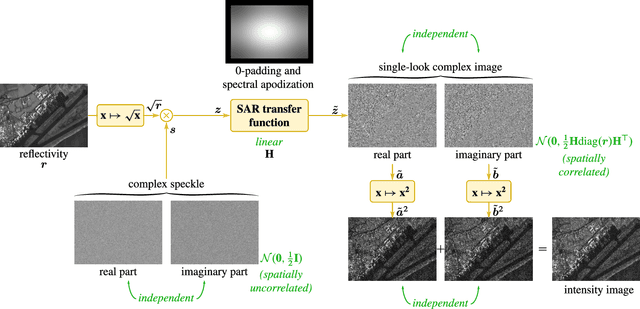

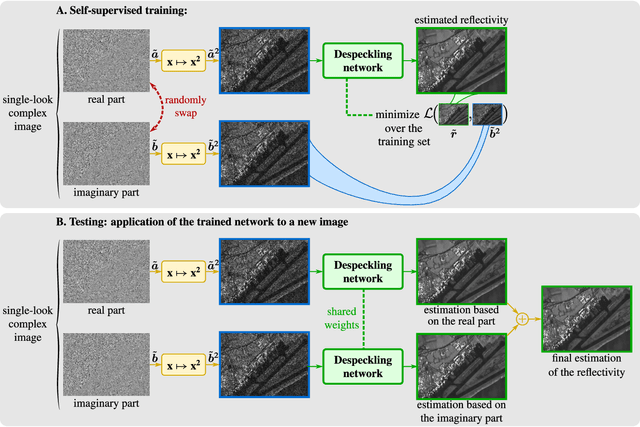

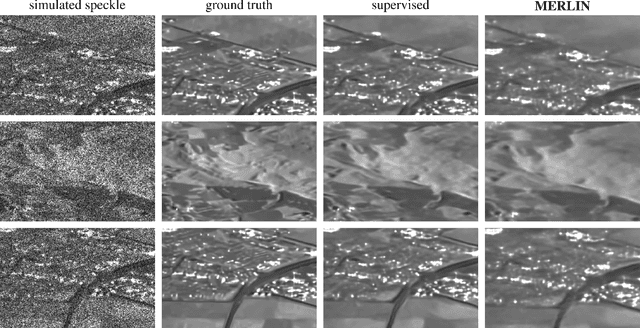

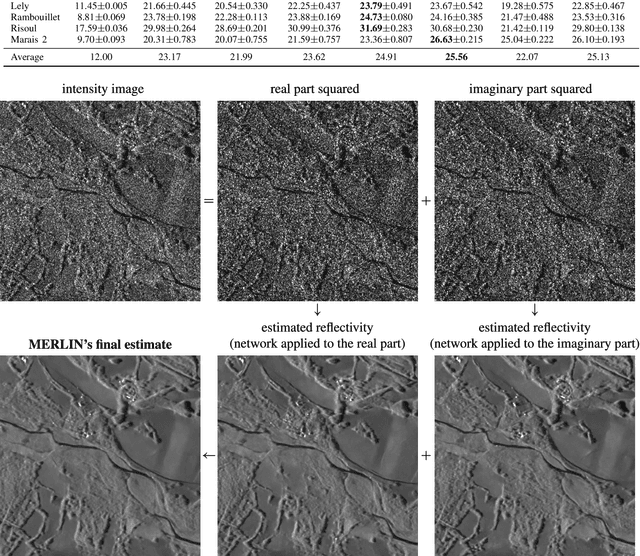

As if by magic: self-supervised training of deep despeckling networks with MERLIN

Nov 15, 2021

Abstract: Speckle fluctuations seriously limit the interpretability of synthetic aperture radar (SAR) images. Speckle reduction has thus been the subject of numerous works spanning at least four decades. Techniques based on deep neural networks have recently achieved a new level of performance in terms of SAR image restoration quality. Beyond the design of suitable network architectures or the selection of adequate loss functions, the construction of training sets is of utmost importance. So far, most approaches have considered a supervised training strategy: the networks are trained to produce outputs as close as possible to speckle-free reference images. Speckle-free images are generally not available, which requires resorting to natural or optical images or the selection of stable areas in long time series to circumvent the lack of ground truth. Self-supervision, on the other hand, avoids the use of speckle-free images. We introduce a self-supervised strategy based on the separation of the real and imaginary parts of single-look complex SAR images, called MERLIN (coMplex sElf-supeRvised despeckLINg), and show that it offers a straightforward way to train all kinds of deep despeckling networks. Networks trained with MERLIN take into account the spatial correlations due to the SAR transfer function specific to a given sensor and imaging mode. By requiring only a single image, and possibly exploiting large archives, MERLIN opens the door to hassle-free as well as large-scale training of despeckling networks. The code of the trained models is made freely available at https://gitlab.telecom-paris.fr/RING/MERLIN.
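The principle of training on one part of the complex signal and evaluating on the other can be illustrated with a small loss function. The sketch assumes the standard fully developed speckle model, in which the real and imaginary parts of a pixel are independent zero-mean Gaussians of variance $R/2$ given the reflectivity $R$; the function name and the list-based interface are hypothetical, and the network that would produce `predicted_reflectivity` from the real part is not shown.

```python
import math


def merlin_style_loss(predicted_reflectivity, imag_part):
    """Negative log-likelihood of the imaginary part given predicted reflectivity.

    For b ~ N(0, R/2): -log p(b | R) = 0.5 * log(R) + b**2 / R + const.
    The prediction is assumed to have been computed from the real part only,
    so the imaginary part acts as an independent noisy target.
    """
    terms = [
        0.5 * math.log(r) + b * b / r
        for r, b in zip(predicted_reflectivity, imag_part)
    ]
    return sum(terms) / len(terms)
```

For a single pixel the loss is minimised at $R = 2b^2$, and since $E[b^2] = R/2$ the minimiser in expectation is the true reflectivity, which is why no speckle-free reference is needed.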

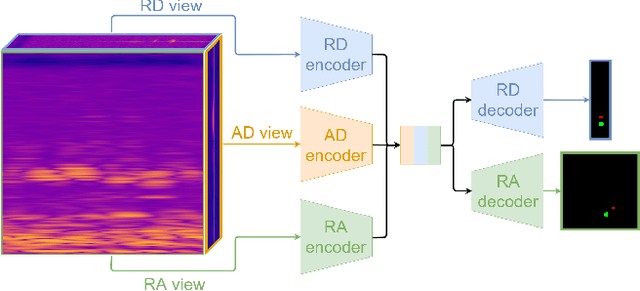

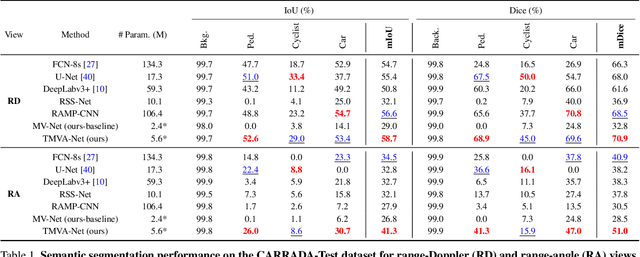

Multi-View Radar Semantic Segmentation

Mar 30, 2021

Abstract: Understanding the scene around the ego-vehicle is key to assisted and autonomous driving. Nowadays, this is mostly conducted using cameras and laser scanners, despite their reduced performance in adverse weather conditions. Automotive radars are low-cost active sensors that measure properties of surrounding objects, including their relative speed, and have the key advantage of not being impacted by rain, snow or fog. However, they are seldom used for scene understanding due to the size and complexity of radar raw data and the lack of annotated datasets. Fortunately, recent open-sourced datasets have opened up research on classification, object detection and semantic segmentation with raw radar signals using end-to-end trainable models. In this work, we propose several novel architectures, and their associated losses, which analyse multiple "views" of the range-angle-Doppler radar tensor to segment it semantically. Experiments conducted on the recent CARRADA dataset demonstrate that our best model outperforms alternative models, derived either from the semantic segmentation of natural images or from radar scene understanding, while requiring significantly fewer parameters. Both our code and trained models will be released.
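One simple way to picture the "views" of a range-angle-Doppler tensor is to collapse it along each axis in turn. The sketch below is an assumption-laden illustration: it uses plain averaging over the collapsed axis and nested Python lists indexed as `rad[range][angle][doppler]`, neither of which is stated in the abstract, and the function name is hypothetical.

```python
def radar_views(rad):
    """Return range-angle, range-Doppler and angle-Doppler views.

    rad is a nested list indexed as rad[r][a][d]. Each view averages the
    tensor over the remaining axis (averaging is an assumption here).
    """
    n_r, n_a, n_d = len(rad), len(rad[0]), len(rad[0][0])
    range_angle = [
        [sum(rad[r][a]) / n_d for a in range(n_a)] for r in range(n_r)
    ]
    range_doppler = [
        [sum(rad[r][a][d] for a in range(n_a)) / n_a for d in range(n_d)]
        for r in range(n_r)
    ]
    angle_doppler = [
        [sum(rad[r][a][d] for r in range(n_r)) / n_r for d in range(n_d)]
        for a in range(n_a)
    ]
    return range_angle, range_doppler, angle_doppler
```

Each view is a 2-D map that a conventional image segmentation network can consume, which is much lighter than processing the full 3-D tensor.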

Urban Surface Reconstruction in SAR Tomography by Graph-Cuts

Mar 12, 2021

Abstract: SAR (Synthetic Aperture Radar) tomography reconstructs 3-D volumes from stacks of SAR images. High-resolution satellites such as TerraSAR-X provide images that can be combined to produce 3-D models. In urban areas, sparsity priors are generally enforced during the tomographic inversion process in order to retrieve the location of scatterers seen within a given radar resolution cell. However, such priors often miss parts of the urban surfaces. Those missing parts are typically regions of flat areas such as ground or rooftops. This paper introduces a surface segmentation algorithm based on the computation of the optimal cut in a flow network. This segmentation process can be included within the 3-D reconstruction framework in order to improve the recovery of urban surfaces. Illustrations on a TerraSAR-X tomographic dataset demonstrate the potential of the approach to produce a 3-D model of urban surfaces such as ground, façades and rooftops.
