Filippo Arcadu

From Sparse Signal to Smooth Motion: Real-Time Motion Generation with Rolling Prediction Models

Apr 07, 2025

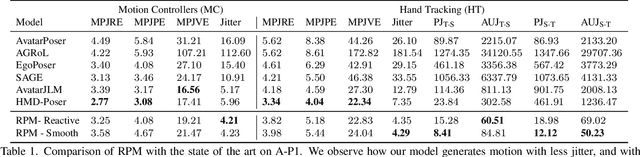

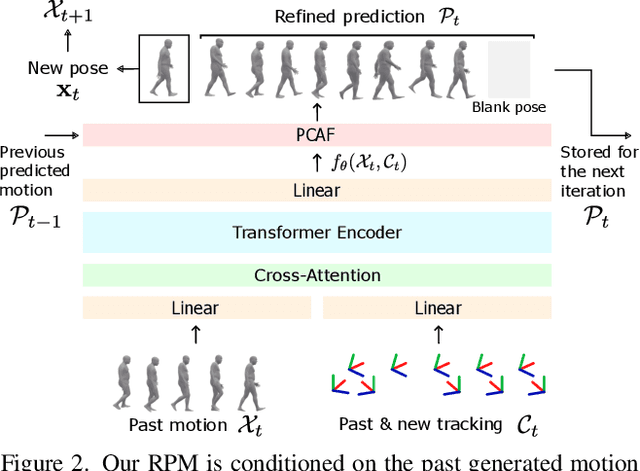

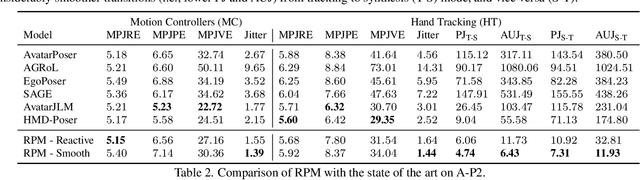

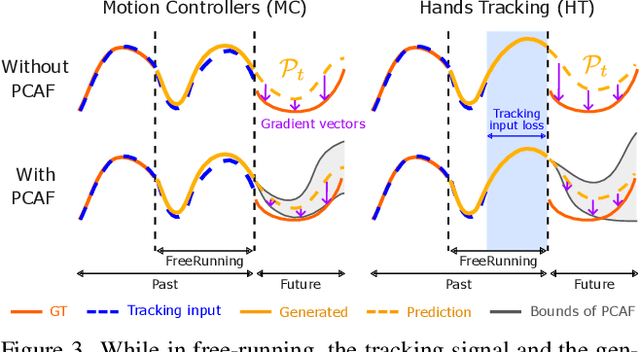

Abstract: In extended reality (XR), generating the full-body motion of users is important to understand their actions, drive their virtual avatars for social interaction, and convey a realistic sense of presence. While prior works focused on spatially sparse and always-on input signals from motion controllers, many XR applications opt for vision-based hand tracking for reduced user friction and better immersion. Compared to controllers, hand tracking signals are less accurate and can even be missing for an extended period of time. To handle such unreliable inputs, we present the Rolling Prediction Model (RPM), an online and real-time approach that generates smooth full-body motion from temporally and spatially sparse input signals. Our model generates 1) accurate motion that matches the inputs (i.e., tracking mode) and 2) plausible motion when inputs are missing (i.e., synthesis mode). More importantly, RPM generates seamless transitions from tracking to synthesis, and vice versa. To demonstrate the practical importance of handling noisy and missing inputs, we present GORP, the first dataset of realistic sparse inputs from a commercial virtual reality (VR) headset with paired high-quality body motion ground truth. GORP provides >14 hours of VR gameplay data from 28 people using motion controllers (spatially sparse) and hand tracking (spatially and temporally sparse). We benchmark RPM against the state of the art on both synthetic data and GORP to highlight how we can bridge the gap for real-world applications with a realistic dataset and by handling unreliable input signals. Our code, pretrained models, and GORP dataset are available on the project webpage.

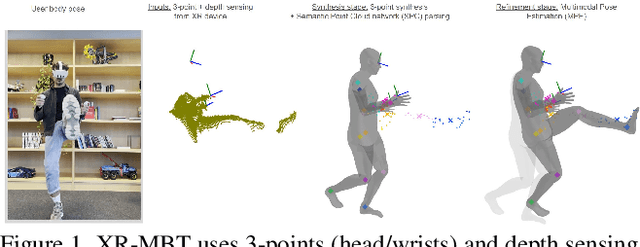

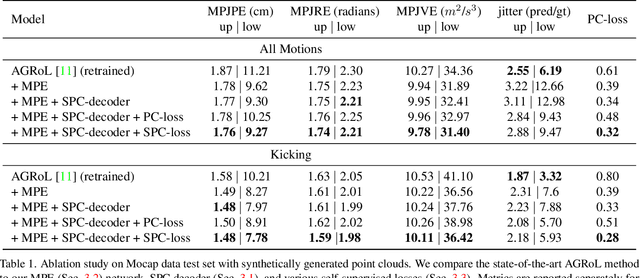

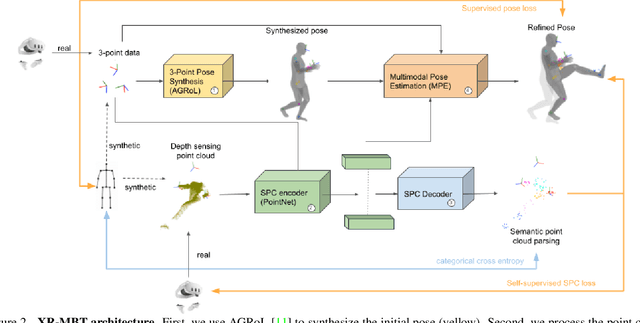

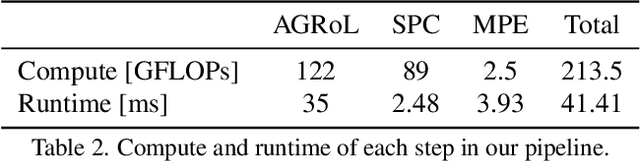

XR-MBT: Multi-modal Full Body Tracking for XR through Self-Supervision with Learned Depth Point Cloud Registration

Nov 27, 2024

Abstract: Tracking the full-body motions of users in XR (AR/VR) devices is a fundamental challenge to bring a sense of authentic social presence. Due to the absence of dedicated leg sensors, currently available body tracking methods adopt a synthesis approach to generate plausible motions given a 3-point signal from the head and controller tracking. In order to enable mixed reality features, modern XR devices are capable of estimating depth information of the headset surroundings using available sensors combined with dedicated machine learning models. Such egocentric depth sensing cannot drive the body directly, as it is not registered and is incomplete due to limited field-of-view and body self-occlusions. For the first time, we propose to leverage the available depth sensing signal combined with self-supervision to learn a multi-modal pose estimation model capable of tracking full-body motions in real time on XR devices. We demonstrate how current 3-point motion synthesis models can be extended to point cloud modalities using a semantic point cloud encoder network combined with a residual network for multi-modal pose estimation. These modules are trained jointly in a self-supervised way, leveraging a combination of real unregistered point clouds and simulated data obtained from motion capture. We compare our approach against several state-of-the-art systems for XR body tracking and show that our method accurately tracks a diverse range of body motions. XR-MBT tracks legs in XR for the first time, whereas traditional synthesis approaches based on partial body tracking are blind to leg motion.

Deep learning automates bidimensional and volumetric tumor burden measurement from MRI in pre- and post-operative glioblastoma patients

Sep 03, 2022

Abstract: Tumor burden assessment by magnetic resonance imaging (MRI) is central to the evaluation of treatment response for glioblastoma. This assessment is complex to perform and associated with high variability due to the high heterogeneity and complexity of the disease. In this work, we tackle this issue and propose a deep learning pipeline for the fully automated end-to-end analysis of glioblastoma patients. Our approach first identifies tumor sub-regions, including the enhancing tumor, peritumoral edema and surgical cavity, and then calculates the volumetric and bidimensional measurements that follow the current Response Assessment in Neuro-Oncology (RANO) criteria. We also introduce a rigorous manual annotation process that the human experts followed to delineate the tumor sub-regions and to capture their segmentation confidences, which are later used while training the deep learning models. Our extensive experimental study covered 760 pre-operative and 504 post-operative adult patients with glioma, obtained from a public database (acquired at 19 sites in years 2021-2020) and from a clinical treatment trial (47 and 69 sites for pre-/post-operative patients, 2009-2011). Backed by thorough quantitative, qualitative and statistical analysis, the results show that our pipeline performs accurate segmentation of pre- and post-operative MRIs in a fraction of the manual delineation time (up to 20 times faster than humans). The bidimensional and volumetric measurements were in strong agreement with expert radiologists, and we showed that RANO measurements are not always sufficient to quantify tumor burden.

On the crucial impact of the coupling projector-backprojector in iterative tomographic reconstruction

Dec 16, 2016

Abstract: The performance of an iterative reconstruction algorithm for X-ray tomography is strongly determined by the features of the forward projector and backprojector used. For this reason, a large number of studies have focused on the design of projectors with increasingly higher accuracy and speed. To what extent the accuracy of an iterative algorithm is affected by the mathematical affinity and the similarity between the actual implementations of the forward projection and backprojection, referred to here as "coupling projector-backprojector", has been an overlooked aspect so far. The experimental study presented here shows that the reconstruction quality and the convergence of an iterative algorithm greatly rely on a good match between the implementations of the tomographic operators. In comparison, other aspects like the accuracy of the standalone operators, the usage of physical constraints or the choice of stopping criteria may even play a less relevant role.
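
One common way to quantify how well a forward projector and a backprojector are matched is the dot-product (adjoint) test, which checks whether ⟨Ax, y⟩ ≈ ⟨x, By⟩ for random x and y. The sketch below is illustrative only and is not taken from the paper: the toy 3-ray, 4-pixel system matrix, the function names, and the 0.9 weighting of the "unmatched" backprojector are all assumptions.

```python
import random

# Hypothetical toy system matrix: 3 rays (rows) crossing 4 pixels (columns).
A = [
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [1.0, 0.0, 1.0, 0.0],
]

def forward(x):
    """Forward projection: image (4 pixels) -> sinogram (3 rays)."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def matched_back(y):
    """Backprojection implemented as the exact transpose of A."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]

def unmatched_back(y):
    """Backprojection with a different weighting (assumed factor 0.9)."""
    return [0.9 * v for v in matched_back(y)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def adjoint_mismatch(fwd, back, n_pixels, n_rays, trials=5, seed=0):
    """Worst relative gap between <fwd(x), y> and <x, back(y)> over random trials."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x = [rng.random() for _ in range(n_pixels)]
        y = [rng.random() for _ in range(n_rays)]
        lhs = dot(fwd(x), y)
        rhs = dot(x, back(y))
        scale = max(abs(lhs), abs(rhs), 1e-12)
        worst = max(worst, abs(lhs - rhs) / scale)
    return worst

matched_gap = adjoint_mismatch(forward, matched_back, 4, 3)
unmatched_gap = adjoint_mismatch(forward, unmatched_back, 4, 3)
```

A gap near machine precision indicates a matched pair, while a clearly nonzero gap indicates that the backprojector is not the adjoint of the forward projector. This test measures only the mathematical matching, not the standalone accuracy of either operator.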

Improving analytical tomographic reconstructions through consistency conditions

Sep 21, 2016

Abstract: This work introduces and characterizes a fast parameterless filter based on the Helgason-Ludwig consistency conditions, used to improve the accuracy of analytical reconstructions of undersampled tomographic datasets. The filter, acting in the Radon domain, extrapolates intermediate projections between the existing ones. The resulting sinogram, with twice as many views, is then reconstructed by a standard analytical method. Experiments with simulated data show that the peak signal-to-noise ratio of the results computed by filtered backprojection improves by up to 5-6 dB if the filter is applied prior to reconstruction.
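
For reference, the peak signal-to-noise ratio is conventionally defined as 10·log10(peak² / MSE). The following minimal sketch assumes the peak is the maximum value of the reference image; the abstract does not state which peak convention the paper uses, and the function name and tiny test images are hypothetical.

```python
import math

def psnr(reference, reconstruction, peak=None):
    """PSNR in dB between two 2D images given as lists of rows.

    `peak` defaults to the maximum of the reference image (an assumption;
    other conventions use the full dynamic range instead).
    """
    flat_ref = [v for row in reference for v in row]
    flat_rec = [v for row in reconstruction for v in row]
    if len(flat_ref) != len(flat_rec):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(flat_ref, flat_rec)) / len(flat_ref)
    if mse == 0:
        return math.inf  # identical images
    if peak is None:
        peak = max(flat_ref)
    return 10.0 * math.log10(peak ** 2 / mse)

# Hypothetical 2x2 phantom and a slightly perturbed reconstruction.
phantom = [[0.0, 1.0], [1.0, 0.0]]
recon = [[0.1, 0.9], [1.0, 0.0]]
value_db = psnr(phantom, recon)
```

Because the scale is logarithmic, a gain of 6 dB corresponds to roughly a fourfold reduction in mean squared error at a fixed peak value.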
