Feng Lyu

Sherman

QoE-Aware Service Provision for Mobile AR Rendering: An Agent-Driven Approach

Aug 12, 2025

Abstract:Mobile augmented reality (MAR) is envisioned as a key immersive application in 6G, enabling virtual content rendering aligned with the physical environment through device pose estimation. In this paper, we propose a novel agent-driven communication service provisioning approach for edge-assisted MAR, aiming to reduce communication overhead between MAR devices and the edge server while ensuring the quality of experience (QoE). First, to address the inaccessibility of MAR application-specific information to the network controller, we establish a digital agent powered by large language models (LLMs) on behalf of the MAR service provider, bridging the data and function gap between the MAR service and network domains. Second, to cope with the user-dependent and dynamic nature of data traffic patterns for individual devices, we develop a user-level QoE modeling method that captures the relationship between communication resource demands and perceived user QoE, enabling personalized, agent-driven communication resource management. Trace-driven simulation results demonstrate that the proposed approach outperforms conventional LLM-based QoE-aware service provisioning methods in both user-level QoE modeling accuracy and communication resource efficiency.

Accelerating LLM Inference Throughput via Asynchronous KV Cache Prefetching

Apr 08, 2025

Abstract:Large Language Models (LLMs) exhibit pronounced memory-bound characteristics during inference due to High Bandwidth Memory (HBM) bandwidth constraints. In this paper, we propose an L2 Cache-oriented asynchronous KV Cache prefetching method to break through the memory bandwidth bottleneck in LLM inference through computation-load overlap. By strategically scheduling idle memory bandwidth during active computation windows, our method proactively prefetches required KV Cache into GPU L2 cache, enabling high-speed L2 cache hits for subsequent accesses and effectively hiding HBM access latency within computational cycles. Extensive experiments on NVIDIA H20 GPUs demonstrate that the proposed method achieves 2.15x improvement in attention kernel efficiency and up to 1.97x end-to-end throughput enhancement, surpassing state-of-the-art baseline FlashAttention-3. Notably, our solution maintains orthogonality to existing optimization techniques and can be integrated with current inference frameworks, providing a scalable latency-hiding solution for next-generation LLM inference engines.

Resource-Efficient Generative AI Model Deployment in Mobile Edge Networks

Sep 09, 2024

Abstract:The surging development of Artificial Intelligence-Generated Content (AIGC) marks a transformative era in content creation and production. Edge servers promise attractive benefits, e.g., reduced service delay and backhaul traffic load, for hosting AIGC services compared to cloud-based solutions. However, the scarcity of available resources on the edge poses significant challenges in deploying generative AI models. In this paper, by characterizing the resource and delay demands of typical generative AI models, we find that the consumption of storage and GPU memory, as well as the model switching delay represented by I/O delay during the preloading phase, are significant and vary across models. These multidimensional coupling factors render it difficult to make efficient edge model deployment decisions. Hence, we present a collaborative edge-cloud framework aiming to properly manage generative AI model deployment on the edge. Specifically, we formulate the edge model deployment problem, considering the heterogeneous features of models, as an optimization problem, and propose a model-level decision selection algorithm to solve it. It enables pooled resource sharing and optimizes the trade-off between resource consumption and delay in edge generative AI model deployment. Simulation results validate the efficacy of the proposed algorithm compared with baselines, demonstrating its potential to reduce overall costs by providing feature-aware model deployment decisions.

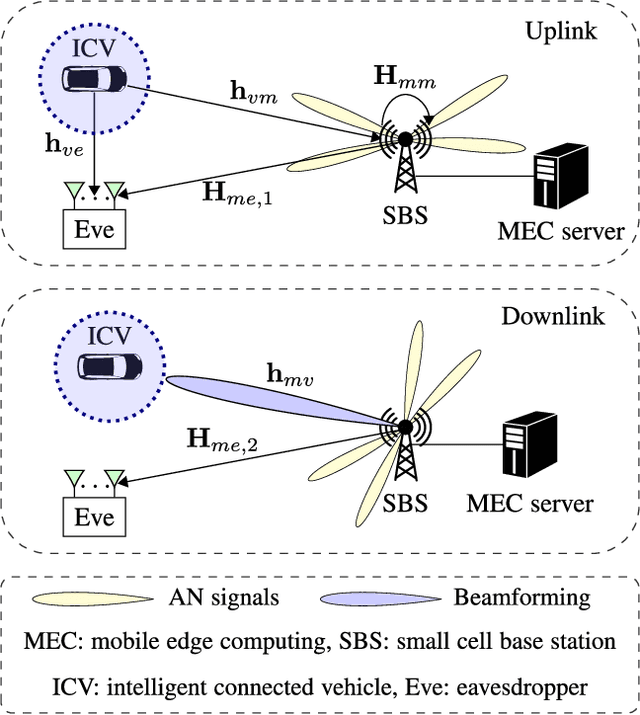

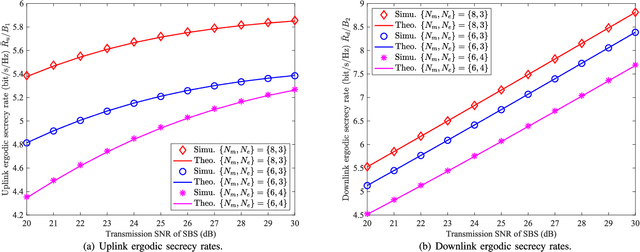

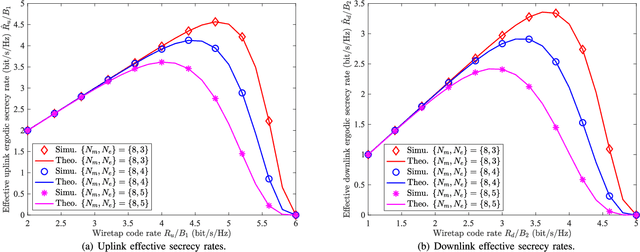

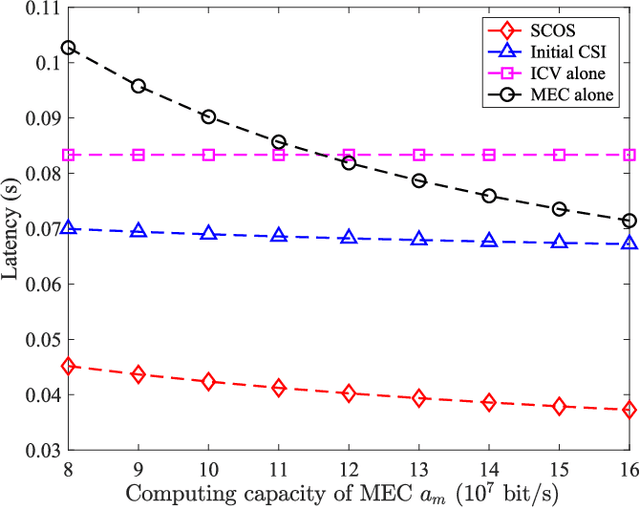

Physical Layer Security Assisted Computation Offloading in Intelligently Connected Vehicle Networks

Mar 25, 2022

Abstract:In this paper, we propose a secure computation offloading scheme (SCOS) in intelligently connected vehicle (ICV) networks, aiming to minimize the overall computing latency by offloading part of the computational tasks to nearby servers in small cell base stations (SBSs), while securing the information delivered during the offloading and feedback phases via physical layer security. Existing computation offloading schemes usually neglect the time-varying characteristics of channels and their corresponding secrecy rates, resulting in an inappropriate task partition ratio and a large secrecy outage probability. To address these issues, we utilize an ergodic secrecy rate to determine how many tasks are offloaded to the edge, where the ergodic secrecy rate represents the average secrecy rate over all realizations in a time-varying wireless channel. Adaptive wiretap code rates are proposed with a secrecy outage constraint to match time-varying wireless channels. In addition, the proposed secure beamforming and artificial noise (AN) schemes can improve the ergodic secrecy rates of uplink and downlink channels even without eavesdropper channel state information (CSI). Numerical results demonstrate that the proposed schemes have a shorter system delay than the strategies neglecting time-varying characteristics.
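
As a rough illustration of what "the average secrecy rate over all realizations" means, the sketch below estimates an ergodic secrecy rate by Monte Carlo sampling. It is not taken from the paper: the Rayleigh-fading assumption (exponentially distributed channel power gains), the textbook form of the instantaneous secrecy rate, and the names `instantaneous_secrecy_rate` and `ergodic_secrecy_rate` are all assumptions made for this example, and the paper's exact definition may differ.

```python
import math
import random


def instantaneous_secrecy_rate(snr_main, snr_eve):
    """Secrecy rate (bits/s/Hz) for one channel realization.

    Assumed textbook form: the legitimate link's capacity minus the
    eavesdropper's capacity, floored at zero.
    """
    return max(0.0, math.log2(1.0 + snr_main) - math.log2(1.0 + snr_eve))


def ergodic_secrecy_rate(avg_snr_main, avg_snr_eve, n_samples=100_000, seed=0):
    """Monte Carlo average of the instantaneous secrecy rate.

    Assumption: both links see independent Rayleigh fading, so each
    channel power gain is exponentially distributed with unit mean.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        gain_main = rng.expovariate(1.0)  # hypothetical main-link gain
        gain_eve = rng.expovariate(1.0)   # hypothetical eavesdropper gain
        total += instantaneous_secrecy_rate(
            avg_snr_main * gain_main, avg_snr_eve * gain_eve
        )
    return total / n_samples
```

Under these assumptions, a stronger eavesdropper link lowers the estimate, which is the quantity a task partition rule would consume in place of a single instantaneous rate.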

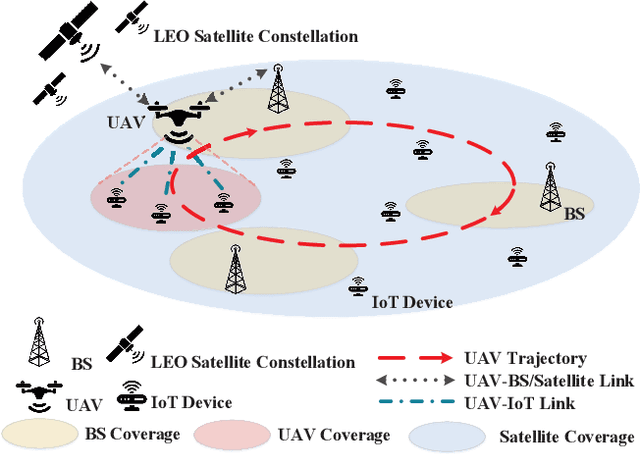

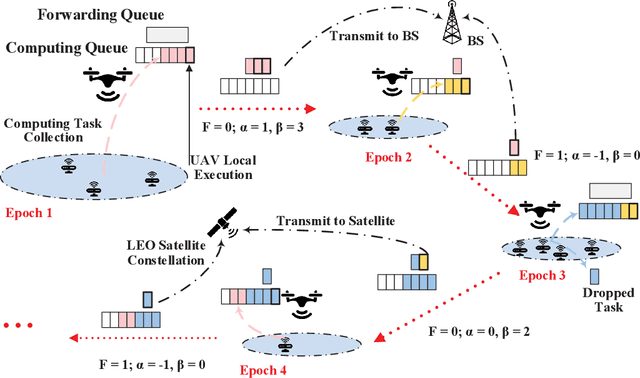

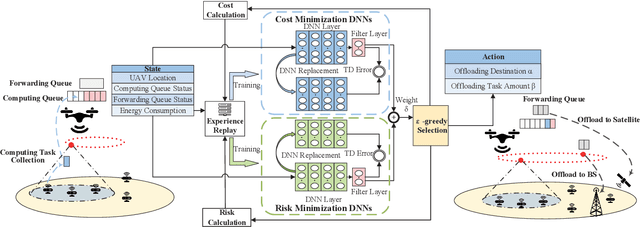

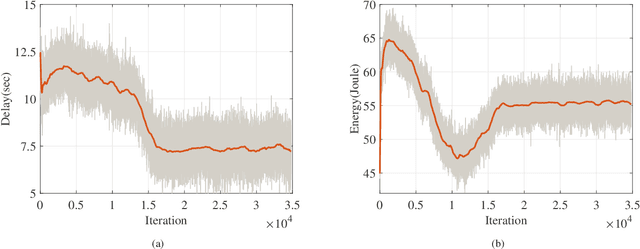

Deep Reinforcement Learning for Delay-Oriented IoT Task Scheduling in Space-Air-Ground Integrated Network

Oct 04, 2020

Abstract:In this paper, we investigate a computing task scheduling problem in a space-air-ground integrated network (SAGIN) for delay-oriented Internet of Things (IoT) services. In the considered scenario, an unmanned aerial vehicle (UAV) collects computing tasks from IoT devices and then makes online offloading decisions, in which the tasks can be processed at the UAV or offloaded to the nearby base station or the remote satellite. Our objective is to design a task scheduling policy that minimizes the offloading and computing delay of all tasks given the UAV energy capacity constraint. To this end, we first formulate the online scheduling problem as an energy-constrained Markov decision process (MDP). Then, considering the task arrival dynamics, we develop a novel deep risk-sensitive reinforcement learning algorithm. Specifically, the algorithm evaluates the risk, which measures the energy consumption that exceeds the constraint, for each state and searches for the optimal parameter weighting the minimization of delay and risk while learning the optimal policy. Extensive simulation results demonstrate that the proposed algorithm can reduce the task processing delay by up to 30% compared to probabilistic configuration methods while satisfying the UAV energy capacity constraint.
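
The trade-off between delay and risk described in the abstract can be pictured with a small sketch. This is not the paper's algorithm, which learns a policy with deep reinforcement learning; it only assumes that risk is the energy used beyond the budget and that a single weight combines delay and risk into one cost. The names `energy_risk`, `weighted_cost`, `pick_schedule`, and the candidate dictionaries are hypothetical.

```python
def energy_risk(energy_used, energy_budget):
    """Assumed risk measure: energy consumed beyond the budget (0 if within it)."""
    return max(0.0, energy_used - energy_budget)


def weighted_cost(total_delay, energy_used, energy_budget, risk_weight):
    """Delay plus weighted risk; risk_weight plays the role of the tuned parameter."""
    return total_delay + risk_weight * energy_risk(energy_used, energy_budget)


def pick_schedule(candidates, energy_budget, risk_weight):
    """Choose the candidate schedule with the lowest weighted cost.

    Each candidate is a dict with hypothetical keys 'delay' and 'energy'.
    """
    return min(
        candidates,
        key=lambda c: weighted_cost(
            c["delay"], c["energy"], energy_budget, risk_weight
        ),
    )


# Usage: a fast but energy-hungry schedule versus a slower, frugal one.
schedules = [
    {"name": "fast", "delay": 10.0, "energy": 12.0},
    {"name": "frugal", "delay": 12.0, "energy": 8.0},
]
low_weight_choice = pick_schedule(schedules, energy_budget=10.0, risk_weight=0.0)
high_weight_choice = pick_schedule(schedules, energy_budget=10.0, risk_weight=2.0)
```

With a zero weight the faster schedule wins despite exceeding the budget; raising the weight shifts the choice to the schedule that respects it, which is why the weight itself has to be searched.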
