Feilong Wang

University of Washington, WA, United States

Robustness of LLM-enabled vehicle trajectory prediction under data security threats

Nov 14, 2025

Abstract: The integration of large language models (LLMs) into automated driving systems has opened new possibilities for reasoning and decision-making by transforming complex driving contexts into language-understandable representations. Recent studies demonstrate that fine-tuned LLMs can accurately predict vehicle trajectories and lane-change intentions by gathering and transforming data from surrounding vehicles. However, the robustness of such LLM-based prediction models for safety-critical driving systems remains unexplored, despite the increasing concerns about the trustworthiness of LLMs. This study addresses this gap by conducting a systematic vulnerability analysis of LLM-enabled vehicle trajectory prediction. We propose a one-feature differential evolution attack that perturbs a single kinematic feature of surrounding vehicles within the LLM's input prompts under a black-box setting. Experiments on the highD dataset reveal that even minor, physically plausible perturbations can significantly disrupt model outputs, underscoring the susceptibility of LLM-based predictors to adversarial manipulation. Further analyses reveal a trade-off between accuracy and robustness, examine the failure mechanism, and explore potential mitigation solutions. The findings provide the very first insights into adversarial vulnerabilities of LLM-driven automated vehicle models in the context of vehicular interactions and highlight the need for robustness-oriented design in future LLM-based intelligent transportation systems.
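The attack idea in this abstract, a black-box search over a bounded perturbation of one input feature, can be illustrated with a minimal sketch. Everything named here is an assumption for illustration: `toy_predictor` is a hypothetical numeric stand-in rather than an LLM, the feature layout and bound are invented, and the paper's actual objective and prompt encoding are not given in the abstract.

```python
import random


def toy_predictor(features):
    """Hypothetical stand-in for the black-box model (not an LLM)."""
    speed, gap, rel_speed = features
    return 0.5 * speed - 0.2 * gap + 1.5 * rel_speed


def one_feature_de_attack(predict, features, index, bound,
                          pop_size=20, generations=30, scale=0.5, seed=0):
    """Search for a bounded perturbation of one feature that maximizes
    the deviation of the prediction from its unperturbed value."""
    rng = random.Random(seed)
    baseline = predict(features)

    def deviation(delta):
        perturbed = list(features)
        perturbed[index] += delta
        return abs(predict(perturbed) - baseline)

    # Each population member is a candidate perturbation of the one feature.
    population = [rng.uniform(-bound, bound) for _ in range(pop_size)]
    scores = [deviation(d) for d in population]

    for _ in range(generations):
        for i in range(pop_size):
            others = [j for j in range(pop_size) if j != i]
            a, b, c = rng.sample(others, 3)
            # DE/rand/1 mutation. With a single decision variable, crossover
            # is degenerate, so the mutant is used directly as the trial.
            trial = population[a] + scale * (population[b] - population[c])
            trial = max(-bound, min(bound, trial))  # stay within the allowed bound
            trial_score = deviation(trial)
            if trial_score >= scores[i]:  # greedy selection
                population[i], scores[i] = trial, trial_score

    best = max(range(pop_size), key=lambda i: scores[i])
    return population[best], scores[best]


delta, shift = one_feature_de_attack(
    toy_predictor, [30.0, 25.0, -1.0], index=2, bound=0.5)
print(f"perturbation={delta:+.3f}, prediction shift={shift:.3f}")
```

The search only queries `predict`, which is what makes the setting black-box; the bound plays the role of the physical-plausibility constraint mentioned in the abstract.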

Set-Valued Sensitivity Analysis of Deep Neural Networks

Dec 15, 2024

Abstract: This paper proposes a sensitivity analysis framework based on set-valued mapping for deep neural networks (DNNs) to understand and compute how the solutions (model weights) of a DNN respond to perturbations in the training data. As a DNN may not have a unique solution (minimum), and the algorithm used to train a DNN may reach different solutions under minor perturbations to the input data, we focus on the sensitivity of the solution set of a DNN instead of studying a single solution. In particular, we are interested in the expansion and contraction of the set in response to data perturbations. If the change in the solution set can be bounded by the extent of the data perturbation, the model is said to exhibit the Lipschitz-like property. This "set-to-set" analysis approach provides a deeper understanding of the robustness and reliability of DNNs during training. Our framework incorporates both isolated and non-isolated minima and, critically, does not require the assumption that the Hessian of the loss function is non-singular. By developing set-level metrics such as distance between sets, convergence of sets, derivatives of set-valued mappings, and stability across the solution set, we prove that the solution set of the fully connected neural network holds Lipschitz-like properties. For general neural networks (e.g., ResNet), we introduce a graphical-derivative-based method to estimate the new solution set following data perturbation without retraining.
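For readers unfamiliar with the term, the Lipschitz-like (Aubin) property of a set-valued mapping is usually stated as follows in variational analysis. The notation is an assumption for illustration ($S$ maps a data perturbation $p$ to the solution set, $\bar{p}$ and $\bar{w}$ are a reference perturbation and solution, $\mathbb{B}$ is the closed unit ball), and the paper's exact formulation may differ.

$$
S(p') \cap V \;\subset\; S(p) + \kappa \,\lVert p' - p \rVert \,\mathbb{B}
\qquad \text{for all } p, p' \in U,
$$

where $U$ is a neighborhood of $\bar{p}$, $V$ is a neighborhood of $\bar{w} \in S(\bar{p})$, and $\kappa \ge 0$ is a constant. In words: near the reference point, the solution set cannot move farther than a fixed multiple of the change in the data.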

Infrastructure-enabled GPS Spoofing Detection and Correction

Feb 11, 2022

Abstract: Accurate and robust localization is crucial for supporting high-level driving automation and safety. Modern localization solutions rely on various sensors, among which GPS has been and will continue to be essential. However, GPS can be vulnerable to malicious attacks, and GPS spoofing has been identified as a high threat. GPS spoofing injects false information into true GPS measurements, aiming to deviate a vehicle from its true trajectory and endangering the safety of road users. With various types of vehicle-based sensors emerging, recent studies propose to detect GPS spoofing by fusing data from multiple sensors and identifying inconsistencies among them. Yet, these methods often require sophisticated algorithms and cannot handle stealthy or coordinated attacks targeting multiple sensors. With infrastructure becoming increasingly important in supporting emerging vehicle technologies and systems (e.g., automated vehicles), this study explores the potential of applying infrastructure data in defending against GPS spoofing. We propose an infrastructure-enabled method that deploys roadside infrastructure as an independent, secured data source. A real-time detector, based on the Isolation Forest, is constructed to detect GPS spoofing. Once spoofing is detected, GPS measurements are isolated, and the potentially compromised location estimator is corrected using the infrastructure data. The proposed method relies less on vehicular onboard data than existing solutions. Enabled by the secure infrastructure data, we can design a simpler yet more effective solution against GPS spoofing, compared with state-of-the-art defense methods. We test the proposed method using both simulation data and real-world GPS data, and show its effectiveness in defending against various types of GPS spoofing attacks, including a stealthy attack designed to defeat production-grade autonomous driving systems.

Color Recognition for Rubik's Cube Robot

Jan 11, 2019

Abstract: In this paper, we propose three methods for color recognition on a Rubik's cube: one offline method and two online methods. Scatter balance & extreme learning machine (SB-ELM), an offline method, is proposed to illustrate the efficiency of training-based methods. We also introduce the concept of color drifting, which renders offline methods ineffective under continuously changing conditions. By contrast, dynamic weight label propagation is proposed to label block colors using the known colors of the center blocks of the Rubik's cube. Furthermore, weak label hierarchic propagation, another online method, is proposed for the case where all color information is unknown and only the weak labels of the center blocks are used for color recognition. Finally, we design a Rubik's cube robot and construct a dataset to illustrate the efficiency and effectiveness of our online methods and to show the ineffectiveness of the offline method under color drifting in our dataset.

Rough extreme learning machine: a new classification method based on uncertainty measure

Mar 10, 2018

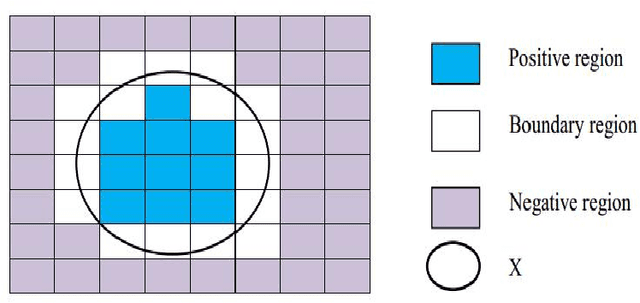

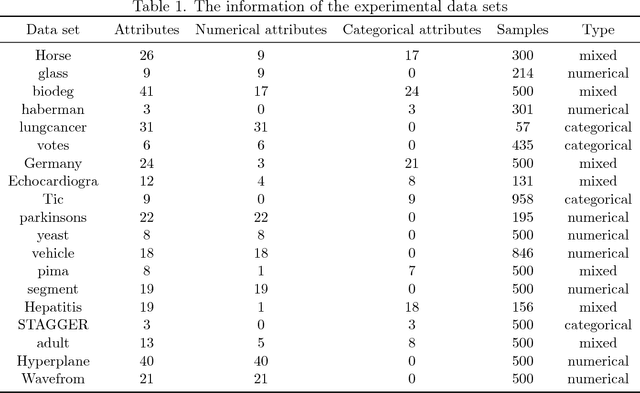

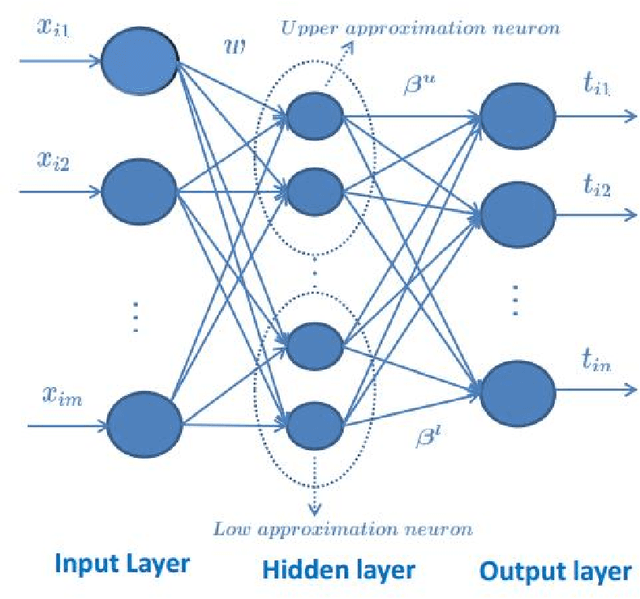

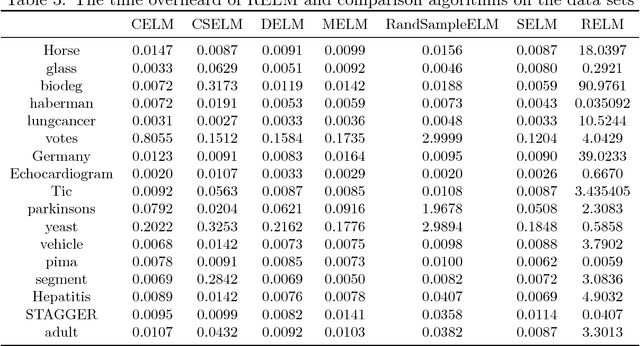

Abstract: Extreme learning machine (ELM) is a single-hidden-layer feedforward neural network. The weights of the input layer and the biases of the hidden-layer neurons are randomly generated, and the weights of the output layer can be determined analytically. ELM has achieved good results on a large number of classification tasks. In this paper, a new extreme learning machine called rough extreme learning machine (RELM) is proposed. RELM uses rough sets to divide the data into an upper approximation set and a lower approximation set, and the two approximation sets are used to train upper approximation neurons and lower approximation neurons. In addition, attribute reduction is performed in this algorithm to remove redundant attributes. The experimental results show that, compared with the baseline algorithms, RELM achieves better accuracy and repeatability in most cases. RELM not only retains the advantage of fast training but also copes effectively with classification tasks on high-dimensional data.

Feature Fusion using Extended Jaccard Graph and Stochastic Gradient Descent for Robot

Mar 24, 2017

Abstract: Robot vision is fundamental to human-robot interaction and complex robot tasks. In this paper, we use Kinect and propose feature graph fusion (FGF) for robot recognition. Our feature fusion uses RGB and depth information from Kinect to construct a fused feature. FGF uses multi-Jaccard similarity to compute a robust graph and a word embedding method to enhance the recognition results. We also collect the DUT RGB-D face dataset and use a benchmark dataset to evaluate the effectiveness and efficiency of our method. The experimental results show that FGF is robust and effective on face and object datasets in robot applications.
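As a rough illustration of building a similarity graph from a Jaccard-style measure, the sketch below uses the extended Jaccard (Tanimoto) coefficient for real-valued vectors. This is an assumption: the abstract does not define its multi-Jaccard similarity, and the function names, threshold, and sample vectors are hypothetical.

```python
def extended_jaccard(x, y):
    """Extended Jaccard (Tanimoto) coefficient: x.y / (|x|^2 + |y|^2 - x.y)."""
    dot = sum(a * b for a, b in zip(x, y))
    denom = sum(a * a for a in x) + sum(b * b for b in y) - dot
    # Two all-zero vectors give a zero denominator; treat them as dissimilar.
    return dot / denom if denom else 0.0


def similarity_graph(vectors, threshold=0.5):
    """Return weighted edges {(i, j): similarity} for pairs at or above threshold."""
    edges = {}
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            s = extended_jaccard(vectors[i], vectors[j])
            if s >= threshold:
                edges[(i, j)] = s
    return edges


# Hypothetical fused feature vectors for three samples.
features = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(similarity_graph(features))
```

For binary vectors this coefficient reduces to the ordinary Jaccard index, which is why it is a common choice when set-based similarity needs to be applied to continuous features.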

Neural method for Explicit Mapping of Quasi-curvature Locally Linear Embedding in image retrieval

Mar 11, 2017

Abstract: This paper proposes a new explicit nonlinear dimensionality reduction method using neural networks for image retrieval tasks. We first propose Quasi-curvature Locally Linear Embedding (QLLE) for the training set. QLLE guarantees the linear criterion in the neighborhood of each sample. Then, a neural method (NM) is proposed for the out-of-sample problem. Combining QLLE and NM, we provide an explicit nonlinear dimensionality reduction approach for efficient image retrieval. The experimental results on three benchmark datasets show that our method achieves better performance than other state-of-the-art out-of-sample methods.
