Faissal El Bouanani

Continual Conscious Active Fine-Tuning to Robustify Online Machine Learning Models Against Data Distribution Shifts

Nov 02, 2022

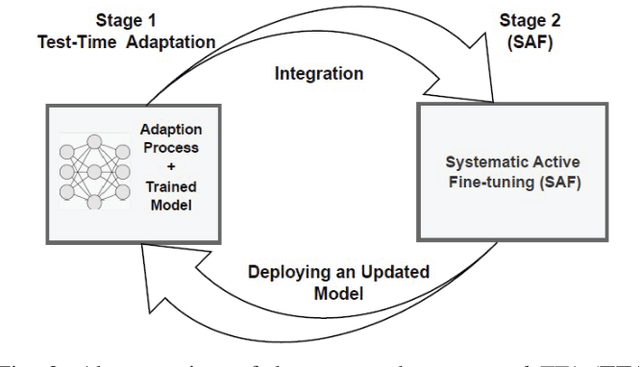

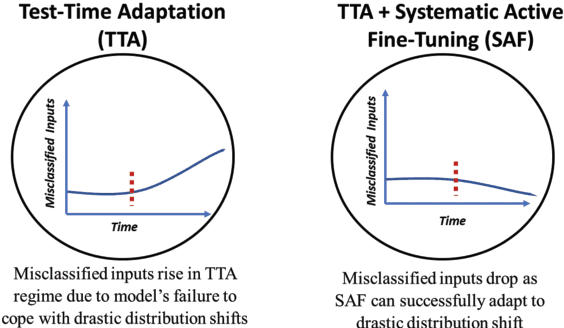

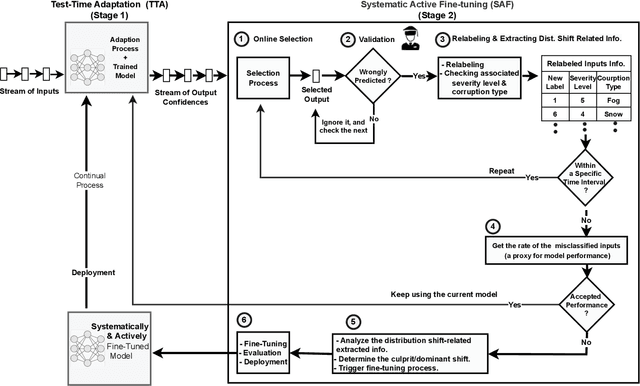

Abstract: Unlike their traditional offline counterparts, online machine learning models can handle data distribution shifts while serving at test time. However, they have limitations in addressing this phenomenon: they are either expensive or unreliable. We propose augmenting an online learning approach called test-time adaptation with a continual conscious active fine-tuning layer to develop an enhanced variation that can handle drastic data distribution shifts reliably and cost-effectively. The proposed augmentation incorporates three aspects: a continual aspect to confront never-ending data distribution shifts; a conscious aspect, meaning that fine-tuning is a distribution-shift-aware process that occurs at the appropriate time to address recently detected data distribution shifts; and an active aspect, meaning that human-machine collaboration is employed for relabeling so that the approach is cost-effective and practical for diverse applications. Our empirical results show that the enhanced test-time adaptation variation outperforms the traditional variation by a factor of two.

Interplay Between NOMA and GSSK: Detection Strategies and Performance Analysis

May 24, 2021

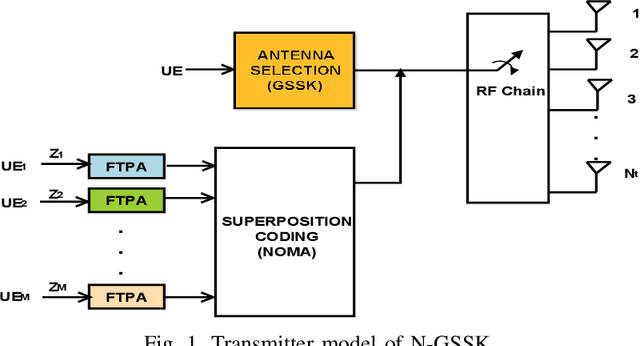

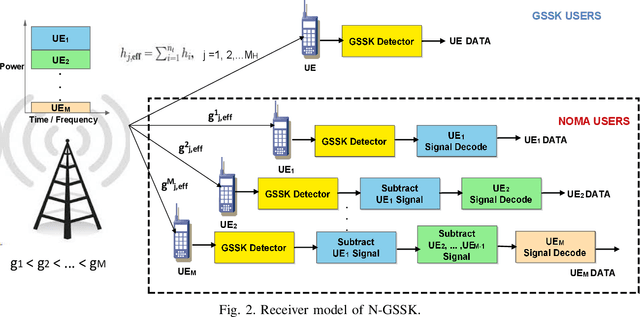

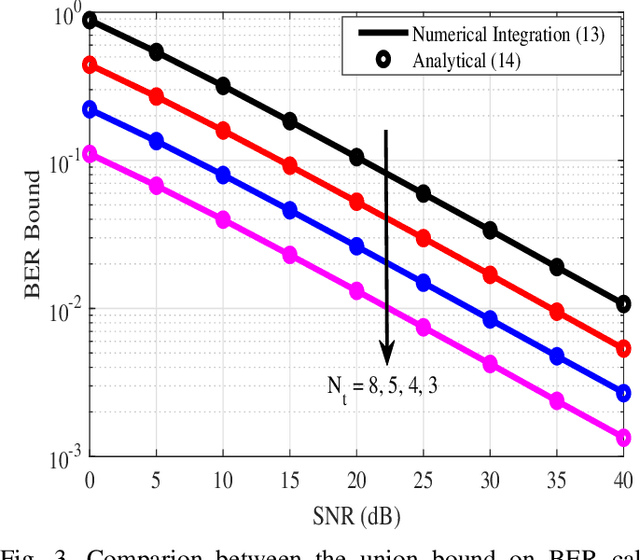

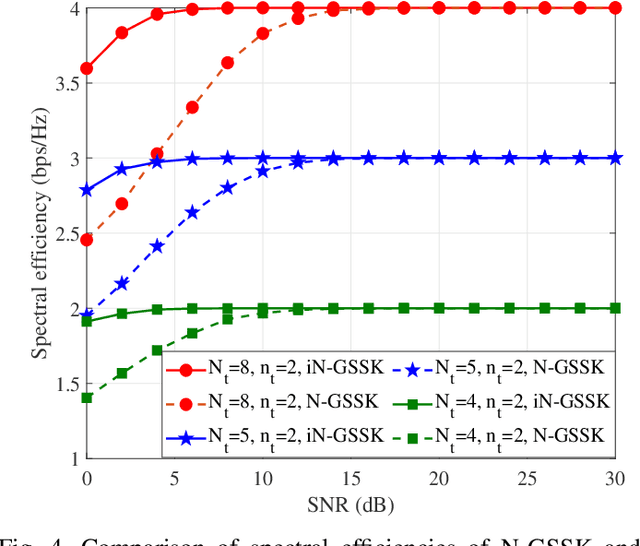

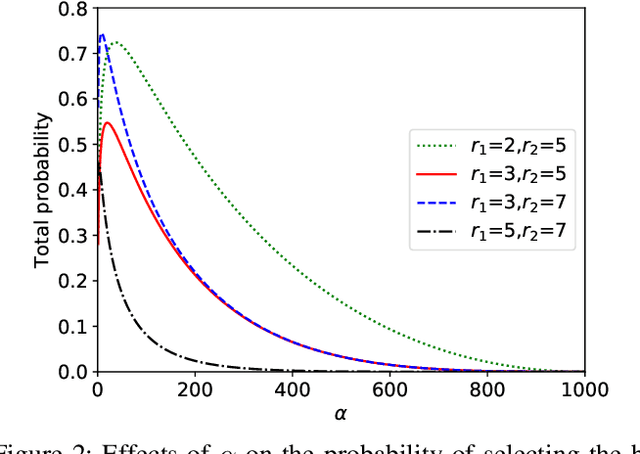

Abstract: Non-orthogonal multiple access (NOMA) is a technology enabler for fifth-generation and beyond networks, and it has shown great flexibility in that it can be readily integrated with other wireless technologies. In this paper, we investigate the interplay between NOMA and generalized space shift keying (GSSK) in a hybrid NOMA-GSSK (N-GSSK) network. Specifically, we provide a comprehensive analytical framework and propose a novel suboptimal energy-based maximum likelihood (ML) detector for the N-GSSK scheme. The proposed ML detector exploits the energy of the received signals to estimate the active antenna indices. Its performance is investigated in terms of pairwise error probability, bit error rate union bound, and achievable rate. Finally, we establish the validity of our analysis through Monte-Carlo simulations and demonstrate that N-GSSK outperforms conventional NOMA and GSSK, particularly in terms of spectral efficiency.

Budgeted Online Selection of Candidate IoT Clients to Participate in Federated Learning

Nov 16, 2020

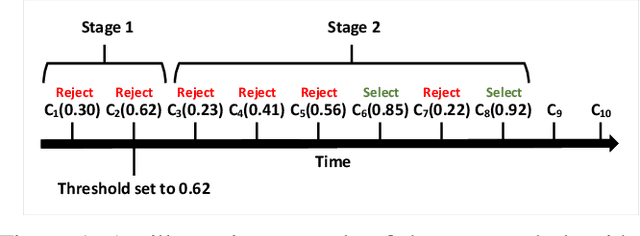

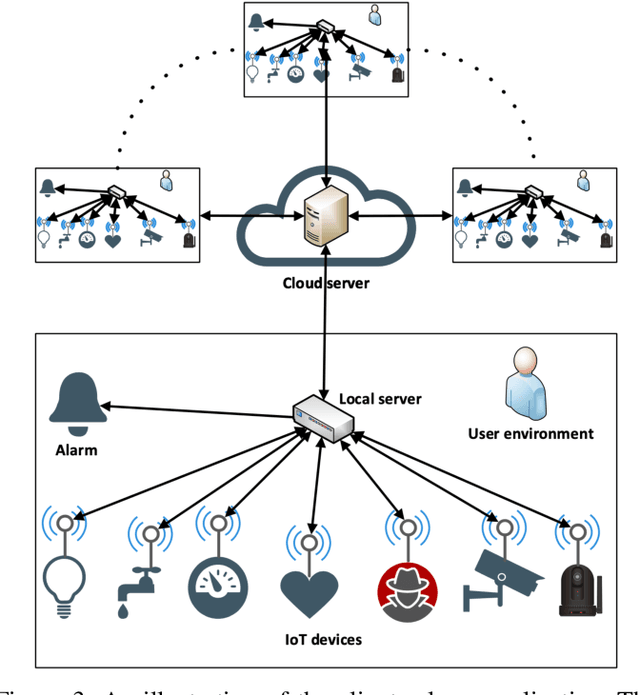

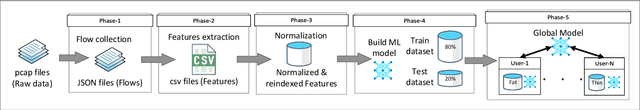

Abstract: Machine Learning (ML), and Deep Learning (DL) in particular, play a vital role in providing smart services to the industry. These techniques, however, suffer from privacy and security concerns, since data is collected from clients and then stored and processed at a central location. Federated Learning (FL), an architecture in which model parameters are exchanged instead of client data, has been proposed as a solution to these concerns. Nevertheless, FL trains a global model by communicating with clients over communication rounds, which introduces more traffic on the network and increases the convergence time to the target accuracy. In this work, we solve the problem of optimizing accuracy in stateful FL with a budgeted number of candidate clients by selecting the best candidate clients, in terms of test accuracy, to participate in the training process. Next, we propose an online stateful FL heuristic to find the best candidate clients. Additionally, we propose an IoT client alarm application that uses the proposed heuristic to train a stateful FL global model based on IoT device type classification, in order to alert clients about unauthorized IoT devices in their environment. To test the efficiency of the proposed online heuristic, we conduct several experiments using a real dataset and compare the results against state-of-the-art algorithms. Our results indicate that the proposed heuristic outperforms the online random algorithm with up to a 27% gain in accuracy. Additionally, the performance of the proposed online heuristic is comparable to that of the best offline algorithm.
