Continual Conscious Active Fine-Tuning to Robustify Online Machine Learning Models Against Data Distribution Shifts

Nov 02, 2022

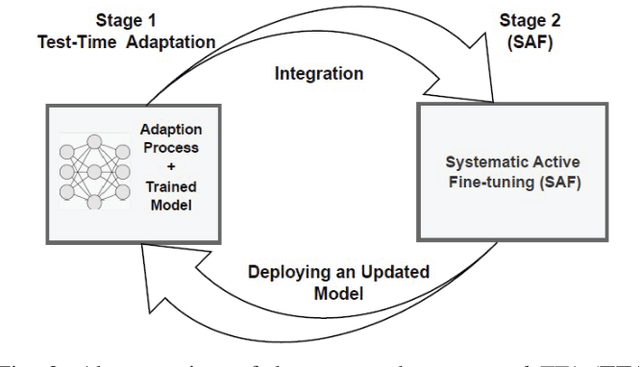

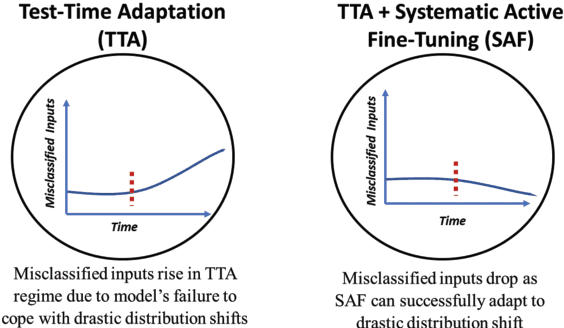

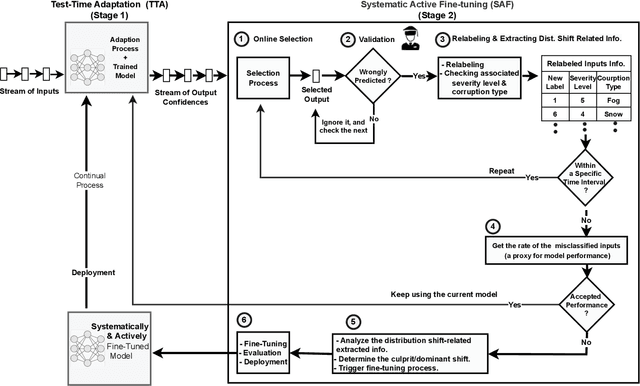

Unlike their traditional offline counterparts, online machine learning models can handle data distribution shifts while serving at test time. However, existing approaches to this problem are limited: they are either expensive or unreliable. We propose augmenting an online learning approach called test-time adaptation with a continual conscious active fine-tuning layer, yielding an enhanced variant that handles drastic data distribution shifts reliably and cost-effectively. The proposed augmentation has three aspects: a continual aspect, to confront never-ending data distribution shifts; a conscious aspect, meaning that fine-tuning is a distribution-shift-aware process that occurs at the appropriate time to address recently detected shifts; and an active aspect, meaning that human-machine collaboration is employed for relabeling so that the approach is cost-effective and practical for diverse applications. Our empirical results show that the enhanced test-time adaptation variant outperforms the traditional one by a factor of two.
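The three aspects described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not specify a shift detector or a sample-selection rule, so the z-score check on the batch mean and the least-confidence selection below are stand-in assumptions, and every name (`shift_detected`, `select_for_labeling`, `run_stream`, `confidence_fn`, `fine_tune_fn`) is hypothetical.

```python
from statistics import mean, pstdev


def shift_detected(reference, window, z_threshold=3.0):
    """Conscious aspect (assumed detector): flag a shift when the window
    mean lies far from the reference mean, measured in standard errors."""
    ref_std = pstdev(reference) or 1e-12  # guard against zero spread
    std_err = ref_std / len(window) ** 0.5
    return abs(mean(window) - mean(reference)) / std_err > z_threshold


def select_for_labeling(confidences, budget):
    """Active aspect (assumed rule): return the indices of the `budget`
    least-confident predictions, which a human annotator would relabel."""
    ranked = sorted(range(len(confidences)), key=lambda i: confidences[i])
    return ranked[:budget]


def run_stream(batches, reference, confidence_fn, fine_tune_fn, budget=2):
    """Continual aspect: inspect every incoming batch, and fine-tune only
    when a shift has just been detected. Returns the steps that fine-tuned."""
    fine_tuned_at = []
    for step, batch in enumerate(batches):
        if shift_detected(reference, batch):
            confidences = [confidence_fn(x) for x in batch]
            picked = select_for_labeling(confidences, budget)
            # In practice these samples are sent to a human for labels first.
            fine_tune_fn([batch[i] for i in picked])
            reference = batch  # the shifted data becomes the new reference
            fine_tuned_at.append(step)
    return fine_tuned_at
```

In this sketch the model is abstracted behind `confidence_fn` and `fine_tune_fn`, so the loop only shows when fine-tuning is triggered and which samples are selected; a real system would operate on model features or predictions rather than raw scalar values.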
