Fabio Stella

Causal Discovery on Higher-Order Interactions

Nov 18, 2025Abstract:Causal discovery combines data with knowledge provided by experts to learn the DAG representing the causal relationships between a given set of variables. When data are scarce, bagging is used to measure our confidence in an average DAG obtained by aggregating bootstrapped DAGs. However, the aggregation step has received little attention from the specialized literature: the average DAG is constructed using only the confidence in the individual edges of the bootstrapped DAGs, thus disregarding complex higher-order edge structures. In this paper, we introduce a novel theoretical framework based on higher-order structures and describe a new DAG aggregation algorithm. We perform a simulation study, discussing the advantages and limitations of the proposed approach. Our proposal is both computationally efficient and effective, outperforming state-of-the-art solutions, especially in low sample size regimes and under high dimensionality settings.

Expert-Guided POMDP Learning for Data-Efficient Modeling in Healthcare

Nov 18, 2025

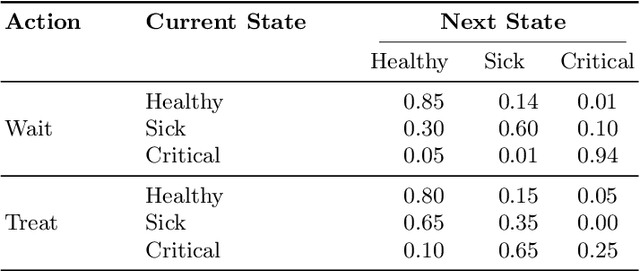

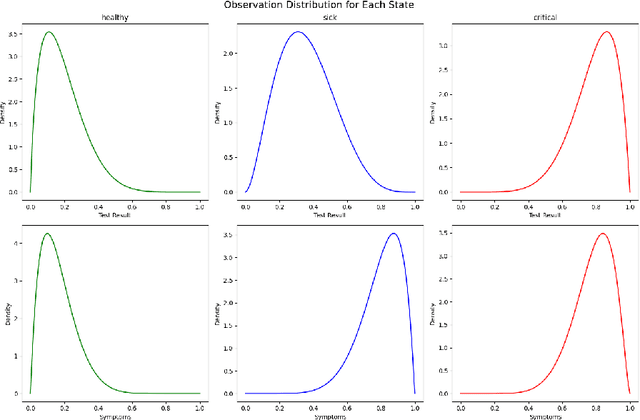

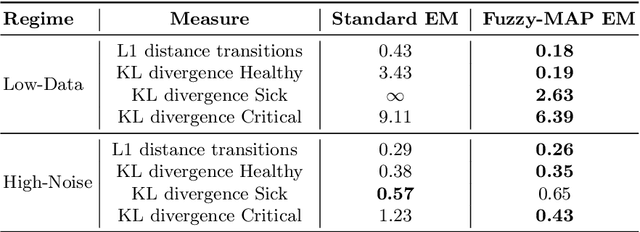

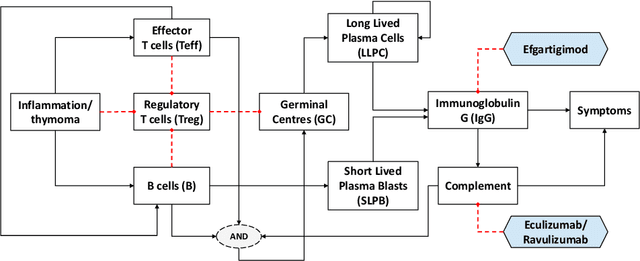

Abstract:Learning the parameters of Partially Observable Markov Decision Processes (POMDPs) from limited data is a significant challenge. We introduce the Fuzzy MAP EM algorithm, a novel approach that incorporates expert knowledge into the parameter estimation process by enriching the Expectation Maximization (EM) framework with fuzzy pseudo-counts derived from an expert-defined fuzzy model. This integration naturally reformulates the problem as a Maximum A Posteriori (MAP) estimation, effectively guiding learning in environments with limited data. In synthetic medical simulations, our method consistently outperforms the standard EM algorithm under both low-data and high-noise conditions. Furthermore, a case study on Myasthenia Gravis illustrates the ability of the Fuzzy MAP EM algorithm to recover a clinically coherent POMDP, demonstrating its potential as a practical tool for data-efficient modeling in healthcare.

LUME-DBN: Full Bayesian Learning of DBNs from Incomplete data in Intensive Care

Nov 06, 2025

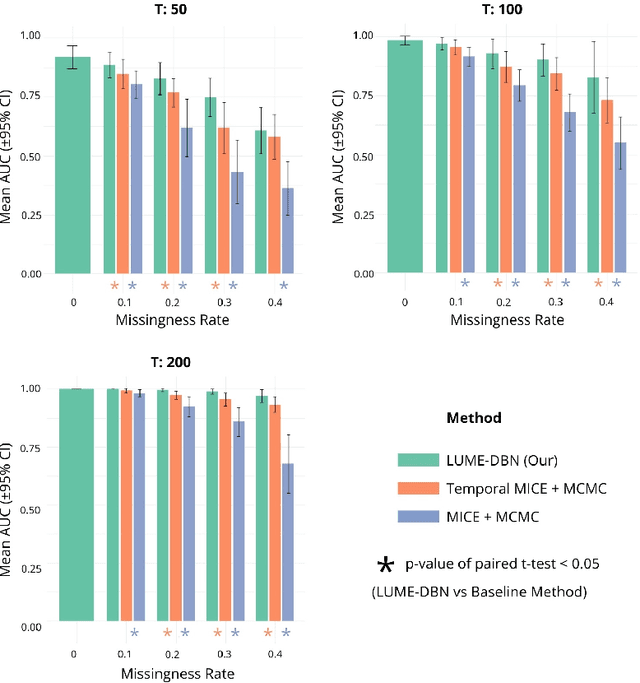

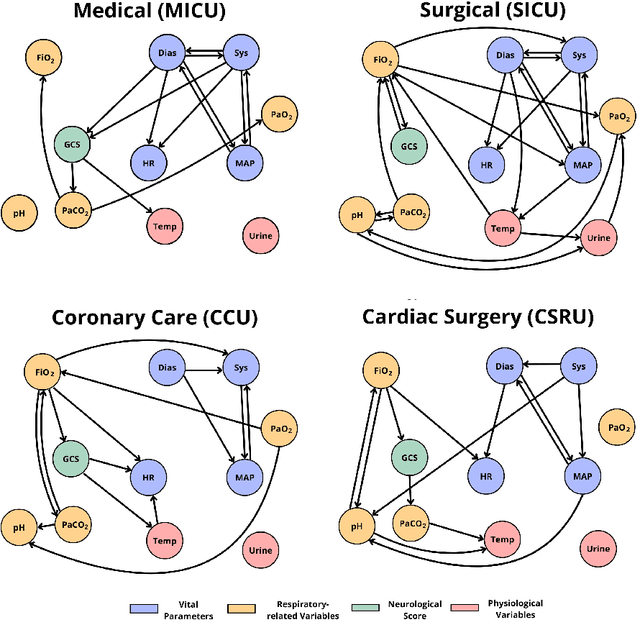

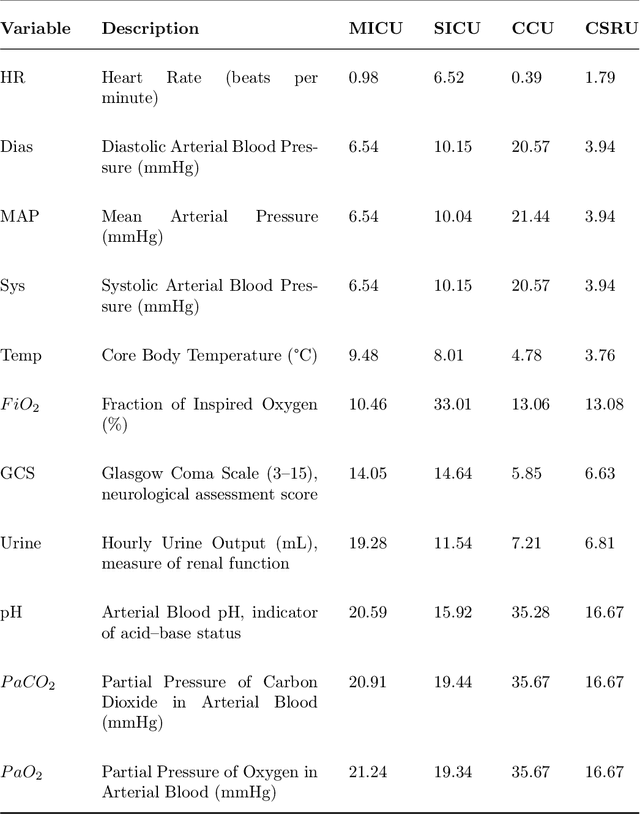

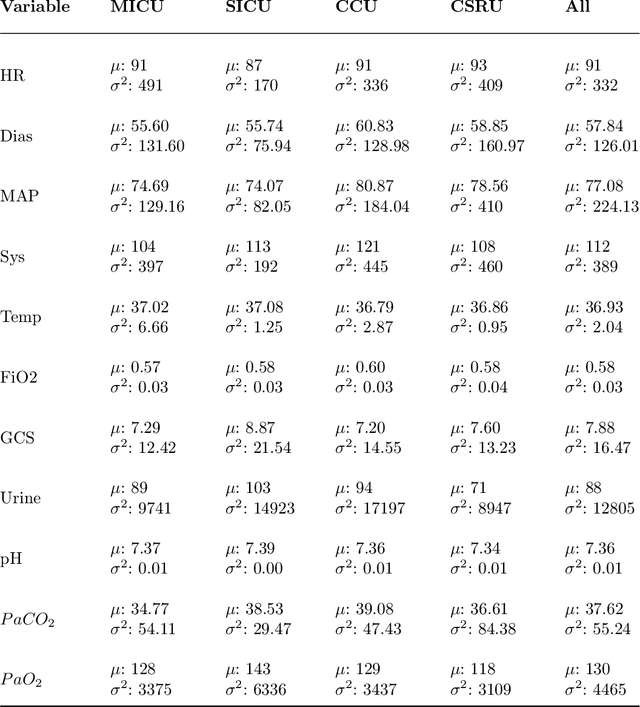

Abstract:Dynamic Bayesian networks (DBNs) are increasingly used in healthcare due to their ability to model complex temporal relationships in patient data while maintaining interpretability, an essential feature for clinical decision-making. However, existing approaches to handling missing data in longitudinal clinical datasets are largely derived from static Bayesian networks literature, failing to properly account for the temporal nature of the data. This gap limits the ability to quantify uncertainty over time, which is particularly critical in settings such as intensive care, where understanding the temporal dynamics is fundamental for model trustworthiness and applicability across diverse patient groups. Despite the potential of DBNs, a full Bayesian framework that integrates missing data handling remains underdeveloped. In this work, we propose a novel Gibbs sampling-based method for learning DBNs from incomplete data. Our method treats each missing value as an unknown parameter following a Gaussian distribution. At each iteration, the unobserved values are sampled from their full conditional distributions, allowing for principled imputation and uncertainty estimation. We evaluate our method on both simulated datasets and real-world intensive care data from critically ill patients. Compared to standard model-agnostic techniques such as MICE, our Bayesian approach demonstrates superior reconstruction accuracy and convergence properties. These results highlight the clinical relevance of incorporating full Bayesian inference in temporal models, providing more reliable imputations and offering deeper insight into model behavior. Our approach supports safer and more informed clinical decision-making, particularly in settings where missing data are frequent and potentially impactful.

Tackling Federated Unlearning as a Parameter Estimation Problem

Aug 26, 2025Abstract:Privacy regulations require the erasure of data from deep learning models. This is a significant challenge that is amplified in Federated Learning, where data remains on clients, making full retraining or coordinated updates often infeasible. This work introduces an efficient Federated Unlearning framework based on information theory, modeling leakage as a parameter estimation problem. Our method uses second-order Hessian information to identify and selectively reset only the parameters most sensitive to the data being forgotten, followed by minimal federated retraining. This model-agnostic approach supports categorical and client unlearning without requiring server access to raw client data after initial information aggregation. Evaluations on benchmark datasets demonstrate strong privacy (MIA success near random, categorical knowledge erased) and high performance (Normalized Accuracy against re-trained benchmarks of $\approx$ 0.9), while aiming for increased efficiency over complete retraining. Furthermore, in a targeted backdoor attack scenario, our framework effectively neutralizes the malicious trigger, restoring model integrity. This offers a practical solution for data forgetting in FL.

Classical and Deep Reinforcement Learning Inventory Control Policies for Pharmaceutical Supply Chains with Perishability and Non-Stationarity

Jan 18, 2025Abstract:We study inventory control policies for pharmaceutical supply chains, addressing challenges such as perishability, yield uncertainty, and non-stationary demand, combined with batching constraints, lead times, and lost sales. Collaborating with Bristol-Myers Squibb (BMS), we develop a realistic case study incorporating these factors and benchmark three policies--order-up-to (OUT), projected inventory level (PIL), and deep reinforcement learning (DRL) using the proximal policy optimization (PPO) algorithm--against a BMS baseline based on human expertise. We derive and validate bounds-based procedures for optimizing OUT and PIL policy parameters and propose a methodology for estimating projected inventory levels, which are also integrated into the DRL policy with demand forecasts to improve decision-making under non-stationarity. Compared to a human-driven policy, which avoids lost sales through higher holding costs, all three implemented policies achieve lower average costs but exhibit greater cost variability. While PIL demonstrates robust and consistent performance, OUT struggles under high lost sales costs, and PPO excels in complex and variable scenarios but requires significant computational effort. The findings suggest that while DRL shows potential, it does not outperform classical policies in all numerical experiments, highlighting 1) the need to integrate diverse policies to manage pharmaceutical challenges effectively, based on the current state-of-the-art, and 2) that practical problems in this domain seem to lack a single policy class that yields universally acceptable performance.

Causal Discovery in Recommender Systems: Example and Discussion

Sep 16, 2024Abstract:Causality is receiving increasing attention by the artificial intelligence and machine learning communities. This paper gives an example of modelling a recommender system problem using causal graphs. Specifically, we approached the causal discovery task to learn a causal graph by combining observational data from an open-source dataset with prior knowledge. The resulting causal graph shows that only a few variables effectively influence the analysed feedback signals. This contrasts with the recent trend in the machine learning community to include more and more variables in massive models, such as neural networks.

Towards a Transportable Causal Network Model Based on Observational Healthcare Data

Nov 20, 2023

Abstract:Over the last decades, many prognostic models based on artificial intelligence techniques have been used to provide detailed predictions in healthcare. Unfortunately, the real-world observational data used to train and validate these models are almost always affected by biases that can strongly impact the outcomes validity: two examples are values missing not-at-random and selection bias. Addressing them is a key element in achieving transportability and in studying the causal relationships that are critical in clinical decision making, going beyond simpler statistical approaches based on probabilistic association. In this context, we propose a novel approach that combines selection diagrams, missingness graphs, causal discovery and prior knowledge into a single graphical model to estimate the cardiovascular risk of adolescent and young females who survived breast cancer. We learn this model from data comprising two different cohorts of patients. The resulting causal network model is validated by expert clinicians in terms of risk assessment, accuracy and explainability, and provides a prognostic model that outperforms competing machine learning methods.

Analyzing Complex Systems with Cascades Using Continuous-Time Bayesian Networks

Aug 21, 2023

Abstract:Interacting systems of events may exhibit cascading behavior where events tend to be temporally clustered. While the cascades themselves may be obvious from the data, it is important to understand which states of the system trigger them. For this purpose, we propose a modeling framework based on continuous-time Bayesian networks (CTBNs) to analyze cascading behavior in complex systems. This framework allows us to describe how events propagate through the system and to identify likely sentry states, that is, system states that may lead to imminent cascading behavior. Moreover, CTBNs have a simple graphical representation and provide interpretable outputs, both of which are important when communicating with domain experts. We also develop new methods for knowledge extraction from CTBNs and we apply the proposed methodology to a data set of alarms in a large industrial system.

A Survey on Causal Discovery: Theory and Practice

May 17, 2023Abstract:Understanding the laws that govern a phenomenon is the core of scientific progress. This is especially true when the goal is to model the interplay between different aspects in a causal fashion. Indeed, causal inference itself is specifically designed to quantify the underlying relationships that connect a cause to its effect. Causal discovery is a branch of the broader field of causality in which causal graphs is recovered from data (whenever possible), enabling the identification and estimation of causal effects. In this paper, we explore recent advancements in a unified manner, provide a consistent overview of existing algorithms developed under different settings, report useful tools and data, present real-world applications to understand why and how these methods can be fruitfully exploited.

Causal Discovery with Missing Data in a Multicentric Clinical Study

May 17, 2023

Abstract:Causal inference for testing clinical hypotheses from observational data presents many difficulties because the underlying data-generating model and the associated causal graph are not usually available. Furthermore, observational data may contain missing values, which impact the recovery of the causal graph by causal discovery algorithms: a crucial issue often ignored in clinical studies. In this work, we use data from a multi-centric study on endometrial cancer to analyze the impact of different missingness mechanisms on the recovered causal graph. This is achieved by extending state-of-the-art causal discovery algorithms to exploit expert knowledge without sacrificing theoretical soundness. We validate the recovered graph with expert physicians, showing that our approach finds clinically-relevant solutions. Finally, we discuss the goodness of fit of our graph and its consistency from a clinical decision-making perspective using graphical separation to validate causal pathways.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge