Markus Zanker

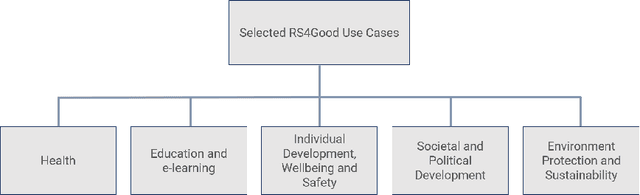

Recommender Systems for Good (RS4Good): Survey of Use Cases and a Call to Action for Research that Matters

Nov 25, 2024

Abstract: In the area of recommender systems, the vast majority of research effort is spent on developing increasingly sophisticated recommendation models that also consume ever more computational resources. Unfortunately, most of these research efforts target a very small set of application domains, mostly e-commerce and media recommendation. Furthermore, many of these models are never evaluated with users, let alone put into practice. The scientific, economic and societal value of many of these efforts by scholars therefore remains largely unclear. To achieve a stronger positive impact from these efforts, we posit that we as a research community should more often address use cases where recommender systems contribute to societal good (RS4Good). In this opinion piece, we first discuss a number of examples where the use of recommender systems for problems of societal concern has been successfully explored in the literature. We then outline a paradigmatic shift that is needed to conduct successful RS4Good research, where the key ingredients are interdisciplinary collaborations and longitudinal evaluation approaches with humans in the loop.

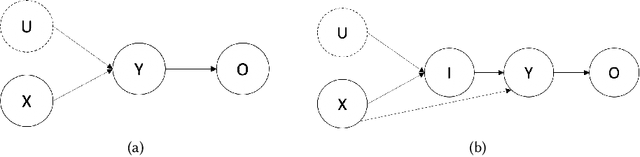

Causal Discovery in Recommender Systems: Example and Discussion

Sep 16, 2024

Abstract: Causality is receiving increasing attention from the artificial intelligence and machine learning communities. This paper gives an example of modelling a recommender system problem using causal graphs. Specifically, we approach the causal discovery task of learning a causal graph by combining observational data from an open-source dataset with prior knowledge. The resulting causal graph shows that only a few variables effectively influence the analysed feedback signals. This contrasts with the recent trend in the machine learning community to include more and more variables in massive models, such as neural networks.

A Survey on Intent-aware Recommender Systems

Jun 24, 2024

Abstract: Many modern online services feature personalized recommendations. A central challenge when providing such recommendations is that the reason why an individual user accesses the service may change from visit to visit or even during an ongoing usage session. To be effective, a recommender system should therefore aim to take the users' probable intent of using the service at a certain point in time into account. In recent years, researchers have thus started to address this challenge by incorporating intent-awareness into recommender systems. Correspondingly, a number of technical approaches were put forward, including diversification techniques, intent prediction models or latent intent modeling approaches. In this paper, we survey and categorize existing approaches to building the next generation of Intent-Aware Recommender Systems (IARS). Based on an analysis of current evaluation practices, we outline open gaps and possible future directions in this area, which in particular include the consideration of additional interaction signals and contextual information to further improve the effectiveness of such systems.

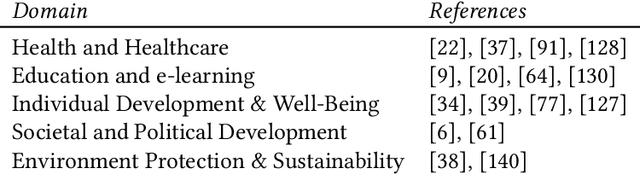

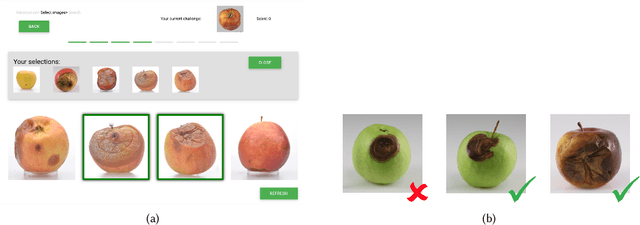

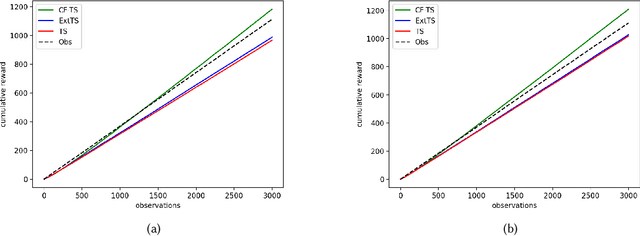

Counterfactual Contextual Multi-Armed Bandit: a Real-World Application to Diagnose Apple Diseases

Feb 08, 2021

Abstract: Post-harvest diseases of apple are one of the major issues in the economic sector of apple production, causing severe economic losses to producers. Thus, we developed DSSApple, a picture-based decision support system able to help users in the diagnosis of apple diseases. Specifically, this paper addresses the problem of sequentially optimizing for the best diagnosis, leveraging past interactions with the system and their contextual information (i.e. the evidence provided by the users). The problem of learning an online model while optimizing for its outcome is commonly addressed in the literature through a stochastic active learning paradigm, namely the Contextual Multi-Armed Bandit (CMAB). This methodology interactively updates the decision model by considering the success of each past interaction with respect to the context provided in each round. However, this information is very often partial and inadequate for handling such complex decision-making problems. On the other hand, human decisions implicitly include unobserved factors (referred to in the literature as unobserved confounders) that significantly contribute to the human's final decision. In this paper, we take advantage of the information embedded in the observed human decisions to marginalize confounding factors and improve the capability of the CMAB model to identify the correct diagnosis. Specifically, we propose a Counterfactual Contextual Multi-Armed Bandit, a model based on the causal concept of counterfactual. The proposed model is validated with offline experiments based on data collected through a large user study on the application. The results show that our model is able to outperform both traditional CMAB algorithms and observed user decisions in real-world tasks of predicting the correct apple disease.
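To make the CMAB loop described in the abstract above more concrete, here is a minimal sketch of a plain epsilon-greedy contextual bandit. It is not the counterfactual model proposed in the paper and it is not taken from DSSApple: the class name `EpsilonGreedyCMAB`, the function `simulate`, the context labels (`ctx_a`, `ctx_b`) and the diagnosis labels are all hypothetical, and the reward is assumed to be 1 for a correct diagnosis and 0 otherwise.

```python
import random
from collections import defaultdict


class EpsilonGreedyCMAB:
    """Tabular contextual bandit: one running mean reward per (context, arm)."""

    def __init__(self, arms, epsilon=0.1, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (context, arm) -> number of pulls
        self.values = defaultdict(float)  # (context, arm) -> mean observed reward

    def select(self, context):
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return max(self.arms, key=lambda arm: self.values[(context, arm)])

    def update(self, context, arm, reward):
        # Incremental mean update for the chosen arm in this context.
        key = (context, arm)
        self.counts[key] += 1
        self.values[key] += (reward - self.values[key]) / self.counts[key]


def simulate(rounds=2000, seed=0):
    # Hypothetical ground truth: each evidence pattern maps to one diagnosis.
    truth = {"ctx_a": "diagnosis_1", "ctx_b": "diagnosis_2"}
    arms = ["diagnosis_0", "diagnosis_1", "diagnosis_2"]
    bandit = EpsilonGreedyCMAB(arms, epsilon=0.1, seed=seed)
    env_rng = random.Random(seed + 1)
    for _ in range(rounds):
        context = env_rng.choice(list(truth))          # evidence given this round
        arm = bandit.select(context)                   # proposed diagnosis
        reward = 1.0 if arm == truth[context] else 0.0 # feedback on success
        bandit.update(context, arm, reward)
    return bandit
```

In each round the model sees a context, proposes one arm, and updates only the estimate for that (context, arm) pair, which is the partial-feedback setting the abstract refers to. A model of the kind the abstract proposes would additionally use the observed human decision as information; that step is deliberately left out here.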

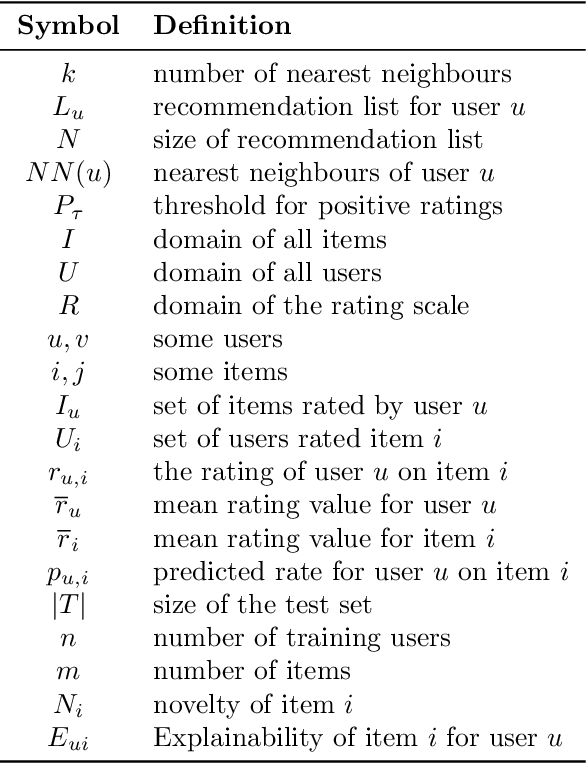

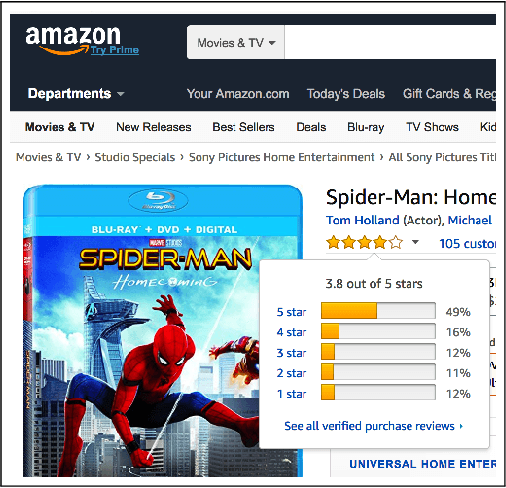

Personalised novel and explainable matrix factorisation

Jul 25, 2019

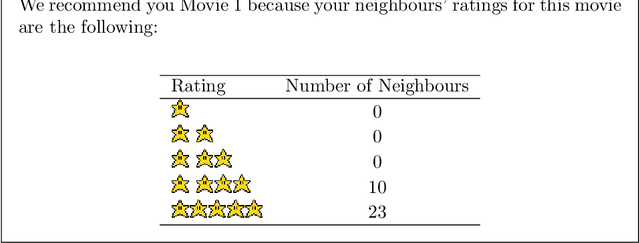

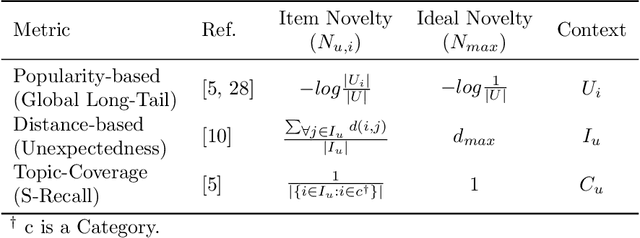

Abstract: Recommendation systems personalise suggestions to individuals to help them in their decision making and exploration tasks. In the ideal case, these recommendations, besides being accurate, should also be novel and explainable. However, up to now most platforms fail to provide both novel recommendations that advance users' exploration and explanations that make the reasoning behind them more transparent. For instance, a well-known recommendation algorithm such as matrix factorisation (MF) optimises only the accuracy criterion, while disregarding other quality criteria such as the explainability or the novelty of recommended items. In this paper, we propose what is, to the best of our knowledge, a new model, denoted as NEMF, that makes it possible to trade off MF performance with respect to the criteria of novelty and explainability while only minimally compromising on accuracy. In addition, we propose a new explainability metric based on nDCG, which distinguishes a more explainable item from a less explainable item. An initial user study indicates how users perceive the different attributes of these "user"-style explanations, and our extensive experimental results demonstrate that we attain high accuracy while also recommending novel and explainable items.
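As background for the nDCG-based metric mentioned in the abstract above, the following is a minimal sketch of standard nDCG with linear gains. The abstract does not give the definition of the paper's explainability metric, so using a per-item explainability score as the gain is purely an assumption for illustration, and the function names `dcg` and `ndcg` are hypothetical.

```python
import math


def dcg(gains):
    """Discounted cumulative gain of a ranked list of gains (rank 1 first)."""
    # Position `rank` is 0-based, so the discount is log2(rank + 2).
    return sum(g / math.log2(rank + 2) for rank, g in enumerate(gains))


def ndcg(gains):
    """DCG normalised by the DCG of the ideal (descending) ordering."""
    ideal = dcg(sorted(gains, reverse=True))
    return dcg(gains) / ideal if ideal > 0 else 0.0
```

Under this assumption, a recommendation list that places more explainable items nearer the top scores closer to 1, while a list that ranks them last scores lower.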
