Fabio Sciarrino

Deep reinforcement learning for quantum multiparameter estimation

Sep 01, 2022

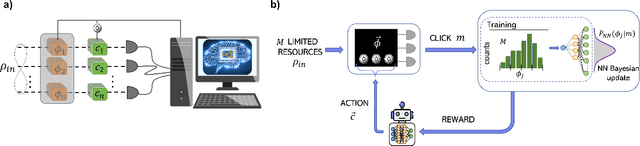

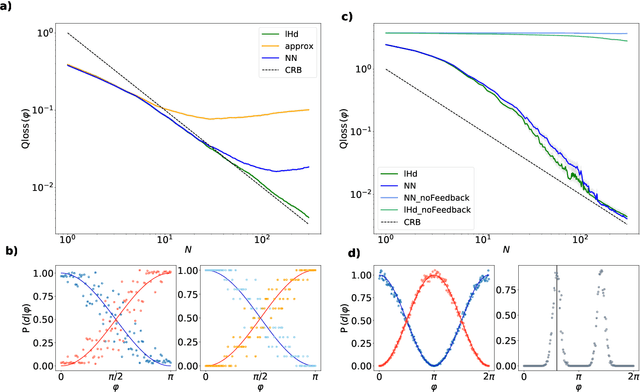

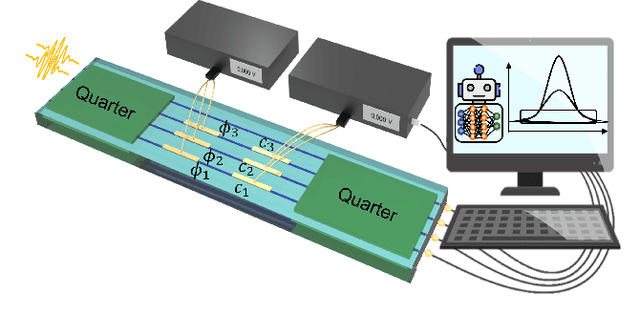

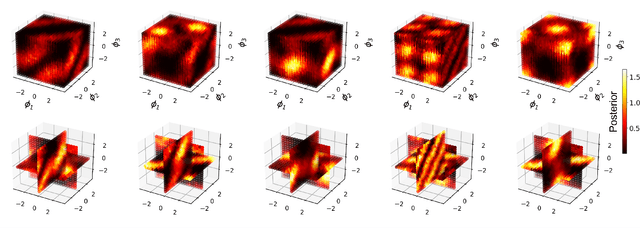

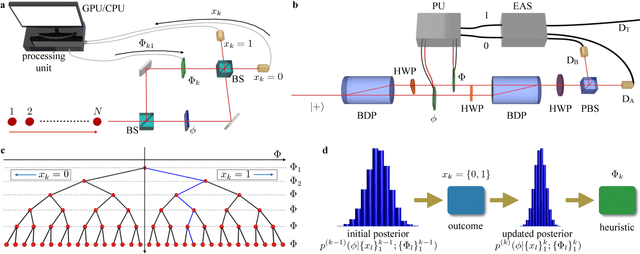

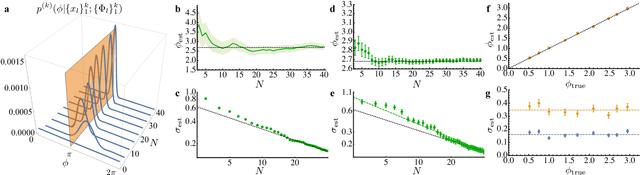

Abstract: Estimation of physical quantities is at the core of most scientific research, and the use of quantum devices promises to enhance its performance. In real scenarios, it is fundamental to consider that resources are limited, and Bayesian adaptive estimation represents a powerful approach to efficiently allocate all the available resources during the estimation process. However, this framework relies on precise knowledge of the system model, obtained through a fine calibration that is often computationally and experimentally demanding. Here, we introduce a model-free and deep learning-based approach to efficiently implement realistic Bayesian quantum metrology tasks, addressing all the relevant challenges without relying on any a priori knowledge of the system. To remove the need for a calibrated model, a neural network is trained directly on experimental data to learn the multiparameter Bayesian update. Then, the system is set at its optimal working point through feedback provided by a reinforcement learning algorithm trained to reconstruct and enhance experiment heuristics of the investigated quantum sensor. Notably, we experimentally demonstrate higher estimation performance than standard methods, showing the strength of the combination of these two black-box algorithms on an integrated photonic circuit. This work represents an important step towards fully artificial intelligence-based quantum metrology.

Regression of high dimensional angular momentum states of light

Jun 20, 2022

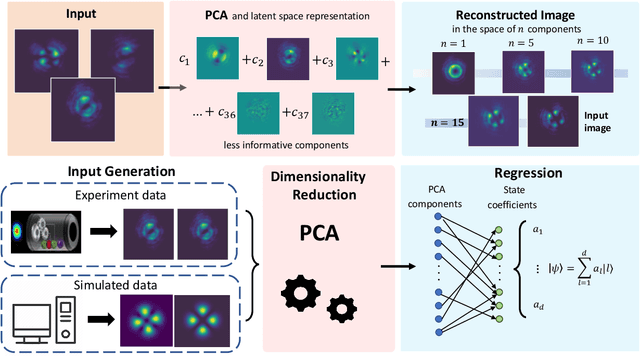

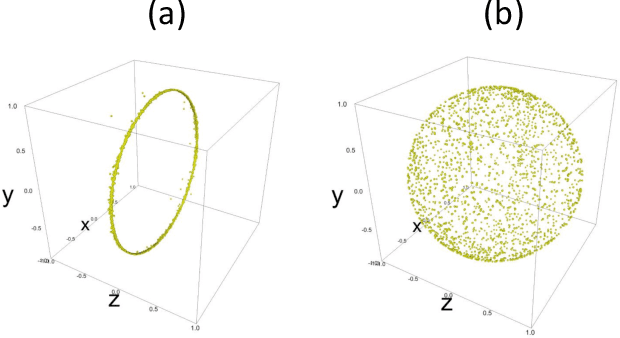

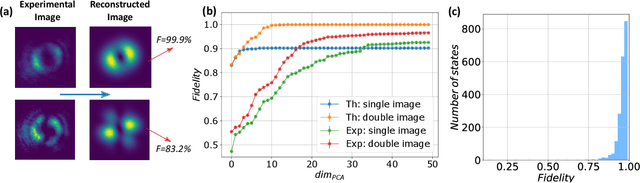

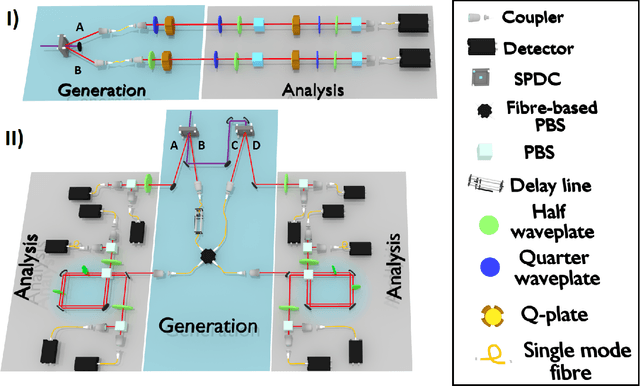

Abstract: The Orbital Angular Momentum (OAM) of light is an infinite-dimensional degree of freedom with several applications in both classical and quantum optics. However, to take full advantage of the potential of OAM states, reliable detection platforms are needed to characterize generated states in experimental conditions. Here, we present an approach to reconstruct input OAM states from measurements of the spatial intensity distributions they produce. To obviate issues arising from the intrinsic symmetry of Laguerre-Gauss modes, we employ a pair of intensity profiles per state, obtained by projecting it onto only two distinct bases, and show that this allows the input states to be uniquely recovered from the collected data. Our approach is based on a combined application of dimensionality reduction via principal component analysis and linear regression, and thus has a low computational cost during both training and testing stages. We showcase our approach in a real photonic setup, generating up-to-four-dimensional OAM states through quantum walk dynamics. The high performance and versatility of the demonstrated approach make it an ideal tool to characterize high dimensional states in quantum information protocols.
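
The pipeline named in this abstract (principal component analysis followed by linear regression) can be illustrated with a minimal sketch. It is not the authors' code: the data are synthetic stand-ins generated by a random linear map (`mixing`), and the sizes, noise level, and names such as `make_data` and `project` are assumptions made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_train, n_test, n_coeffs, n_pixels, n_components = 400, 100, 4, 64, 8

# Hypothetical linear map from state coefficients to "intensity images".
mixing = rng.normal(size=(n_coeffs, n_pixels))

def make_data(n):
    """Generate synthetic (image, coefficient) pairs with small additive noise."""
    coeffs = rng.uniform(size=(n, n_coeffs))
    images = coeffs @ mixing + 0.01 * rng.normal(size=(n, n_pixels))
    return images, coeffs

x_train, y_train = make_data(n_train)
x_test, y_test = make_data(n_test)

# PCA: centre the training images and keep the leading right singular vectors.
mean = x_train.mean(axis=0)
_, _, vt = np.linalg.svd(x_train - mean, full_matrices=False)
components = vt[:n_components]

def project(x):
    """Project images onto the principal components and append a bias column."""
    z = (x - mean) @ components.T
    return np.hstack([z, np.ones((len(z), 1))])

# Linear regression from the reduced features to the state coefficients.
weights, *_ = np.linalg.lstsq(project(x_train), y_train, rcond=None)
y_pred = project(x_test) @ weights
mse = float(np.mean((y_pred - y_test) ** 2))
print(f"held-out mean squared error: {mse:.2e}")
```

Both stages reduce to one singular value decomposition and one least-squares solve, which is consistent with the low computational cost mentioned in the abstract.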

Ab-initio experimental violation of Bell inequalities

Aug 02, 2021

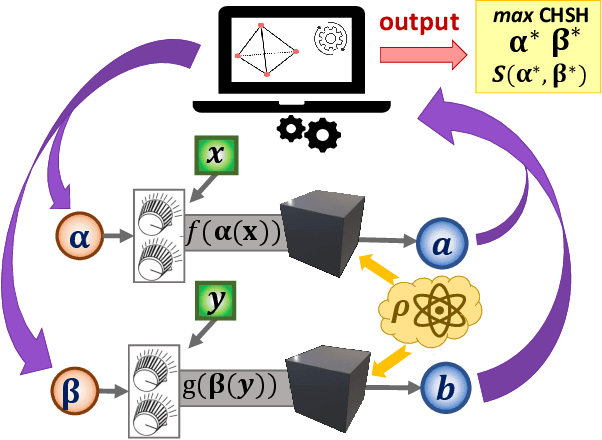

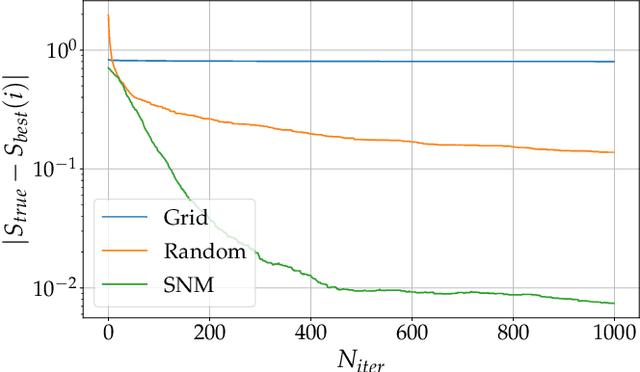

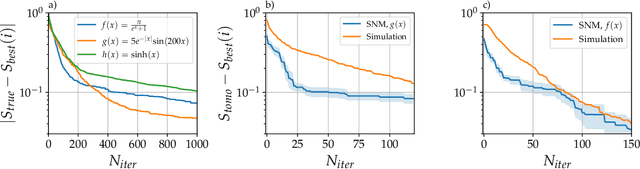

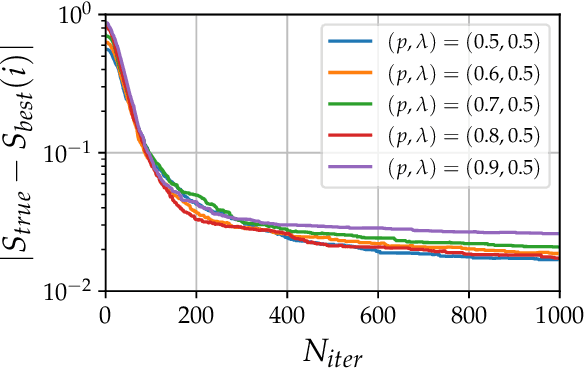

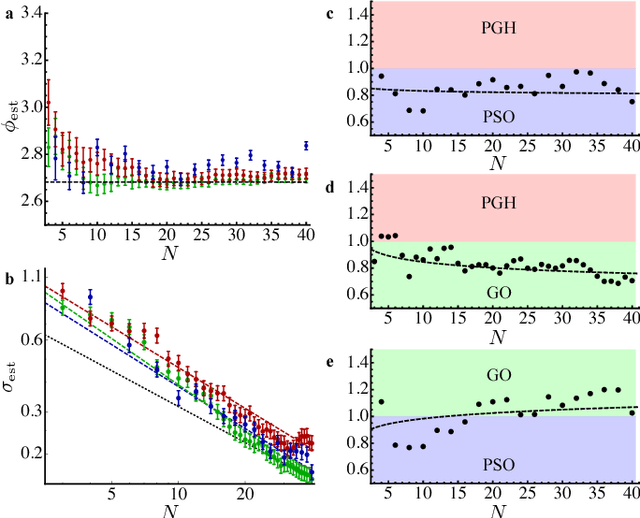

Abstract: The violation of a Bell inequality is the paradigmatic example of device-independent quantum information: the nonclassicality of the data is certified without knowledge of how the devices function. In practice, however, all Bell experiments rely on a precise understanding of the underlying physical mechanisms. Given that, it is natural to ask: Can one witness nonclassical behaviour in a truly black-box scenario? Here we propose and implement, computationally and experimentally, a solution to this ab-initio task. It exploits a robust automated optimization approach based on the Stochastic Nelder-Mead algorithm. Treating preparation and measurement devices as black boxes, and relying on the observed statistics only, our adaptive protocol approaches the optimal Bell inequality violation after a limited number of iterations for a variety of photonic states, measurement responses and Bell scenarios. In particular, we exploit it for randomness certification from unknown states and measurements. Our results demonstrate the power of automated algorithms, opening a new avenue for the experimental implementation of device-independent quantum technologies.

Causal networks and freedom of choice in Bell's theorem

May 12, 2021

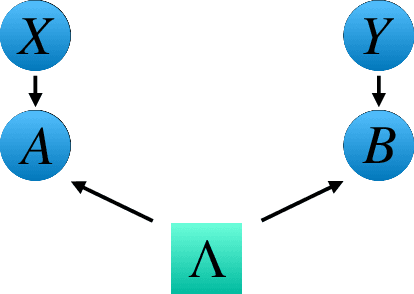

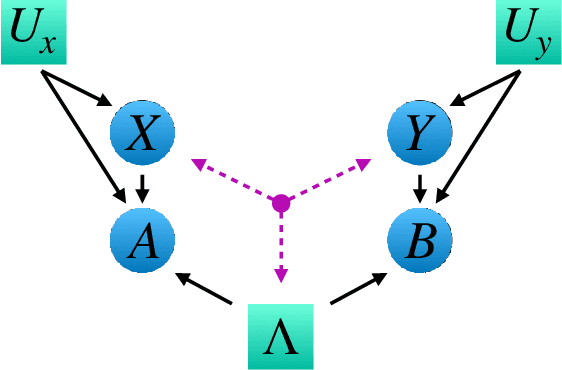

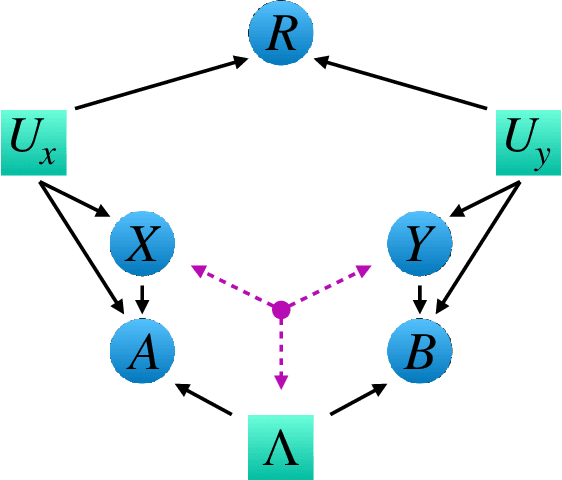

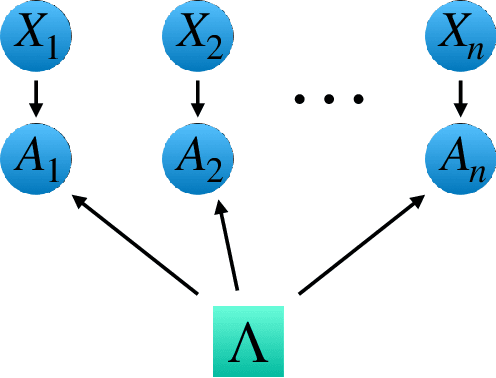

Abstract: Bell's theorem is typically understood as the proof that quantum theory is incompatible with local hidden variable models. More generally, we can see the violation of a Bell inequality as witnessing the impossibility of explaining quantum correlations with classical causal models. The violation of a Bell inequality, however, does not exclude classical models where some level of measurement dependence is allowed, that is, where the choices made by observers can be correlated with the source generating the systems to be measured. Here we show that the level of measurement dependence can be quantitatively upper bounded if we arrange the Bell test within a network. Furthermore, we prove that these results can be adapted to derive non-linear Bell inequalities for a large class of causal networks and to identify quantumly realizable correlations that violate them.

Experimental Phase Estimation Enhanced By Machine Learning

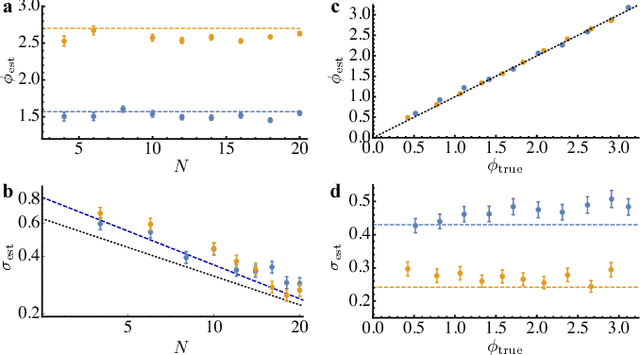

Dec 20, 2017

Abstract: Phase estimation protocols provide a fundamental benchmark for the field of quantum metrology. The latter represents one of the most relevant applications of quantum theory, potentially enabling the measurement of unknown physical parameters with improved precision over classical strategies. Within this context, most theoretical and experimental studies have focused on determining the fundamental bounds and how to achieve them in the asymptotic regime, where a large number of resources is employed. However, in most applications it is necessary to achieve optimal precision by performing only a limited number of measurements. To this end, machine learning techniques can be applied as a powerful optimization tool. Here, we experimentally implement single-photon adaptive phase estimation protocols enhanced by machine learning, showing the capability of reaching optimal precision after a small number of trials. In particular, we introduce a new approach for Bayesian estimation that exhibits the best performance for a very low number of photons N. Furthermore, we study the resilience to noise of the tested methods, showing that the optimized Bayesian approach is very robust in the presence of imperfections. Application of this methodology can be envisaged in the more general multiparameter case, which represents a paradigmatic scenario for several tasks including imaging or Hamiltonian learning.

* 10+4 pages, 6+3 figures
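
As a rough illustration of single-photon Bayesian phase estimation with feedback, the sketch below updates a posterior on a grid after each detected photon. It is a generic textbook-style example, not the optimized protocol of the paper: the ideal interferometer likelihood P(0 | phi, theta) = (1 + cos(phi - theta)) / 2, the restriction of phi to [0, pi], and the feedback rule that places the current estimate at mid-fringe are all assumptions, as are the function names.

```python
import numpy as np

def likelihood(outcome, phi, theta):
    """Probability of a detector outcome (0 or 1) for an ideal interferometer."""
    p0 = 0.5 * (1.0 + np.cos(phi - theta))
    return p0 if outcome == 0 else 1.0 - p0

def estimate_phase(true_phi, n_photons=200, grid_size=1000, seed=0):
    """Simulate n_photons detections and return (posterior mean, posterior)."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, np.pi, grid_size)
    posterior = np.full(grid_size, 1.0 / grid_size)  # flat prior on [0, pi]
    for _ in range(n_photons):
        estimate = float(np.sum(grid * posterior))
        # Assumed feedback heuristic: set the control phase so that the
        # current estimate sits at the mid-fringe point of the interferometer.
        theta = estimate - np.pi / 2
        p0_true = 0.5 * (1.0 + np.cos(true_phi - theta))
        outcome = 0 if rng.random() < p0_true else 1  # simulated detection
        # Bayes rule on the grid: multiply by the likelihood, then normalize.
        posterior = posterior * likelihood(outcome, grid, theta)
        posterior /= posterior.sum()
    return float(np.sum(grid * posterior)), posterior

phase_estimate, final_posterior = estimate_phase(true_phi=1.0)
print(f"estimated phase: {phase_estimate:.3f} rad")
```

A grid posterior keeps the update exact up to discretization, which is convenient when only a small number of photons N is available and the posterior is far from Gaussian.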

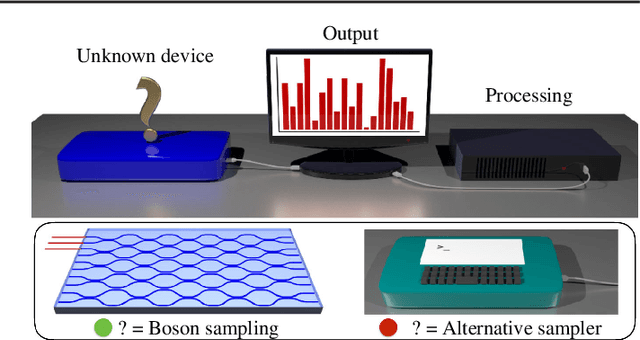

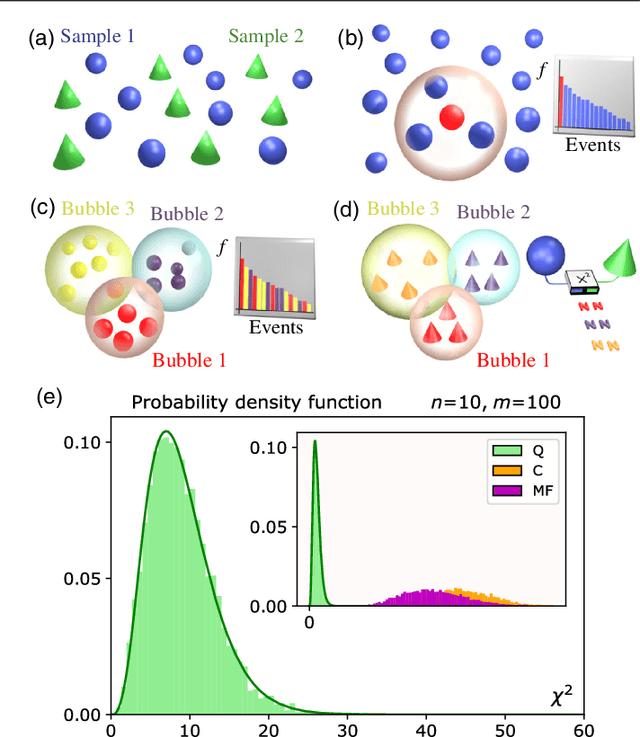

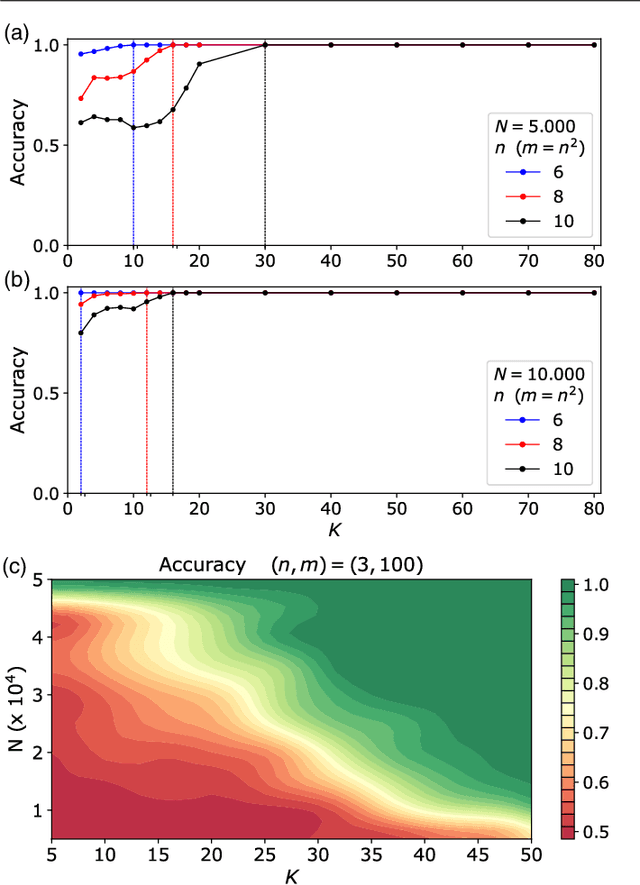

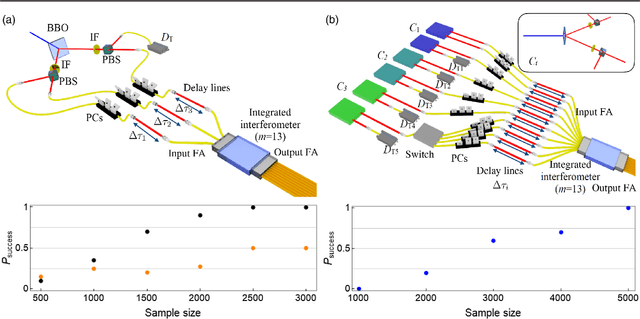

Pattern recognition techniques for Boson Sampling validation

Dec 19, 2017

Abstract: The difficulty of validating large-scale quantum devices, such as Boson Samplers, poses a major challenge for any research program that aims to show quantum advantages over classical hardware. To address this problem, we propose a novel data-driven approach wherein models are trained to identify common pathologies using unsupervised machine learning methods. We illustrate this idea by training a classifier that exploits K-means clustering to distinguish between Boson Samplers that use indistinguishable photons and those that do not. We train the model on numerical simulations of small-scale Boson Samplers and then validate the pattern recognition technique on larger numerical simulations as well as on photonic chips in both traditional Boson Sampling and scattershot experiments. The effectiveness of this method relies on particle-type-dependent internal correlations present in the output distributions. This approach performs substantially better on the test data than previous methods and underscores the ability to further generalize its operation beyond the scope of the examples that it was trained on.
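
The clustering step mentioned in this abstract can be sketched with a plain two-cluster K-means. This is only an illustration of the technique: the two-dimensional Gaussian features are synthetic stand-ins for whatever correlation-based features separate the two kinds of sampler, and the farthest-point initialization and the name `kmeans_two_clusters` are assumptions, not details taken from the paper.

```python
import numpy as np

def kmeans_two_clusters(points, n_iter=50):
    """Lloyd's algorithm for k = 2 with a deterministic farthest-point start."""
    first = points[0]
    second = points[np.argmax(np.linalg.norm(points - first, axis=1))]
    centers = np.stack([first, second]).astype(float)
    for _ in range(n_iter):
        # Assign every point to its nearest center.
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        # Move each center to the mean of the points assigned to it.
        new_centers = np.array([points[labels == k].mean(axis=0) for k in range(2)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

# Synthetic stand-in features for two hypothetical device classes.
rng = np.random.default_rng(0)
class_a = rng.normal(loc=0.0, scale=1.0, size=(200, 2))
class_b = rng.normal(loc=5.0, scale=1.0, size=(200, 2))
features = np.vstack([class_a, class_b])
truth = np.array([0] * 200 + [1] * 200)

labels, centers = kmeans_two_clusters(features)
agreement = float(np.mean(labels == truth))
accuracy = max(agreement, 1.0 - agreement)  # cluster indices are arbitrary
print(f"clustering accuracy: {accuracy:.3f}")
```

Once the centers are fixed, a new sample can be classified by its nearest center, which is one simple way to turn an unsupervised clustering into a classifier.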

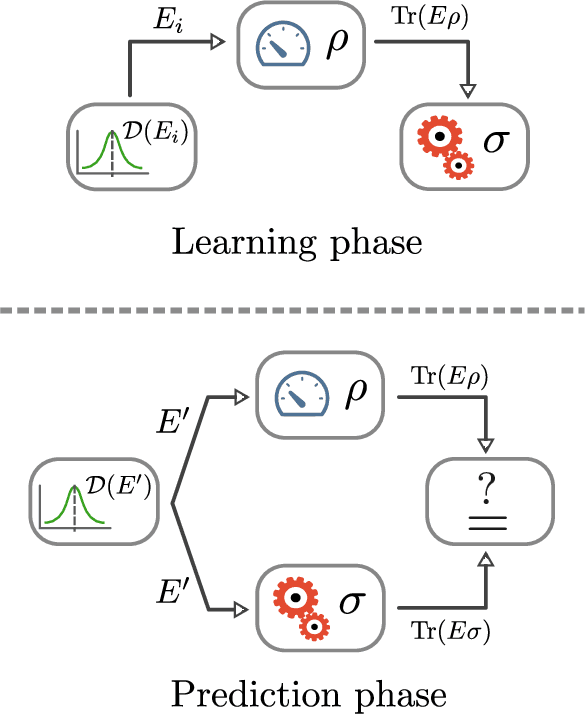

Experimental learning of quantum states

Nov 30, 2017

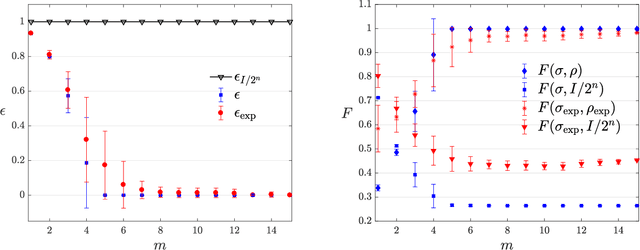

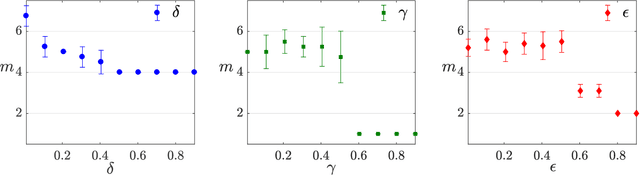

Abstract: The number of parameters describing a quantum state is well known to grow exponentially with the number of particles. This scaling clearly limits our ability to do tomography to systems with no more than a few qubits and has been used to argue against the universal validity of quantum mechanics itself. However, from a computational learning theory perspective, it can be shown that, in a probabilistic setting, quantum states can be approximately learned using only a linear number of measurements. Here we experimentally demonstrate this linear scaling in optical systems with up to 6 qubits. Our results highlight the power of computational learning theory to investigate quantum information, provide the first experimental demonstration that quantum states can be "probably approximately learned" with access to a number of copies of the state that scales linearly with the number of qubits, and pave the way to probing quantum states at new, larger scales.
