Evan Sheehan

Efficient Conditional Pre-training for Transfer Learning

Dec 10, 2020

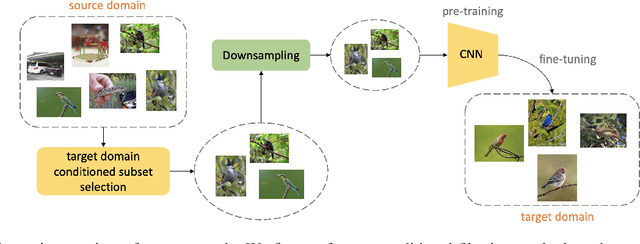

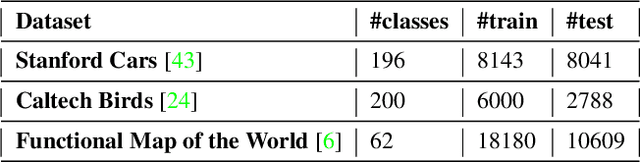

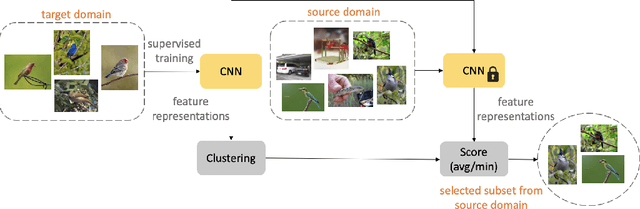

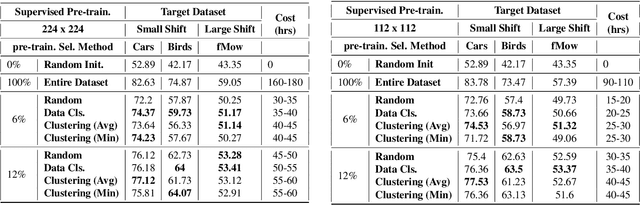

Abstract: Almost all state-of-the-art neural networks for computer vision tasks are trained by (1) pre-training on a large-scale dataset and (2) fine-tuning on the target dataset. This strategy helps reduce the dependency on the target dataset and improves convergence rate and generalization on the target task. Although pre-training on large-scale datasets is very useful, its foremost disadvantage is high training cost. To address this, we propose efficient target dataset conditioned filtering methods to remove less relevant samples from the pre-training dataset. Unlike prior work, we focus on efficiency, adaptability, and flexibility in addition to performance. Additionally, we discover that lowering image resolutions in the pre-training step offers a great trade-off between cost and performance. We validate our techniques by pre-training on ImageNet in both the unsupervised and supervised settings and fine-tuning on a diverse collection of target datasets and tasks. Our proposed methods drastically reduce pre-training cost and provide strong performance boosts.

Predicting Economic Development using Geolocated Wikipedia Articles

May 11, 2019

Abstract: Progress on the UN Sustainable Development Goals (SDGs) is hampered by a persistent lack of data regarding key social, environmental, and economic indicators, particularly in developing countries. For example, data on poverty (the first of seventeen SDGs) is both spatially sparse and infrequently collected in Sub-Saharan Africa due to the high cost of surveys. Here we propose a novel method for estimating socioeconomic indicators using open-source, geolocated textual information from Wikipedia articles. We demonstrate that modern NLP techniques can be used to predict community-level asset wealth and education outcomes using nearby geolocated Wikipedia articles. When paired with nightlights satellite imagery, our method outperforms all previously published benchmarks for this prediction task, indicating the potential of Wikipedia to inform both research in the social sciences and future policy decisions.

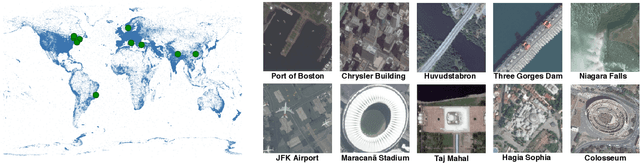

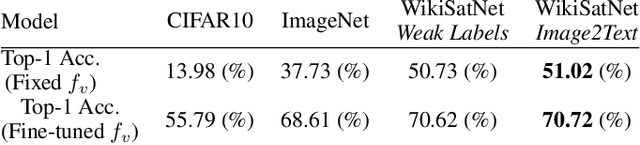

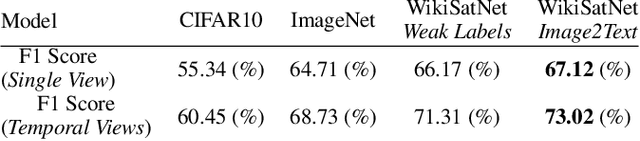

Learning to Interpret Satellite Images in Global Scale Using Wikipedia

May 11, 2019

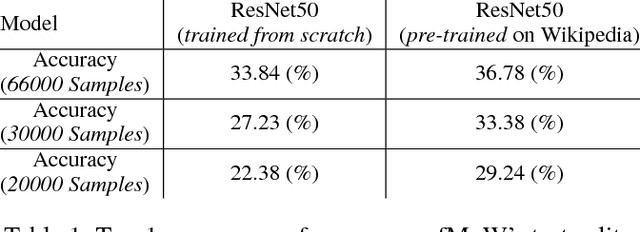

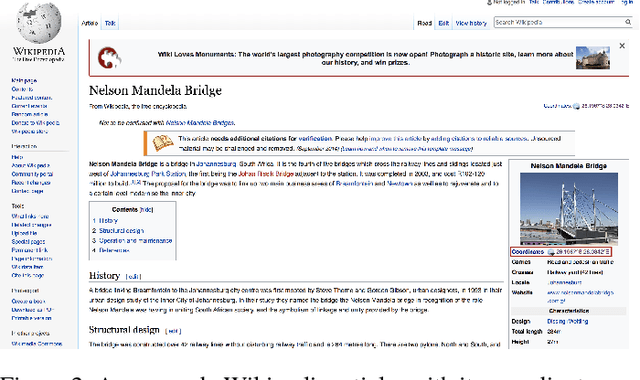

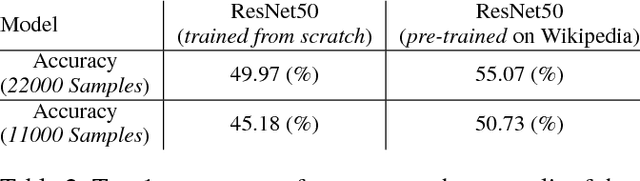

Abstract: Despite recent progress in computer vision, fine-grained interpretation of satellite images remains challenging because of a lack of labeled training data. To overcome this limitation, we construct a novel dataset called WikiSatNet by pairing georeferenced Wikipedia articles with satellite imagery of their corresponding locations. We then propose two strategies to learn representations of satellite images by predicting properties of the corresponding articles from the images. Leveraging this new multi-modal dataset, we can drastically reduce the quantity of human-annotated labels and time required for downstream tasks. On the recently released fMoW dataset, our pre-training strategies can boost the performance of a model pre-trained on ImageNet by up to 4.5% in F1 score.

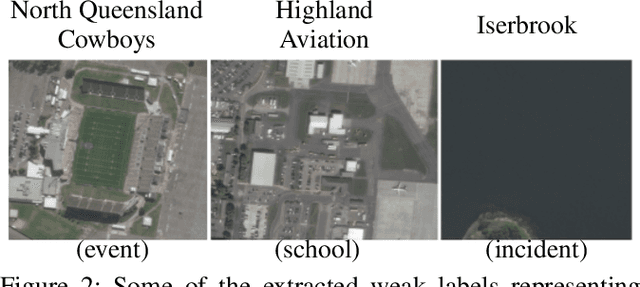

Learning to Interpret Satellite Images Using Wikipedia

Sep 19, 2018

Abstract: Despite recent progress in computer vision, fine-grained interpretation of satellite images remains challenging because of a lack of labeled training data. To overcome this limitation, we propose using Wikipedia as a previously untapped source of rich, georeferenced textual information with global coverage. We construct a novel large-scale, multi-modal dataset by pairing georeferenced Wikipedia articles with satellite imagery of their corresponding locations. To prove the efficacy of this dataset, we focus on the African continent and train a deep network to classify images based on labels extracted from articles. We then fine-tune the model on a human-annotated dataset and demonstrate that this weak form of supervision can drastically reduce the quantity of human-annotated labels and time required for downstream tasks.
