Ettore Merlo

How to Certify Machine Learning Based Safety-critical Systems? A Systematic Literature Review

Aug 03, 2021

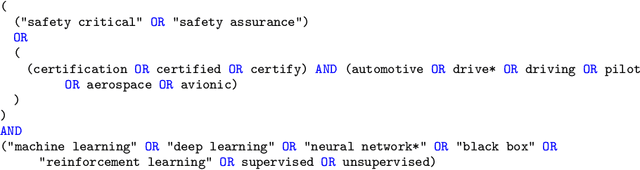

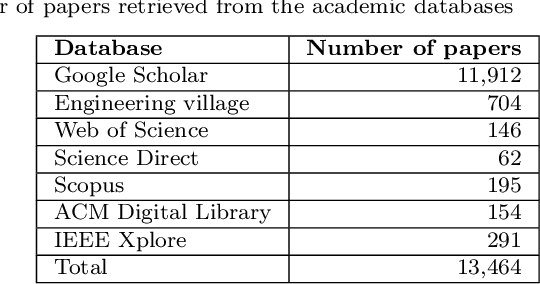

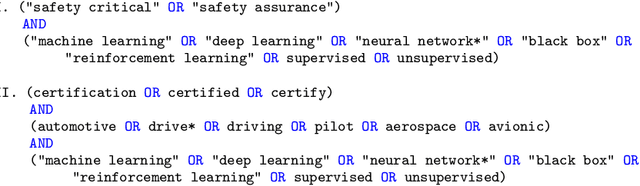

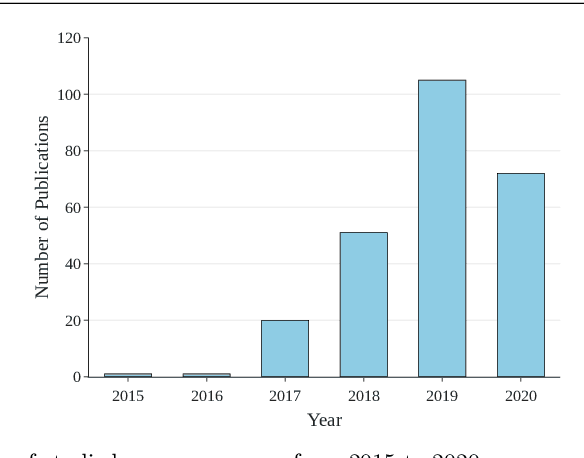

Abstract: Context: Machine Learning (ML) has been at the heart of many innovations over the past years. However, including it in so-called 'safety-critical' systems, such as those in the automotive or aeronautic domains, has proven to be very challenging, since the shift in paradigm that ML brings completely changes traditional certification approaches. Objective: This paper aims to elucidate challenges related to the certification of ML-based safety-critical systems, as well as the solutions that are proposed in the literature to tackle them, answering the question 'How to Certify Machine Learning Based Safety-critical Systems?'. Method: We conduct a Systematic Literature Review (SLR) of research papers published between 2015 and 2020, covering topics related to the certification of ML systems. In total, we identified 217 papers covering topics considered to be the main pillars of ML certification: Robustness, Uncertainty, Explainability, Verification, Safe Reinforcement Learning, and Direct Certification. We analyzed the main trends and problems of each sub-field and provided summaries of the papers extracted. Results: The SLR results highlighted the enthusiasm of the community for this subject, as well as the lack of diversity in terms of datasets and types of models. They also emphasized the need to further develop connections between academia and industry to deepen the study of the domain. Finally, they illustrated the necessity of building connections between the above-mentioned main pillars, which are for now mainly studied separately. Conclusion: We highlight current efforts deployed to enable the certification of ML-based software systems, and discuss some future research directions.

Models of Computational Profiles to Study the Likelihood of DNN Metamorphic Test Cases

Jul 28, 2021

Abstract: Neural network test cases are meant to exercise different reasoning paths in an architecture and are used to validate the prediction outcomes. In this paper, we introduce "computational profiles" as vectors of neuron activation levels. We investigate the distribution of computational profile likelihood of metamorphic test cases with respect to the likelihood distributions of training, test, and error control cases. We estimate the non-parametric probability densities of neuron activation levels for each distinct output class. Probabilities are inferred using training cases only, without any additional knowledge about metamorphic test cases. Experiments are performed by training a network on the Fashion-MNIST image dataset and comparing prediction likelihoods with those obtained from error control data and from metamorphic test cases. Experimental results show that the distributions of computational profile likelihood for training and test cases are somewhat similar, while the likelihood distribution of the random-noise control data is always remarkably lower than the one observed for the training and test sets. In contrast, metamorphic test cases show a prediction likelihood that lies in an extended range with respect to training, test, and random-noise cases. Moreover, the presented approach allows the independent assessment of different training classes, and experiments show that some classes are more sensitive to misclassifying metamorphic test cases than others. In conclusion, metamorphic test cases represent very aggressive tests for neural network architectures. Furthermore, since metamorphic test cases force a network to misclassify inputs whose likelihood is similar to that of training cases, they could also be considered adversarial attacks that evade defenses based on computational profile likelihood evaluation.
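The abstract does not say which density estimator is used, so the following is only a minimal sketch of the general idea, under stated assumptions: a per-neuron Gaussian kernel density estimate fitted on training activations of one class, with neurons treated as independent so that the profile log-likelihood is a sum over neurons. The function names, the `bandwidth` parameter, and the independence assumption are illustrative and do not come from the paper.

```python
import numpy as np

def fit_class_profiles(activations, labels):
    """Group training activation vectors (computational profiles) by output class.

    activations: array of shape (n_cases, n_neurons)
    labels:      array of shape (n_cases,) with integer class labels
    """
    return {int(c): activations[labels == c] for c in np.unique(labels)}

def log_likelihood(profile, class_samples, bandwidth=0.1):
    """Log-likelihood of one profile under a per-neuron Gaussian KDE.

    Assumption (not from the paper): neurons are treated as independent,
    so the per-neuron log densities are simply summed.
    """
    # Scaled distance from the profile to every training sample, per neuron.
    diffs = (profile[None, :] - class_samples) / bandwidth
    log_kernels = -0.5 * diffs**2 - np.log(bandwidth * np.sqrt(2.0 * np.pi))
    # Log of the mean kernel value per neuron, computed with log-sum-exp
    # so that very unlikely profiles do not underflow to -inf.
    peak = log_kernels.max(axis=0)
    log_density = peak + np.log(np.exp(log_kernels - peak).mean(axis=0))
    return float(log_density.sum())
```

With such a sketch, a profile taken from a random-noise input would be expected to score a much lower log-likelihood than a profile resembling the training activations of the predicted class, which is the kind of comparison the abstract describes.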
