Elnar Hajiyev

Facial Emotion Recognition using Deep Residual Networks in Real-World Environments

Nov 04, 2021

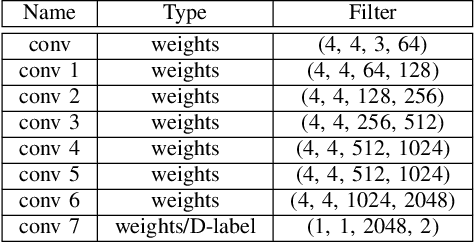

Abstract: Automatic affect recognition using visual cues is an important task towards a complete interaction between humans and machines. Applications can be found in tutoring systems and human-computer interaction. A critical step in that direction is facial feature extraction. In this paper, we propose a facial feature extractor model trained on an in-the-wild and massively collected video dataset provided by the RealEyes company. The dataset consists of a million labelled frames and 2,616 thousand subjects. As temporal information is important to the emotion recognition domain, we utilise LSTM cells to capture the temporal dynamics in the data. To show the favourable properties of our pre-trained model for modelling facial affect, we use the RECOLA database and compare with the current state-of-the-art approach. Our model provides the best results in terms of concordance correlation coefficient.
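The concordance correlation coefficient (CCC) named above as the evaluation metric can be sketched as follows. This is a minimal illustration of the standard definition, 2·cov(x, y) / (var(x) + var(y) + (mean(x) − mean(y))²), using population statistics; the function name `concordance_cc` and the toy inputs are assumptions for illustration, not code from the paper.

```python
from statistics import fmean


def concordance_cc(pred, gold):
    """Concordance correlation coefficient between two equal-length sequences.

    Uses population (divide-by-n) variance and covariance.
    """
    pred = list(pred)
    gold = list(gold)
    if len(pred) != len(gold) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")

    mean_p = fmean(pred)
    mean_g = fmean(gold)

    # Population variances and covariance.
    var_p = fmean([(p - mean_p) ** 2 for p in pred])
    var_g = fmean([(g - mean_g) ** 2 for g in gold])
    cov = fmean([(p - mean_p) * (g - mean_g) for p, g in zip(pred, gold)])

    denom = var_p + var_g + (mean_p - mean_g) ** 2
    if denom == 0:
        # Both sequences are constant and identical; CCC is undefined here.
        raise ValueError("CCC is undefined for two identical constant sequences")
    return 2 * cov / denom


# Hypothetical per-frame predictions vs. annotations.
score = concordance_cc([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
```

Unlike Pearson correlation, the CCC penalises a constant offset between predictions and annotations: the toy example above is perfectly correlated but scores below 1 because the two sequences differ in mean.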

Analysing Affective Behavior in the second ABAW2 Competition

Jul 03, 2021

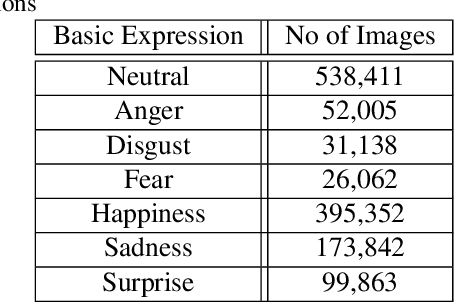

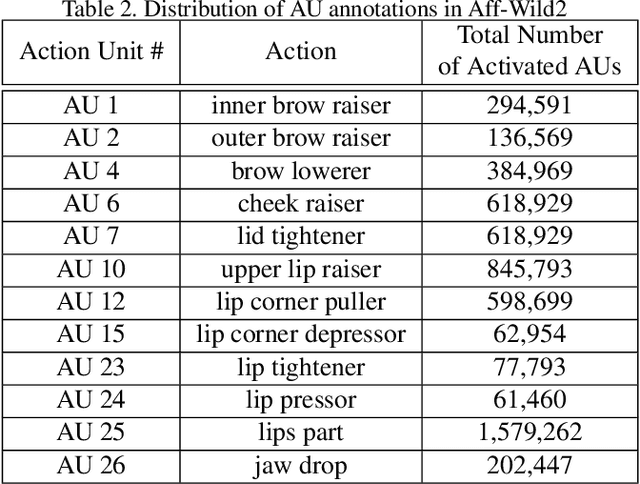

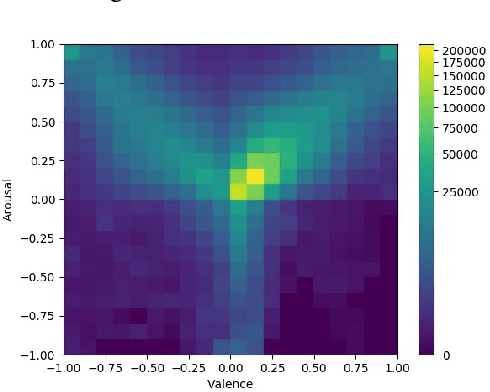

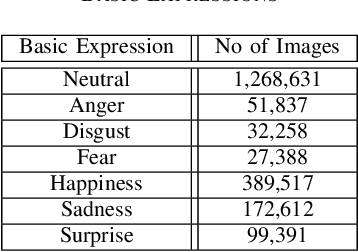

Abstract: The Affective Behavior Analysis in-the-wild (ABAW2) 2021 Competition is the second Competition that aims at automatically analyzing affect, following the first very successful ABAW Competition held in conjunction with IEEE FG 2020. ABAW2 is split into three Challenges, each one addressing one of the three main behavior tasks of valence-arousal estimation, basic expression classification and action unit detection. All three Challenges are based on a common benchmark database, Aff-Wild2, which is a large-scale in-the-wild database and the first one to be annotated for all three tasks. In this paper, we describe this Competition, to be held in conjunction with ICCV 2021. We present the three Challenges, with the utilized Competition corpora. We outline the evaluation metrics and present the baseline system with its results. More information regarding the Competition is provided on the Competition site: https://ibug.doc.ic.ac.uk/resources/iccv-2021-2nd-abaw.

Analysing Affective Behavior in the First ABAW 2020 Competition

Jan 30, 2020

Abstract: The Affective Behavior Analysis in-the-wild (ABAW) 2020 Competition is the first Competition aiming at automatic analysis of the three main behavior tasks of valence-arousal estimation, basic expression recognition and action unit detection. It is split into three Challenges, each one addressing a respective behavior task. For the Challenges, we provide a common benchmark database, Aff-Wild2, which is a large-scale in-the-wild database and the first one annotated for all three tasks. In this paper, we describe this Competition, to be held in conjunction with the IEEE Conference on Face and Gesture Recognition, May 2020, in Buenos Aires, Argentina. We present the three Challenges, with the utilized Competition corpora. We outline the evaluation metrics and present the baseline methodologies and the results obtained when these are applied to each Challenge. More information regarding the Competition, and details on how to access the utilized database, are provided on the Competition site: http://ibug.doc.ic.ac.uk/resources/fg-2020-competition-affective-behavior-analysis.

SEWA DB: A Rich Database for Audio-Visual Emotion and Sentiment Research in the Wild

Jan 09, 2019

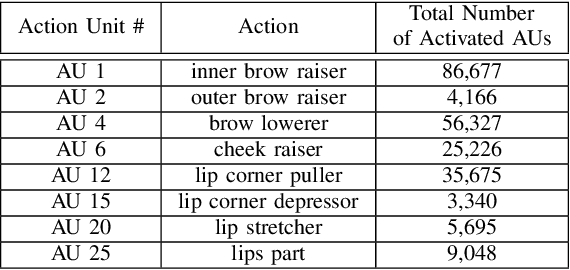

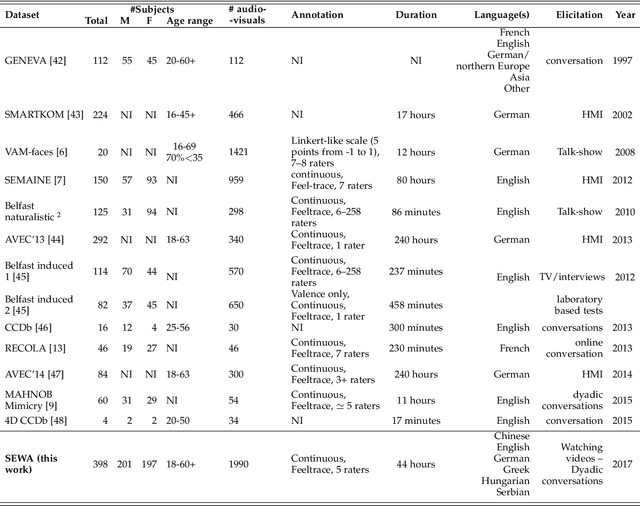

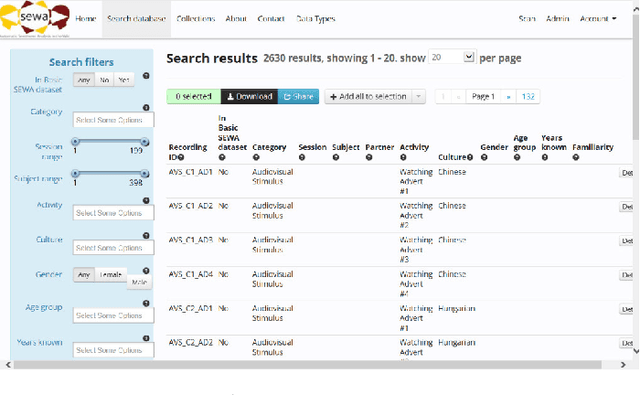

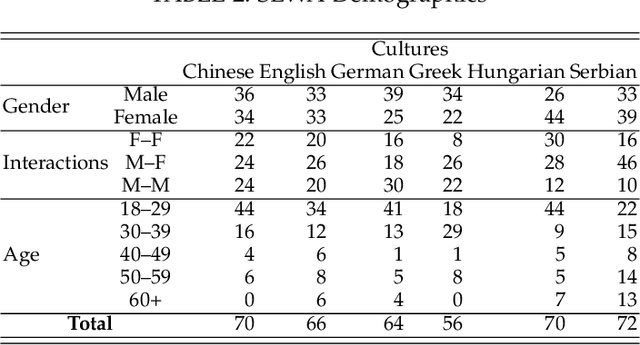

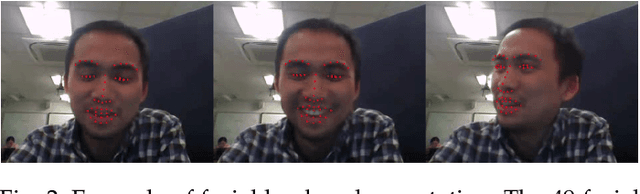

Abstract: Natural human-computer interaction and audio-visual human behaviour sensing systems that achieve robust performance in-the-wild are needed more than ever, as digital devices are increasingly becoming an indispensable part of our lives. Accurately annotated real-world data are the crux in devising such systems. However, existing databases usually consider controlled settings, low demographic variability, and a single task. In this paper, we introduce the SEWA database of more than 2000 minutes of audio-visual data of 398 people coming from six cultures, 50% female, and uniformly spanning the age range of 18 to 65 years old. Subjects were recorded in two different contexts: while watching adverts and while discussing adverts in a video chat. The database includes rich annotations of the recordings in terms of facial landmarks, facial action units (FAU), various vocalisations, mirroring, and continuously valued valence, arousal, liking, agreement, and prototypic examples of (dis)liking. This database aims to be an extremely valuable resource for researchers in affective computing and automatic human sensing and is expected to push forward the research in human behaviour analysis, including cultural studies. Along with the database, we provide extensive baseline experiments for automatic FAU detection and automatic valence, arousal and (dis)liking intensity estimation.
