Dongmei Cai

Compressive Shack-Hartmann Wavefront Sensing based on Deep Neural Networks

Nov 20, 2020

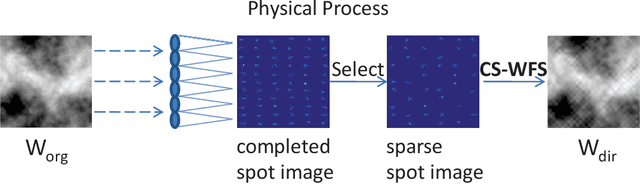

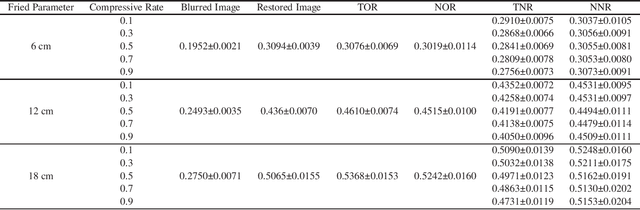

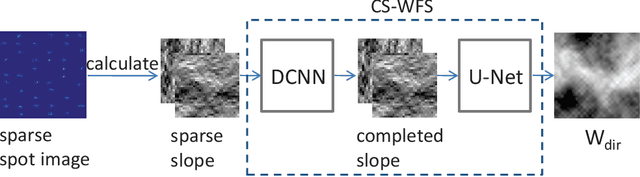

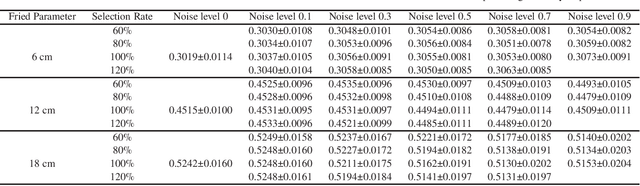

Abstract: The Shack-Hartmann wavefront sensor is widely used to measure aberrations induced by atmospheric turbulence in adaptive optics systems. However, when atmospheric turbulence is strong or guide stars are faint, the accuracy of wavefront measurements degrades. In this paper, we propose a compressive Shack-Hartmann wavefront sensing method. Instead of reconstructing wavefronts from the slope measurements of all sub-apertures, our method uses only the slope measurements of sub-apertures whose spot images have a high signal-to-noise ratio. We further propose to use a deep neural network to accelerate wavefront reconstruction. During the training stage of the deep neural network, we add a drop-out layer to simulate the compressive sensing process, which speeds up the development of our method. After training, the compressive Shack-Hartmann wavefront sensing method can reconstruct wavefronts at high spatial resolution with slope measurements from only a small number of sub-apertures. We integrate the straightforward compressive Shack-Hartmann wavefront sensing method with an image deconvolution algorithm to develop a high-order image restoration method, and we use the images it restores to test the performance of the compressive Shack-Hartmann wavefront sensing method. The results show that our method can improve the accuracy of wavefront measurements and is suitable for real-time applications.
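The sub-aperture selection and the drop-out idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `select_by_snr` and `random_dropout`, the SNR threshold, and the choice to zero out rejected slopes (without the rescaling that standard drop-out layers apply) are all assumptions made for illustration.

```python
import random

def select_by_snr(slopes, snr, threshold):
    """Keep slope pairs from sub-apertures whose spot SNR reaches the threshold.

    Rejected sub-apertures are zeroed (an assumed convention) so the input
    to a reconstructor keeps a fixed size. Returns (masked_slopes, mask).
    """
    mask = [1.0 if s >= threshold else 0.0 for s in snr]
    masked = [(sx * m, sy * m) for (sx, sy), m in zip(slopes, mask)]
    return masked, mask

def random_dropout(slopes, keep_prob, rng):
    """Training-time stand-in for the selection step.

    Each sub-aperture is kept with probability keep_prob, so a network
    sees many different patterns of missing slope measurements.
    """
    mask = [1.0 if rng.random() < keep_prob else 0.0 for _ in slopes]
    masked = [(sx * m, sy * m) for (sx, sy), m in zip(slopes, mask)]
    return masked, mask

# Hypothetical (x, y) slopes and spot SNR values for four sub-apertures.
slopes = [(0.12, -0.05), (0.30, 0.11), (-0.08, 0.02), (0.01, 0.40)]
snr = [12.0, 2.5, 9.1, 1.2]

masked, mask = select_by_snr(slopes, snr, threshold=5.0)
train_input, train_mask = random_dropout(slopes, keep_prob=0.5, rng=random.Random(0))
```

At inference time the mask would come from the measured spot quality, while during training the random mask plays the role of the drop-out layer.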

Data-driven Image Restoration with Option-driven Learning for Big and Small Astronomical Image Datasets

Nov 07, 2020

Abstract: Image restoration methods are commonly used to improve the quality of astronomical images. In recent years, the development of deep neural networks and the growing number of astronomical images have given rise to many data-driven image restoration methods. However, most of these methods are supervised learning algorithms, which require paired images, either from real observations or from simulated data, as the training set. For some applications, it is hard to obtain enough paired images from real observations, and simulated images are quite different from real observed ones. In this paper, we propose a new data-driven image restoration method based on generative adversarial networks with option-driven learning. Our method uses several high resolution images as references and applies different learning strategies depending on the number of reference images. For sky surveys with variable observation conditions, our method can obtain very stable image restoration results, regardless of the number of reference images.

PSF-NET: A Non-parametric Point Spread Function Model for Ground Based Optical Telescopes

Mar 02, 2020

Abstract: Ground based optical telescopes are seriously affected by aberrations induced by atmospheric turbulence. Understanding the properties of these aberrations is important both for instrument design and for the development of image restoration methods. Because the point spread function (PSF) reflects the performance of the whole optical system, it is appropriate to use it to describe atmospheric turbulence induced aberrations. Assuming that point spread functions induced by atmospheric turbulence with the same profile belong to the same manifold space, we propose a non-parametric point spread function model, the PSF-NET. The PSF-NET has a cycle convolutional neural network structure and is a statistical representation of the manifold space of PSFs induced by atmospheric turbulence with the same profile. Testing the PSF-NET with simulated and real observation data, we find that a well trained PSF-NET can restore any short exposure image blurred by atmospheric turbulence with the same profile. We further use the impulse response of the PSF-NET, which can be viewed as the statistical mean PSF, to analyze the interpretability of the PSF-NET. We find that variations of statistical mean PSFs are caused by variations of the atmospheric turbulence profile: as the difference between atmospheric turbulence profiles increases, the difference between statistical mean PSFs also increases. The PSF-NET proposed in this paper provides a new way to analyze atmospheric turbulence induced aberrations, which would benefit the development of new observation methods for ground based optical telescopes.

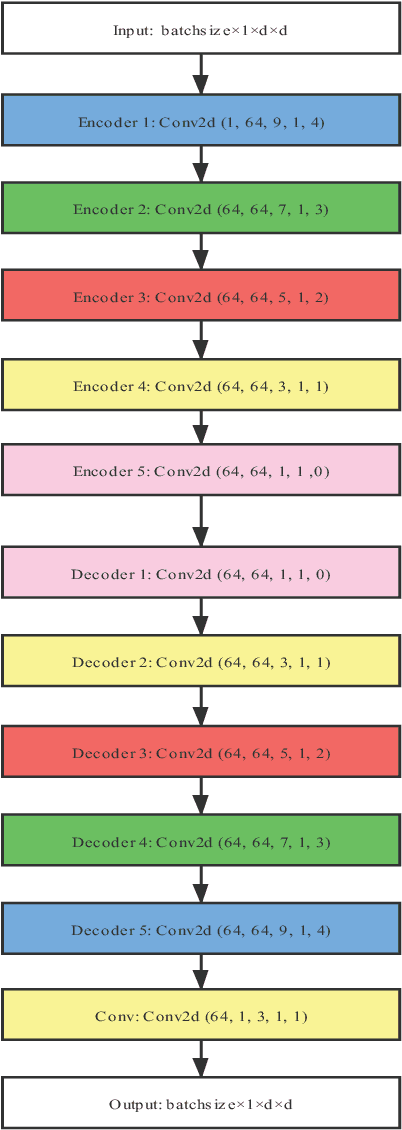

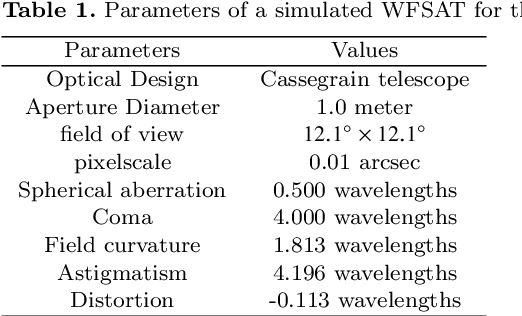

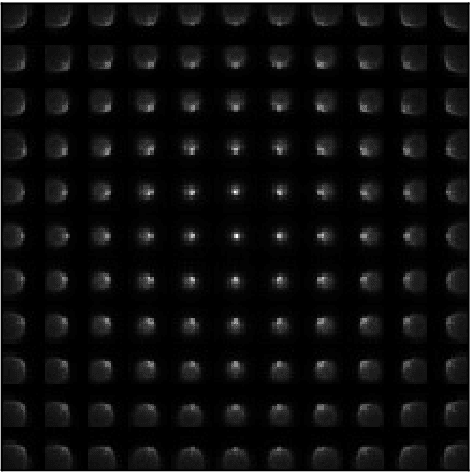

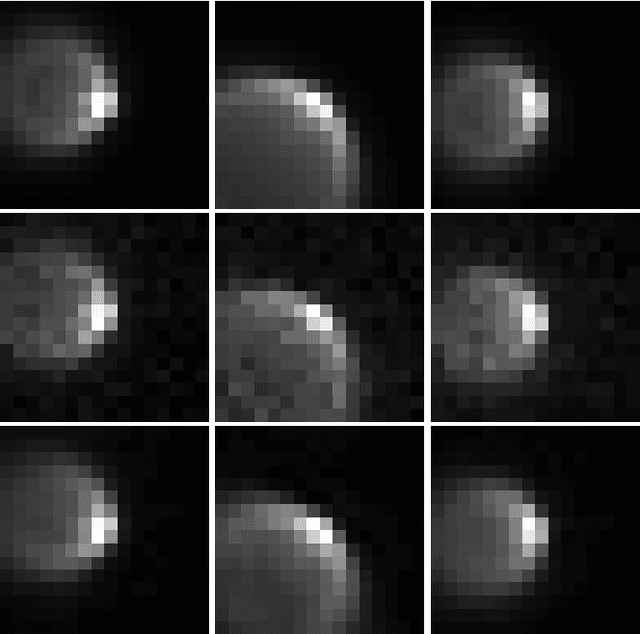

Point Spread Function Modelling for Wide Field Small Aperture Telescopes with a Denoising Autoencoder

Jan 31, 2020

Abstract: The point spread function reflects the state of an optical telescope and is important for the design of data post-processing methods. For wide field small aperture telescopes, the point spread function is hard to model, because it is affected by many different effects and has strong temporal and spatial variations. In this paper, we propose to use the denoising autoencoder, a type of deep neural network, to model the point spread function of wide field small aperture telescopes. The denoising autoencoder is a purely data-based point spread function modelling method, which uses calibration data from real observations or numerically simulated results as point spread function templates. According to real observation conditions, different levels of random noise or aberrations are added to the point spread function templates, turning them into realizations of the point spread function, i.e., simulated star images. We then train the denoising autoencoder with the realizations and templates of the point spread function. After training, the denoising autoencoder learns the manifold space of the point spread function and can map any star image obtained by wide field small aperture telescopes directly to its point spread function, which could be used to design data post-processing or optical system alignment methods.
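The construction of training data described in this abstract (noisy realizations as inputs, clean templates as targets) can be sketched as follows. This is only an illustration under stated assumptions: the helper names `make_realization` and `make_training_pairs` are hypothetical, and additive Gaussian noise stands in for the "random noise or aberrations" mentioned in the abstract, whose actual noise model is not given here.

```python
import random

def make_realization(template, noise_sigma, rng):
    """Return a simulated star image: the PSF template plus Gaussian pixel noise.

    Additive Gaussian noise is an assumption made for this sketch.
    """
    return [[pix + rng.gauss(0.0, noise_sigma) for pix in row] for row in template]

def make_training_pairs(templates, noise_levels, rng):
    """Build (input, target) pairs for a denoising autoencoder.

    The input is a noisy realization and the target is the clean template,
    so a network trained on these pairs learns to map star images to PSFs.
    """
    pairs = []
    for template in templates:
        for sigma in noise_levels:
            pairs.append((make_realization(template, sigma, rng), template))
    return pairs

# Hypothetical 3x3 PSF templates, each normalized to unit sum.
templates = [
    [[0.0, 0.1, 0.0], [0.1, 0.6, 0.1], [0.0, 0.1, 0.0]],
    [[0.05, 0.1, 0.05], [0.1, 0.4, 0.1], [0.05, 0.1, 0.05]],
]
pairs = make_training_pairs(templates, noise_levels=[0.01, 0.05, 0.1], rng=random.Random(42))
```

Each template contributes one pair per noise level, which is how a small set of calibration PSFs can be expanded into a larger training set.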

Solar Image Restoration with the Cycle-GAN Based on Multi-Fractal Properties of Texture Features

Aug 11, 2019

Abstract: Texture is one of the most obvious characteristics of solar images and is normally described by texture features. Because textures in solar images of the same wavelength are similar, we assume that the texture features of solar images are multi-fractal. Based on this assumption, we propose a purely data-based image restoration method: with several high resolution solar images as references, we use the Cycle-Consistent Adversarial Network to restore blurred images of the same steady physical process, at the same wavelength and obtained by the same telescope. We test our method with simulated and real observation data and find that it can improve the spatial resolution of solar images without losing any frames. Because our method does not need a paired training set or additional instruments, it can be used as a post-processing method for solar images obtained by either seeing-limited telescopes or telescopes with ground layer adaptive optics systems.

Perception Evaluation -- A new solar image quality metric based on the multi-fractal property of texture features

May 24, 2019

Abstract: Next-generation ground-based solar observations require good image quality metrics for post-facto processing techniques. Based on the assumption that texture features in solar images are multi-fractal and can be extracted by a trained deep neural network as feature maps, a new reduced-reference objective image quality metric, the perception evaluation, is proposed. The perception evaluation is defined as the cosine distance between the Gram matrix of feature maps extracted from a high resolution reference image and that of feature maps extracted from blurred images. We evaluate the performance of the perception evaluation with simulated and real observation images. The results show that, with a high resolution image as reference, the perception evaluation can give a robust estimate of image quality for solar images of different scenes.
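The definition in this abstract (cosine distance between Gram matrices of feature maps) can be written out as a short sketch. Several details are assumptions, since the abstract does not specify them: feature maps are taken as already extracted and flattened to one list per channel, the Gram matrix is left unnormalized, and cosine distance is taken as one minus cosine similarity of the flattened matrices. The function names are hypothetical, and non-zero feature maps are assumed.

```python
import math

def gram_matrix(feature_maps):
    """Gram matrix of C feature maps, each given as a flat list of activations.

    Entry (i, j) is the inner product of channel i with channel j.
    """
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in feature_maps]
            for fi in feature_maps]

def cosine_distance(g_ref, g_test):
    """One minus the cosine similarity of two matrices, flattened to vectors."""
    u = [v for row in g_ref for v in row]
    w = [v for row in g_test for v in row]
    dot = sum(a * b for a, b in zip(u, w))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in w))
    return 1.0 - dot / norm

def perception_evaluation(ref_features, test_features):
    """Sketch of the metric: distance between reference and test Gram matrices."""
    return cosine_distance(gram_matrix(ref_features), gram_matrix(test_features))

# Hypothetical feature maps: two channels with three activations each.
ref = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
blurred = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]

score_same = perception_evaluation(ref, ref)
score_blurred = perception_evaluation(ref, blurred)
```

Under these conventions a value near zero means the texture statistics of the test image match those of the reference, and larger values indicate a larger mismatch.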

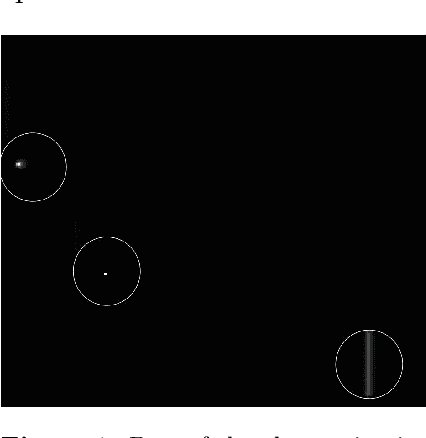

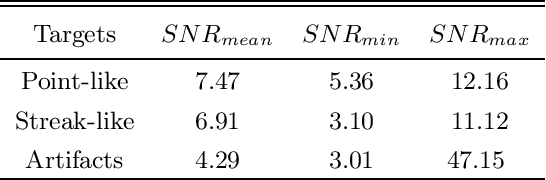

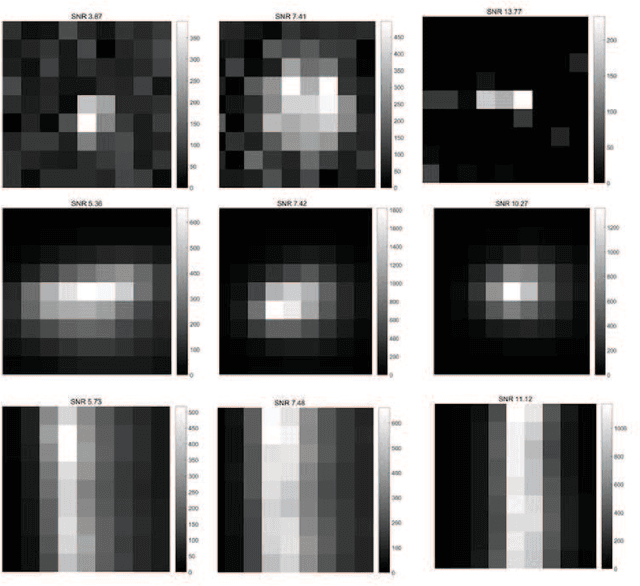

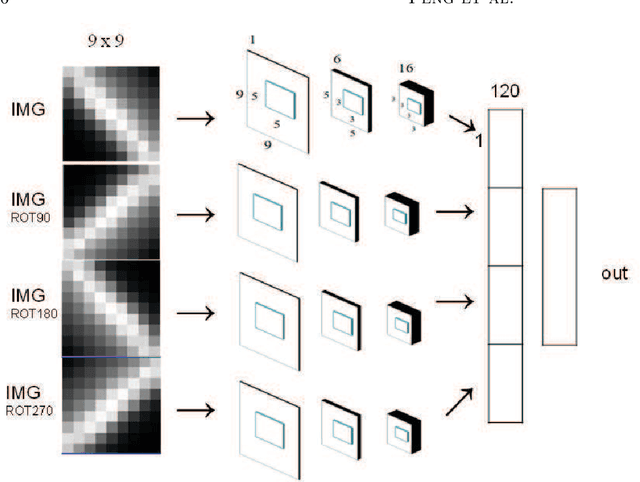

Optical Transient Object Classification in Wide Field Small Aperture Telescopes with Neural Networks

Apr 29, 2019

Abstract: Wide field small aperture telescopes are workhorses for fast sky surveys, and transient discovery is one of their main tasks. Classifying candidate transient images into real sources and artifacts with high accuracy is an important step in transient discovery. In this paper, we propose two transient classification methods based on neural networks. The first method uses a convolutional neural network without pooling layers to classify transient images with a low sampling rate. The second method treats transient images as one dimensional signals and is based on recurrent neural networks with long short term memory and a leaky ReLU activation function in each detection layer. Testing with real observation data, we find that although both methods achieve more than 94% classification accuracy, they have different classification properties for different targets. Based on this result, we propose to use ensemble learning to further increase the classification accuracy to more than 97%.
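The abstract does not say which ensemble scheme is used to combine the two classifiers. As one possible illustration, the sketch below applies weighted soft voting to the per-candidate probabilities of two models. The function name `soft_vote`, the equal weights, the 0.5 decision threshold, and the example probabilities are all assumptions, not details from the paper.

```python
def soft_vote(prob_cnn, prob_rnn, weight_cnn=0.5, threshold=0.5):
    """Combine two classifiers by a weighted average of their probabilities.

    prob_cnn and prob_rnn hold, for each candidate, the probability that it
    is a real source. Returns (combined_probabilities, labels), where label 1
    means "real source" and label 0 means "artifact".
    """
    combined = [weight_cnn * pc + (1.0 - weight_cnn) * pr
                for pc, pr in zip(prob_cnn, prob_rnn)]
    labels = [1 if p >= threshold else 0 for p in combined]
    return combined, labels

# Hypothetical outputs of the two networks for three candidates.
prob_cnn = [0.9, 0.4, 0.2]
prob_rnn = [0.8, 0.7, 0.1]
combined, labels = soft_vote(prob_cnn, prob_rnn)
```

In this example the second candidate is rejected by one model alone but accepted after voting, which is the kind of complementary behaviour an ensemble is meant to exploit.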
