Dingwen Li

DistillBEV: Boosting Multi-Camera 3D Object Detection with Cross-Modal Knowledge Distillation

Sep 26, 2023

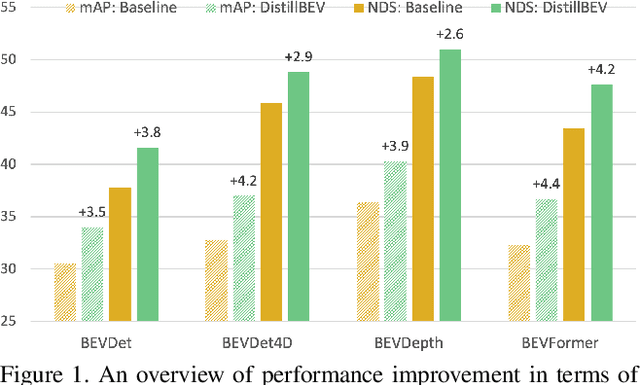

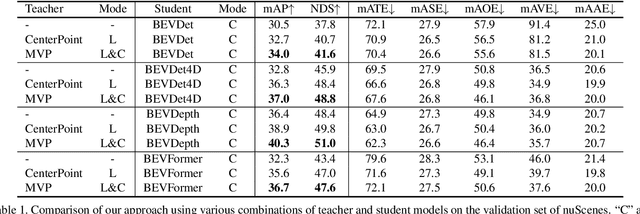

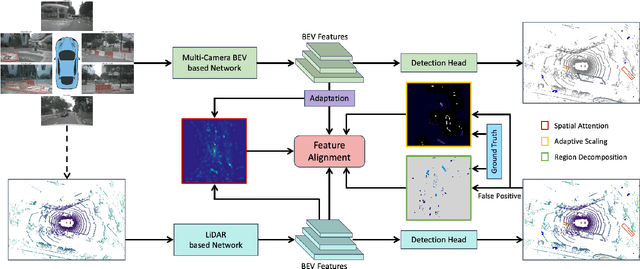

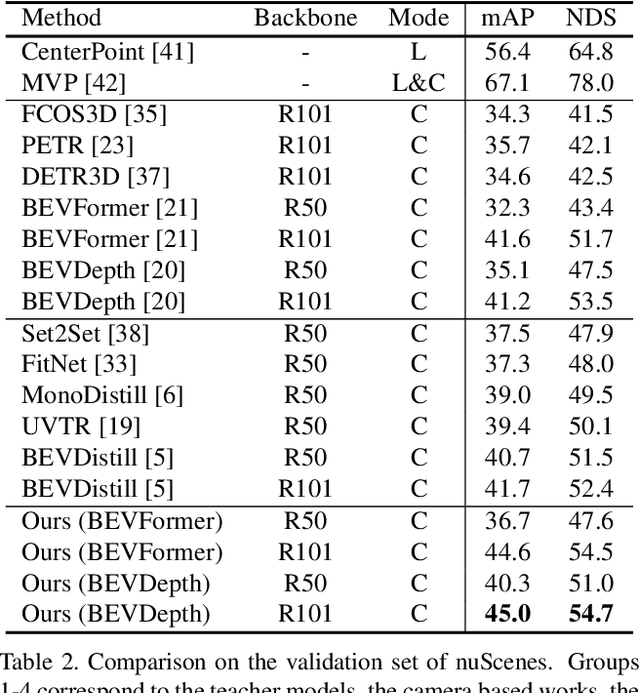

Abstract: 3D perception based on representations learned from multi-camera bird's-eye-view (BEV) is trending, as cameras are cost-effective for mass production in the autonomous driving industry. However, there exists a distinct performance gap between multi-camera BEV and LiDAR-based 3D object detection. One key reason is that LiDAR captures accurate depth and other geometry measurements, while it is notoriously challenging to infer such 3D information from image input alone. In this work, we propose to boost the representation learning of a multi-camera BEV-based student detector by training it to imitate the features of a well-trained LiDAR-based teacher detector. We propose an effective balancing strategy that forces the student to focus on learning the crucial features from the teacher, and we generalize knowledge transfer to multi-scale layers with temporal fusion. We conduct extensive evaluations on multiple representative multi-camera BEV models. Experiments reveal that our approach yields significant improvements over the student models, leading to state-of-the-art performance on the popular nuScenes benchmark.
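The feature-imitation idea in the abstract can be illustrated with a small sketch. The abstract does not specify the balancing strategy, so the version below is an assumption for illustration only: a mean squared error between student and teacher BEV feature maps in which foreground cells (those covered by an object) are weighted more heavily than background cells. The function name `weighted_imitation_loss`, the mask format, and the default weights are all hypothetical and are not taken from the paper.

```python
def weighted_imitation_loss(student, teacher, fg_mask, fg_weight=1.0, bg_weight=0.1):
    """Weighted mean squared error between two BEV feature maps.

    student, teacher: nested lists shaped H x W x C (same shape).
    fg_mask: nested list shaped H x W, truthy where a cell is foreground.
    fg_weight, bg_weight: hypothetical per-cell weights (illustrative values).
    """
    total = 0.0  # weighted sum of squared feature differences
    norm = 0.0   # weighted count of feature entries, used to normalize
    for s_row, t_row, m_row in zip(student, teacher, fg_mask):
        for s_cell, t_cell, is_fg in zip(s_row, t_row, m_row):
            w = fg_weight if is_fg else bg_weight
            total += w * sum((s - t) ** 2 for s, t in zip(s_cell, t_cell))
            norm += w * len(s_cell)
    return total / norm if norm else 0.0


# Toy 1 x 2 map with 2 channels: the left cell is foreground, the right is background.
teacher = [[[0.0, 0.0], [0.0, 0.0]]]
mask = [[1, 0]]
fg_error = weighted_imitation_loss([[[1.0, 0.0], [0.0, 0.0]]], teacher, mask)
bg_error = weighted_imitation_loss([[[0.0, 0.0], [1.0, 0.0]]], teacher, mask)
print(fg_error > bg_error)  # the same mismatch costs more on a foreground cell
```

In this sketch the same feature mismatch is penalized more when it falls on a foreground cell, which is one simple way to make a student concentrate on object regions rather than the much larger background area.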

Self-explaining Hierarchical Model for Intraoperative Time Series

Oct 10, 2022

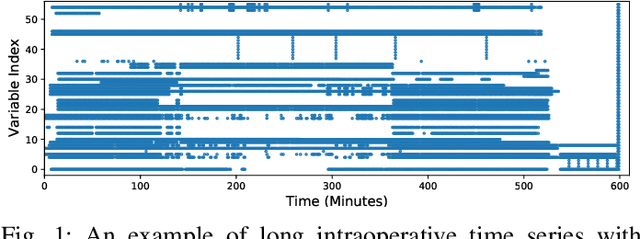

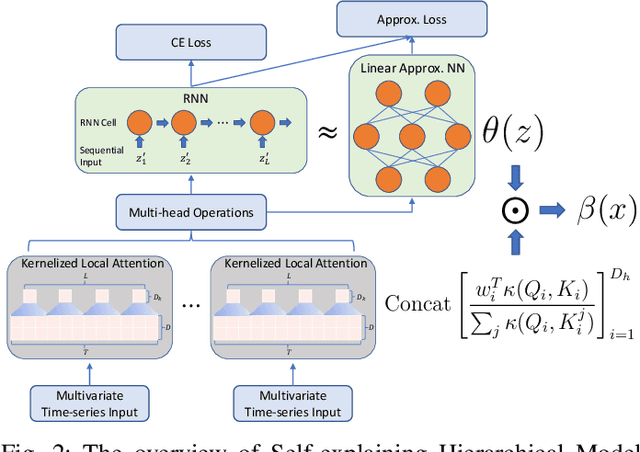

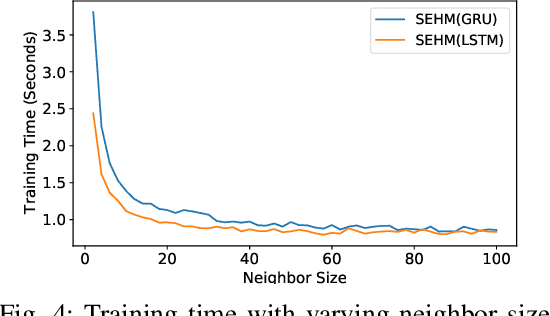

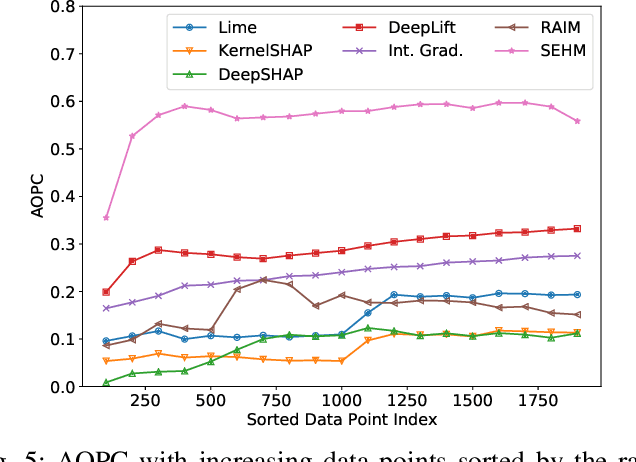

Abstract: Major postoperative complications are devastating to surgical patients. Some of these complications are potentially preventable via early predictions based on intraoperative data. However, intraoperative data comprise long and fine-grained multivariate time series, which hinders the effective learning of accurate models. The large gaps associated with clinical events and protocols are usually ignored. Moreover, deep models generally lack transparency, even though interpretability is crucial to assist clinicians in planning for and delivering postoperative care and timely interventions. To this end, we propose a hierarchical model that combines the strengths of attention and recurrent models for intraoperative time series. We further develop an explanation module for the hierarchical model that interprets the predictions by providing fine-grained contributions of the intraoperative data. Experiments on a large dataset of 111,888 surgeries with multiple outcomes and an external high-resolution ICU dataset show that our model can achieve strong predictive performance (i.e., high accuracy) and offer robust interpretations (i.e., high transparency) for predicted outcomes based on intraoperative time series.

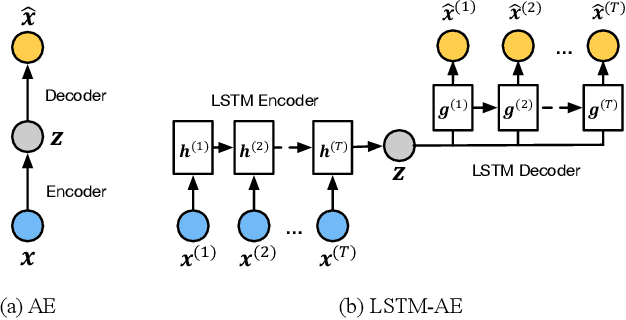

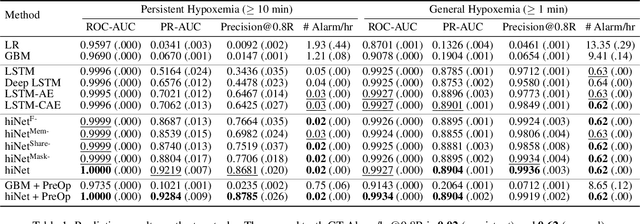

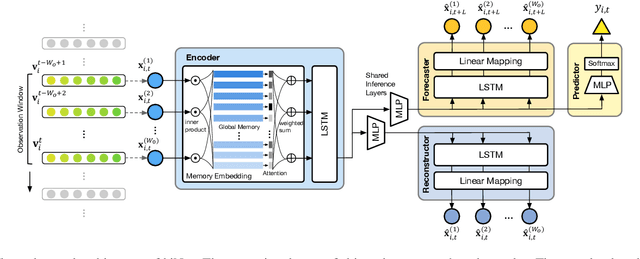

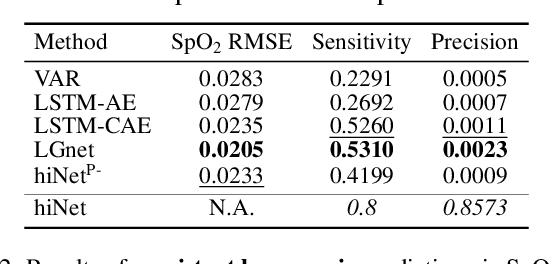

Predicting Intraoperative Hypoxemia with Joint Sequence Autoencoder Networks

May 19, 2021

Abstract: We present an end-to-end model that uses streaming physiological time series to accurately predict near-term risk of hypoxemia, a rare but life-threatening condition known to cause serious patient harm during surgery. Our proposed model performs inference on both hypoxemia outcomes and future input sequences. This is enabled by a joint sequence autoencoder that simultaneously optimizes a discriminative decoder for label prediction and two auxiliary decoders trained for data reconstruction and forecasting, which together learn future-indicative latent representations. All decoders share a memory-based encoder that helps capture the global dynamics of patient data. In a large surgical cohort of 73,536 surgeries at a major academic medical center, our model outperforms all baselines and gives a large performance gain over the state-of-the-art hypoxemia prediction system. With a high sensitivity cutoff at 80%, it presents 99.36% precision in predicting hypoxemia and 86.81% precision in predicting the much more severe and rare hypoxemic condition, persistent hypoxemia. With an exceptionally low rate of false alarms, our proposed model is promising for improving clinical decision making and easing the burden on the health system.
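The "precision at an 80% sensitivity cutoff" figure quoted in the abstract can be made concrete with a short sketch. The function below lowers the alarm threshold until at least the target fraction of true positives is flagged, then reports the precision at that threshold. The function name `precision_at_sensitivity` is hypothetical, the scores and labels are invented toy values rather than data from the paper, and the paper's exact evaluation protocol may differ.

```python
def precision_at_sensitivity(scores, labels, target_sensitivity=0.8):
    """Return (threshold, precision) at the highest threshold whose
    sensitivity (recall) reaches target_sensitivity.

    scores: predicted risk per case; labels: 1 for an event, 0 otherwise.
    """
    if not 0.0 < target_sensitivity <= 1.0:
        raise ValueError("target_sensitivity must be in (0, 1]")
    positives = sum(labels)
    if positives == 0:
        raise ValueError("need at least one positive label")

    # Walk the cases from highest to lowest score, lowering the threshold.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Cases with tied scores are flagged together at the same threshold.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        if tp / positives >= target_sensitivity:
            return threshold, tp / (tp + fp)
    raise RuntimeError("unreachable: sensitivity is 1.0 once all cases are flagged")


# Toy data (invented for illustration): 5 events among 10 cases.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
threshold, precision = precision_at_sensitivity(scores, labels)
print(threshold, precision)
```

On this toy input, flagging every case scored 0.5 or higher catches 4 of the 5 events (80% sensitivity) with 1 false alarm, so precision is 4/5. A high precision at a fixed sensitivity means few false alarms per true alarm, which is the property the abstract highlights.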
