Dean Zadok

Towards Predicting Fine Finger Motions from Ultrasound Images via Kinematic Representation

Feb 10, 2022

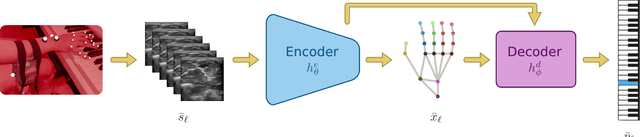

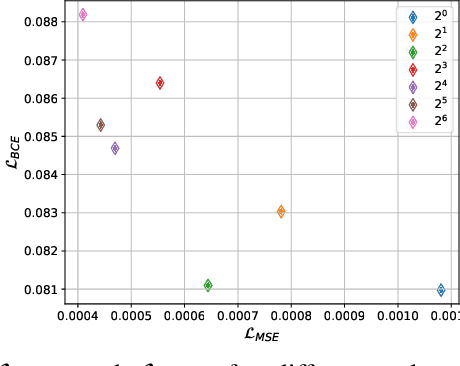

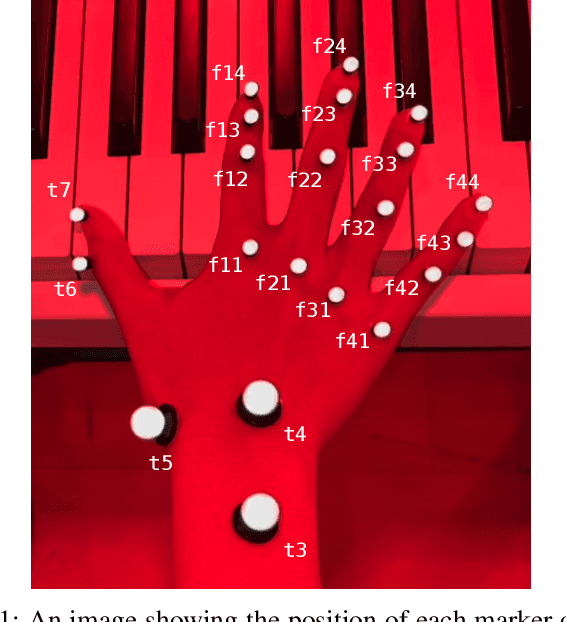

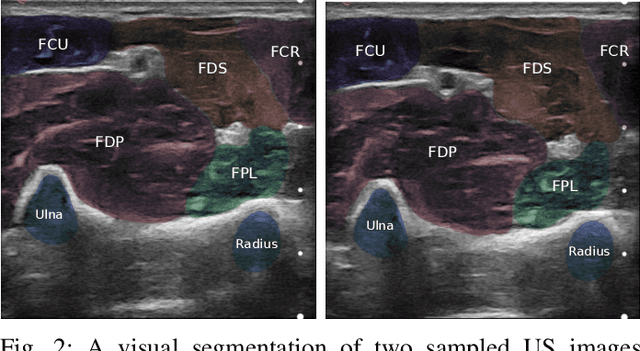

Abstract: A central challenge in building robotic prostheses is the creation of a sensor-based system able to read physiological signals from the lower limb and instruct a robotic hand to perform various tasks. Existing systems typically perform discrete gestures, such as pointing or grasping, by employing electromyography (EMG) or ultrasound (US) technologies to analyze the state of the muscles. In this work, we study the inference problem of identifying the activation of specific fingers from a sequence of US images when performing dexterous tasks such as keyboard typing or playing the piano. While finger gestures have been estimated in the past by detecting prominent gestures, we are interested in classification in the context of fine motions that evolve over time. We consider this task an important step towards higher adoption rates of robotic prostheses among arm amputees, as it has the potential to dramatically increase functionality in performing daily tasks. Our key observation, motivating this work, is that modeling the hand as a robotic manipulator allows us to encode an intermediate representation wherein US images are mapped to configurations of that manipulator. Given a sequence of such learned configurations, coupled with a neural-network architecture that exploits temporal coherence, we are able to infer fine finger motions. We evaluated our method by collecting data from a group of subjects and demonstrating how our framework can be used to replay music played or text typed. To the best of our knowledge, this is the first study demonstrating these downstream tasks within an end-to-end system.

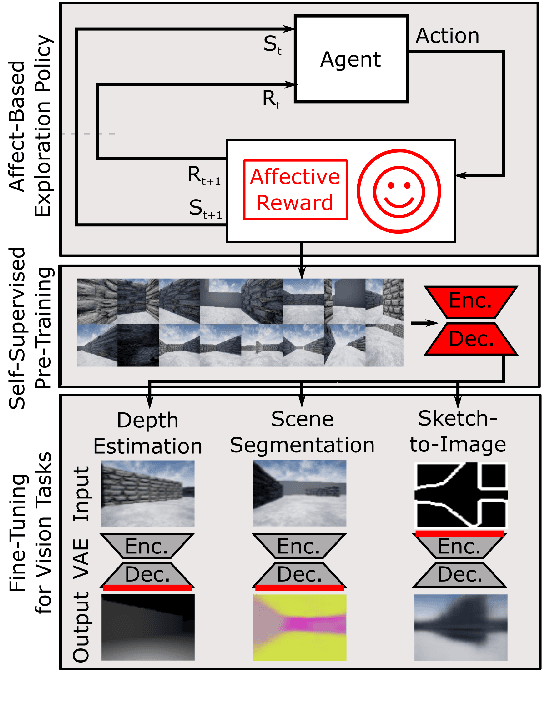

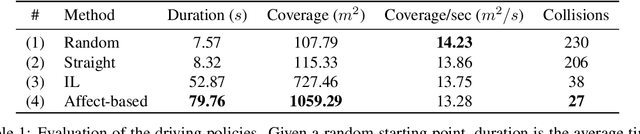

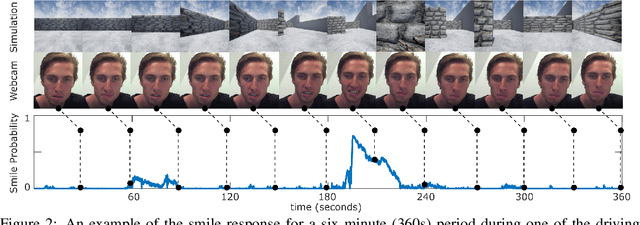

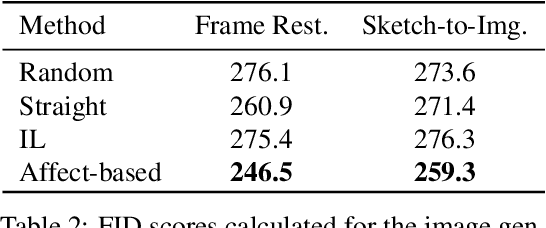

Affect-based Intrinsic Rewards for Learning General Representations

Jan 24, 2020

Abstract: Positive affect has been linked to increased interest, curiosity and satisfaction in human learning. In reinforcement learning, extrinsic rewards are often sparse and difficult to define; intrinsically motivated learning can help address these challenges. We argue that positive affect is an important intrinsic reward that effectively helps drive exploration that is useful in gathering experiences critical to learning general representations. We present a novel approach leveraging a task-independent intrinsic reward function trained on spontaneous smile behavior that captures positive affect. To evaluate our approach, we trained several downstream computer vision tasks on data collected with our policy and several baseline methods. We show that the policy based on intrinsic affective rewards successfully increases the duration of episodes and the area explored, and reduces collisions. The impact is increased speed of learning for several downstream computer vision tasks.

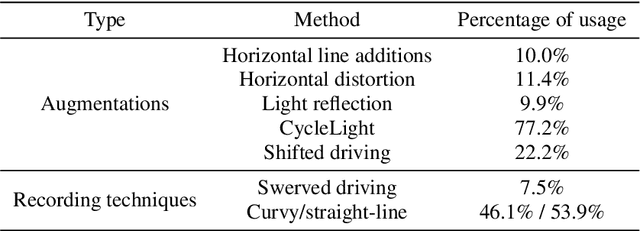

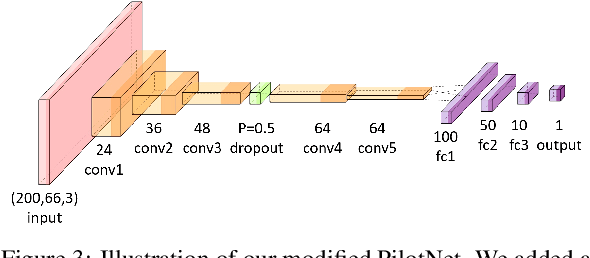

Explorations and Lessons Learned in Building an Autonomous Formula SAE Car from Simulations

May 15, 2019

Abstract: This paper describes the explorations and lessons learned during the process of developing a self-driving algorithm in simulation, followed by deployment on a real car. We specifically concentrate on the Formula Student Driverless competition. In such competitions, a formula race car, designed and built by students, is challenged to drive through previously unseen tracks that are marked by traffic cones. We explore and highlight the challenges associated with training a deep neural network that uses a single camera as input for inferring car steering angles in real time. The paper explores in depth the creation of the simulation, the use of simulation to train and validate the software stack, and finally the engineering challenges associated with deploying the system in the real world.

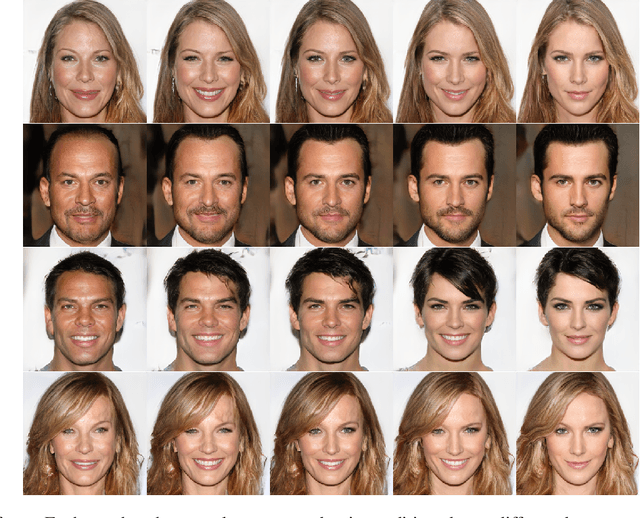

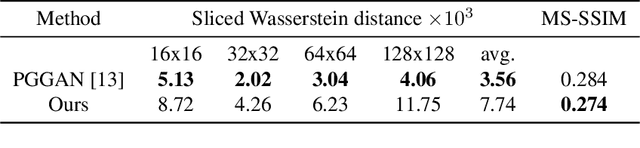

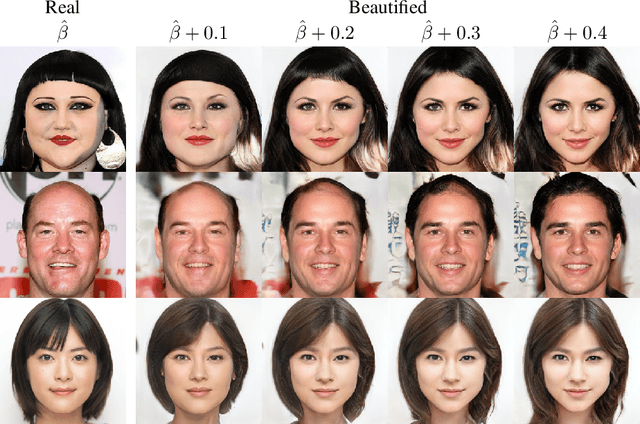

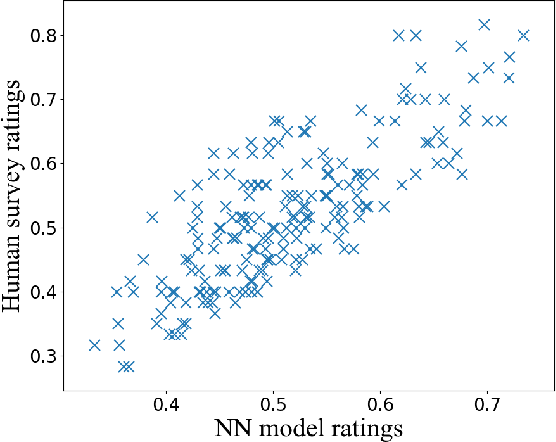

Beholder-GAN: Generation and Beautification of Facial Images with Conditioning on Their Beauty Level

Feb 25, 2019

Abstract: Beauty is in the eye of the beholder. This maxim, emphasizing the subjectivity of the perception of beauty, has enjoyed a wide consensus since ancient times. In the digital era, data-driven methods have been shown to be able to predict human-assigned beauty scores for facial images. In this work, we augment this ability and train a generative model that generates faces conditioned on a requested beauty score. In addition, we show how this trained generator can be used to beautify an input face image. By doing so, we achieve an unsupervised beautification model, in the sense that it relies on no ground truth target images.
