Nir Diamant

Delta-GAN-Encoder: Encoding Semantic Changes for Explicit Image Editing, using Few Synthetic Samples

Nov 17, 2021

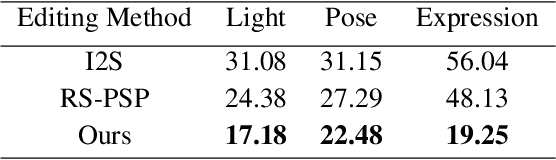

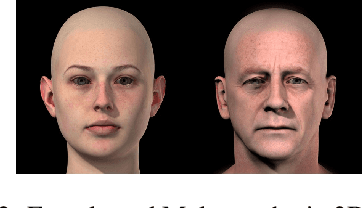

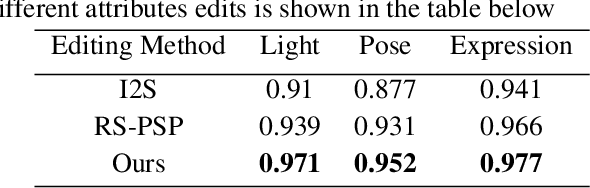

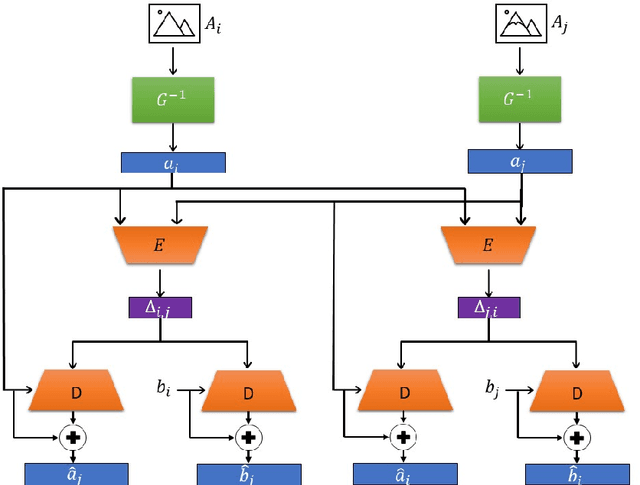

Abstract: Understanding and controlling the latent space of generative models is a complex task. In this paper, we propose a novel method for learning to control any desired attribute in a pre-trained GAN's latent space, for the purpose of editing synthesized and real-world data samples accordingly. We perform Sim2Real learning, relying on minimal samples to achieve an unlimited number of continuous, precise edits. We present an Autoencoder-based model that learns to encode the semantics of changes between images as a basis for editing new samples later on, achieving precise desired results (an example is shown in Fig. 1). While previous editing methods rely on a known structure of latent spaces (e.g., linearity of some semantics in StyleGAN), our method inherently does not require any structural constraints. We demonstrate our method in the domain of facial imagery: editing different expressions, poses, and lighting attributes, achieving state-of-the-art results.

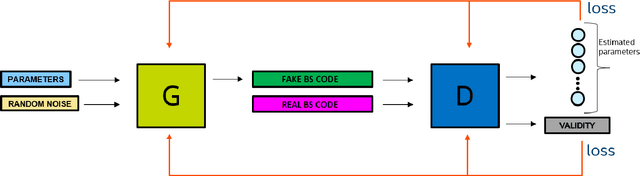

SimuGAN: Unsupervised forward modeling and optimal design of a LIDAR Camera

Dec 16, 2020

Abstract: An energy-saving LIDAR camera for short distances estimates an object's distance using temporally intensity-coded laser light pulses and calculates the maximum correlation with the back-scattered pulse. At low power, however, the back-scattered pulse is noisy and unstable, which leads to inaccurate and unreliable depth estimation. To address this problem, we use GANs (Generative Adversarial Networks), which are two neural networks that can learn complicated class distributions through an adversarial process. We learn the LIDAR camera's hidden properties and behavior, creating a novel, fully unsupervised forward model that simulates the camera. Then, we use the model's differentiability to explore the camera parameter space and optimize those parameters in terms of depth, accuracy, and stability. To achieve this goal, we also propose a new custom loss function designed for the back-scattered code distribution's weaknesses and its circular behavior. The results are demonstrated on both synthetic and real data.
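As a rough illustration of the correlation step the abstract mentions, the sketch below estimates the delay of a coded pulse by finding the circular shift that maximizes its correlation with the received signal. It is not the paper's model or loss: the function names `estimate_shift` and `circular_error`, the random ±1 code, and the code length are all assumptions made for this example, and `circular_error` is only one simple way a wrap-around distance between shifts could be measured.

```python
import numpy as np


def estimate_shift(code, received):
    """Return the circular shift k that maximizes sum_n received[n] * code[n - k]."""
    # Circular cross-correlation computed with the FFT (cross-correlation theorem).
    spectrum = np.fft.fft(received) * np.conj(np.fft.fft(code))
    corr = np.fft.ifft(spectrum).real
    return int(np.argmax(corr))


def circular_error(pred, target, period):
    """Distance between two shifts on a circle of length `period` (wraps around)."""
    diff = abs(pred - target) % period
    return min(diff, period - diff)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    code = rng.choice([-1.0, 1.0], size=64)        # hypothetical intensity code
    true_shift = 17                                 # hypothetical delay, in samples
    received = np.roll(code, true_shift)            # delayed copy of the code
    received = received + 0.1 * rng.standard_normal(64)  # mild additive noise
    shift = estimate_shift(code, received)
    print("estimated shift:", shift)
    print("circular error:", circular_error(shift, true_shift, len(code)))
```

The wrap-around distance matters because a shift of 63 and a shift of 1 are only two samples apart on a 64-sample code, even though their plain difference is 62.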

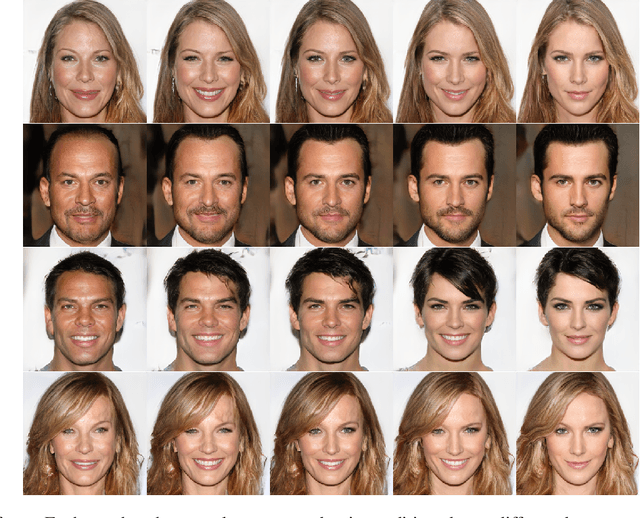

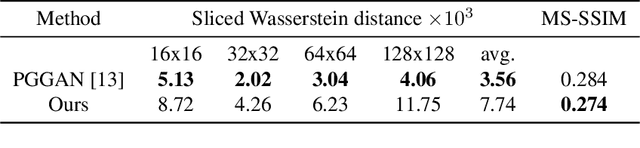

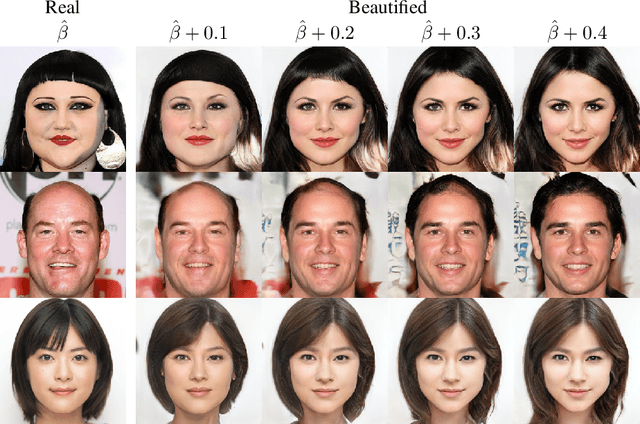

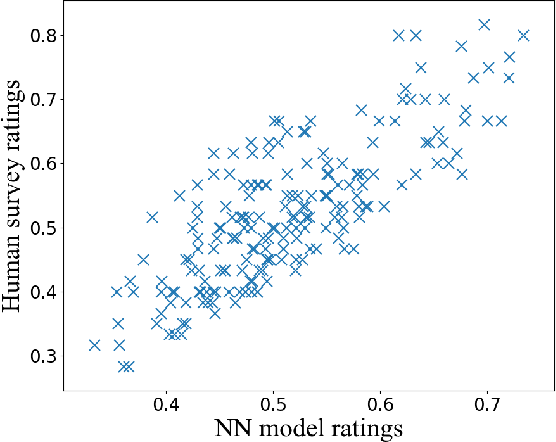

Beholder-GAN: Generation and Beautification of Facial Images with Conditioning on Their Beauty Level

Feb 25, 2019

Abstract: Beauty is in the eye of the beholder. This maxim, emphasizing the subjectivity of the perception of beauty, has enjoyed a wide consensus since ancient times. In the digital era, data-driven methods have been shown to be able to predict human-assigned beauty scores for facial images. In this work, we augment this ability and train a generative model that generates faces conditioned on a requested beauty score. In addition, we show how this trained generator can be used to beautify an input face image. By doing so, we achieve an unsupervised beautification model, in the sense that it relies on no ground truth target images.