Tom Hirshberg

Active propulsion noise shaping for multi-rotor aircraft localization

Feb 29, 2024

Abstract:Multi-rotor aerial autonomous vehicles (MAVs) primarily rely on vision for navigation. However, visual localization and odometry techniques suffer from poor performance in low light or direct sunlight, a limited field of view, and vulnerability to occlusions. Acoustic sensing can serve as a complement or even an alternative to vision in many situations, and it has the added benefits of lower system cost and energy footprint, which is especially important for micro aircraft. This paper proposes actively controlling and shaping the aircraft propulsion noise generated by the rotors to benefit localization tasks, rather than treating it as a harmful nuisance. We present a neural network architecture for self-noise-based localization in a known environment. We show that training it simultaneously with learning time-varying rotor phase modulation achieves accurate and robust localization. The proposed methods are evaluated using a computationally affordable simulation of MAV rotor noise in 2D acoustic environments that is fitted to real recordings of rotor pressure fields.

Safety Considerations in Deep Control Policies with Safety Barrier Certificates Under Uncertainty

Mar 02, 2020

Abstract:Recent advances in Deep Machine Learning have shown promise in solving complex perception and control loops via methods such as reinforcement and imitation learning. However, guaranteeing safety for such learned deep policies has been a challenge due to issues such as partial observability and difficulties in characterizing the behavior of the neural networks. While much of the emphasis in safe learning has been placed on training, it is non-trivial to guarantee safety at deployment or test time. This paper extends the use of Safety Barrier Certificates, showing how, under mild assumptions, they can guarantee safety with deep control policies despite uncertainty arising from perception and other latent variables. Specifically, for scenarios where the dynamics are smooth and the uncertainty has finite support, the proposed framework wraps around an existing deep control policy and generates safe actions by dynamically evaluating and modifying the policy from the embedded network. Our framework uses control barrier functions to create spaces of control actions that are safe under uncertainty; when the original actions are found to violate the safety constraint, it uses quadratic programming to minimally modify them so that they lie in the safe set. Representations of the environment are built through Euclidean signed distance fields, which are then used to infer the safety of actions and to guarantee forward invariance. We implement this method in simulation in a drone-racing environment and show that it results in safer actions compared to a baseline that relies only on imitation learning to generate control actions.
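The "minimally modify the original actions" step can be illustrated with a small sketch. This is not the paper's implementation: it assumes single-integrator dynamics (the state derivative equals the control), one circular obstacle, and no uncertainty, and the names `cbf_safety_filter`, `obstacle_center`, `obstacle_radius`, and `alpha` are hypothetical. With a single affine constraint, the quadratic program of finding the closest action to the nominal one that satisfies the barrier condition has a closed-form solution, so no QP solver is needed here.

```python
def cbf_safety_filter(x, u_nominal, obstacle_center, obstacle_radius, alpha=1.0):
    """Return the action closest to u_nominal that satisfies a barrier condition.

    Illustrative assumptions (not from the paper):
      - dynamics are x_dot = u (single integrator),
      - barrier function h(x) = ||x - c||^2 - r^2, safe when h(x) >= 0,
      - barrier condition: grad_h(x) . u + alpha * h(x) >= 0.
    """
    diff = [xi - ci for xi, ci in zip(x, obstacle_center)]
    h = sum(d * d for d in diff) - obstacle_radius ** 2
    grad_h = [2.0 * d for d in diff]

    # Left-hand side of the barrier condition for the nominal action.
    lhs = sum(g * u for g, u in zip(grad_h, u_nominal)) + alpha * h
    if lhs >= 0.0:
        # Nominal action already satisfies the constraint; leave it unchanged.
        return list(u_nominal)

    grad_norm_sq = sum(g * g for g in grad_h)
    if grad_norm_sq == 0.0:
        raise ValueError("gradient of h vanishes; the constraint cannot be enforced")

    # Closed-form solution of: min ||u - u_nominal||^2  s.t.  grad_h . u + alpha*h >= 0
    # (Euclidean projection of u_nominal onto the constraint half-space).
    scale = -lhs / grad_norm_sq
    return [u + scale * g for u, g in zip(u_nominal, grad_h)]


# A policy action heading toward the obstacle is corrected; a safe one passes through.
safe_action = cbf_safety_filter((2.0, 0.0), (-2.0, 0.5), (0.0, 0.0), 1.0)
unchanged_action = cbf_safety_filter((2.0, 0.0), (1.0, 0.0), (0.0, 0.0), 1.0)
```

In a setting closer to the one the abstract describes, the barrier function would presumably come from a signed distance field and the constraint would have to hold over the whole support of the uncertainty, which generally calls for a proper QP solver rather than this one-constraint projection.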

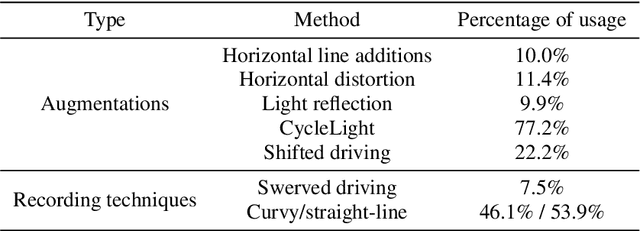

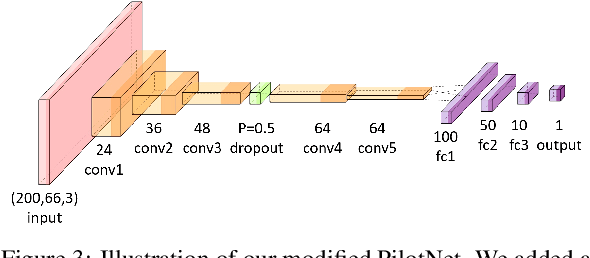

Explorations and Lessons Learned in Building an Autonomous Formula SAE Car from Simulations

May 15, 2019

Abstract:This paper describes the explorations and lessons learned while developing a self-driving algorithm in simulation and then deploying it on a real car. We specifically concentrate on the Formula Student Driverless competition. In such competitions, a formula race car, designed and built by students, is challenged to drive through previously unseen tracks that are marked by traffic cones. We explore and highlight the challenges associated with training a deep neural network that uses a single camera as input for inferring car steering angles in real time. The paper explores in depth the creation of the simulation, the use of simulation to train and validate the software stack, and finally the engineering challenges associated with deploying the system in the real world.
