Alon Wolf

Continuous Control of Editing Models via Adaptive-Origin Guidance

Feb 03, 2026

Abstract:Diffusion-based editing models have emerged as a powerful tool for semantic image and video manipulation. However, existing models lack a mechanism for smoothly controlling the intensity of text-guided edits. In standard text-conditioned generation, Classifier-Free Guidance (CFG) impacts prompt adherence, suggesting it as a potential control for edit intensity in editing models. However, we show that scaling CFG in these models does not produce a smooth transition between the input and the edited result. We attribute this behavior to the unconditional prediction, which serves as the guidance origin and dominates the generation at low guidance scales, while representing an arbitrary manipulation of the input content. To enable continuous control, we introduce Adaptive-Origin Guidance (AdaOr), a method that adjusts this standard guidance origin with an identity-conditioned adaptive origin, using an identity instruction corresponding to the identity manipulation. By interpolating this identity prediction with the standard unconditional prediction according to the edit strength, we ensure a continuous transition from the input to the edited result. We evaluate our method on image and video editing tasks, demonstrating that it provides smoother and more consistent control compared to current slider-based editing approaches. Our method incorporates an identity instruction into the standard training framework, enabling fine-grained control at inference time without per-edit procedure or reliance on specialized datasets.
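The interpolation described in the abstract can be illustrated with a small sketch. This is not the authors' implementation: the abstract gives no formula, so the function names, the linear interpolation of the origin, and the choice to scale the guidance weight by the edit strength are all assumptions made here for illustration. Predictions are plain lists of floats standing in for model outputs.

```python
from typing import List, Sequence


def lerp(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Element-wise linear interpolation: returns a at t=0 and b at t=1."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def cfg(origin: Sequence[float], cond: Sequence[float], scale: float) -> List[float]:
    """Standard classifier-free guidance: start at `origin`, move toward `cond`."""
    return [o + scale * (c - o) for o, c in zip(origin, cond)]


def adaptive_origin_guidance(
    eps_uncond: Sequence[float],    # standard unconditional prediction
    eps_identity: Sequence[float],  # prediction under the identity instruction
    eps_edit: Sequence[float],      # prediction under the edit instruction
    strength: float,                # edit strength in [0, 1]
    guidance_scale: float,          # CFG scale used at full strength
) -> List[float]:
    """Hypothetical sketch of guidance with an interpolated origin."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    # Origin moves from the identity prediction (strength 0)
    # to the unconditional prediction (strength 1).
    origin = lerp(eps_identity, eps_uncond, strength)
    # ASSUMPTION: the effective guidance scale grows linearly with strength,
    # so strength 0 reproduces the identity prediction exactly and
    # strength 1 reduces to standard CFG.
    return cfg(origin, eps_edit, strength * guidance_scale)
```

Under these assumptions, a strength of 0 returns the identity prediction unchanged and a strength of 1 matches standard CFG from the unconditional origin, with intermediate strengths varying continuously between the two.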

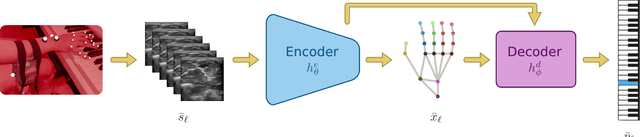

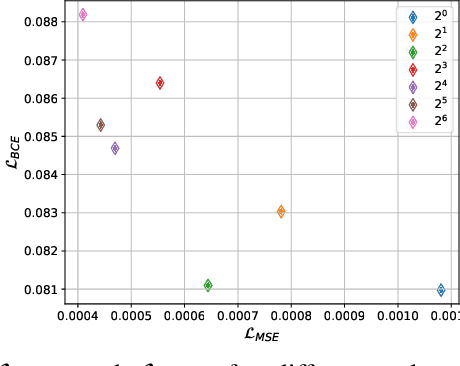

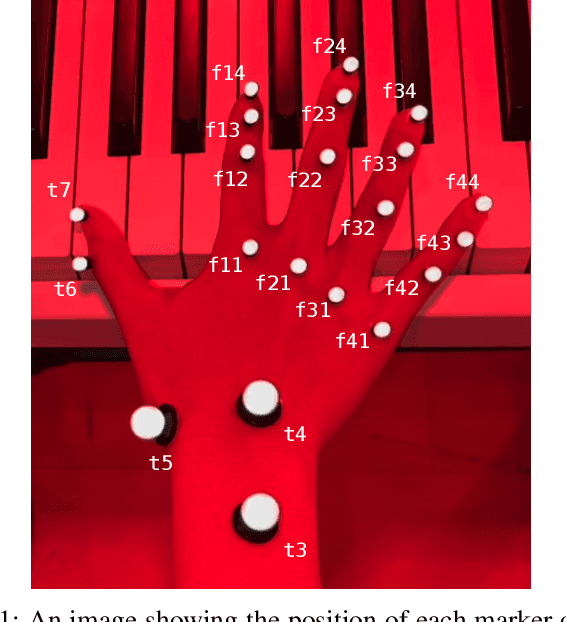

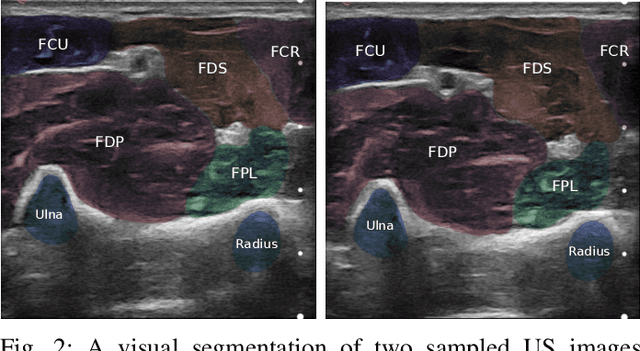

Towards Predicting Fine Finger Motions from Ultrasound Images via Kinematic Representation

Feb 10, 2022

Abstract:A central challenge in building robotic prostheses is the creation of a sensor-based system able to read physiological signals from the lower limb and instruct a robotic hand to perform various tasks. Existing systems typically perform discrete gestures such as pointing or grasping, by employing electromyography (EMG) or ultrasound (US) technologies to analyze the state of the muscles. In this work, we study the inference problem of identifying the activation of specific fingers from a sequence of US images when performing dexterous tasks such as keyboard typing or playing the piano. While estimating finger gestures has been done in the past by detecting prominent gestures, we are interested in classification done in the context of fine motions that evolve over time. We consider this task as an important step towards higher adoption rates of robotic prostheses among arm amputees, as it has the potential to dramatically increase functionality in performing daily tasks. Our key observation, motivating this work, is that modeling the hand as a robotic manipulator allows us to encode an intermediate representation wherein US images are mapped to said configurations. Given a sequence of such learned configurations, coupled with a neural-network architecture that exploits temporal coherence, we are able to infer fine finger motions. We evaluated our method by collecting data from a group of subjects and demonstrating how our framework can be used to replay music played or text typed. To the best of our knowledge, this is the first study demonstrating these downstream tasks within an end-to-end system.

U-mesh: Human Correspondence Matching with Mesh Convolutional Networks

Aug 22, 2021

Abstract:The proliferation of 3D scanning technology has driven a need for methods to interpret geometric data, particularly for human subjects. In this paper we propose an elegant fusion of regression (bottom-up) and generative (top-down) methods to fit a parametric template model to raw scan meshes. Our first major contribution is an intrinsic convolutional mesh U-net architecture that predicts pointwise correspondence to a template surface. Soft-correspondence is formulated as coordinates in a newly-constructed Cartesian space. Modeling correspondence as Euclidean proximity enables efficient optimization, both for network training and for the next step of the algorithm. Our second contribution is a generative optimization algorithm that uses the U-net correspondence predictions to guide a parametric Iterative Closest Point registration. By employing pre-trained human surface parametric models we maximally leverage domain-specific prior knowledge. The pairing of a mesh-convolutional network with generative model fitting enables us to predict correspondence for real human surface scans including occlusions, partialities, and varying genus (e.g. from self-contact). We evaluate the proposed method on the FAUST correspondence challenge where we achieve a 20% (33%) improvement over state-of-the-art methods for inter- (intra-) subject correspondence.
