Davide Ferrari

Collaborative Conversation in Safe Multimodal Human-Robot Collaboration

Sep 11, 2024

Abstract:In the context of Human-Robot Collaboration (HRC), it is crucial that the two actors are able to communicate with each other in a natural and efficient manner. The absence of a communication interface is often a cause of undesired slowdowns. On one hand, this is because unforeseen events may occur, leading to errors. On the other hand, due to the close contact between humans and robots, the speed must be reduced significantly to comply with safety standard ISO/TS 15066. In this paper, we propose a novel architecture that enables operators and robots to communicate efficiently, emulating human-to-human dialogue, while addressing safety concerns. This approach aims to establish a communication framework that not only facilitates collaboration but also reduces undesired speed reduction. Through the use of a predictive simulator, we can anticipate safety-related limitations, ensuring smoother workflows, minimizing risks, and optimizing efficiency. The overall architecture has been validated with a UR10e and compared with a state-of-the-art technique. The results show a significant improvement in user experience, with a corresponding 23% reduction in execution times and a 50% decrease in robot downtime.

Compliant Blind Handover Control for Human-Robot Collaboration

Sep 11, 2024

Abstract:This paper presents a Human-Robot Blind Handover architecture within the context of Human-Robot Collaboration (HRC). The focus lies on a blind handover scenario where the operator is intentionally facing away, focused on a task, and requires an object from the robot. In this context, it is imperative for the robot to autonomously manage the entire handover process. Key considerations include ensuring safety while handing the object to the operator's hand and detecting the proper timing to release the object. The article explores strategies to navigate these challenges, emphasizing the need for a robot to operate safely and independently in facilitating blind handovers, thereby contributing to the advancement of HRC protocols and fostering a natural and efficient collaboration between humans and robots.

The Critical Role of Effective Communication in Human-Robot Collaborative Assembly

Sep 11, 2024

Abstract:In the rapidly evolving landscape of Human-Robot Collaboration (HRC), effective communication between humans and robots is crucial for complex task execution. Traditional request-response systems often lack naturalness and may hinder efficiency. This study emphasizes the importance of adopting human-like communication interactions to enable fluent vocal communication between human operators and robots simulating a collaborative human-robot industrial assembly. We propose a novel approach that employs human-like interactions through natural dialogue, enabling human operators to engage in vocal conversations with robots. Through a comparative experiment, we demonstrate the efficacy of our approach in enhancing task performance and collaboration efficiency. The robot's ability to engage in meaningful vocal conversations enables it to seek clarification, provide status updates, and ask for assistance when required, leading to improved coordination and a smoother workflow. The results indicate that the adoption of human-like conversational interactions positively influences the human-robot collaborative dynamic. Human operators find it easier to convey complex instructions and preferences, resulting in a more productive and satisfying collaboration experience.

Multi-Class Quantum Convolutional Neural Networks

Apr 19, 2024

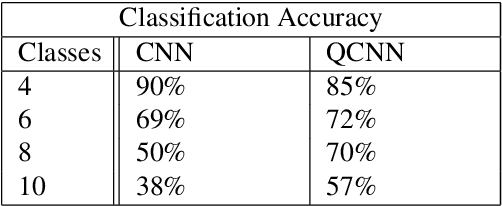

Abstract:Classification is particularly relevant to Information Retrieval, as it is used in various subtasks of the search pipeline. In this work, we propose a quantum convolutional neural network (QCNN) for multi-class classification of classical data. The model is implemented using PennyLane. The optimization process is conducted by minimizing the cross-entropy loss through parameterized quantum circuit optimization. The QCNN is tested on the MNIST dataset with 4, 6, 8 and 10 classes. The results show that with 4 classes, the performance is slightly lower compared to the classical CNN, while with a higher number of classes, the QCNN outperforms the classical neural network.
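As a rough illustration of the loss mentioned in this abstract, the sketch below computes a multi-class cross-entropy from per-class probabilities. It is a plain-Python approximation, not the paper's PennyLane implementation; the function names and the assumption that the circuit output has already been converted to a probability vector over classes are hypothetical.

```python
import math


def cross_entropy(probs, label, eps=1e-12):
    """Cross-entropy for one sample: negative log-probability of the true class.

    `probs` is assumed to be a list of class probabilities summing to 1
    (for example, obtained from measurement statistics); `label` is the
    index of the true class. `eps` guards against log(0).
    """
    return -math.log(max(probs[label], eps))


def mean_cross_entropy(batch_probs, labels):
    """Average the per-sample cross-entropy over a batch."""
    losses = [cross_entropy(p, y) for p, y in zip(batch_probs, labels)]
    return sum(losses) / len(losses)
```

A uniform prediction over 4 classes gives a loss of `math.log(4)` per sample, which is the baseline an untrained 4-class model would be expected to start near.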

Question answering systems for health professionals at the point of care -- a systematic review

Jan 24, 2024

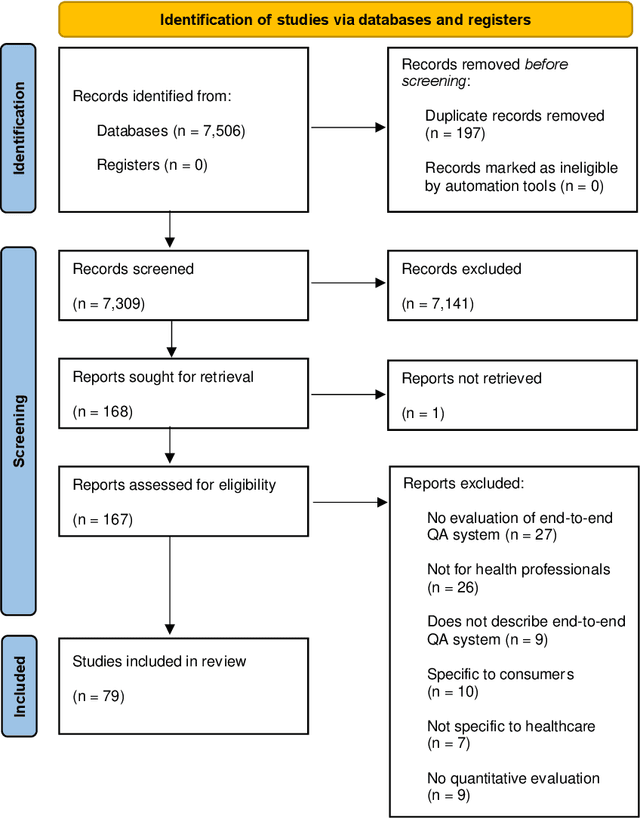

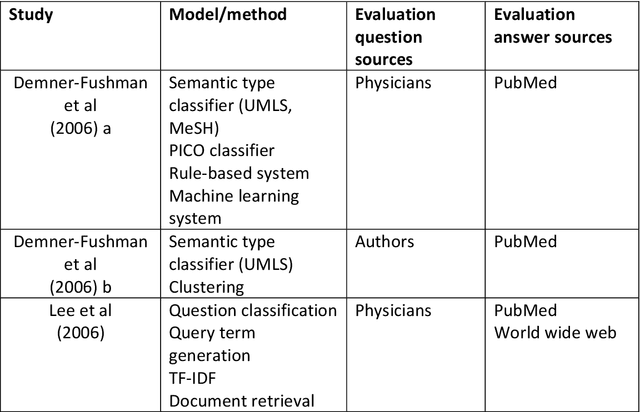

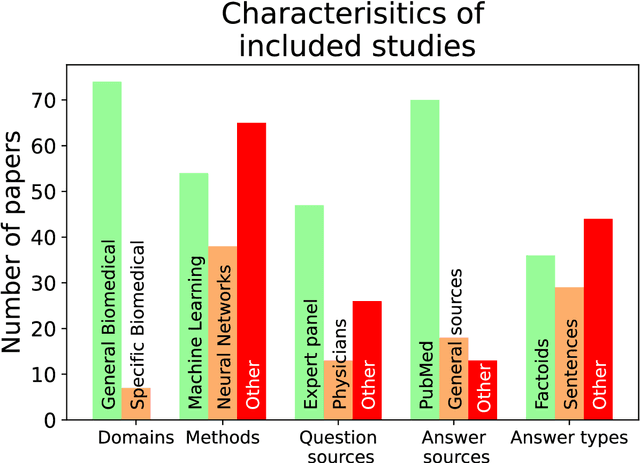

Abstract:Objective: Question answering (QA) systems have the potential to improve the quality of clinical care by providing health professionals with the latest and most relevant evidence. However, QA systems have not been widely adopted. This systematic review aims to characterize current medical QA systems, assess their suitability for healthcare, and identify areas of improvement. Materials and methods: We searched PubMed, IEEE Xplore, ACM Digital Library, ACL Anthology and forward and backward citations on 7th February 2023. We included peer-reviewed journal and conference papers describing the design and evaluation of biomedical QA systems. Two reviewers screened titles, abstracts, and full-text articles. We conducted a narrative synthesis and risk of bias assessment for each study. We assessed the utility of biomedical QA systems. Results: We included 79 studies and identified themes, including question realism, answer reliability, answer utility, clinical specialism, systems, usability, and evaluation methods. Clinicians' questions used to train and evaluate QA systems were restricted to certain sources, types and complexity levels. No system communicated confidence levels in the answers or sources. Many studies suffered from high risks of bias and applicability concerns. Only 8 studies completely satisfied any criterion for clinical utility, and only 7 reported user evaluations. Most systems were built with limited input from clinicians. Discussion: While machine learning methods have led to increased accuracy, most studies imperfectly reflected real-world healthcare information needs. Key research priorities include developing more realistic healthcare QA datasets and considering the reliability of answer sources, rather than merely focusing on accuracy.

Facilitating Human-Robot Collaboration through Natural Vocal Conversations

Nov 23, 2023

Abstract:In the rapidly evolving landscape of human-robot collaboration, effective communication between humans and robots is crucial for complex task execution. Traditional request-response systems often lack naturalness and may hinder efficiency. In this study, we propose a novel approach that employs human-like conversational interactions for vocal communication between human operators and robots. The framework emphasizes the establishment of a natural and interactive dialogue, enabling human operators to engage in vocal conversations with robots. Through a comparative experiment, we demonstrate the efficacy of our approach in enhancing task performance and collaboration efficiency. The robot's ability to engage in meaningful vocal conversations enables it to seek clarification, provide status updates, and ask for assistance when required, leading to improved coordination and a smoother workflow. The results indicate that the adoption of human-like conversational interactions positively influences the human-robot collaborative dynamic. Human operators find it easier to convey complex instructions and preferences, fostering a more productive and satisfying collaboration experience.

Safe Multimodal Communication in Human-Robot Collaboration

Aug 07, 2023

Abstract:The new industrial settings are characterized by the presence of humans and robots that work in close proximity, cooperating in performing the required job. Such a collaboration, however, requires attention to many aspects. Firstly, it is crucial to enable communication between these two actors that is natural and efficient. Secondly, the robot behavior must always be compliant with the safety regulations, ensuring a safe collaboration at all times. In this paper, we propose a framework that enables multi-channel communication between humans and robots by leveraging multimodal fusion of voice and gesture commands while always respecting safety regulations. The framework is validated through a comparative experiment, demonstrating that, thanks to multimodal communication, the robot can extract valuable information for performing the required task and, additionally, with the safety layer, the robot can scale its speed to ensure the operator's safety.

Bidirectional Communication Control for Human-Robot Collaboration

Jun 10, 2022

Abstract:A fruitful collaboration is based on the mutual knowledge of each other's skills and on the possibility of communicating one's own limits and proposing alternatives to adapt the execution of a task to the capabilities of the collaborators. This paper aims at reproducing such a scenario in a human-robot collaboration setting by proposing a novel communication control architecture. Exploiting control barrier functions, the robot is made aware of its (dynamic) skills and limits and, thanks to a local predictor, it is able to assess whether it is possible to execute a requested task and, if not, to propose alternatives by relaxing some constraints. The controller is interfaced with a communication infrastructure that enables the human and the robot to set up a bidirectional communication about the task to execute and the human to make an informed decision on the behavior of the robot. A comparative experimental validation is proposed.
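For readers unfamiliar with control barrier functions, a common textbook formulation is sketched here; it is not necessarily the one used in the paper, and the symbols $x$, $u$, $f$, $g$, $h$ and $\alpha$ are assumptions introduced only for illustration. For control-affine dynamics $\dot{x} = f(x) + g(x)u$ and a safe set $\mathcal{C} = \{x : h(x) \ge 0\}$, the function $h$ is a control barrier function if there exists an extended class-$\mathcal{K}$ function $\alpha$ such that

$$
\sup_{u} \left[ L_f h(x) + L_g h(x)\,u \right] \ge -\alpha\big(h(x)\big) \quad \text{for all } x \in \mathcal{C},
$$

where $L_f h$ and $L_g h$ denote the Lie derivatives of $h$ along $f$ and $g$. Any input $u$ satisfying this inequality keeps the state inside $\mathcal{C}$, which is one way a robot's limits can be encoded as constraints that may later be relaxed.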

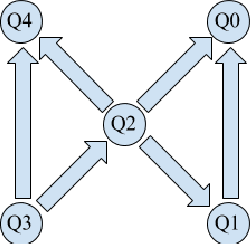

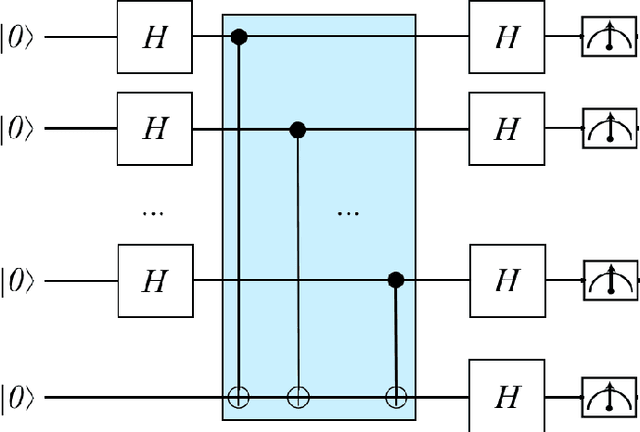

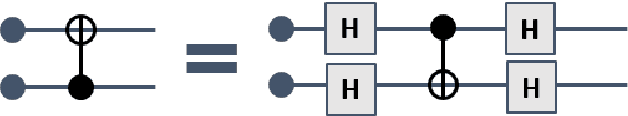

Demonstration of Envariance and Parity Learning on the IBM 16 Qubit Processor

Feb 07, 2018

Abstract:Recently, IBM has made available a quantum computer provided with 16 qubits, denoted as IBM Q16. Previously, only a 5 qubit device, denoted as Q5, was available. Both IBM devices can be used to run quantum programs, by means of a cloud-based platform. In this paper, we illustrate our experience with IBM Q16 in demonstrating entanglement assisted invariance, also known as envariance, and parity learning by querying a uniform quantum example oracle. In particular, we illustrate the non-trivial strategy we have designed for compiling $n$-qubit quantum circuits ($n$ being an input parameter) on any IBM device, taking into account topological constraints.
