Andrea Pupa

Intuitive Programming, Adaptive Task Planning, and Dynamic Role Allocation in Human-Robot Collaboration

Nov 11, 2025

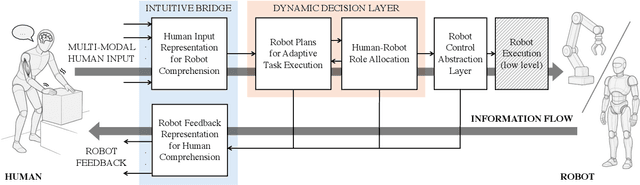

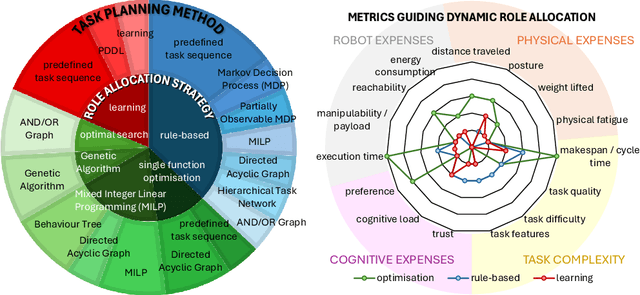

Abstract: Robotics and AI have achieved remarkable capabilities, mastering complex tasks and environments. Yet humans often remain passive observers, fascinated but uncertain how to engage. Robots, in turn, cannot reach their full potential in human-populated environments unless they effectively model human states and intentions and adapt their behavior accordingly. To achieve synergistic human-robot collaboration (HRC), a continuous information flow should be established: humans must be able to intuitively communicate instructions, share expertise, and express needs, while robots must clearly convey their internal state and forthcoming actions to keep users informed, comfortable, and in control. This review identifies and connects the key components that enable intuitive information exchange and skill transfer between humans and robots. We examine the full interaction pipeline, from the human-to-robot communication bridge that translates multimodal inputs into robot-understandable representations, through adaptive planning and role allocation, to the control layer and the feedback mechanisms that close the loop. Finally, we highlight trends and promising directions toward more adaptive and accessible HRC.

* Published in the Annual Review of Control, Robotics, and Autonomous Systems, Volume 9; copyright 2026 the author(s), CC BY 4.0, https://www.annualreviews.org

Compliant Blind Handover Control for Human-Robot Collaboration

Sep 11, 2024

Abstract: This paper presents a human-robot blind handover architecture for Human-Robot Collaboration (HRC). The focus is a blind handover scenario in which the operator intentionally faces away, concentrating on a task, and needs an object from the robot. In this setting, the robot must autonomously manage the entire handover process. Key considerations include ensuring safety while delivering the object to the operator's hand and detecting the right moment to release it. The article explores strategies to address these challenges, emphasizing that the robot must operate safely and independently during blind handovers, thereby contributing to the advancement of HRC protocols and fostering natural and efficient collaboration between humans and robots.
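
The release-timing problem mentioned above can be illustrated with a minimal sketch. It assumes, hypothetically, a wrist force/torque sensor whose reading has already been compensated for the object's weight and projected onto the handover direction. The class name `ReleaseDetector` and the `threshold` and `window` values are illustrative and are not taken from the paper. The object is released only once the operator's pull stays above a threshold for several consecutive samples.

```python
from collections import deque


class ReleaseDetector:
    """Hypothetical release trigger for a robot-to-human handover.

    `pull_force` is assumed to be the force (N) measured along the handover
    direction, with the object's weight already compensated. The gripper
    should open only after `window` consecutive samples exceed `threshold`,
    which filters out single-sample spikes from accidental contacts.
    """

    def __init__(self, threshold: float = 3.0, window: int = 5) -> None:
        self.threshold = threshold
        # Keeps only the most recent `window` above-threshold flags.
        self.recent = deque(maxlen=window)

    def update(self, pull_force: float) -> bool:
        """Add one sample and return True when the object should be released."""
        self.recent.append(pull_force > self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)


# Illustrative usage with made-up force samples.
detector = ReleaseDetector(threshold=3.0, window=3)
samples = [0.5, 4.0, 0.8, 3.5, 4.2, 5.0]
decisions = [detector.update(f) for f in samples]
print(decisions)  # [False, False, False, False, False, True]
```

Requiring several consecutive samples trades a little latency for robustness against spurious contacts. The compliant control that keeps the contact itself safe is not modeled here.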

Collaborative Conversation in Safe Multimodal Human-Robot Collaboration

Sep 11, 2024

Abstract: In Human-Robot Collaboration (HRC), it is crucial that the two actors can communicate with each other naturally and efficiently. The lack of a communication interface often causes undesired slowdowns. On the one hand, unforeseen events may occur and lead to errors. On the other hand, because humans and robots work in close contact, the robot's speed must be reduced significantly to comply with the safety technical specification ISO/TS 15066. In this paper, we propose a novel architecture that lets operators and robots communicate efficiently, emulating human-to-human dialogue, while addressing safety concerns. The aim is a communication framework that not only facilitates collaboration but also limits undesired speed reductions. Using a predictive simulator, we can anticipate safety-related limitations, ensuring smoother workflows, minimizing risks, and optimizing efficiency. The overall architecture was validated on a UR10e and compared with a state-of-the-art technique. The results show a significant improvement in user experience, together with a 23% reduction in execution time and a 50% decrease in robot downtime.

Safe Multimodal Communication in Human-Robot Collaboration

Aug 07, 2023

Abstract: New industrial settings are characterized by humans and robots working in close proximity and cooperating to perform the required job. Such collaboration, however, requires attention to several aspects. First, communication between the two actors must be natural and efficient. Second, the robot's behavior must always comply with safety regulations, guaranteeing safe collaboration. In this paper, we propose a framework that enables multichannel communication between humans and robots by leveraging multimodal fusion of voice and gesture commands while always respecting safety regulations. The framework is validated through a comparative experiment. This experiment demonstrates that multimodal communication lets the robot extract valuable information for performing the required task. It also demonstrates that, thanks to the safety layer, the robot can scale its speed to ensure the operator's safety.
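
As a rough illustration of voice-gesture fusion, the following sketch resolves an ambiguous spoken command ("pick that") using a pointing gesture observed close in time. The data classes, the `fuse` function, and the `max_delay` and `min_conf` thresholds are all hypothetical. The paper's actual fusion strategy may differ, and its safety layer is not modeled here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class VoiceCommand:
    action: str             # e.g. "pick" or "place"
    target: Optional[str]   # None when the utterance does not name an object
    stamp: float            # time of the utterance, in seconds


@dataclass
class Gesture:
    target: str             # object the operator is pointing at
    confidence: float       # gesture classifier confidence in [0, 1]
    stamp: float            # time of the gesture, in seconds


def fuse(voice: VoiceCommand, gesture: Optional[Gesture],
         max_delay: float = 1.5, min_conf: float = 0.6) -> Optional[Tuple[str, str]]:
    """Return an (action, target) pair, or None if the command stays ambiguous."""
    # An explicitly spoken target takes precedence over any gesture.
    if voice.target is not None:
        return voice.action, voice.target
    # Otherwise, borrow the target from a confident gesture close in time.
    if (gesture is not None
            and abs(gesture.stamp - voice.stamp) <= max_delay
            and gesture.confidence >= min_conf):
        return voice.action, gesture.target
    return None


cmd = VoiceCommand(action="pick", target=None, stamp=10.0)
pointing = Gesture(target="red_box", confidence=0.9, stamp=10.4)
print(fuse(cmd, pointing))  # ('pick', 'red_box')
print(fuse(cmd, None))      # None
```

Returning `None` rather than guessing leaves room for the robot to ask a clarifying question when neither channel identifies the target.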

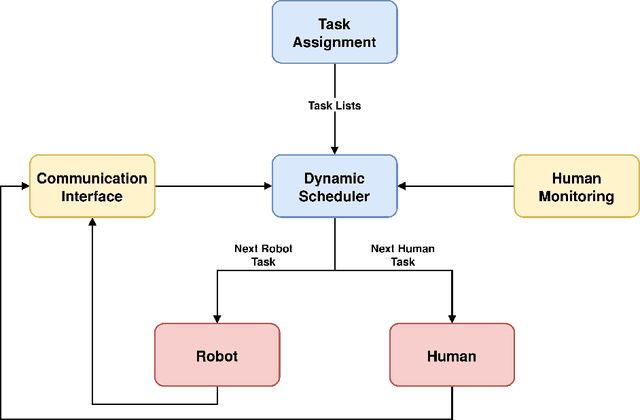

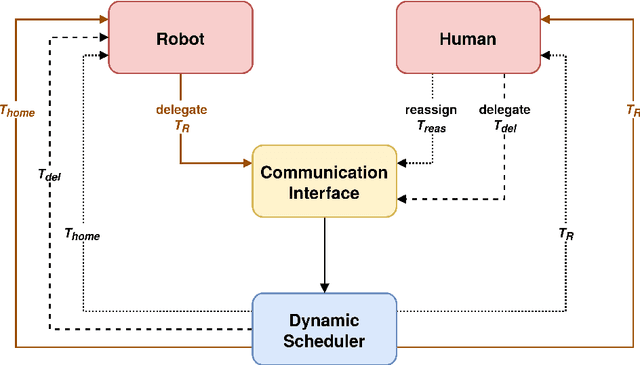

A Dynamic Architecture for Task Assignment and Scheduling for Collaborative Robotic Cells

Apr 29, 2021

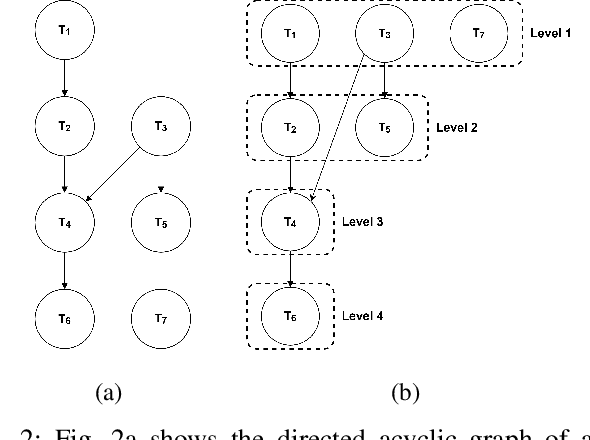

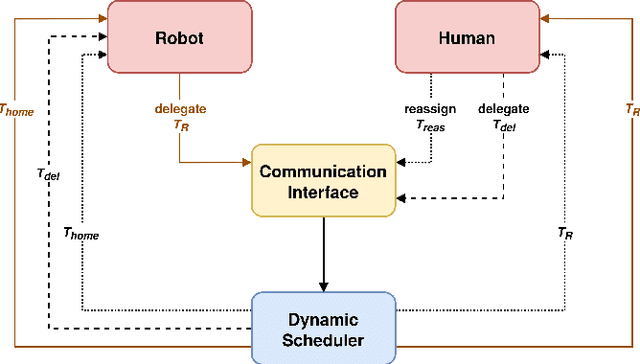

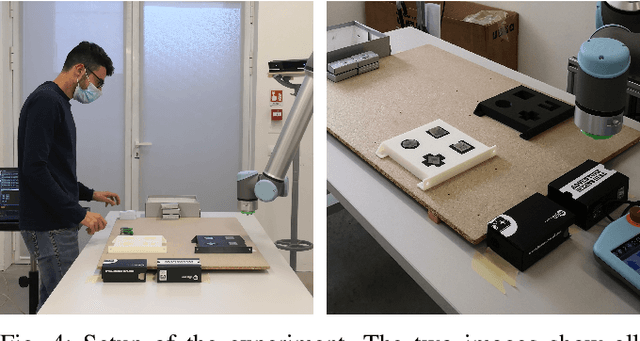

Abstract: In collaborative robotic cells, a human operator and a robot share the workspace to execute a common job consisting of a set of tasks. Properly allocating and scheduling the tasks between the human and the robot is crucial for efficient human-robot collaboration. To cope with the dynamic and unpredictable behavior of the human, and to allow the human and the robot to negotiate the tasks to be executed, a two-layer architecture for solving the task allocation and scheduling problem is proposed. The first layer optimally solves the task allocation problem using nominal execution times. The second, reactive layer adapts the robot's task sequence online, accounting for deviations from the nominal behavior and for requests coming from the human and from the robot. The proposed architecture is experimentally validated on a collaborative assembly job.

* arXiv admin note: text overlap with arXiv:2103.01831
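
The first, nominal layer can be pictured as a small combinatorial problem. The sketch below is an assumption-laden stand-in and not the paper's formulation. It enumerates every human/robot assignment, treats the two agents as working in parallel without precedence constraints, and picks the assignment that minimizes the makespan computed from nominal times. Exhaustive enumeration grows as 2^n, so it only suits a handful of tasks. The function name, task names, and times are invented for illustration.

```python
import itertools
import math
from typing import Dict, Tuple


def allocate(times: Dict[str, Tuple[float, float]]) -> Tuple[Dict[str, str], float]:
    """Exhaustively assign each task to "human" or "robot".

    `times[task] = (human_time, robot_time)` are nominal execution times;
    math.inf marks a task an agent cannot perform. The cost of an allocation
    is the larger of the two total workloads (makespan, no precedences).
    """
    tasks = list(times)
    best: Dict[str, str] = {}
    best_cost = math.inf
    for choice in itertools.product(("human", "robot"), repeat=len(tasks)):
        load = {"human": 0.0, "robot": 0.0}
        for task, agent in zip(tasks, choice):
            load[agent] += times[task][0 if agent == "human" else 1]
        cost = max(load.values())
        if cost < best_cost:  # strict: ties keep the first allocation found
            best, best_cost = dict(zip(tasks, choice)), cost
    return best, best_cost


nominal = {
    "insert_screw": (12.0, 20.0),
    "place_cover": (8.0, 6.0),
    "route_cable": (15.0, math.inf),  # only the human can do this
    "pick_base": (10.0, 7.0),
}
plan, makespan = allocate(nominal)
print(plan)      # {'insert_screw': 'robot', 'place_cover': 'robot', 'route_cable': 'human', 'pick_base': 'human'}
print(makespan)  # 26.0
```

Only the offline allocation is shown. The reactive layer that reorders the robot's tasks online is not modeled.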

A Human-Centered Dynamic Scheduling Architecture for Collaborative Application

Mar 03, 2021

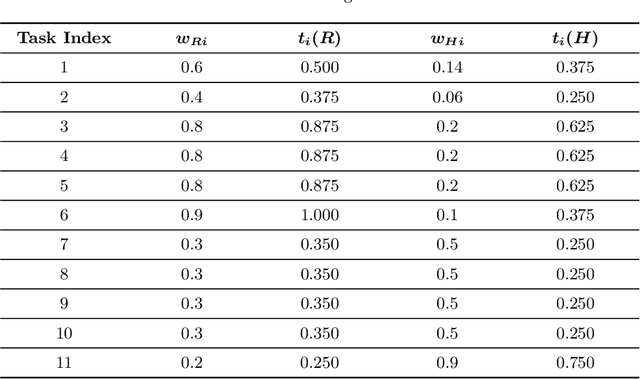

Abstract: In collaborative robotic applications, a human and a robot must work together for a whole shift to execute a sequence of jobs. The performance of the human-robot team can be enhanced by scheduling the right tasks for the human and the robot. The scheduling should account for task execution constraints, the variability of the human's task execution, and the human's job quality. It is therefore necessary to schedule the assigned tasks dynamically. In this paper, we propose a two-layer architecture for task allocation and scheduling in a collaborative cell. Job quality is explicitly considered during task allocation and over a sequence of jobs. The tasks are dynamically scheduled based on real-time monitoring of the human's activities. The effectiveness of the proposed architecture is experimentally validated.

A Safety-Aware Kinodynamic Architecture for Human-Robot Collaboration

Mar 02, 2021

Abstract: The new paradigm of human-robot collaboration has led to shared work environments in which humans and robots work in close contact. Consequently, safety regulations have been updated to address these new scenarios. However, merely applying these regulations may lead to very inefficient robot behavior. To preserve the safety of human operators while allowing the robot to reach a desired configuration safely and efficiently, a two-layer architecture for trajectory planning and scaling is proposed. The first layer computes the nominal trajectory and continuously adapts it based on the human's behavior. The second layer, which explicitly considers the safety regulations, scales the robot velocity and requests a new trajectory if the robot speed drops. The proposed architecture is experimentally validated on a Pilz PRBT manipulator.
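
The speed-scaling idea in the second layer can be sketched with speed and separation monitoring (SSM), one of the collaborative operation modes in ISO/TS 15066. The sketch assumes a commonly used simplified form of the protective separation distance, d ≥ v_h·(T_r + T_s) + v_r·T_r + v_r²/(2a) + C. In this form, the robot's stopping distance is modeled with a constant braking deceleration a, and C lumps together intrusion distance and sensing uncertainty. The 1.6 m/s human approach speed is the walking speed commonly taken from ISO 13855. The remaining parameter values, the function names, and the `replan_below` threshold for requesting a new trajectory are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class SsmParams:
    v_human: float = 1.6   # m/s, human approach speed
    t_react: float = 0.1   # s, robot system reaction time T_r (assumed)
    t_stop: float = 0.3    # s, robot stopping time T_s (assumed)
    a_brake: float = 3.0   # m/s^2, constant braking deceleration (assumed)
    margin: float = 0.1    # m, intrusion distance plus sensing uncertainty C (assumed)


def max_robot_speed(distance: float, p: SsmParams) -> float:
    """Largest v_r with distance >= v_h(T_r + T_s) + v_r*T_r + v_r^2/(2a) + C."""
    budget = distance - p.v_human * (p.t_react + p.t_stop) - p.margin
    if budget <= 0.0:
        return 0.0  # human already too close: the robot must stand still
    # Positive root of v^2/(2a) + T_r*v - budget = 0.
    return p.a_brake * (-p.t_react + math.sqrt(p.t_react ** 2 + 2.0 * budget / p.a_brake))


def scale_velocity(distance: float, v_nominal: float, p: SsmParams,
                   replan_below: float = 0.3) -> tuple:
    """Return (scaling factor in [0, 1], whether to request a new trajectory)."""
    s = min(1.0, max_robot_speed(distance, p) / v_nominal)
    return s, s < replan_below


params = SsmParams()
for d in (1.0, 0.8, 0.7):
    s, replan = scale_velocity(d, v_nominal=1.0, p=params)
    print(f"d={d:.1f} m  scale={s:.3f}  replan={replan}")
# d=1.0 m  scale=0.985  replan=False
# d=0.8 m  scale=0.371  replan=False
# d=0.7 m  scale=0.000  replan=True
```

The resulting factor would scale the time law of the nominal trajectory. When the robot would be nearly stopped, requesting a new path, as the abstract describes, can be more efficient than waiting.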
