David Meyer

Open-Source Manually Annotated Vocal Tract Database for Automatic Segmentation from 3D MRI Using Deep Learning: Benchmarking 2D and 3D Convolutional and Transformer Networks

Jan 08, 2025

Abstract: Accurate segmentation of the vocal tract from magnetic resonance imaging (MRI) data is essential for various voice and speech applications. Manual segmentation is time-intensive and susceptible to errors. This study aimed to evaluate the efficacy of deep learning algorithms for automatic vocal tract segmentation from 3D MRI.

Rapid dynamic speech imaging at 3 Tesla using combination of a custom vocal tract coil, variable density spirals and manifold regularization

Sep 06, 2022

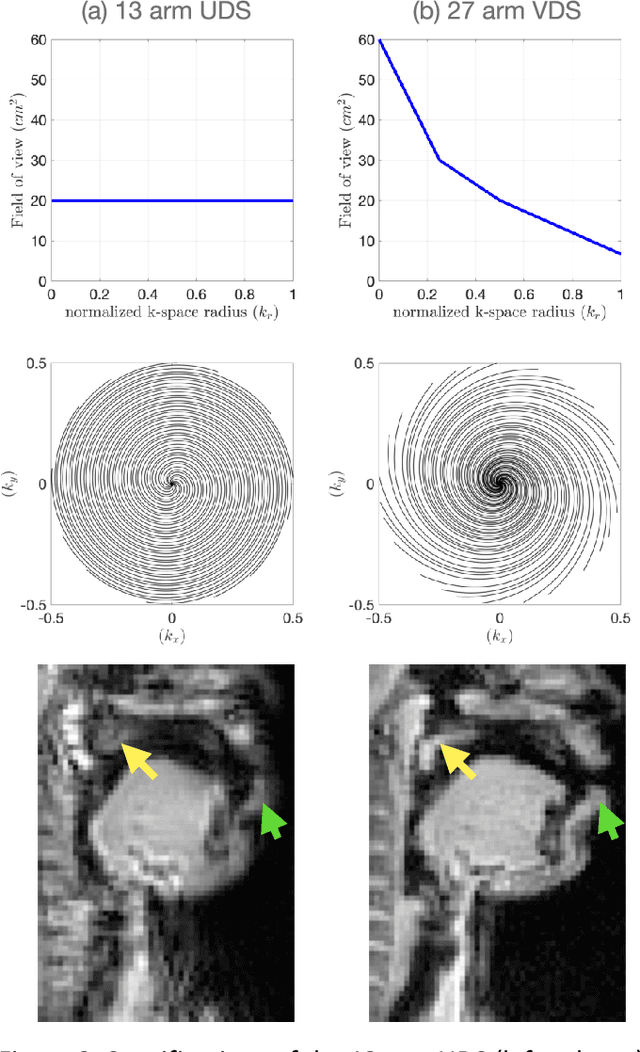

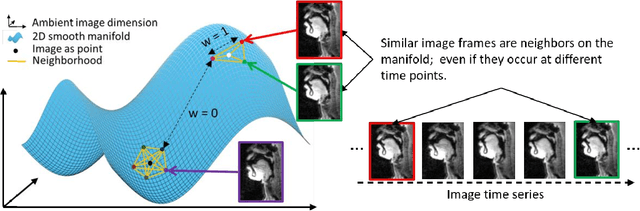

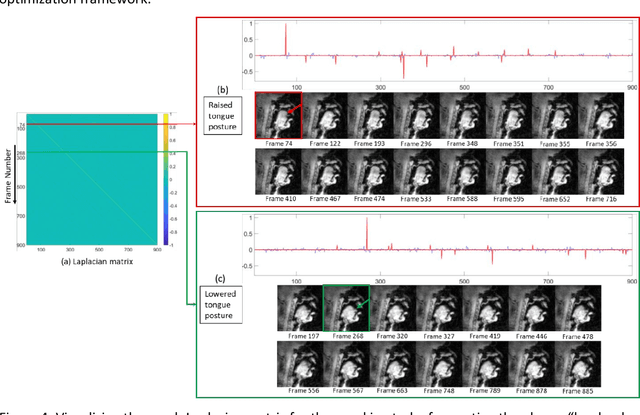

Abstract: Purpose: To improve dynamic speech imaging at 3 Tesla. Methods: A novel scheme combining a 16-channel vocal tract coil, variable density spirals (VDS), and manifold regularization was developed. Short readout duration spirals (1.3 ms long) were used to minimize sensitivity to off-resonance. The manifold model leveraged similarities between frames sharing similar vocal tract postures without explicit motion binning. Reconstruction was posed as a SENSE-based non-local soft weighted temporal regularization scheme. The self-navigating capability of VDS was leveraged to learn the structure of the manifold. Our approach was compared against low-rank and finite difference reconstruction constraints on two volunteers performing repetitive and arbitrary speaking tasks. Blinded image quality evaluation in the categories of alias artifacts, spatial blurring, and temporal blurring was performed by three experts in voice research. Results: We achieved a spatial resolution of 2.4 mm$^2$/pixel and a temporal resolution of 17.4 ms/frame for single slice imaging, and 52.2 ms/frame for concurrent 3-slice imaging. Implicit motion binning of the manifold scheme for both repetitive and fluent speaking tasks was demonstrated. The manifold scheme provided superior fidelity in modeling articulatory motion compared to low-rank and temporal finite difference schemes. This was reflected by higher image quality scores in spatial and temporal blurring categories. Our technique exhibited faint alias artifacts, but offered a reduced interquartile range of scores compared to other methods in the alias artifact category. Conclusion: Synergistic combination of a custom vocal-tract coil, variable density spirals and manifold regularization enables robust dynamic speech imaging at 3 Tesla.
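As a quick check on the temporal resolutions quoted in this abstract, the short Python sketch below converts them to frame rates and compares the two settings. The function name is illustrative only, and the reading that the 3-slice figure is three times the single-slice figure because acquisition time is shared across slices is an inference from the numbers, not something the abstract states.

```python
# Temporal resolutions reported in the abstract, in milliseconds per frame.
single_slice_ms = 17.4
three_slice_ms = 52.2


def frames_per_second(ms_per_frame: float) -> float:
    """Convert a per-frame duration in milliseconds to a frame rate."""
    return 1000.0 / ms_per_frame


single_fps = frames_per_second(single_slice_ms)  # about 57.5 frames per second
three_fps = frames_per_second(three_slice_ms)    # about 19.2 frames per second

# Ratio between the two settings; it comes out at 3 (one unit per slice).
slice_ratio = three_slice_ms / single_slice_ms

print(f"single slice: {single_fps:.1f} fps")
print(f"three slices: {three_fps:.1f} fps per slice")
print(f"ratio: {slice_ratio:.1f}")
```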

Machine Learning Emulation of Urban Land Surface Processes

Dec 22, 2021

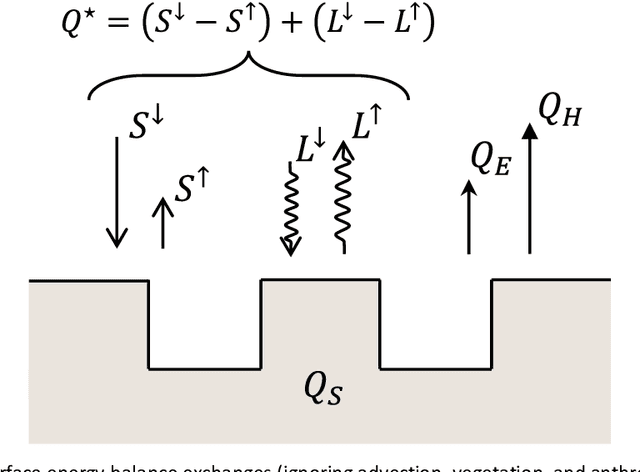

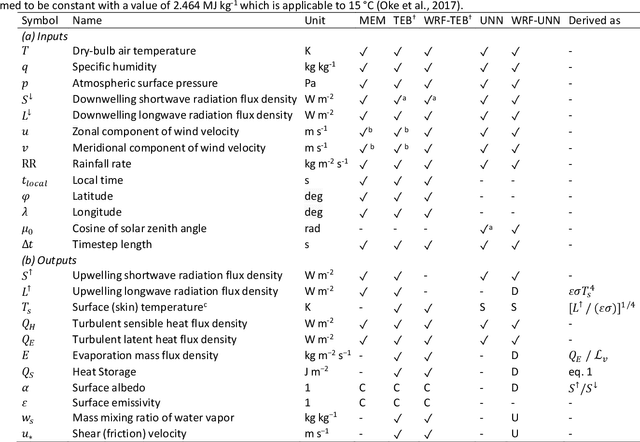

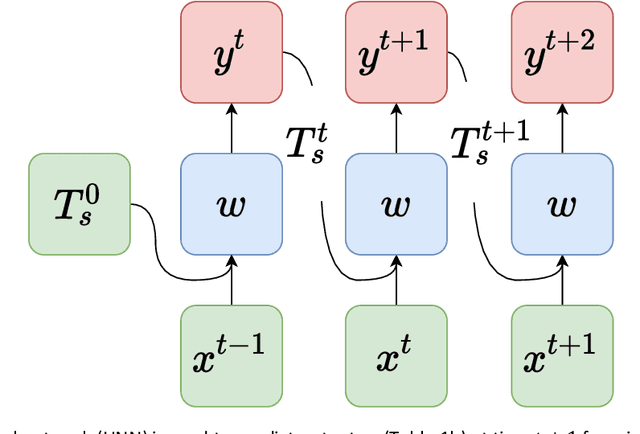

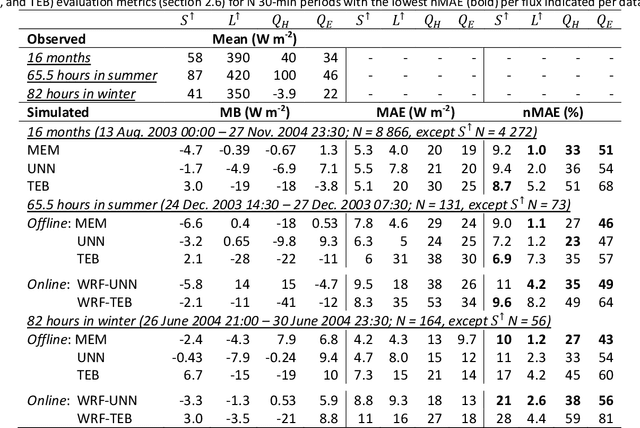

Abstract: Can we improve the modeling of urban land surface processes with machine learning (ML)? A prior comparison of urban land surface models (ULSMs) found that no single model is 'best' at predicting all common surface fluxes. Here, we develop an urban neural network (UNN) trained on the mean predicted fluxes from 22 ULSMs at one site. The UNN emulates the mean output of ULSMs accurately. When compared to a reference ULSM (Town Energy Balance; TEB), the UNN has greater accuracy relative to flux observations, less computational cost, and requires fewer input parameters. When coupled to the Weather Research and Forecasting (WRF) model using TensorFlow bindings, WRF-UNN is stable and more accurate than the reference WRF-TEB. Although the application is currently constrained by the training data (1 site), we show a novel approach to improve the modeling of surface fluxes by combining the strengths of several ULSMs into one using ML.

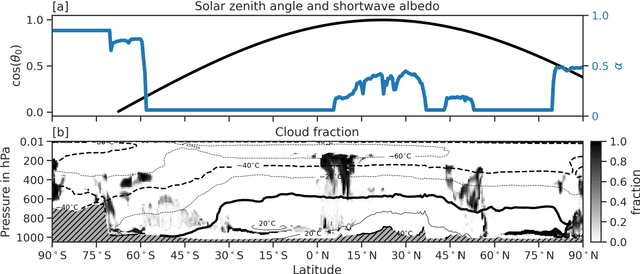

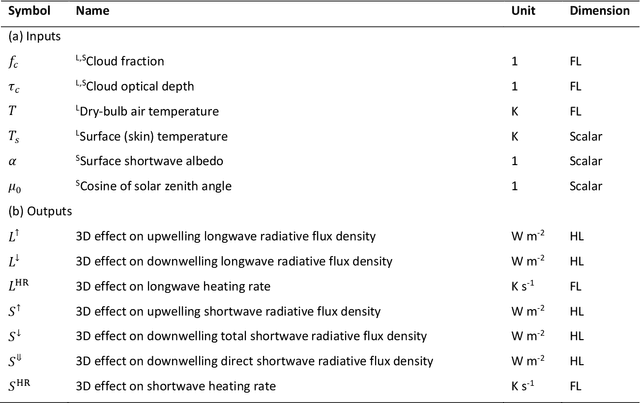

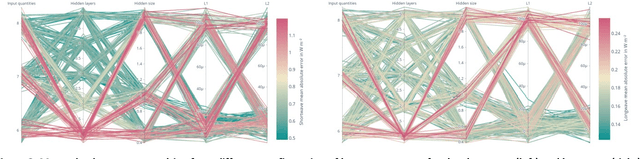

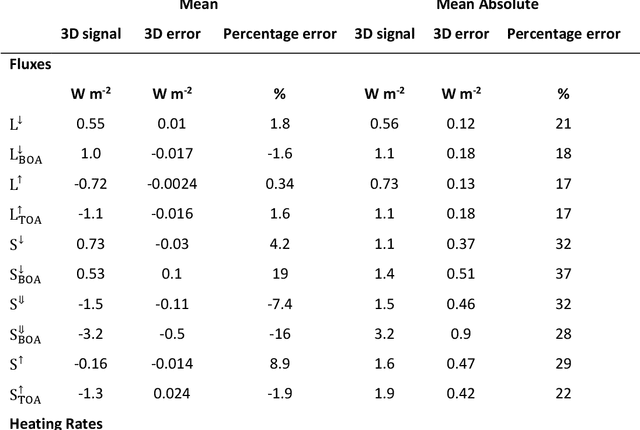

Machine Learning Emulation of 3D Cloud Radiative Effects

Mar 22, 2021

Abstract: The treatment of cloud structure in radiation schemes used in operational numerical weather prediction and climate models is often greatly simplified to make them computationally affordable. Here, we propose to correct the radiation scheme ecRad -- as used for operational predictions at the European Centre for Medium-Range Weather Forecasts -- for 3D cloud effects using computationally cheap neural networks. 3D cloud effects are learned as the difference between ecRad's fast Tripleclouds solver that neglects them, and its SPeedy Algorithm for Radiative TrAnsfer through CloUd Sides (SPARTACUS) solver that includes them but increases the cost of the entire radiation scheme by around five times, making it too expensive for operational use. We find that the emulator can be used to increase the accuracy of both longwave and shortwave fluxes of Tripleclouds, with a bulk mean absolute error on the order of 20 and 30 % of the 3D signal, for less than 1 % increase in computational cost. By using the neural network to correct the cloud-related errors in a fast radiation scheme, rather than trying to emulate the entire radiation scheme, we take advantage of the fast scheme's accurate performance in cloud-free parts of the atmosphere, particularly in the stratosphere and mesosphere.
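The idea of learning only the difference between an expensive solver and a cheap one, then adding it back to the cheap output, can be illustrated with a toy Python sketch. Everything here is an assumption made for illustration: the "fast" and "slow" fluxes are synthetic formulas, not ecRad output, and a least-squares polynomial fit stands in for the neural network described in the abstract.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical single input feature (e.g. some normalized cloud property).
x = rng.uniform(0.0, 1.0, size=(200, 1))

# Toy stand-ins for the two solvers: the "slow" one includes an extra
# term playing the role of the 3D cloud effect; the "fast" one omits it.
fast_flux = 100.0 * x[:, 0]
slow_flux = fast_flux + 5.0 * x[:, 0] ** 2

# Training target is the residual (slow minus fast), not the full flux.
residual = slow_flux - fast_flux

# Stand-in for the neural network: least-squares fit on simple features.
features = np.hstack([x, x ** 2, np.ones_like(x)])
coef, *_ = np.linalg.lstsq(features, residual, rcond=None)

# Corrected prediction = fast solver output + learned residual.
corrected_flux = fast_flux + features @ coef

mae_before = np.mean(np.abs(slow_flux - fast_flux))
mae_after = np.mean(np.abs(slow_flux - corrected_flux))
print(f"MAE of fast solver alone: {mae_before:.3f}")
print(f"MAE after residual correction: {mae_after:.3e}")
```

Because the correction is added to the fast solver's output, any input where the learned residual is close to zero simply inherits the fast solver's result, which mirrors the abstract's point about cloud-free regions.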

Copula-based synthetic data generation for machine learning emulators in weather and climate: application to a simple radiation model

Jan 05, 2021

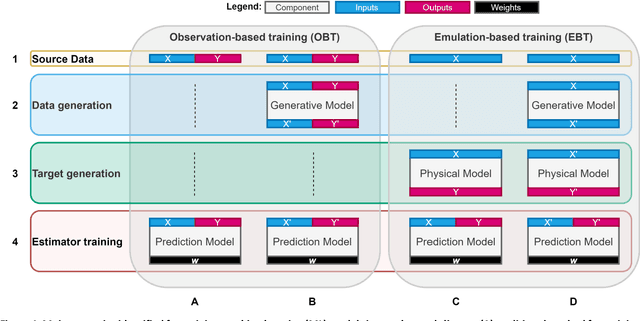

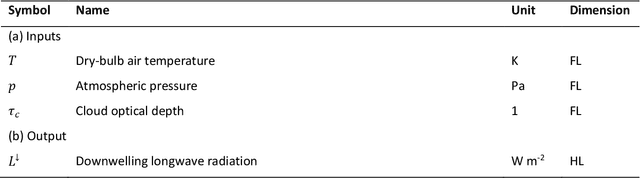

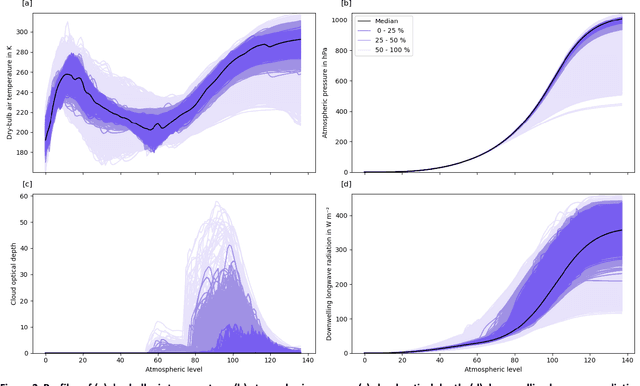

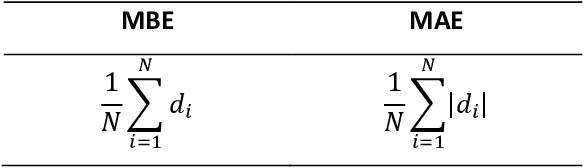

Abstract: Can we improve machine learning (ML) emulators with synthetic data? The use of real data for training ML models is often the cause of major limitations. For example, real data may be (a) only representative of a subset of situations and domains, (b) expensive to source, or (c) limited to specific individuals due to licensing restrictions. Although the use of synthetic data is becoming increasingly popular in computer vision, the training of ML emulators in weather and climate still relies on the use of real datasets. Here we investigate whether the use of copula-based synthetically-augmented datasets improves the prediction of ML emulators for estimating the downwelling longwave radiation. Results show that bulk errors are cut by up to 75 % for the mean bias error (from 0.08 to -0.02 W m$^{-2}$) and by up to 62 % (from 1.17 to 0.44 W m$^{-2}$) for the mean absolute error, thus showing potential for improving the generalization of future ML emulators.
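The percentage reductions quoted in this abstract can be reproduced from the before and after values with a few lines of Python. The helper name is hypothetical, and the sketch assumes the reduction is measured on error magnitudes, which is the reading under which the change from 0.08 to -0.02 W m$^{-2}$ corresponds to 75 %.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage drop in error magnitude from `before` to `after`."""
    return 100.0 * (abs(before) - abs(after)) / abs(before)


# Mean bias error, W m^-2 (values from the abstract).
mbe_reduction = percent_reduction(0.08, -0.02)

# Mean absolute error, W m^-2 (values from the abstract).
mae_reduction = percent_reduction(1.17, 0.44)

print(f"MBE reduction: {mbe_reduction:.0f} %")
print(f"MAE reduction: {mae_reduction:.0f} %")
```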

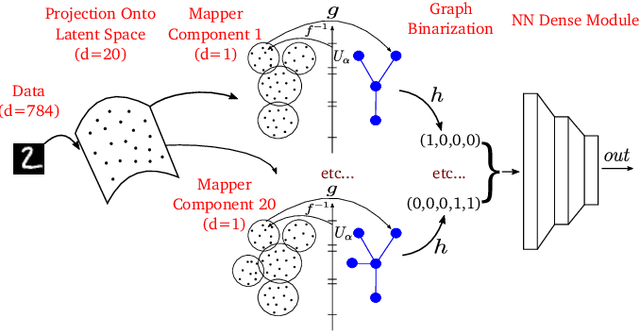

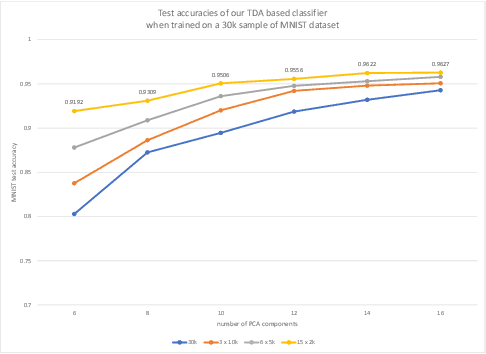

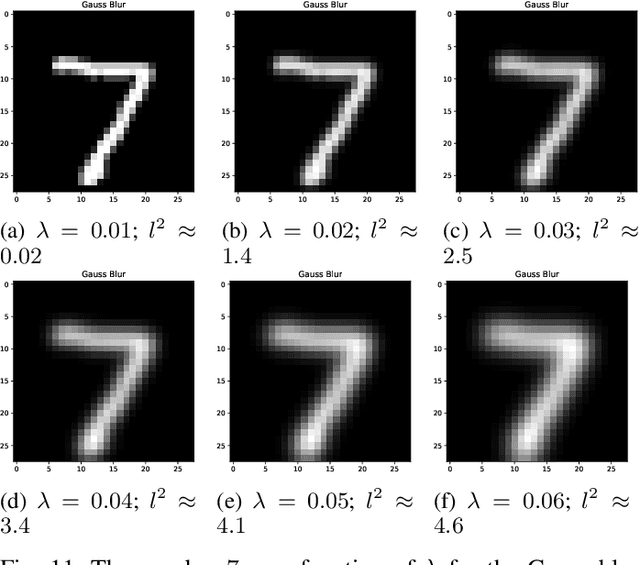

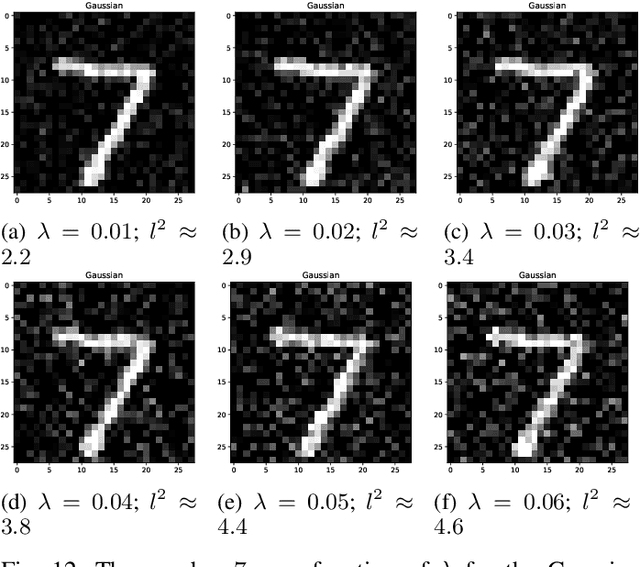

Mapper Based Classifier

Oct 21, 2019

Abstract: Topological data analysis aims to extract topological quantities from data, which tend to focus on the broader global structure of the data rather than local information. The Mapper method, specifically, generalizes clustering methods to identify significant global mathematical structures, which are out of reach of many other approaches. We propose a classifier based on applying the Mapper algorithm to data projected onto a latent space. We obtain the latent space by using PCA or autoencoders. Notably, a classifier based on the Mapper method is immune to any gradient-based attack, and improves robustness over traditional CNNs (convolutional neural networks). We report theoretical justification and some numerical experiments that confirm our claims.

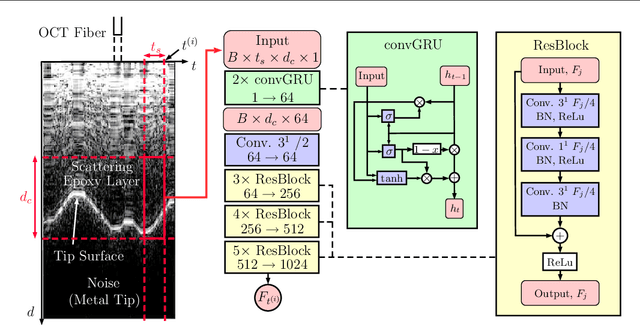

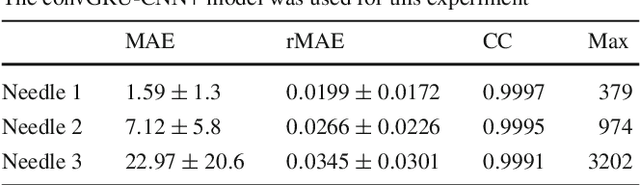

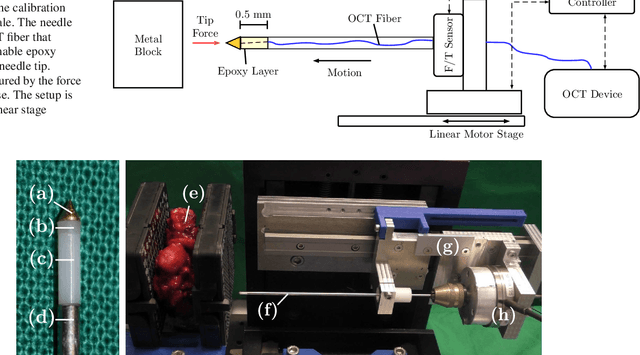

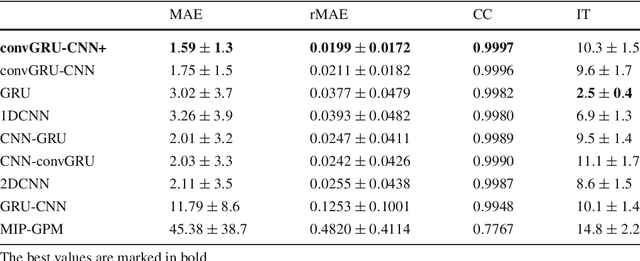

Spatio-Temporal Deep Learning Models for Tip Force Estimation During Needle Insertion

May 22, 2019

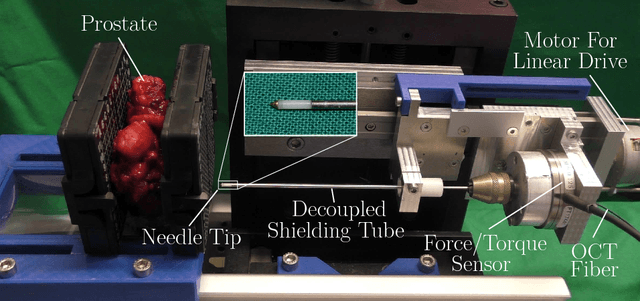

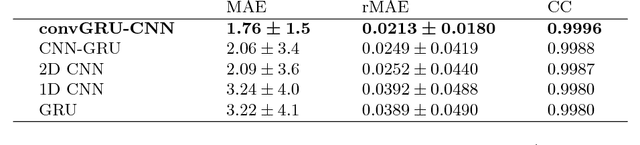

Abstract: Purpose. Precise placement of needles is a challenge in a number of clinical applications such as brachytherapy or biopsy. Forces acting at the needle cause tissue deformation and needle deflection which in turn may lead to misplacement or injury. Hence, a number of approaches to estimate the forces at the needle have been proposed. Yet, integrating sensors into the needle tip is challenging and a careful calibration is required to obtain good force estimates. Methods. We describe a fiber-optical needle tip force sensor design using a single OCT fiber for measurement. The fiber images the deformation of an epoxy layer placed below the needle tip which results in a stream of 1D depth profiles. We study different deep learning approaches to facilitate calibration between this spatio-temporal image data and the related forces. In particular, we propose a novel convGRU-CNN architecture for simultaneous spatial and temporal data processing. Results. The needle can be adapted to different operating ranges by changing the stiffness of the epoxy layer. Likewise, calibration can be adapted by training the deep learning models. Our novel convGRU-CNN architecture results in the lowest mean absolute error of 1.59 ± 1.3 mN and a cross-correlation coefficient of 0.9997, and clearly outperforms the other methods. Ex vivo experiments in human prostate tissue demonstrate the needle's application. Conclusions. Our OCT-based fiber-optical sensor presents a viable alternative for needle tip force estimation. The results indicate that the rich spatio-temporal information included in the stream of images showing the deformation throughout the epoxy layer can be effectively used by deep learning models. Particularly, we demonstrate that the convGRU-CNN architecture performs favorably, making it a promising approach for other spatio-temporal learning problems.

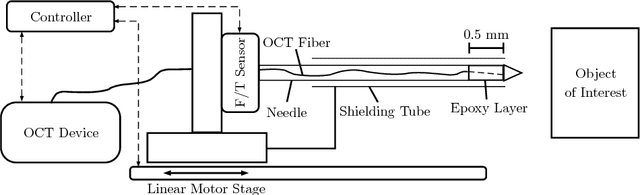

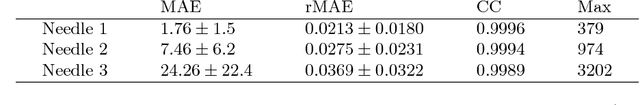

Needle Tip Force Estimation using an OCT Fiber and a Fused convGRU-CNN Architecture

May 30, 2018

Abstract: Needle insertion is common during minimally invasive interventions such as biopsy or brachytherapy. During soft tissue needle insertion, forces acting at the needle tip cause tissue deformation and needle deflection. Accurate needle tip force measurement provides information on needle-tissue interaction and helps detect and compensate for potential misplacement. For this purpose we introduce an image-based needle tip force estimation method using an optical fiber imaging the deformation of an epoxy layer below the needle tip over time. For calibration and force estimation, we introduce a novel deep learning-based fused convolutional GRU-CNN model which effectively exploits the spatio-temporal data structure. The needle is easy to manufacture and our model achieves a mean absolute error of 1.76 ± 1.5 mN with a cross-correlation coefficient of 0.9996, clearly outperforming other methods. We test needles with different materials to demonstrate that the approach can be adapted for different sensitivities and force ranges. Furthermore, we validate our approach in an ex-vivo prostate needle insertion scenario.
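For readers unfamiliar with the two metrics quoted in the needle force abstracts, the Python sketch below shows one common way to compute a mean absolute error with its spread and a correlation coefficient. The force values are made-up toy numbers, the function names are hypothetical, and treating the "cross-correlation coefficient" as the Pearson correlation and the "±" term as the standard deviation of the absolute errors are assumptions, since the abstracts do not define them.

```python
import numpy as np


def mae_with_std(pred, true):
    """Mean and standard deviation of the absolute errors."""
    abs_err = np.abs(np.asarray(pred) - np.asarray(true))
    return abs_err.mean(), abs_err.std()


def pearson_r(pred, true):
    """Pearson correlation coefficient between predictions and targets."""
    return np.corrcoef(pred, true)[0, 1]


# Toy measured and predicted tip forces in millinewtons (illustrative only).
true_mN = np.array([0.0, 10.0, 20.0, 30.0, 40.0])
pred_mN = np.array([1.0, 9.0, 21.0, 29.0, 41.0])

mae, std = mae_with_std(pred_mN, true_mN)
r = pearson_r(pred_mN, true_mN)
print(f"MAE: {mae:.2f} ± {std:.2f} mN, correlation: {r:.4f}")
```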
