Robin J. Hogan

Machine Learning Emulation of 3D Cloud Radiative Effects

Mar 22, 2021

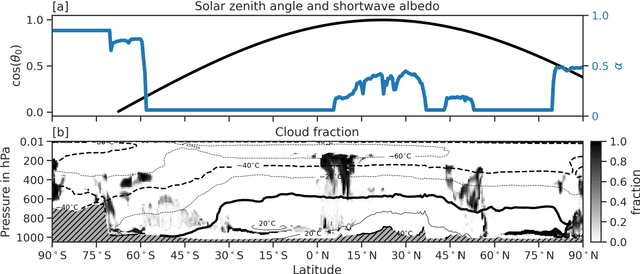

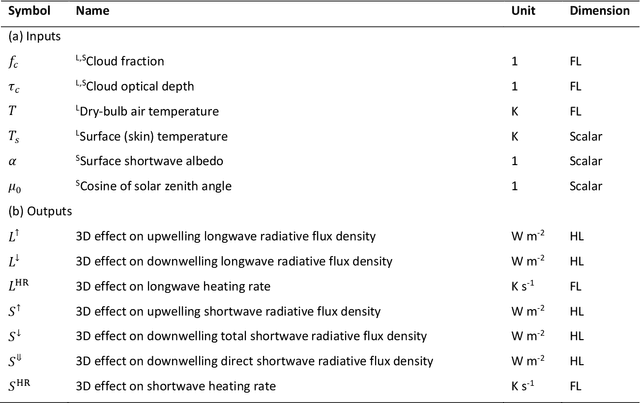

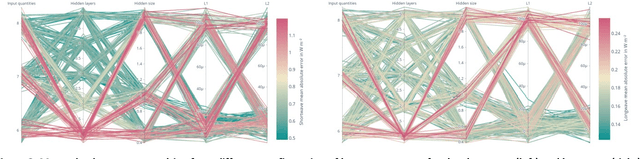

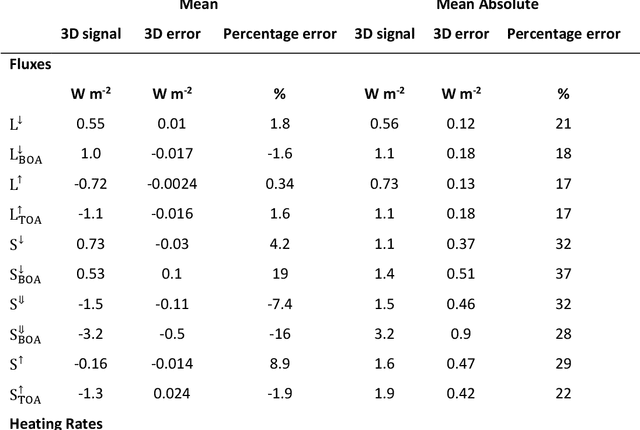

Abstract: The treatment of cloud structure in radiation schemes used in operational numerical weather prediction and climate models is often greatly simplified to make them computationally affordable. Here, we propose to correct the radiation scheme ecRad, as used for operational predictions at the European Centre for Medium-Range Weather Forecasts, for 3D cloud effects using computationally cheap neural networks. 3D cloud effects are learned as the difference between ecRad's fast Tripleclouds solver, which neglects them, and its SPeedy Algorithm for Radiative TrAnsfer through CloUd Sides (SPARTACUS) solver, which includes them but increases the cost of the entire radiation scheme by around five times and is therefore too expensive for operational use. We find that the emulator can be used to increase the accuracy of both longwave and shortwave fluxes of Tripleclouds, with a bulk mean absolute error on the order of 20 % and 30 % of the 3D signal, for less than a 1 % increase in computational cost. By using the neural network to correct the cloud-related errors in a fast radiation scheme, rather than trying to emulate the entire radiation scheme, we take advantage of the fast scheme's accurate performance in cloud-free parts of the atmosphere, particularly in the stratosphere and mesosphere.
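The residual-correction idea can be sketched in a few lines of Python with NumPy. Everything here is a toy assumption: `fast_solver` and `reference_solver` are hypothetical one-line stand-ins for Tripleclouds and SPARTACUS, the only inputs are cloud fraction and optical depth, and the "network" has a single hidden layer with random, fixed weights whose output layer is fitted by least squares. That random-features shortcut keeps the example short; it is not how the ecRad emulator is trained. The emulator learns only the difference between the two solvers, and its correction is switched off in clear-sky columns (also an assumption of this sketch) so the fast solver's answer is kept there.

```python
import numpy as np

rng = np.random.default_rng(0)


def fast_solver(cf, tau):
    """Hypothetical stand-in for a cheap 1D solver (no 3D cloud effects)."""
    return 100.0 * (1.0 - 0.5 * cf * (1.0 - np.exp(-tau)))


def reference_solver(cf, tau):
    """Hypothetical stand-in for an expensive 3D-aware solver."""
    # Toy "3D effect": zero in clear sky, largest for broken cloud (cf ~ 0.5).
    return fast_solver(cf, tau) + 8.0 * cf * (1.0 - cf) * np.tanh(tau)


def features(cf, tau):
    return np.column_stack([cf, np.log1p(tau)])


class ResidualEmulator:
    """One hidden layer: random fixed weights, output layer fitted by least squares."""

    def __init__(self, n_inputs, n_hidden=64, seed=1):
        r = np.random.default_rng(seed)
        self.W = r.normal(0.0, 2.0, (n_inputs, n_hidden))
        self.b = r.normal(0.0, 1.0, n_hidden)
        self.w_out = None

    def _hidden(self, X):
        h = np.tanh(X @ self.W + self.b)
        return np.column_stack([h, np.ones(len(X))])  # append a bias column

    def fit(self, X, y):
        self.w_out, *_ = np.linalg.lstsq(self._hidden(X), y, rcond=None)
        return self

    def predict(self, X):
        return self._hidden(X) @ self.w_out


# Train on the *difference* between the solvers, i.e. the 3D signal only.
cf_train = rng.uniform(0.0, 1.0, 2000)
tau_train = rng.uniform(0.0, 20.0, 2000)
delta = reference_solver(cf_train, tau_train) - fast_solver(cf_train, tau_train)
emulator = ResidualEmulator(n_inputs=2).fit(features(cf_train, tau_train), delta)


def corrected_solver(cf, tau):
    """Fast solver plus learned 3D correction, with the correction off in clear sky."""
    correction = emulator.predict(features(cf, tau))
    return fast_solver(cf, tau) + np.where(cf > 0.0, correction, 0.0)


# Evaluate on independent samples, including some clear-sky columns.
cf_test = np.concatenate([np.zeros(100), rng.uniform(0.0, 1.0, 900)])
tau_test = rng.uniform(0.0, 20.0, 1000)
truth = reference_solver(cf_test, tau_test)
mae_fast = np.mean(np.abs(fast_solver(cf_test, tau_test) - truth))
mae_corrected = np.mean(np.abs(corrected_solver(cf_test, tau_test) - truth))
print(f"MAE fast: {mae_fast:.3f}, MAE corrected: {mae_corrected:.3f}")
```

Because the network models only the difference, its errors affect just the correction term rather than the whole flux, and the cheap solver still does most of the work.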

Copula-based synthetic data generation for machine learning emulators in weather and climate: application to a simple radiation model

Jan 05, 2021

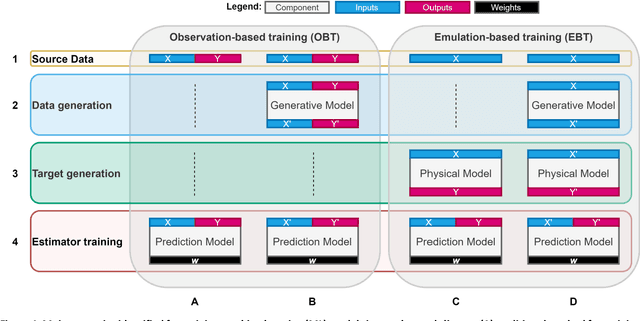

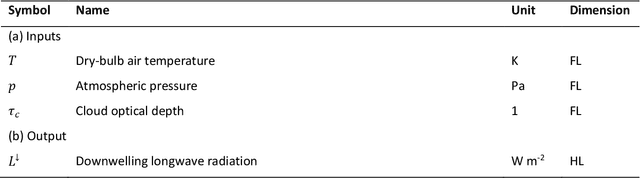

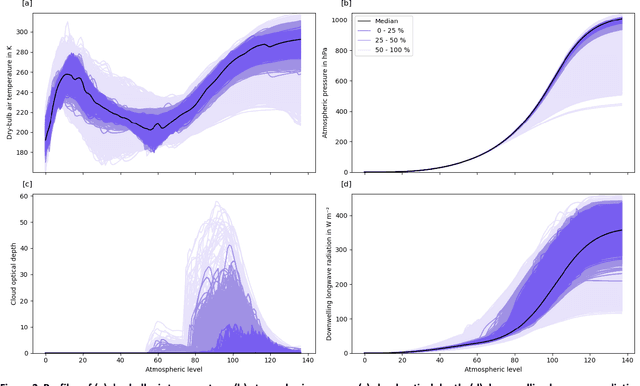

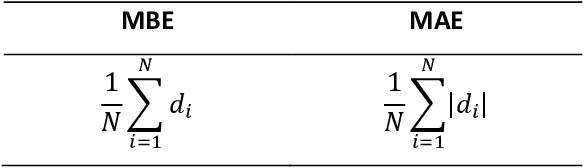

Abstract: Can we improve machine learning (ML) emulators with synthetic data? The use of real data for training ML models is often the cause of major limitations. For example, real data may be (a) representative of only a subset of situations and domains, (b) expensive to source, or (c) limited to specific individuals due to licensing restrictions. Although the use of synthetic data is becoming increasingly popular in computer vision, the training of ML emulators in weather and climate still relies on real datasets. Here we investigate whether copula-based synthetically augmented datasets improve the predictions of ML emulators that estimate downwelling longwave radiation. Results show that bulk errors are cut by up to 75 % for the mean bias error (from 0.08 to -0.02 W m$^{-2}$) and by up to 62 % for the mean absolute error (from 1.17 to 0.44 W m$^{-2}$), thus showing potential for improving the generalization of future ML emulators.
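Copula-based augmentation can be sketched with a Gaussian copula, one of the simplest copula families; the abstract does not say which family or software the study used, so treat this as an illustrative assumption. Each column is mapped to normal scores through its empirical CDF, the correlation of those scores is estimated, new correlated normal samples are drawn, and each is mapped back through the matching column's empirical quantile function. The two-variable "temperature" and "humidity" data are invented and are not the radiation model's real inputs; `fit_gaussian_copula` and `sample_gaussian_copula` are hypothetical helper names.

```python
import numpy as np
from statistics import NormalDist

# Vectorised standard-normal CDF and inverse CDF built from the standard library.
_std_normal = NormalDist()
_norm_ppf = np.vectorize(_std_normal.inv_cdf)
_norm_cdf = np.vectorize(_std_normal.cdf)


def fit_gaussian_copula(data):
    """Return the correlation matrix of the columns' normal scores.

    data has shape (n_samples, n_features).
    """
    n = data.shape[0]
    # Rank-based empirical CDF, scaled into the open interval (0, 1)
    # so that the inverse normal CDF stays finite.
    ranks = data.argsort(axis=0).argsort(axis=0)
    u = (ranks + 1) / (n + 1)
    return np.corrcoef(_norm_ppf(u), rowvar=False)


def sample_gaussian_copula(data, corr, n_samples, rng):
    """Draw synthetic rows sharing the dependence structure and marginals of data."""
    z = rng.multivariate_normal(np.zeros(corr.shape[0]), corr, size=n_samples)
    u = _norm_cdf(z)
    # Map each uniform column back through that variable's empirical quantiles.
    return np.column_stack(
        [np.quantile(data[:, j], u[:, j]) for j in range(data.shape[1])]
    )


# Toy "real" data: temperature (K) and a skewed, positively dependent
# humidity-like variable (arbitrary units). Invented for illustration only.
rng = np.random.default_rng(42)
n_real = 500
temperature = rng.normal(288.0, 10.0, n_real)
humidity = np.exp(0.05 * (temperature - 288.0) + rng.normal(0.0, 0.3, n_real))
real = np.column_stack([temperature, humidity])

corr = fit_gaussian_copula(real)
synthetic = sample_gaussian_copula(real, corr, n_samples=2000, rng=rng)

# The augmented set (real + synthetic rows) would then be used to train the emulator.
augmented = np.vstack([real, synthetic])
print(augmented.shape)  # (2500, 2)
```

The copula separates each variable's marginal distribution (here, the skewed humidity) from the dependence between variables. One consequence of mapping back through empirical quantiles is that synthetic values never leave the observed range of each variable.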