David Garlan

CLUE: Neural Networks Calibration via Learning Uncertainty-Error alignment

May 28, 2025Abstract:Reliable uncertainty estimation is critical for deploying neural networks (NNs) in real-world applications. While existing calibration techniques often rely on post-hoc adjustments or coarse-grained binning methods, they remain limited in scalability, differentiability, and generalization across domains. In this work, we introduce CLUE (Calibration via Learning Uncertainty-Error Alignment), a novel approach that explicitly aligns predicted uncertainty with observed error during training, grounded in the principle that well-calibrated models should produce uncertainty estimates that match their empirical loss. CLUE adopts a novel loss function that jointly optimizes predictive performance and calibration, using summary statistics of uncertainty and loss as proxies. The proposed method is fully differentiable, domain-agnostic, and compatible with standard training pipelines. Through extensive experiments on vision, regression, and language modeling tasks, including out-of-distribution and domain-shift scenarios, we demonstrate that CLUE achieves superior calibration quality and competitive predictive performance with respect to state-of-the-art approaches without imposing significant computational overhead.

Tolerance of Reinforcement Learning Controllers against Deviations in Cyber Physical Systems

Jun 24, 2024

Abstract:Cyber-physical systems (CPS) with reinforcement learning (RL)-based controllers are increasingly being deployed in complex physical environments such as autonomous vehicles, the Internet-of-Things(IoT), and smart cities. An important property of a CPS is tolerance; i.e., its ability to function safely under possible disturbances and uncertainties in the actual operation. In this paper, we introduce a new, expressive notion of tolerance that describes how well a controller is capable of satisfying a desired system requirement, specified using Signal Temporal Logic (STL), under possible deviations in the system. Based on this definition, we propose a novel analysis problem, called the tolerance falsification problem, which involves finding small deviations that result in a violation of the given requirement. We present a novel, two-layer simulation-based analysis framework and a novel search heuristic for finding small tolerance violations. To evaluate our approach, we construct a set of benchmark problems where system parameters can be configured to represent different types of uncertainties and disturbancesin the system. Our evaluation shows that our falsification approach and heuristic can effectively find small tolerance violations.

Error-Driven Uncertainty Aware Training

May 02, 2024

Abstract:Neural networks are often overconfident about their predictions, which undermines their reliability and trustworthiness. In this work, we present a novel technique, named Error-Driven Uncertainty Aware Training (EUAT), which aims to enhance the ability of neural models to estimate their uncertainty correctly, namely to be highly uncertain when they output inaccurate predictions and low uncertain when their output is accurate. The EUAT approach operates during the model's training phase by selectively employing two loss functions depending on whether the training examples are correctly or incorrectly predicted by the model. This allows for pursuing the twofold goal of i) minimizing model uncertainty for correctly predicted inputs and ii) maximizing uncertainty for mispredicted inputs, while preserving the model's misprediction rate. We evaluate EUAT using diverse neural models and datasets in the image recognition domains considering both non-adversarial and adversarial settings. The results show that EUAT outperforms existing approaches for uncertainty estimation (including other uncertainty-aware training techniques, calibration, ensembles, and DEUP) by providing uncertainty estimates that not only have higher quality when evaluated via statistical metrics (e.g., correlation with residuals) but also when employed to build binary classifiers that decide whether the model's output can be trusted or not and under distributional data shifts.

CURE: Simulation-Augmented Auto-Tuning in Robotics

Feb 08, 2024

Abstract:Robotic systems are typically composed of various subsystems, such as localization and navigation, each encompassing numerous configurable components (e.g., selecting different planning algorithms). Once an algorithm has been selected for a component, its associated configuration options must be set to the appropriate values. Configuration options across the system stack interact non-trivially. Finding optimal configurations for highly configurable robots to achieve desired performance poses a significant challenge due to the interactions between configuration options across software and hardware that result in an exponentially large and complex configuration space. These challenges are further compounded by the need for transferability between different environments and robotic platforms. Data efficient optimization algorithms (e.g., Bayesian optimization) have been increasingly employed to automate the tuning of configurable parameters in cyber-physical systems. However, such optimization algorithms converge at later stages, often after exhausting the allocated budget (e.g., optimization steps, allotted time) and lacking transferability. This paper proposes CURE -- a method that identifies causally relevant configuration options, enabling the optimization process to operate in a reduced search space, thereby enabling faster optimization of robot performance. CURE abstracts the causal relationships between various configuration options and robot performance objectives by learning a causal model in the source (a low-cost environment such as the Gazebo simulator) and applying the learned knowledge to perform optimization in the target (e.g., Turtlebot 3 physical robot). We demonstrate the effectiveness and transferability of CURE by conducting experiments that involve varying degrees of deployment changes in both physical robots and simulation.

Hyper-parameter Tuning for Adversarially Robust Models

Apr 05, 2023

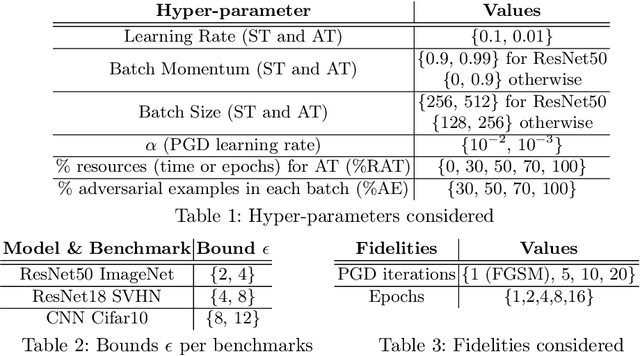

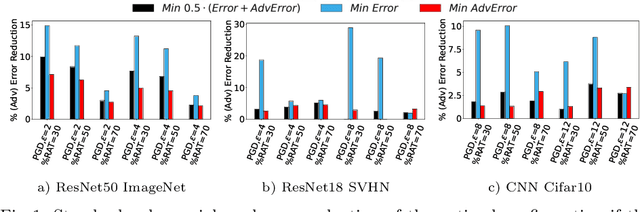

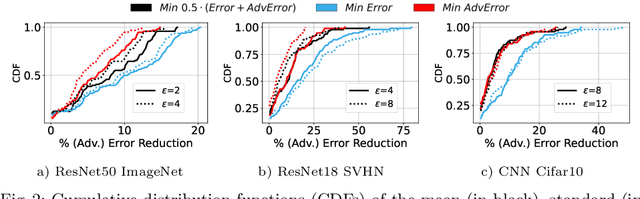

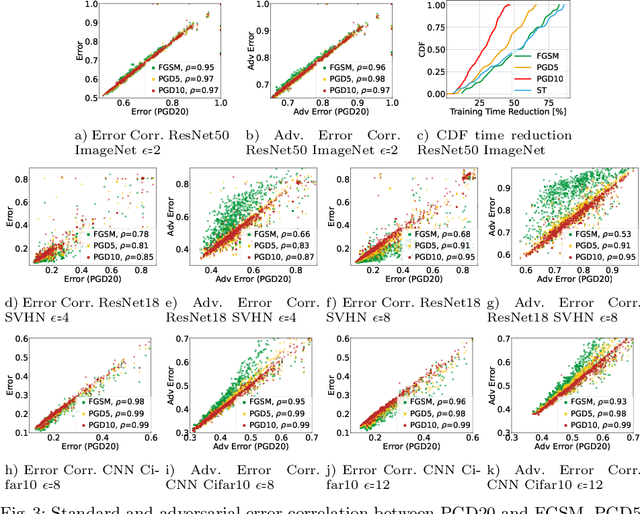

Abstract:This work focuses on the problem of hyper-parameter tuning (HPT) for robust (i.e., adversarially trained) models, with the twofold goal of i) establishing which additional HPs are relevant to tune in adversarial settings, and ii) reducing the cost of HPT for robust models. We pursue the first goal via an extensive experimental study based on 3 recent models widely adopted in the prior literature on adversarial robustness. Our findings show that the complexity of the HPT problem, already notoriously expensive, is exacerbated in adversarial settings due to two main reasons: i) the need of tuning additional HPs which balance standard and adversarial training; ii) the need of tuning the HPs of the standard and adversarial training phases independently. Fortunately, we also identify new opportunities to reduce the cost of HPT for robust models. Specifically, we propose to leverage cheap adversarial training methods to obtain inexpensive, yet highly correlated, estimations of the quality achievable using state-of-the-art methods (PGD). We show that, by exploiting this novel idea in conjunction with a recent multi-fidelity optimizer (taKG), the efficiency of the HPT process can be significantly enhanced.

CaRE: Finding Root Causes of Configuration Issues in Highly-Configurable Robots

Jan 18, 2023

Abstract:Robotic systems have several subsystems that possess a huge combinatorial configuration space and hundreds or even thousands of possible software and hardware configuration options interacting non-trivially. The configurable parameters can be tailored to target specific objectives, but when incorrectly configured, can cause functional faults. Finding the root cause of such faults is challenging due to the exponentially large configuration space and the dependencies between the robot's configuration settings and performance. This paper proposes CaRE, a method for diagnosing the root cause of functional faults through the lens of causality, which abstracts the causal relationships between various configuration options and the robot's performance objectives. We demonstrate CaRE's efficacy by finding the root cause of the observed functional faults via CaRE and validating the diagnosed root cause, conducting experiments in both physical robots (Husky and Turtlebot 3) and in simulation (Gazebo). Furthermore, we demonstrate that the causal models learned from robots in simulation (simulating Husky in Gazebo) are transferable to physical robots across different platforms (Turtlebot 3).

TrimTuner: Efficient Optimization of Machine Learning Jobs in the Cloud via Sub-Sampling

Nov 09, 2020

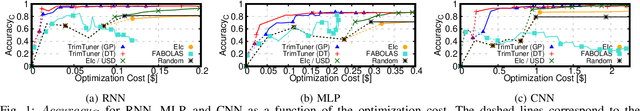

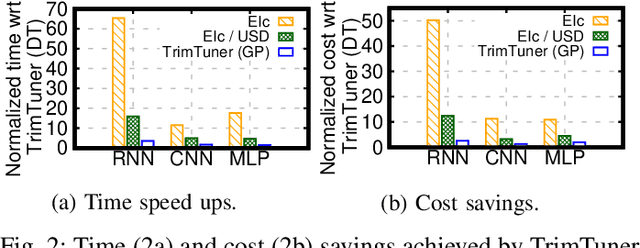

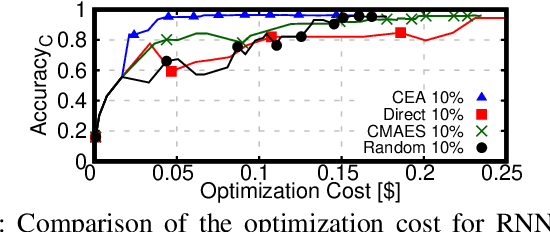

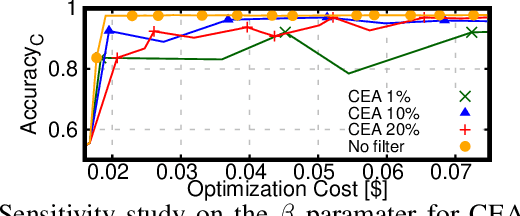

Abstract:This work introduces TrimTuner, the first system for optimizing machine learning jobs in the cloud to exploit sub-sampling techniques to reduce the cost of the optimization process while keeping into account user-specified constraints. TrimTuner jointly optimizes the cloud and application-specific parameters and, unlike state of the art works for cloud optimization, eschews the need to train the model with the full training set every time a new configuration is sampled. Indeed, by leveraging sub-sampling techniques and data-sets that are up to 60x smaller than the original one, we show that TrimTuner can reduce the cost of the optimization process by up to 50x. Further, TrimTuner speeds-up the recommendation process by 65x with respect to state of the art techniques for hyper-parameter optimization that use sub-sampling techniques. The reasons for this improvement are twofold: i) a novel domain specific heuristic that reduces the number of configurations for which the acquisition function has to be evaluated; ii) the adoption of an ensemble of decision trees that enables boosting the speed of the recommendation process by one additional order of magnitude.

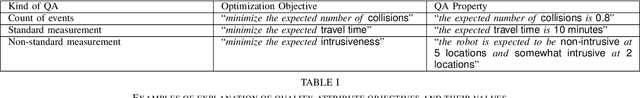

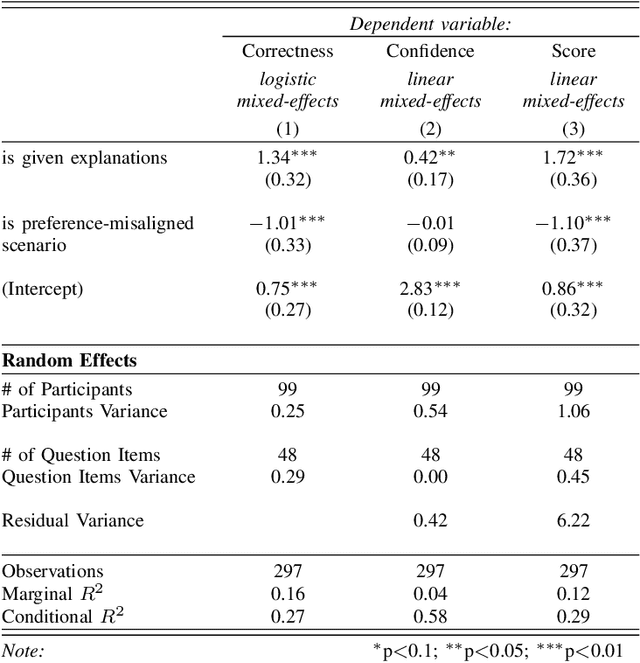

Tradeoff-Focused Contrastive Explanation for MDP Planning

Apr 27, 2020

Abstract:End-users' trust in automated agents is important as automated decision-making and planning is increasingly used in many aspects of people's lives. In real-world applications of planning, multiple optimization objectives are often involved. Thus, planning agents' decisions can involve complex tradeoffs among competing objectives. It can be difficult for the end-users to understand why an agent decides on a particular planning solution on the basis of its objective values. As a result, the users may not know whether the agent is making the right decisions, and may lack trust in it. In this work, we contribute an approach, based on contrastive explanation, that enables a multi-objective MDP planning agent to explain its decisions in a way that communicates its tradeoff rationale in terms of the domain-level concepts. We conduct a human subjects experiment to evaluate the effectiveness of our explanation approach in a mobile robot navigation domain. The results show that our approach significantly improves the users' understanding, and confidence in their understanding, of the tradeoff rationale of the planning agent.

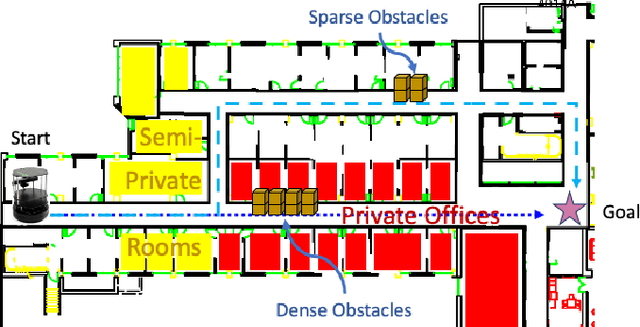

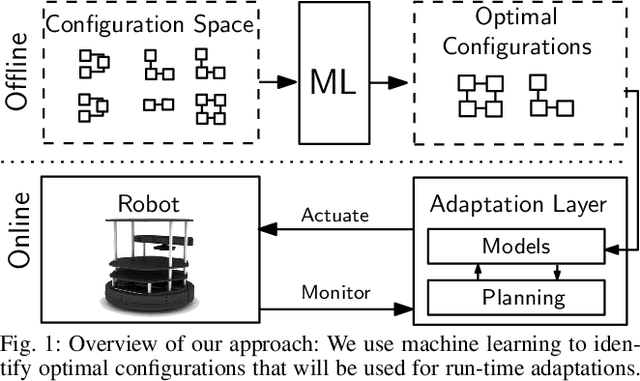

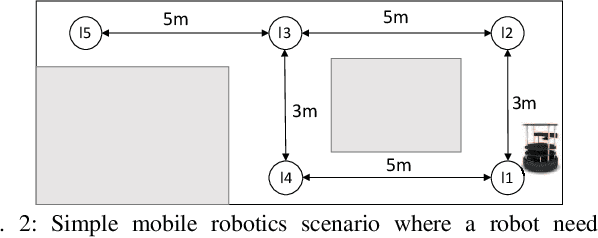

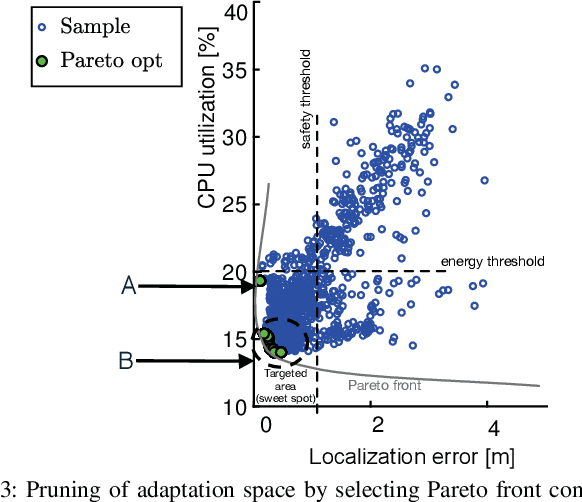

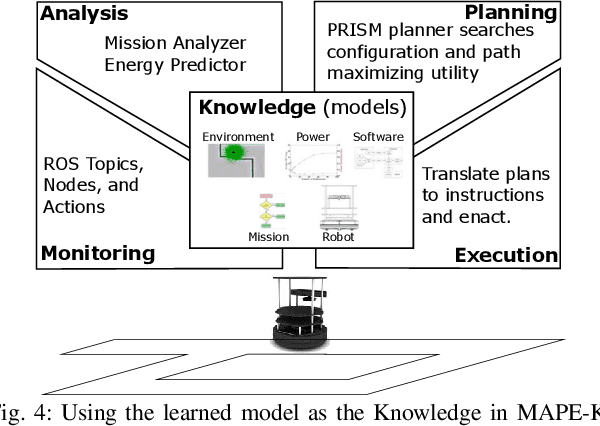

Machine Learning Meets Quantitative Planning: Enabling Self-Adaptation in Autonomous Robots

Mar 10, 2019

Abstract:Modern cyber-physical systems (e.g., robotics systems) are typically composed of physical and software components, the characteristics of which are likely to change over time. Assumptions about parts of the system made at design time may not hold at run time, especially when a system is deployed for long periods (e.g., over decades). Self-adaptation is designed to find reconfigurations of systems to handle such run-time inconsistencies. Planners can be used to find and enact optimal reconfigurations in such an evolving context. However, for systems that are highly configurable, such planning becomes intractable due to the size of the adaptation space. To overcome this challenge, in this paper we explore an approach that (a) uses machine learning to find Pareto-optimal configurations without needing to explore every configuration and (b) restricts the search space to such configurations to make planning tractable. We explore this in the context of robot missions that need to consider task timeliness and energy consumption. An independent evaluation shows that our approach results in high-quality adaptation plans in uncertain and adversarial environments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge