Daniel Lengyel

Kernel Sum of Squares for Data Adapted Kernel Learning of Dynamical Systems from Data: A global optimization approach

Aug 12, 2024

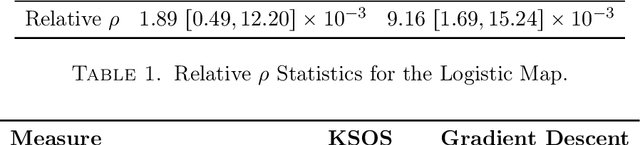

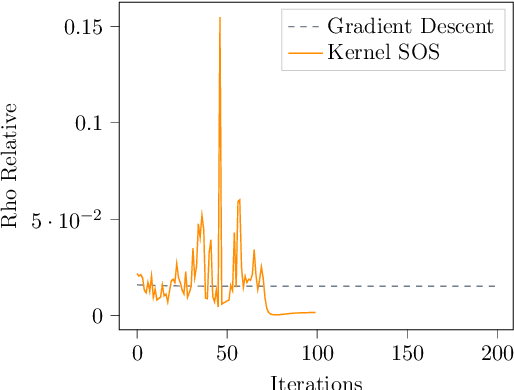

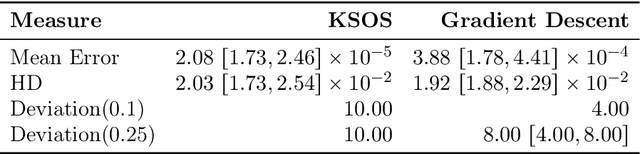

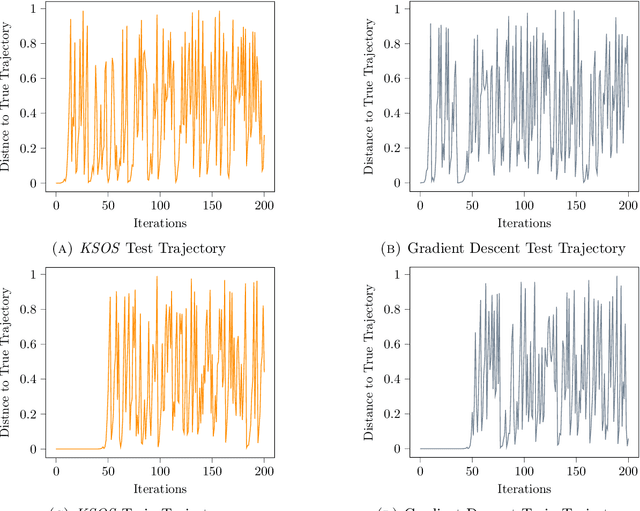

Abstract: This paper examines the application of the Kernel Sum of Squares (KSOS) method for enhancing kernel learning from data, particularly in the context of dynamical systems. Traditional kernel-based methods, despite their theoretical soundness and numerical efficiency, frequently struggle with selecting optimal base kernels and parameter tuning, especially with gradient-based methods prone to local optima. KSOS mitigates these issues by leveraging a global optimization framework with kernel-based surrogate functions, thereby achieving more reliable and precise learning of dynamical systems. Through comprehensive numerical experiments on the Logistic Map, Hénon Map, and Lorenz System, KSOS is shown to consistently outperform gradient descent in minimizing the relative-$\rho$ metric and improving kernel accuracy. These results highlight KSOS's effectiveness in predicting the behavior of chaotic dynamical systems, demonstrating its capability to adapt kernels to underlying dynamics and enhance the robustness and predictive power of kernel-based approaches, making it a valuable asset for time series analysis in various scientific fields.

GENNI: Visualising the Geometry of Equivalences for Neural Network Identifiability

Nov 14, 2020

Abstract: We propose an efficient algorithm to visualise symmetries in neural networks. Typically, models are defined with respect to a parameter space, where non-equal parameters can produce the same input-output map. Our proposed method, GENNI, allows us to efficiently identify parameters that are functionally equivalent and then visualise the subspace of the resulting equivalence class. By doing so, we are now able to better explore questions surrounding identifiability, with applications to optimisation and generalizability, for commonly used or newly developed neural network architectures.
