Kate Highnam

Autonomous Network Defence using Reinforcement Learning

Sep 26, 2024

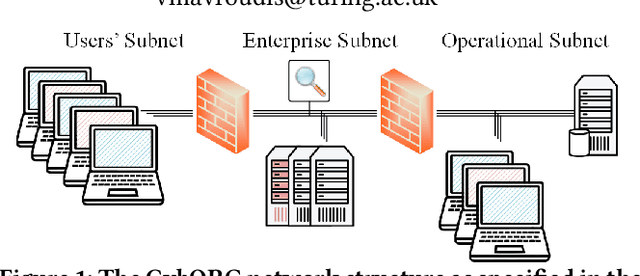

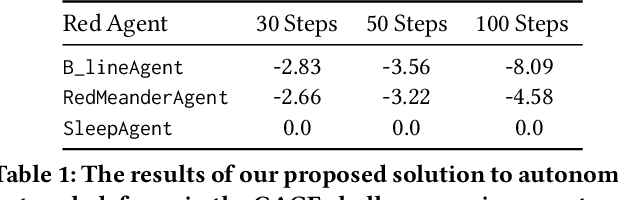

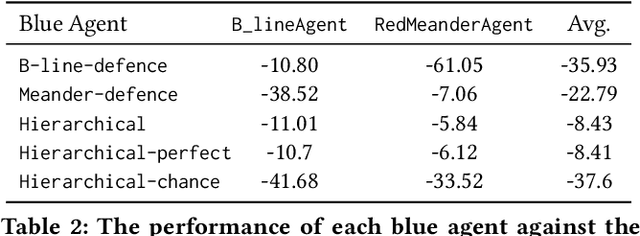

Abstract: In the network security arms race, the defender is significantly disadvantaged as they need to successfully detect and counter every malicious attack. In contrast, the attacker needs to succeed only once. To level the playing field, we investigate the effectiveness of autonomous agents in a realistic network defence scenario. We first outline the problem, provide the background on reinforcement learning and detail our proposed agent design. Using a network environment simulation, with 13 hosts spanning 3 subnets, we train a novel reinforcement learning agent and show that it can reliably defend continual attacks by two advanced persistent threat (APT) red agents: one with complete knowledge of the network layout and another which must discover resources through exploration but is more general.

Canaries and Whistles: Resilient Drone Communication Networks with (or without) Deep Reinforcement Learning

Dec 08, 2023

Abstract: Communication networks able to withstand hostile environments are critically important for disaster relief operations. In this paper, we consider a challenging scenario where drones have been compromised in the supply chain, during their manufacture, and harbour malicious software capable of wide-ranging and infectious disruption. We investigate multi-agent deep reinforcement learning as a tool for learning defensive strategies that maximise communications bandwidth despite continual adversarial interference. Using a public challenge for learning network resilience strategies, we propose a state-of-the-art expert technique and study its superiority over deep reinforcement learning agents. Correspondingly, we identify three specific methods for improving the performance of our learning-based agents: (1) ensuring each observation contains the necessary information, (2) using expert agents to provide a curriculum for learning, and (3) paying close attention to reward. We apply our methods and present a new mixed strategy enabling expert and learning-based agents to work together and improve on all prior results.

* Published in AISec '23. This version fixes some terminology to improve readability.

GENNI: Visualising the Geometry of Equivalences for Neural Network Identifiability

Nov 14, 2020

Abstract: We propose an efficient algorithm to visualise symmetries in neural networks. Typically, models are defined with respect to a parameter space, where non-equal parameters can produce the same input-output map. Our proposed method, GENNI, allows us to efficiently identify parameters that are functionally equivalent and then visualise the subspace of the resulting equivalence class. By doing so, we are now able to better explore questions surrounding identifiability, with applications to optimisation and generalizability, for commonly used or newly developed neural network architectures.

Real-Time Detection of Dictionary DGA Network Traffic using Deep Learning

Mar 28, 2020

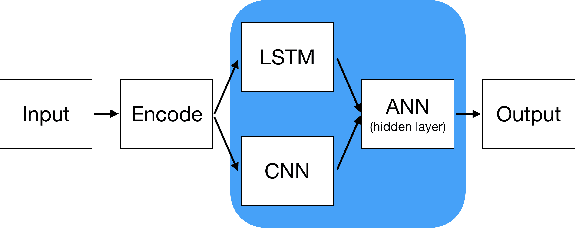

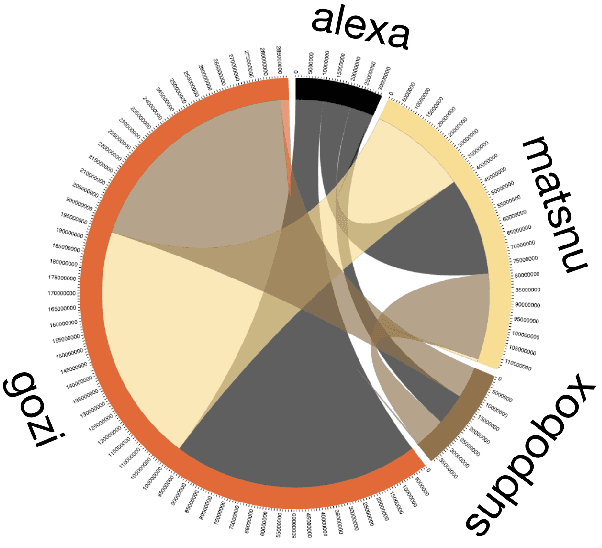

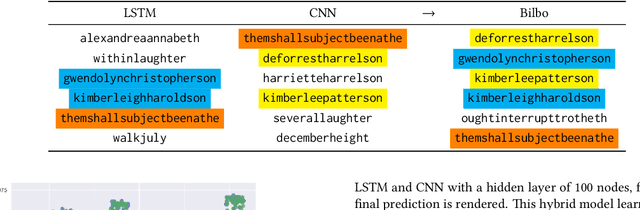

Abstract: Botnets and malware continue to avoid detection by static rules engines when using domain generation algorithms (DGAs) for callouts to unique, dynamically generated web addresses. Common DGA detection techniques fail to reliably detect DGA variants that combine random dictionary words to create domain names that closely mirror legitimate domains. To combat this, we created a novel hybrid neural network, Bilbo the "bagging" model, that analyses domains and scores the likelihood they are generated by such algorithms and therefore are potentially malicious. Bilbo is the first parallel usage of a convolutional neural network (CNN) and a long short-term memory (LSTM) network for DGA detection. Our unique architecture is found to be the most consistent in performance in terms of AUC, F1 score, and accuracy when generalising across different dictionary DGA classification tasks compared to current state-of-the-art deep learning architectures. We validate using reverse-engineered dictionary DGA domains and detail our real-time implementation strategy for scoring real-world network logs within a large financial enterprise. In four hours of actual network traffic, the model discovered at least five potential command-and-control networks that commercial vendor tools did not flag.
