Daniel Civitarese

A modular framework for extreme weather generation

Feb 05, 2021

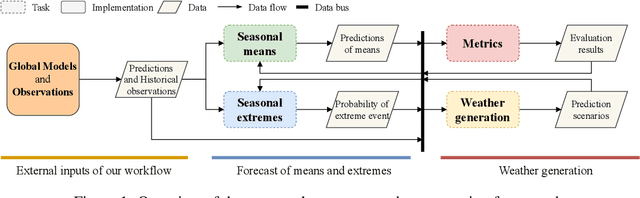

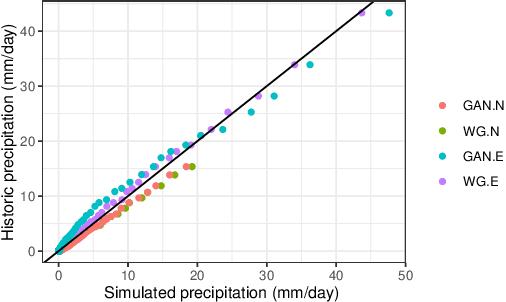

Abstract: Extreme weather events have an enormous impact on society and are expected to become more frequent and severe with climate change. In this context, resilience planning becomes crucial for risk mitigation and coping with these extreme events. Machine learning techniques can play a critical role in resilience planning through the generation of realistic extreme weather event scenarios that can be used to evaluate possible mitigation actions. This paper proposes a modular framework that relies on interchangeable components to produce extreme weather event scenarios. We discuss possible alternatives for each of the components and show initial results comparing two approaches on the task of generating precipitation scenarios.

Workflow Provenance in the Lifecycle of Scientific Machine Learning

Sep 30, 2020

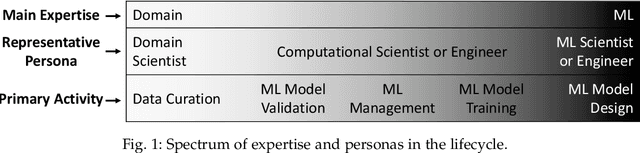

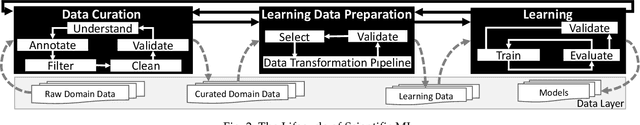

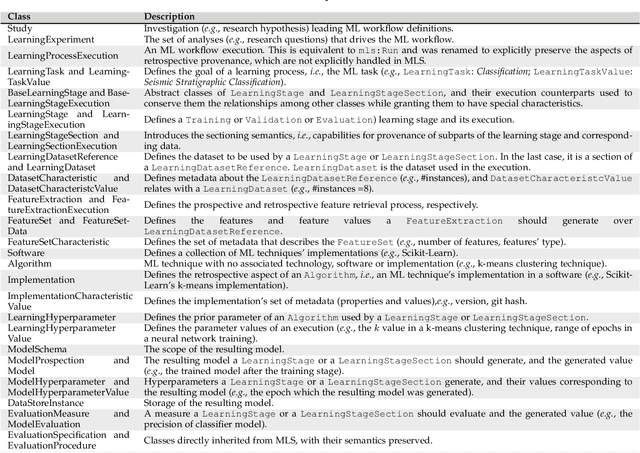

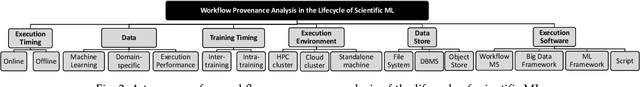

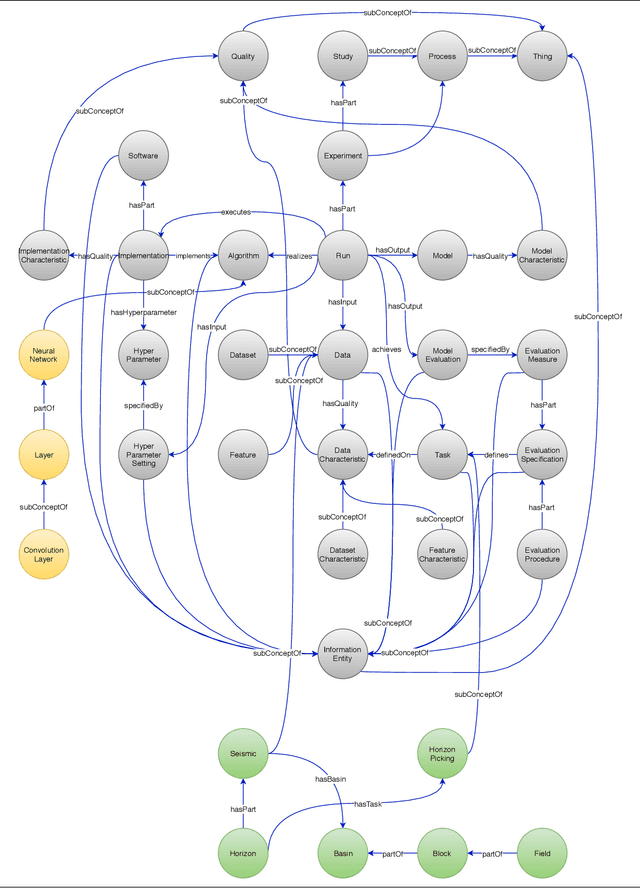

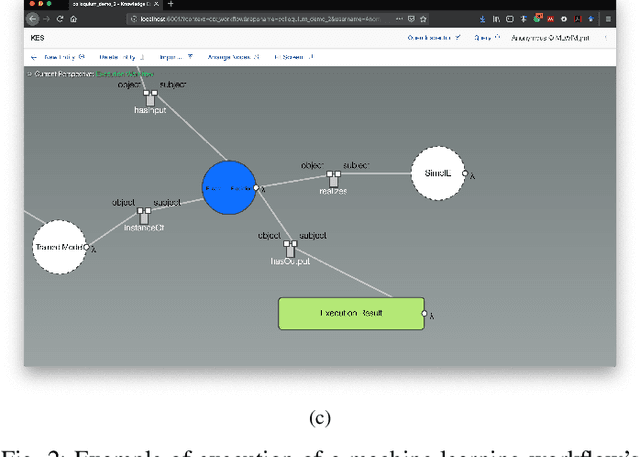

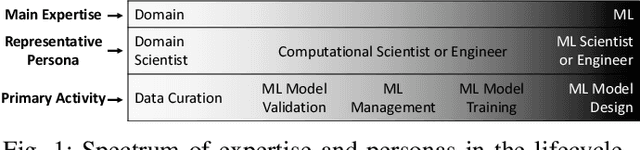

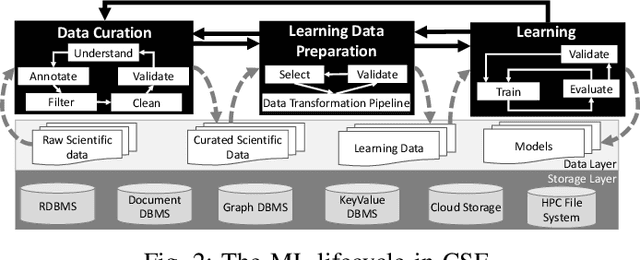

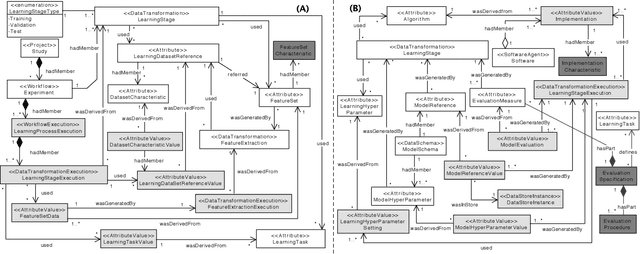

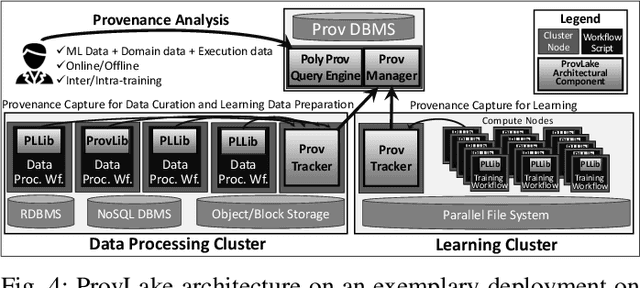

Abstract: Machine Learning (ML) has already fundamentally changed several businesses. More recently, it has also been profoundly impacting the computational science and engineering domains, like geoscience, climate science, and health science. In these domains, users need to perform comprehensive data analyses combining scientific data and ML models to provide for critical requirements, such as reproducibility, model explainability, and experiment data understanding. However, scientific ML is multidisciplinary, heterogeneous, and affected by the physical constraints of the domain, making such analyses even more challenging. In this work, we leverage workflow provenance techniques to build a holistic view to support the lifecycle of scientific ML. We contribute (i) a characterization of the lifecycle and a taxonomy for data analyses; (ii) design principles to build this view, with a W3C PROV compliant data representation and a reference system architecture; and (iii) lessons learned after an evaluation in an Oil & Gas case using an HPC cluster with 393 nodes and 946 GPUs. The experiments show that the principles enable queries that integrate domain semantics with ML models while keeping overhead low (<1%) and scalability high, and that under certain workloads queries run an order of magnitude faster than without our representation.

Effective Integration of Symbolic and Connectionist Approaches through a Hybrid Representation

Dec 18, 2019

Abstract: In this paper, we present our position on a neural-symbolic integration strategy, arguing in favor of a hybrid representation to promote effective integration. This representation differs fundamentally from others because its entities aim to represent AI models in general, making it possible to describe both non-symbolic and symbolic knowledge, the integration between them, and their corresponding processors. Moreover, the entities also support representing workflows, leveraging traceability to keep track of every change applied to models and their related entities (e.g., data or concepts) throughout the lifecycle of the models.

Managing Machine Learning Workflow Components

Dec 10, 2019

Abstract: Machine Learning Workflows (MLWfs) have become an essential and disruptive approach to problem-solving across several industries. However, the development process of MLWfs may be complicated, hard to achieve, time-consuming, and error-prone. To handle this problem, in this paper, we introduce machine learning workflow management (MLWfM) as a technique to aid the development and reuse of MLWfs and their components through three aspects: representation, execution, and creation. More precisely, we discuss our approach to structuring the MLWfs' components and their metadata to aid retrieval and reuse of components in new MLWfs. We also consider the execution of these components within a tool. The hybrid knowledge representation, called Hyperknowledge, frames our methodology, supporting the three aspects of MLWfM. To validate our approach, we show a practical use case in the Oil & Gas industry.

Provenance Data in the Machine Learning Lifecycle in Computational Science and Engineering

Oct 21, 2019

Abstract: Machine Learning (ML) has become essential in several industries. In Computational Science and Engineering (CSE), the complexity of the ML lifecycle comes from the large variety of data, scientists' expertise, tools, and workflows. If data are not tracked properly during the lifecycle, it becomes infeasible to recreate an ML model from scratch or to explain to stakeholders how it was created. The main limitation of provenance tracking solutions is that they cannot cope with provenance capture and integration of domain and ML data processed in the multiple workflows in the lifecycle while keeping the provenance capture overhead low. To handle this problem, in this paper we contribute a detailed characterization of provenance data in the ML lifecycle in CSE; a new provenance data representation, called PROV-ML, built on top of W3C PROV and ML Schema; and extensions to a system that tracks provenance from multiple workflows to address the characteristics of ML and CSE, and to allow for provenance queries with a standard vocabulary. We show a practical use in a real case in the Oil and Gas industry, along with its evaluation using 48 GPUs in parallel.

Semantic Segmentation of Seismic Images

May 10, 2019

Abstract: Almost all work to understand Earth's subsurface on a large scale relies on the interpretation of seismic surveys by experts who segment the survey (usually a cube) into layers, a process that is very time-consuming. In this paper, we present a new deep neural network architecture specially designed to semantically segment seismic images with a minimal amount of training data. To achieve this, we make use of a transposed residual unit that replaces the traditional dilated convolution for the decode block. Also, instead of using a predefined shape for up-scaling, our network learns all the steps to upscale the features from the encoder. We train our neural network using the Penobscot 3D dataset, a real seismic dataset acquired offshore Nova Scotia, Canada. We compare our approach with two well-known deep neural network topologies: Fully Convolutional Network and U-Net. In our experiments, we show that our approach can achieve more than 99 percent on the mean intersection over union (mIOU) metric, outperforming the existing topologies. Moreover, our qualitative results show that the obtained model can produce masks very close to human interpretation with very little discontinuity.
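For readers unfamiliar with the mean intersection over union metric named in this abstract, the following is a minimal sketch of its common definition: per-class IoU averaged over the classes that occur in either mask. It is not the paper's evaluation code; the function name `mean_iou`, its parameters, and the toy masks are hypothetical, and the paper may average differently (for example, per image or including absent classes).

```python
from typing import List, Sequence


def mean_iou(pred: Sequence[Sequence[int]],
             target: Sequence[Sequence[int]],
             num_classes: int) -> float:
    """Average per-class IoU over classes present in pred or target.

    Both masks are 2D grids of integer class labels in [0, num_classes).
    """
    intersection: List[int] = [0] * num_classes
    union: List[int] = [0] * num_classes
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p == t:
                # Pixel agrees: counts once in both intersection and union.
                intersection[p] += 1
                union[p] += 1
            else:
                # Pixel disagrees: it enlarges the union of both classes.
                union[p] += 1
                union[t] += 1
    # Skip classes that appear in neither mask (their IoU is undefined).
    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    if not ious:
        raise ValueError("masks contain no labeled pixels")
    return sum(ious) / len(ious)


# Toy 2x3 masks with three classes (illustrative values only).
predicted = [[0, 0, 1],
             [1, 1, 2]]
reference = [[0, 1, 1],
             [1, 1, 2]]
score = mean_iou(predicted, reference, num_classes=3)
```

In this toy case the per-class IoUs are 1/2, 3/4, and 1, so the mean is 0.75.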

Netherlands Dataset: A New Public Dataset for Machine Learning in Seismic Interpretation

Mar 26, 2019

Abstract: Machine learning and, more specifically, deep learning algorithms have seen remarkable growth in their popularity and usefulness in recent years. This is arguably due to three main factors: powerful computers, new techniques to train deeper networks, and larger datasets. Although the first two are readily available in modern computers and ML libraries, the last one remains a challenge for many domains. Big data is a reality in almost all fields nowadays, and the geosciences are no exception. However, to achieve the success of general-purpose applications such as ImageNet, for which there are more than 14 million labeled images for 1000 target classes, we need not only more data but also more high-quality labeled data. In the Oil & Gas industry, confidentiality issues further hamper the sharing of datasets. In this work, we present the Netherlands interpretation dataset, a contribution to the development of machine learning in seismic interpretation. The Netherlands F3 dataset acquisition was carried out in the North Sea, offshore the Netherlands. The data is publicly available and contains post-stack data, 8 horizons, and well logs of 4 wells. For the purposes of our machine learning tasks, the original dataset was reinterpreted, generating 9 horizons separating different seismic facies intervals. The interpreted horizons were used to generate approximately 190,000 labeled images for inlines and crosslines. Finally, we present two deep learning applications in which the proposed dataset was employed and produced compelling results.
