Danesh Tarapore

Blind-Wayfarer: A Minimalist, Probing-Driven Framework for Resilient Navigation in Perception-Degraded Environments

Mar 10, 2025

Abstract: Navigating autonomous robots through dense forests and rugged terrain is especially daunting when exteroceptive sensors, such as cameras and LiDAR, fail under occlusion, low light, or sensor noise. We present Blind-Wayfarer, a probing-driven navigation framework inspired by maze-solving algorithms that relies primarily on a compass to robustly traverse complex, unstructured environments. In 1,000 simulated forest experiments, Blind-Wayfarer achieved a 99.7% success rate. In real-world tests in two distinct scenarios, using rover platforms of different sizes, our approach successfully escaped forest entrapments in all 20 trials. Remarkably, our framework also enabled a robot to escape a dense woodland, traveling from 45 m inside the forest to a paved pathway at its edge. These findings highlight the potential of probing-based methods for reliable navigation in challenging, perception-degraded field conditions. Videos and code are available on our website: https://sites.google.com/view/blind-wayfarer
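The abstract says only that Blind-Wayfarer is inspired by maze-solving algorithms and relies mainly on a compass; it does not spell out the probing procedure. To illustrate that family, the sketch below implements the classic Pledge algorithm on an occupancy grid. The robot follows its compass bearing until it is blocked, then follows the obstacle boundary while counting its net turns, and it resumes the bearing once the count returns to zero. This is a textbook maze-solving technique offered as an assumption, not the authors' method. The grid encoding ('#' for obstacles, with leaving the map counting as escape) and the name `pledge_escape` are hypothetical.

```python
from typing import List, Tuple

# Compass headings on a (row, col) grid, in clockwise order: 0=N, 1=E, 2=S, 3=W.
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


def pledge_escape(grid: List[str], start: Tuple[int, int], preferred: int = 0,
                  max_steps: int = 10_000) -> Tuple[bool, List[Tuple[int, int]]]:
    """Hypothetical Pledge-algorithm escape from a grid of '#' obstacles.

    Leaving the grid counts as escaping. `turns` is the net number of quarter
    turns made since leaving the preferred heading (right = +1, left = -1).
    """
    rows, cols = len(grid), len(grid[0])

    def inside(r: int, c: int) -> bool:
        return 0 <= r < rows and 0 <= c < cols

    def free(r: int, c: int) -> bool:
        return not inside(r, c) or grid[r][c] != "#"

    def ahead(pos: Tuple[int, int], h: int) -> Tuple[int, int]:
        return pos[0] + HEADINGS[h][0], pos[1] + HEADINGS[h][1]

    pos, heading, turns = start, preferred, 0
    path = [pos]
    for _ in range(max_steps):
        if not inside(*pos):
            return True, path
        if turns == 0:
            # Free mode: the heading equals the compass bearing; go straight until blocked.
            if free(*ahead(pos, heading)):
                pos = ahead(pos, heading)
                path.append(pos)
            else:
                heading, turns = (heading - 1) % 4, turns - 1
            continue
        # Wall-following mode, keeping the obstacle on the right-hand side.
        right = (heading + 1) % 4
        if free(*ahead(pos, right)):
            heading, turns = right, turns + 1
            pos = ahead(pos, heading)
            path.append(pos)
        elif free(*ahead(pos, heading)):
            pos = ahead(pos, heading)
            path.append(pos)
        else:
            heading, turns = (heading - 1) % 4, turns - 1
    return False, path
```

In a small U-shaped trap that is open to the south, heading straight north fails immediately. The turn counter lets the robot work its way around the trap and then leave along its bearing. Resuming the bearing only when the counter is zero, rather than whenever the robot happens to face north, is what prevents the endless circling that simpler compass-plus-wall-following rules can fall into.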

Collective Decision-Making on Task Allocation Feasibility

May 13, 2024

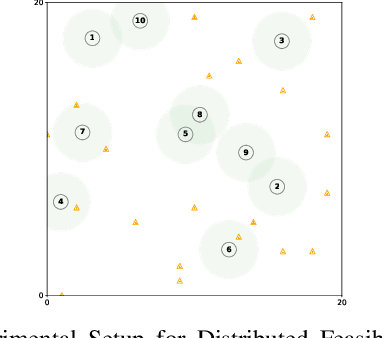

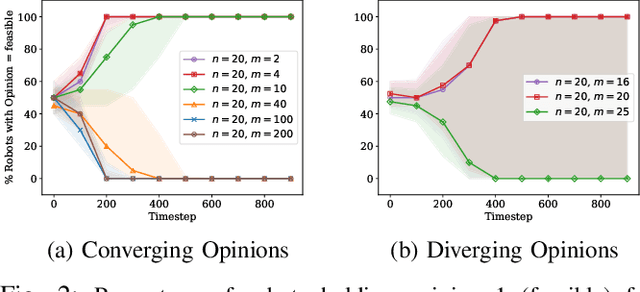

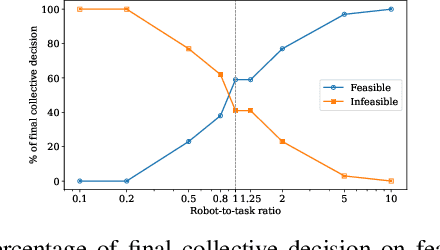

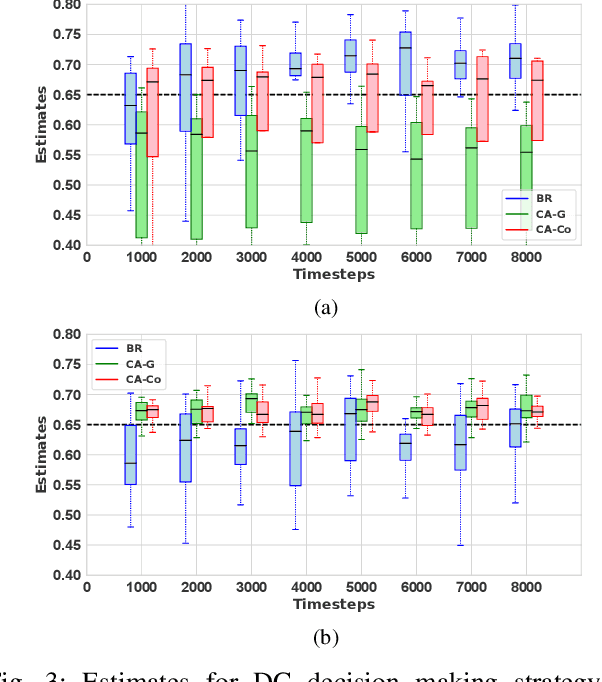

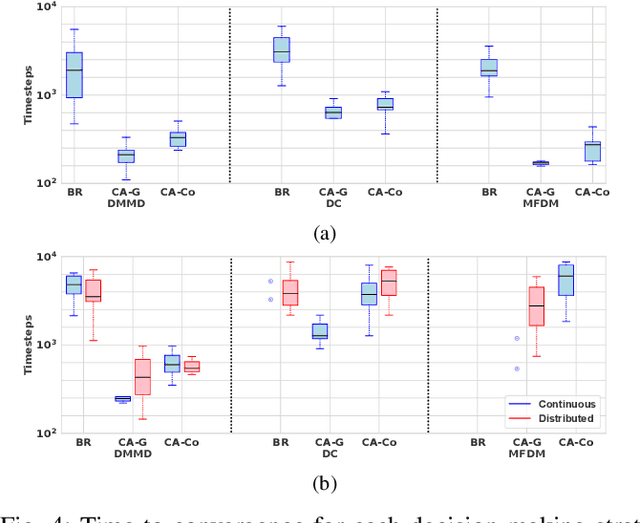

Abstract: Robot swarms could bring several advantages to real-world applications, but deploying them presents challenges in ensuring feasibility across diverse environments. Assessing the feasibility of new tasks for swarms is crucial to ensure the effective use of resources and to indicate whether a swarm solution suits a particular task. In this paper, we introduce the concept of distributed feasibility, in which the swarm collectively assesses the feasibility of task allocation based on local observations and interactions. We apply Direct Modulation of Majority-based Decisions as our collective decision-making strategy and show that, in a homogeneous setting, the swarm can collectively decide whether a given setup has high or low feasibility as long as the robot-to-task ratio is not near one.
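Direct Modulation of Majority-based Decisions (DMMD) has two ingredients. Each robot disseminates its current opinion for a time proportional to that opinion's quality, and it then adopts the majority opinion among the neighbours it has heard. The sketch below shows both ingredients for the binary high/low feasibility decision in a well-mixed swarm. Several choices are assumptions for illustration: the function names, the deterministic dissemination time (the original formulation draws it at random with a quality-proportional mean), uniform mixing, and random tie-breaking.

```python
import random
from collections import Counter
from typing import Dict, List


def dissemination_time(quality: float, scale: float = 10.0) -> float:
    """Time spent broadcasting an opinion, proportional to its quality.
    Simplification: DMMD usually samples this at random with this mean."""
    return scale * quality


def sample_heard(opinions: List[str], quality: Dict[str, float], k: int,
                 rng: random.Random) -> List[str]:
    """Hear k broadcasts in a well-mixed swarm (assumption). Robots that
    broadcast longer are proportionally more likely to be heard."""
    weights = [dissemination_time(quality[o]) for o in opinions]
    return rng.choices(opinions, weights=weights, k=k)


def majority_update(own: str, heard: List[str], rng: random.Random) -> str:
    """Adopt the most common opinion among `own` plus the opinions heard.
    Ties are broken uniformly at random."""
    counts = Counter([own] + heard)
    best = max(counts.values())
    winners = sorted(o for o, c in counts.items() if c == best)
    return rng.choice(winners)
```

Robots holding the higher-quality opinion broadcast for longer, so they are overheard more often, and repeated majority updates therefore drift toward the better option. This sketch does not model the abstract's finding that the decision becomes unreliable when the robot-to-task ratio is near one.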

Collective Decision Making in Communication-Constrained Environments

Jul 19, 2022

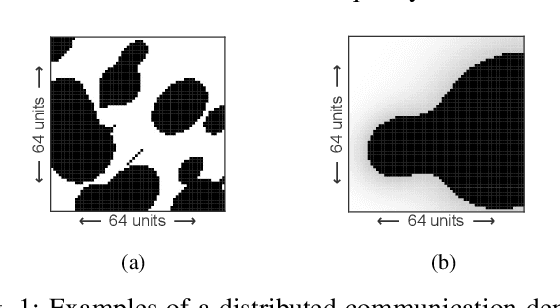

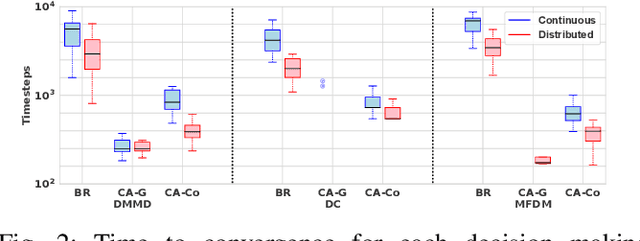

Abstract: One of the main tasks for autonomous robot swarms is to collectively decide on the best available option. Achieving this requires high-quality communication between agents, which may not always be available in real-world environments. In this paper, we introduce the communication-constrained collective decision-making problem, in which some areas of the environment limit the agents' ability to communicate, either by reducing the communication success rate or by blocking communication channels. We propose a decentralised algorithm that lets robot swarms map environmental features and improves collective decision making in communication-limited environments without prior knowledge of the communication landscape. Our results show that making a collective aware of its communication environment can make convergence at least three times faster under communication limitations, without sacrificing accuracy.
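The abstract does not describe the mapping algorithm itself. One way a swarm might map communication quality without prior knowledge is for each robot to keep, per grid cell, counts of attempted and successful messages. In the sketch below, these counts are stored per originating robot so that maps received from neighbours can be merged with an element-wise max, which is the merge rule of a grow-only counter. The class name `CommMap`, the grid discretisation, and the Beta(1, 1) prior are assumptions, not the paper's design.

```python
from collections import defaultdict
from typing import Dict, Tuple

Cell = Tuple[int, int]


class CommMap:
    """Hypothetical per-robot map of message success rates per grid cell.

    Counts are kept per originating robot and merged by element-wise max, so
    merging is idempotent: relaying the same map twice never double-counts.
    """

    def __init__(self, robot_id: int):
        self.robot_id = robot_id
        # counts[cell][robot_id] = (attempts, successes)
        self.counts: Dict[Cell, Dict[int, Tuple[int, int]]] = defaultdict(dict)

    def record(self, cell: Cell, success: bool) -> None:
        """Log one of this robot's own message attempts made from `cell`."""
        a, s = self.counts[cell].get(self.robot_id, (0, 0))
        self.counts[cell][self.robot_id] = (a + 1, s + int(success))

    def merge(self, other: "CommMap") -> None:
        """Keep the newest snapshot (most attempts) of each robot's counts."""
        for cell, per_robot in other.counts.items():
            for rid, (a, s) in per_robot.items():
                if a > self.counts[cell].get(rid, (0, 0))[0]:
                    self.counts[cell][rid] = (a, s)

    def success_rate(self, cell: Cell) -> float:
        """Posterior-mean success rate under a Beta(1, 1) prior (0.5 if unvisited)."""
        entries = self.counts.get(cell, {}).values()
        attempts = sum(a for a, _ in entries)
        successes = sum(s for _, s in entries)
        return (successes + 1) / (attempts + 2)
```

Because the merge is idempotent, maps can be gossiped repeatedly through the swarm without inflating the counts. A robot could then, for example, avoid broadcasting its opinion from cells with a low estimated success rate, although this use is illustrative only.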

Resilient robot teams: a review integrating decentralised control, change-detection, and learning

Apr 21, 2022

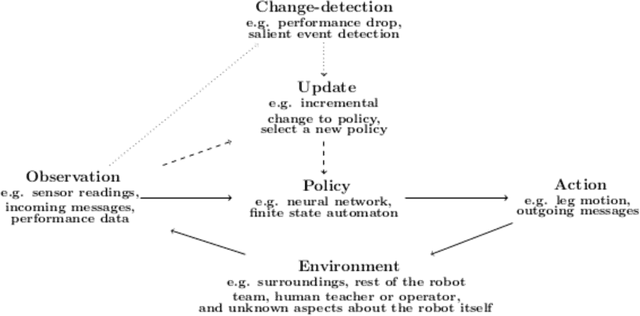

Abstract: Purpose of review: This paper reviews opportunities and challenges for decentralised control, change-detection, and learning in the context of resilient robot teams. Recent findings: Exogenous fault detection methods can provide a generic detection or a specific diagnosis with a recovery solution. Robot teams can perform active and distributed sensing for detecting changes in the environment, including identifying and tracking dynamic anomalies, as well as collaboratively mapping dynamic environments. Resilient methods for decentralised control have been developed in learning perception-action-communication loops, multi-agent reinforcement learning, embodied evolution, offline evolution with online adaptation, explicit task allocation, and stigmergy in swarm robotics. Summary: Remaining challenges for resilient robot teams are integrating change-detection and trial-and-error learning methods, obtaining reliable performance evaluations under constrained evaluation time, improving the safety of resilient robot teams, theoretical results demonstrating rapid adaptation to given environmental perturbations, and designing realistic and compelling case studies.

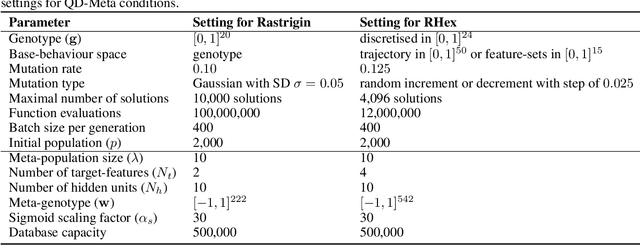

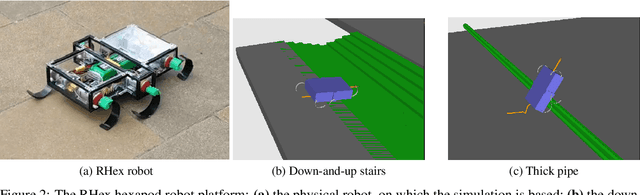

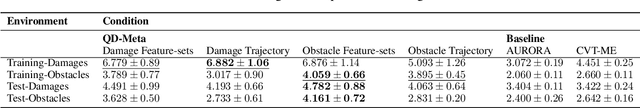

Quality-Diversity Meta-Evolution: customising behaviour spaces to a meta-objective

Sep 08, 2021

Abstract: Quality-Diversity (QD) algorithms evolve behaviourally diverse and high-performing solutions. To illuminate the elite solutions for a space of behaviours, QD algorithms require the definition of a suitable behaviour space. If the behaviour space is high-dimensional, a suitable dimensionality reduction technique is required to maintain a limited number of behavioural niches. While current methodologies for automated behaviour spaces focus on changing the geometry or on unsupervised learning, there remains a need for customising behavioural diversity to a particular meta-objective specified by the end-user. In the newly emerging framework of QD Meta-Evolution, or QD-Meta for short, one evolves a population of QD algorithms, each with different algorithmic and representational characteristics, to optimise the algorithms and their resulting archives to a user-defined meta-objective. Despite promising results compared to traditional QD algorithms, QD-Meta has yet to be compared to state-of-the-art behaviour space automation methods such as Centroidal Voronoi Tessellations Multi-dimensional Archive of Phenotypic Elites Algorithm (CVT-MAP-Elites) and Autonomous Robots Realising their Abilities (AURORA). This paper performs an empirical study of QD-Meta on function optimisation and multilegged robot locomotion benchmarks. Results demonstrate that QD-Meta archives provide improved average performance and faster adaptation to a priori unknown changes to the environment when compared to CVT-MAP-Elites and AURORA. A qualitative analysis shows how the resulting archives are tailored to the meta-objectives provided by the end-user.
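CVT-MAP-Elites, one of the baselines named above, partitions the behaviour space into niches whose centroids come from a centroidal Voronoi tessellation, and it keeps the fittest solution found in each niche. In the sketch below, the tessellation is approximated with a few iterations of k-means (Lloyd's algorithm) on uniform samples. The sketch illustrates the baseline's archive logic only, not QD-Meta. The sample count, iteration count, unit-hypercube behaviour space, and class name `CVTArchive` are assumptions.

```python
from typing import Any, List

import numpy as np


def cvt_centroids(n_niches: int, dim: int, n_samples: int = 5000,
                  iters: int = 20, seed: int = 0) -> np.ndarray:
    """Approximate a CVT of [0, 1]^dim with Lloyd's algorithm on uniform samples."""
    rng = np.random.default_rng(seed)
    samples = rng.random((n_samples, dim))
    centroids = samples[rng.choice(n_samples, n_niches, replace=False)].copy()
    for _ in range(iters):
        dists = np.linalg.norm(samples[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for k in range(n_niches):
            members = samples[labels == k]
            if len(members):
                centroids[k] = members.mean(axis=0)
    return centroids


class CVTArchive:
    """One elite per niche; a niche is the Voronoi cell of its centroid."""

    def __init__(self, centroids: np.ndarray):
        self.centroids = centroids
        self.fitness = np.full(len(centroids), -np.inf)
        self.solutions: List[Any] = [None] * len(centroids)

    def niche(self, behaviour: np.ndarray) -> int:
        return int(np.linalg.norm(self.centroids - behaviour, axis=1).argmin())

    def add(self, solution: Any, behaviour, fitness: float) -> bool:
        """Insert `solution` if it beats the current elite of its niche."""
        k = self.niche(np.asarray(behaviour, dtype=float))
        if fitness > self.fitness[k]:
            self.fitness[k] = fitness
            self.solutions[k] = solution
            return True
        return False
```

With fixed centroids at (0, 0) and (1, 1), a weaker solution that lands in an occupied niche is rejected, while a solution in an empty niche is always kept.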

Large-scale, Dynamic and Distributed Coalition Formation with Spatial and Temporal Constraints

Jun 01, 2021

Abstract: The Coalition Formation with Spatial and Temporal constraints Problem (CFSTP) is a multi-agent task allocation problem in which a few agents must perform many tasks, each with its own deadline and workload. To maximize the number of completed tasks, the agents need to cooperate by forming, disbanding, and reforming coalitions. The original mathematical programming formulation of the CFSTP is difficult to implement, since it is lengthy and based on the problematic Big-M method. In this paper, we propose a compact and easy-to-implement formulation. Moreover, we design D-CTS, a distributed version of the state-of-the-art CFSTP algorithm. Using public London Fire Brigade records, we create a dataset with 347,588 tasks and a test framework that simulates the mobilization of firefighters in dynamic environments. In problems with up to 150 agents and 3,000 tasks, compared to DSA-SDP, a state-of-the-art distributed algorithm, D-CTS completes 3.79% ± [42.22%, 1.96%] more tasks and is one order of magnitude more efficient in terms of communication overhead and time complexity. D-CTS sets the first large-scale, dynamic, and distributed CFSTP benchmark.
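In the CFSTP, a task counts as completed only if its coalition finishes the workload before the deadline, and agents that arrive at different times start contributing at different times. The sketch below computes the completion time under a simplifying assumption: every agent works at the same constant rate once on site. In general, the work rate may depend on which agents form the coalition. The names `completion_time` and `completes` are hypothetical.

```python
from typing import List, Optional


def completion_time(arrivals: List[float], workload: float,
                    rate: float = 1.0) -> Optional[float]:
    """Earliest time the workload is finished by agents arriving at `arrivals`.

    Assumption: each agent adds `rate` units of work per time unit once it is
    on site, so progress is piecewise linear between successive arrivals.
    """
    times = sorted(arrivals)
    if not times:
        return None
    done = 0.0
    for i, t in enumerate(times):
        n = i + 1  # agents working from time t onwards
        next_t = times[i + 1] if i + 1 < len(times) else float("inf")
        finish = t + (workload - done) / (n * rate)
        if finish <= next_t:
            return finish
        done += n * rate * (next_t - t)
    return None


def completes(arrivals: List[float], workload: float, deadline: float) -> bool:
    """True if the coalition finishes the task no later than its deadline."""
    ct = completion_time(arrivals, workload)
    return ct is not None and ct <= deadline
```

For example, with one agent arriving at time 0, a second at time 2, and a workload of 6, two units are done by time 2 and the remaining four take two more time units with both agents working, so the task finishes at time 4.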

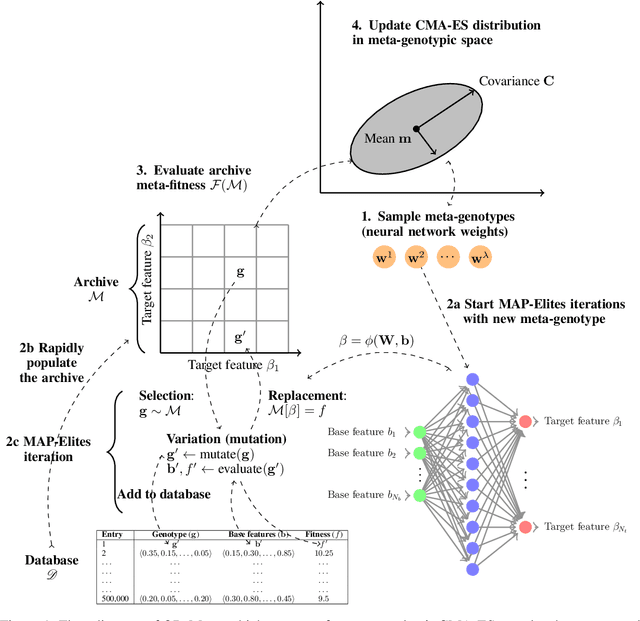

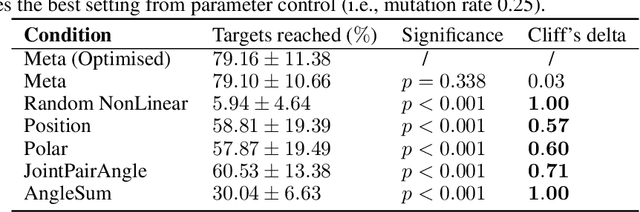

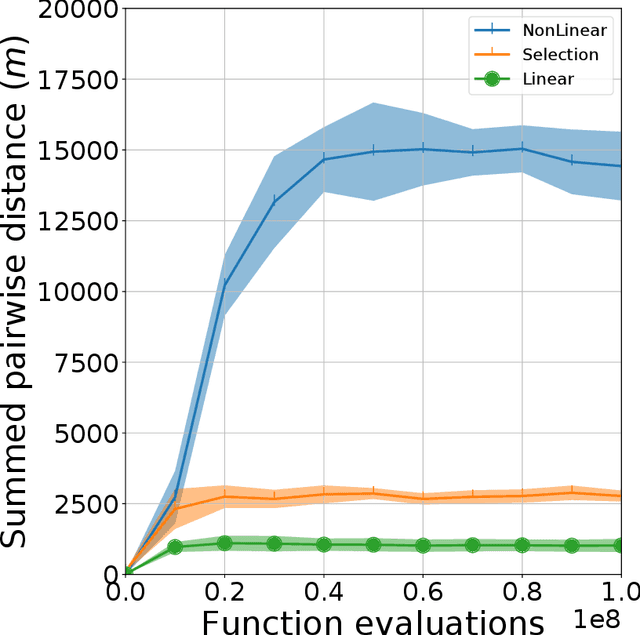

On the use of feature-maps and parameter control for improved quality-diversity meta-evolution

May 21, 2021

Abstract: In Quality-Diversity (QD) algorithms, which evolve a behaviourally diverse archive of high-performing solutions, the behaviour space is a difficult design choice that should be tailored to the target application. In QD meta-evolution, one evolves a population of QD algorithms to optimise the behaviour space based on an archive-level objective, the meta-fitness. This paper proposes an improved meta-evolution system in which (i) the database used to rapidly populate new archives is reformulated to prevent loss of quality-diversity; (ii) the linear transformation of base-features is generalised to a feature-map, a function of the base-features parametrised by the meta-genotype; and (iii) the mutation rate of the QD algorithm and the number of generations per meta-generation are controlled dynamically. Experiments on an 8-joint planar robot arm compare feature-maps (linear, non-linear, and feature-selection), parameter control strategies (static, endogenous, reinforcement learning, and annealing), and traditional MAP-Elites variants, for a total of 49 experimental conditions. Results reveal that non-linear and feature-selection feature-maps yield a 15-fold and a 3-fold improvement in meta-fitness, respectively, over linear feature-maps. Reinforcement learning ranks among the top parameter control methods. Finally, our approach allows the robot arm to recover a reach of over 80% for most types of damage and at least 60% for severe damage.
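A feature-map, as described above, turns a solution's base-features into behaviour-space coordinates, and its parameters are encoded in the meta-genotype. The sketch below contrasts the three families compared in the paper: linear, non-linear, and feature-selection. The exact non-linear form (a one-hidden-layer network with a sigmoid output) and all function names are assumptions for illustration.

```python
from typing import Sequence

import numpy as np


def linear_map(f: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Linear feature-map: behaviour = W @ base_features."""
    return W @ f


def nonlinear_map(f: np.ndarray, W1: np.ndarray, W2: np.ndarray) -> np.ndarray:
    """Hypothetical non-linear feature-map: tanh hidden layer, sigmoid output
    so that every behaviour coordinate lies in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(W2 @ np.tanh(W1 @ f))))


def selection_map(f: np.ndarray, idx: Sequence[int]) -> np.ndarray:
    """Feature-selection map: keep only the base-features listed in `idx`."""
    return f[np.asarray(idx)]
```

Under meta-evolution, each meta-genotype would be decoded into `W`, `W1` and `W2`, or `idx`. Each candidate map would then be scored by the meta-fitness of the archive it produces.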

Multi-Agent Routing and Scheduling Through Coalition Formation

May 02, 2021

Abstract: In task allocation for real-time domains, such as disaster response, a limited number of agents is deployed across a large area to carry out numerous tasks, each with its own prerequisites, profit, time window, and workload. To maximize profits while minimizing time penalties, agents need to cooperate by forming, disbanding, and reforming coalitions. In this paper, we name this problem Multi-Agent Routing and Scheduling through Coalition formation (MARSC) and show that it generalizes the important Team Orienteering Problem with Time Windows. We propose a binary integer program and an anytime, scalable heuristic to solve it. Using public London Fire Brigade records, we create a dataset with 347,588 tasks and a test framework that simulates the mobilization of firefighters. In problems with up to 150 agents and 3,000 tasks, our heuristic finds solutions up to 3.25 times better than the Earliest Deadline First approach commonly used in real-time systems. Our results constitute the first large-scale benchmark for the MARSC problem.
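The baseline named above, Earliest Deadline First (EDF), always serves the released task whose deadline is soonest. The sketch below is a simplified single-agent, non-preemptive version with time windows and workloads. It ignores travel times, profits, prerequisites, and coalitions, so it illustrates the baseline's ordering rule rather than the paper's heuristic or its exact EDF implementation. The `Task` fields and the rule of dropping a task that can no longer finish in its window are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    name: str
    release: float   # start of the time window
    deadline: float  # end of the time window
    workload: float  # time units of work for a single agent


def edf_schedule(tasks: List[Task]) -> List[str]:
    """Non-preemptive EDF for one agent; returns names of tasks finished on time."""
    pending = sorted(tasks, key=lambda t: t.release)
    now, ready, done = 0.0, [], []
    while pending or ready:
        # Move every task released by `now` into the ready set.
        while pending and pending[0].release <= now:
            ready.append(pending.pop(0))
        if not ready:
            now = pending[0].release  # idle until the next release
            continue
        ready.sort(key=lambda t: t.deadline)
        task = ready.pop(0)
        if now + task.workload <= task.deadline:
            now += task.workload
            done.append(task.name)
        # Otherwise the task is dropped: it can no longer finish in its window.
    return done
```

EDF is simple and myopic: it looks only at deadlines, not at profit or location. This may help explain why a heuristic that also plans routes and coalitions can do substantially better on MARSC instances.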

Rapidly adapting robot swarms with Swarm Map-based Bayesian Optimisation

Dec 21, 2020

Abstract: Rapid performance recovery from unforeseen environmental perturbations remains a grand challenge in swarm robotics. To address this challenge, we investigate a behaviour adaptation approach in which one searches an archive of controllers for potential recovery solutions. To apply behaviour adaptation in swarm robotic systems, we propose two algorithms: (i) Swarm Map-based Optimisation (SMBO), which selects and evaluates one controller at a time for a homogeneous swarm in a centralised fashion; and (ii) Swarm Map-based Optimisation Decentralised (SMBO-Dec), which performs asynchronous batch-based Bayesian optimisation to simultaneously explore different controllers for groups of robots in the swarm. We set up foraging experiments with a variety of disturbances: faults injected into the proximity sensors, ground sensors, and actuators of individual robots, with 100 unique combinations for each type. We also investigate disturbances in the operating environment of the swarm, where the swarm must adapt to drastic changes in the number of resources available in the environment, and to one of the robots behaving disruptively towards the rest of the swarm, with 30 unique conditions for each such perturbation. SMBO and SMBO-Dec prove viable: they compare favourably to variants of random search and gradient descent and to various ablations, and they improve performance by up to 80% relative to the performance at the time of fault injection within at most 30 evaluations.
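The abstract does not give SMBO's internals, but map-based Bayesian optimisation in general, as in Intelligent Trial and Error, proceeds in three steps. First, an archive of controllers, each with a behaviour descriptor and a performance predicted before the fault, serves as the prior mean of a Gaussian process. Second, after each real evaluation, the GP corrects the predictions for controllers with nearby descriptors. Third, the next controller is chosen by an upper-confidence-bound acquisition. The sketch below implements that selection step for a centralised, one-at-a-time setting like SMBO's. The squared-exponential kernel, its length-scale, `kappa`, the noise level, and the name `select_next` are assumptions.

```python
from typing import List

import numpy as np


def rbf(A: np.ndarray, B: np.ndarray, length: float = 0.3) -> np.ndarray:
    """Squared-exponential kernel between rows of A (n, d) and B (t, d)."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * d2 / length ** 2)


def select_next(descriptors: np.ndarray, prior_perf: np.ndarray,
                tested_idx: List[int], observed: List[float],
                kappa: float = 0.5, noise: float = 1e-3) -> int:
    """Index of the archive controller with the highest UCB score.

    The GP models the residual between observed and archive-predicted
    performance, so untested controllers near a poor result are revised down.
    """
    mu = prior_perf.astype(float).copy()
    var = np.ones(len(descriptors))
    if tested_idx:
        X = descriptors[tested_idx]
        K = rbf(X, X) + noise * np.eye(len(tested_idx))
        k = rbf(descriptors, X)                        # (n, t)
        resid = np.asarray(observed, dtype=float) - prior_perf[tested_idx]
        mu = mu + k @ np.linalg.solve(K, resid)        # posterior mean
        v = np.linalg.solve(K, k.T)                    # (t, n)
        var = 1.0 - np.sum(k * v.T, axis=1)            # posterior variance
    return int(np.argmax(mu + kappa * np.sqrt(np.maximum(var, 0.0))))
```

Suppose the controller with the best pre-fault prediction scores zero after a fault. Its neighbours' predictions drop with it, and the acquisition moves to a controller that is still promising but behaviourally different. This is how such methods can recover within a few tens of evaluations.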

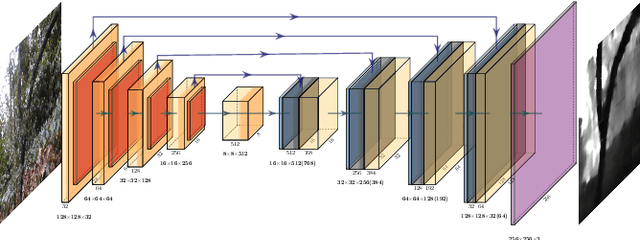

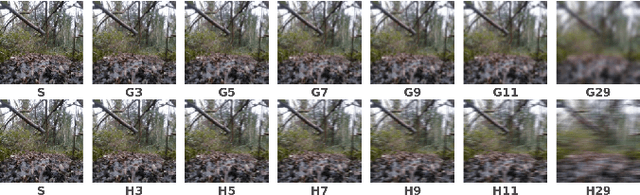

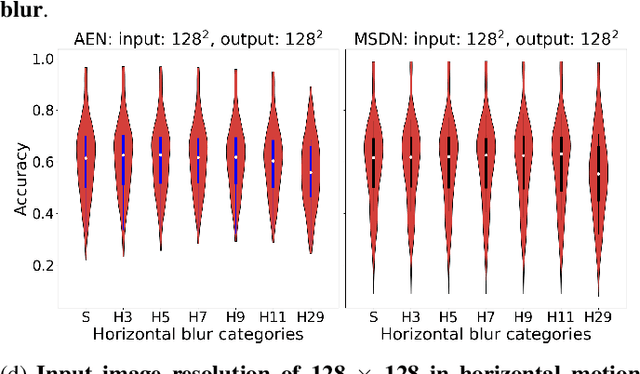

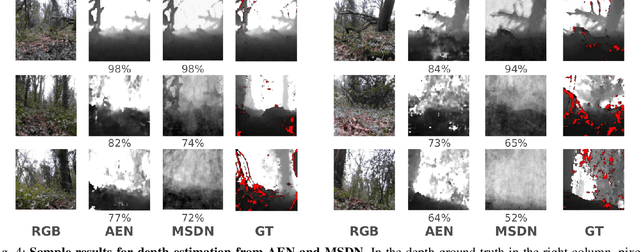

Depth estimation on embedded computers for robot swarms in forest

Dec 05, 2020

Abstract: To date, robot swarms are not ready for low-cost autonomous navigation, such as path planning and obstacle detection, on the forest floor. Advances in depth sensing and embedded computing hardware pave the way for swarms of terrestrial robots. This research aims to improve the situation by developing a low-cost vision system that lets small ground robots rapidly perceive terrain. We develop two depth estimation models and evaluate them on a Raspberry Pi 4 and a Jetson Nano in terms of the models' accuracy, runtime, and size, as well as the memory consumption, power draw, temperature, and cost of the two embedded on-board computers. Our results show that an auto-encoder network deployed on the Raspberry Pi 4 runs with a power consumption of 3.4 W, a memory consumption of about 200 MB, and a mean runtime of 13 ms, which can meet our requirements for a low-cost robot swarm. Our analysis also indicates that a multi-scale deep network performs better at predicting depth maps from RGB images blurred by camera motion. This paper describes depth estimation models trained on our own dataset recorded in a forest, and their performance on embedded on-board computers.
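The abstract reports accuracy without naming the metrics. Monocular depth estimation is commonly evaluated with absolute relative error, root-mean-square error, and the δ < 1.25 threshold accuracy. The sketch below computes these metrics, assuming metric ground-truth depth maps in which zero marks missing pixels. Whether the paper used these exact metrics is not stated here.

```python
from typing import Dict

import numpy as np


def depth_metrics(pred: np.ndarray, gt: np.ndarray, eps: float = 1e-6) -> Dict[str, float]:
    """AbsRel, RMSE and delta<1.25 accuracy over pixels with valid ground truth."""
    valid = gt > eps                      # assumption: 0 marks missing depth
    p = np.maximum(pred[valid], eps)      # avoid division by zero in the ratio
    g = gt[valid]
    abs_rel = float(np.mean(np.abs(p - g) / g))
    rmse = float(np.sqrt(np.mean((p - g) ** 2)))
    ratio = np.maximum(p / g, g / p)
    delta1 = float(np.mean(ratio < 1.25))
    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}
```

AbsRel penalises errors relative to the true distance, which suits forest scenes where nearby obstacles matter most. RMSE is dominated by large absolute errors at long range.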