Dan Barnes

Under the Radar: Learning to Predict Robust Keypoints for Odometry Estimation and Metric Localisation in Radar

Feb 24, 2020

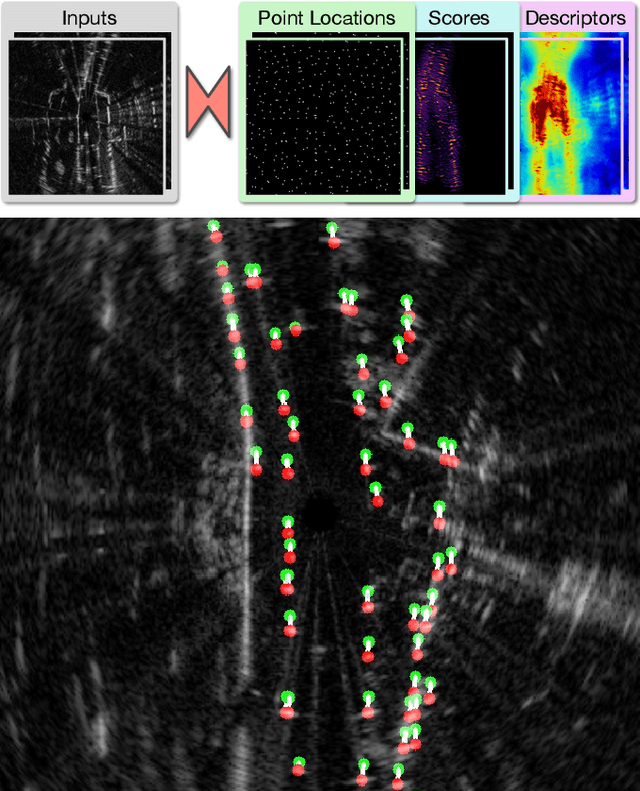

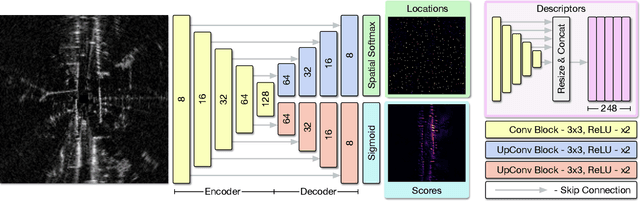

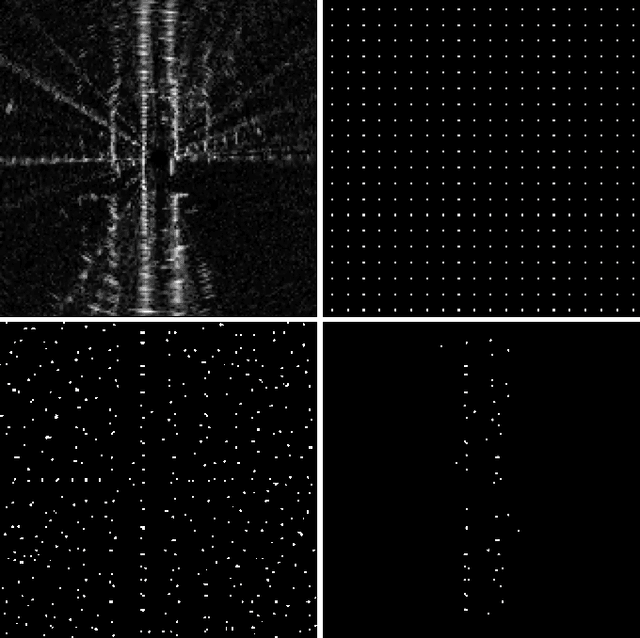

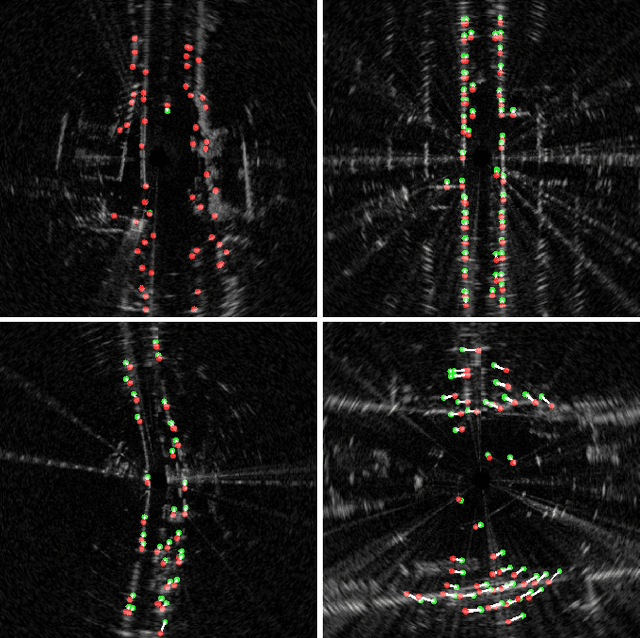

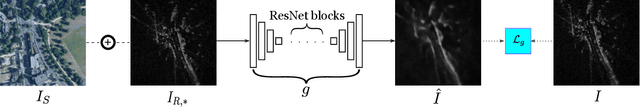

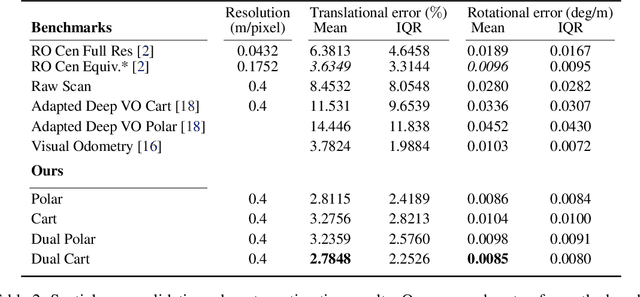

Abstract: This paper presents a self-supervised framework for learning to detect robust keypoints for odometry estimation and metric localisation in radar. By embedding a differentiable point-based motion estimator inside our architecture, we learn keypoint locations, scores and descriptors from localisation error alone. This approach avoids imposing any assumption on what makes a robust keypoint and crucially allows them to be optimised for our application. Furthermore, the architecture is sensor agnostic and can be applied to most modalities. We run experiments on 280 km of real-world driving from the Oxford Radar RobotCar Dataset and improve on the state-of-the-art in point-based radar odometry, reducing errors by up to 45% whilst running an order of magnitude faster, simultaneously solving metric loop closures. Combining these outputs, we provide a framework capable of full mapping and localisation with radar in urban environments.
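To make the idea of a point-based motion estimator concrete, here is a minimal sketch of a weighted 2D rigid alignment solved in closed form with an SVD (the Kabsch method). It assumes matched keypoint pairs and per-match weights are already available; the function name `weighted_rigid_transform_2d` and its arguments are hypothetical, and this is not claimed to be the estimator used in the paper. Every step is a differentiable matrix operation, which is what would let a localisation error propagate back to keypoint positions and scores.

```python
import numpy as np

def weighted_rigid_transform_2d(src, dst, weights):
    """Estimate R (2x2) and t (2,) minimising sum_i w_i * ||R @ src_i + t - dst_i||^2.

    src, dst: (N, 2) arrays of matched points; weights: (N,) non-negative scores.
    Illustrative only: a standard weighted Kabsch solve, not the paper's code.
    """
    w = weights / weights.sum()
    # Weighted centroids of both point sets.
    src_mean = (w[:, None] * src).sum(axis=0)
    dst_mean = (w[:, None] * dst).sum(axis=0)
    src_c = src - src_mean
    dst_c = dst - dst_mean
    # Weighted cross-covariance between the centred point sets.
    H = (w[:, None] * src_c).T @ dst_c
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that det(R) = +1.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = dst_mean - R @ src_mean
    return R, t
```

With noise-free correspondences the sketch recovers the applied rotation and translation exactly, whatever positive weights are used; with noisy matches, low-weight pairs contribute less to the estimate.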

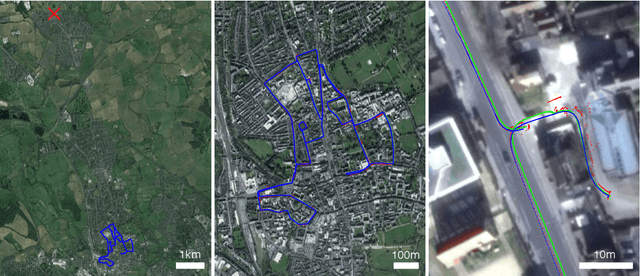

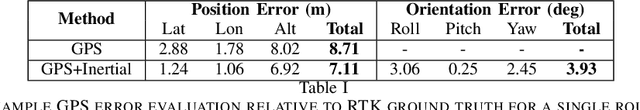

Real-time Kinematic Ground Truth for the Oxford RobotCar Dataset

Feb 24, 2020

Abstract: We describe the release of reference data towards a challenging long-term localisation and mapping benchmark based on the large-scale Oxford RobotCar Dataset. The release includes 72 traversals of a route through Oxford, UK, gathered in all illumination, weather and traffic conditions, and is representative of the conditions an autonomous vehicle would be expected to operate reliably in. Using post-processed raw GPS, IMU, and static GNSS base station recordings, we have produced a globally-consistent centimetre-accurate ground truth for the entire year-long duration of the dataset. Coupled with a planned online benchmarking service, we hope to enable quantitative evaluation and comparison of different localisation and mapping approaches focusing on long-term autonomy for road vehicles in urban environments challenged by changing weather.

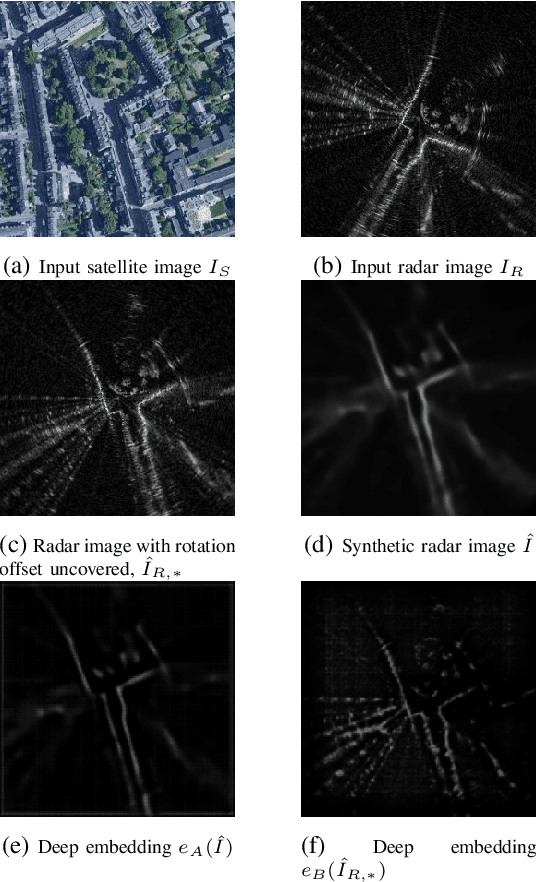

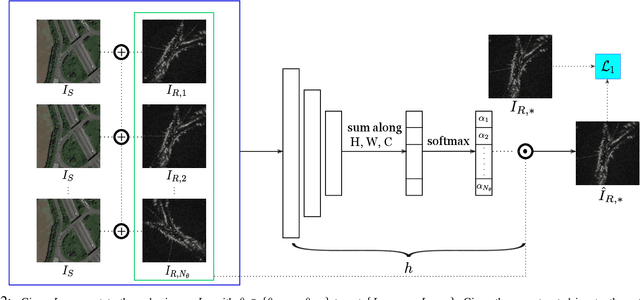

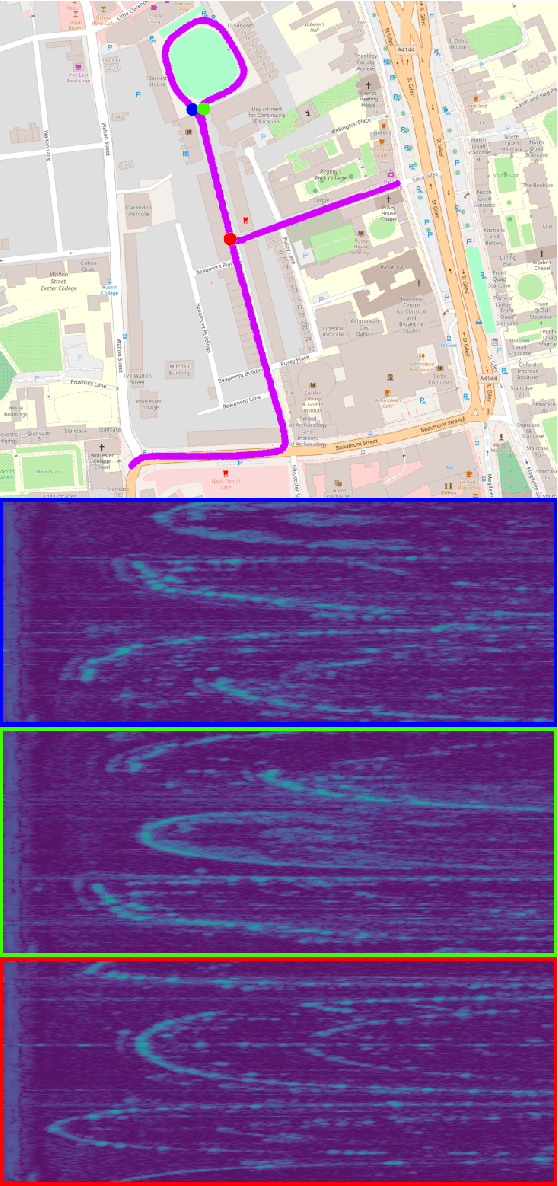

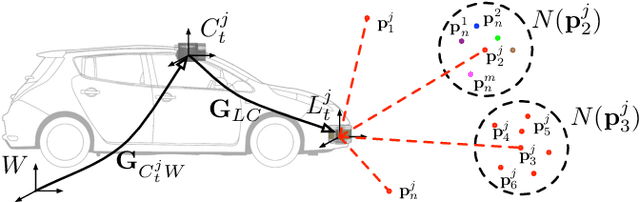

RSL-Net: Localising in Satellite Images From a Radar on the Ground

Feb 06, 2020

Abstract: This paper is about localising a vehicle in an overhead image using FMCW radar mounted on a ground vehicle. FMCW radar offers extraordinary promise and efficacy for vehicle localisation. It is impervious to all weather types and lighting conditions. However, the complexity of the interactions between millimetre radar wave and the physical environment makes it a challenging domain. Infrastructure-free large-scale radar-based localisation is in its infancy. Typically here a map is built and suitable techniques, compatible with the nature of the sensor, are brought to bear. In this work we eschew the need for a radar-based map; instead we simply use an overhead image -- a resource readily available everywhere. This paper introduces a method that not only naturally deals with the complexity of the signal type but does so in the context of cross-modal processing.

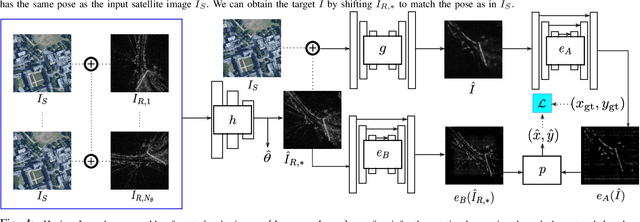

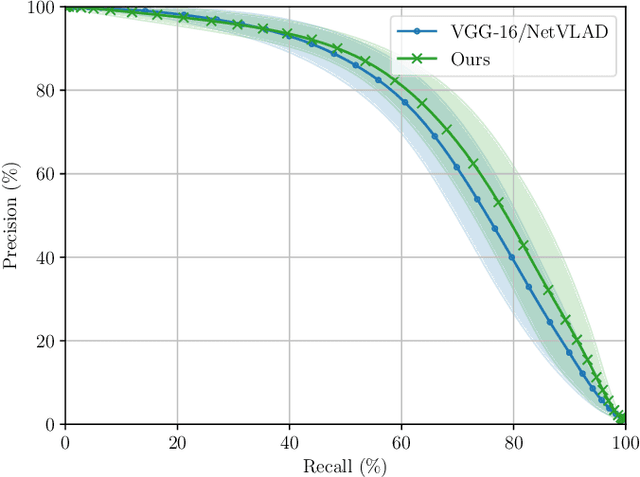

Kidnapped Radar: Topological Radar Localisation using Rotationally-Invariant Metric Learning

Jan 26, 2020

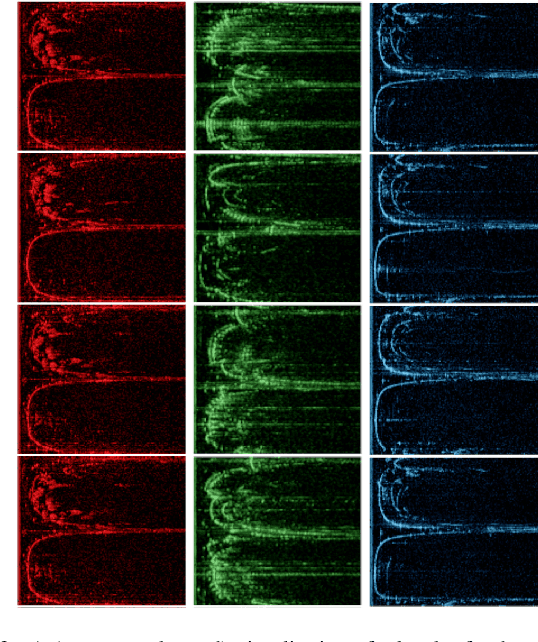

Abstract: This paper presents a system for robust, large-scale topological localisation using Frequency-Modulated Continuous-Wave (FMCW) scanning radar. We learn a metric space for embedding polar radar scans using CNN and NetVLAD architectures traditionally applied to the visual domain. However, we tailor the feature extraction for more suitability to the polar nature of radar scan formation using cylindrical convolutions, anti-aliasing blurring, and azimuth-wise max-pooling; all in order to bolster the rotational invariance. The enforced metric space is then used to encode a reference trajectory, serving as a map, which is queried for nearest neighbours (NNs) for recognition of places at run-time. We demonstrate the performance of our topological localisation system over the course of many repeat forays using the largest radar-focused mobile autonomy dataset released to date, totalling 280 km of urban driving, a small portion of which we also use to learn the weights of the modified architecture. As this work represents a novel application for FMCW radar, we analyse the utility of the proposed method via a comprehensive set of metrics which provide insight into the efficacy when used in a realistic system, showing improved performance over the root architecture even in the face of random rotational perturbation.
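As a rough illustration of why azimuth-wise max-pooling helps with rotational invariance, the sketch below treats a polar scan as an array of shape (azimuths, range bins), where a rotation of the sensor corresponds to a circular shift along the azimuth axis. Taking the maximum over that axis gives the same result for any such shift. The nearest-neighbour lookup is a plain Euclidean search. The names `azimuth_max_pool` and `nearest_neighbour` are hypothetical, and the pooling here is applied to a raw array rather than to learned CNN features as in the paper.

```python
import numpy as np

def azimuth_max_pool(polar_features):
    """Collapse the azimuth axis (axis 0) of an (azimuths, range_bins) array by max.

    A circular shift along axis 0 only reorders the values in each column,
    so the per-column maximum is unchanged.
    """
    return polar_features.max(axis=0)

def nearest_neighbour(query, reference_embeddings):
    """Return the index of the reference embedding closest to the query.

    query: (D,) vector; reference_embeddings: (M, D) array acting as the map.
    Uses Euclidean distance, an assumption made for this sketch.
    """
    dists = np.linalg.norm(reference_embeddings - query, axis=1)
    return int(np.argmin(dists))
```

In this toy setting, a map is a stack of pooled reference scans, and a rotated revisit of a mapped place pools to exactly the same vector as its reference, so the lookup returns that place.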

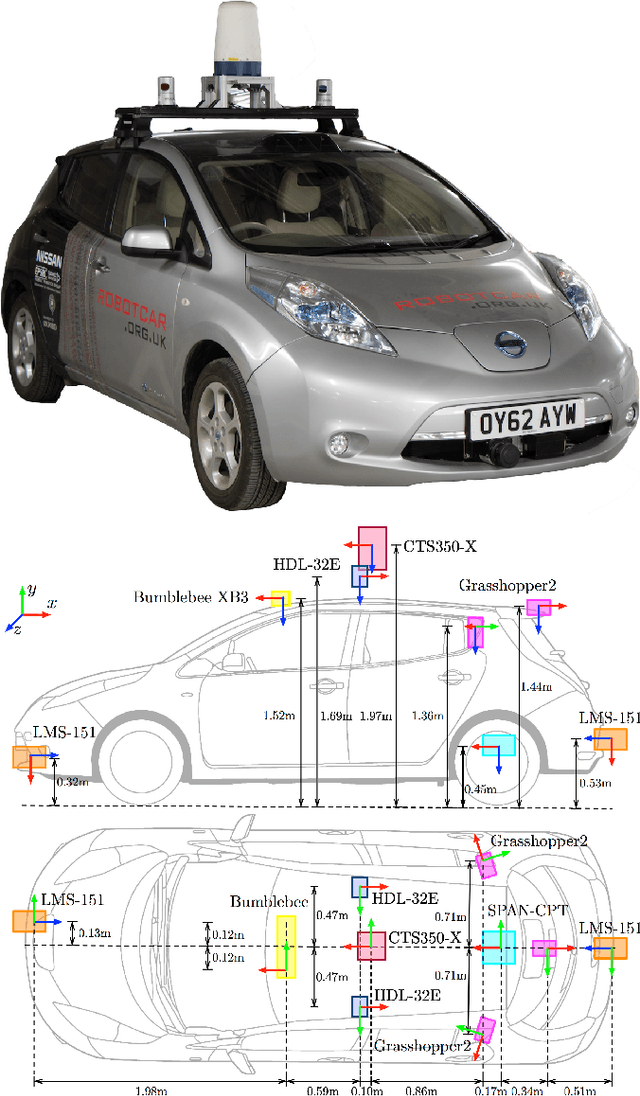

The Oxford Radar RobotCar Dataset: A Radar Extension to the Oxford RobotCar Dataset

Sep 12, 2019

Abstract: In this paper we present The Oxford Radar RobotCar Dataset, a new dataset for researching scene understanding using Millimetre-Wave FMCW scanning radar data. The target application is autonomous vehicles where this modality remains unencumbered by environmental conditions such as fog, rain, snow, or lens flare, which typically challenge other sensor modalities such as vision and LIDAR. The data were gathered in January 2019 over thirty-two traversals of a central Oxford route spanning a total of 280 km of urban driving. It encompasses a variety of weather, traffic, and lighting conditions. This 4.7 TB dataset consists of over 240,000 scans from a Navtech CTS350-X radar and 2.4 million scans from two Velodyne HDL-32E 3D LIDARs; along with six cameras, two 2D LIDARs, and a GPS/INS receiver. In addition, we release ground truth optimised radar odometry to provide an additional impetus to research in this domain. The full dataset is available for download at: ori.ox.ac.uk/datasets/radar-robotcar-dataset

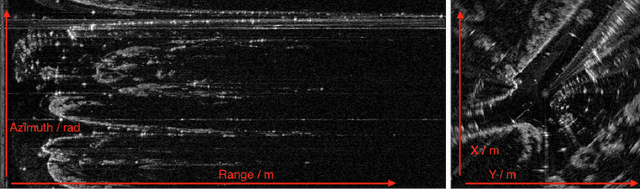

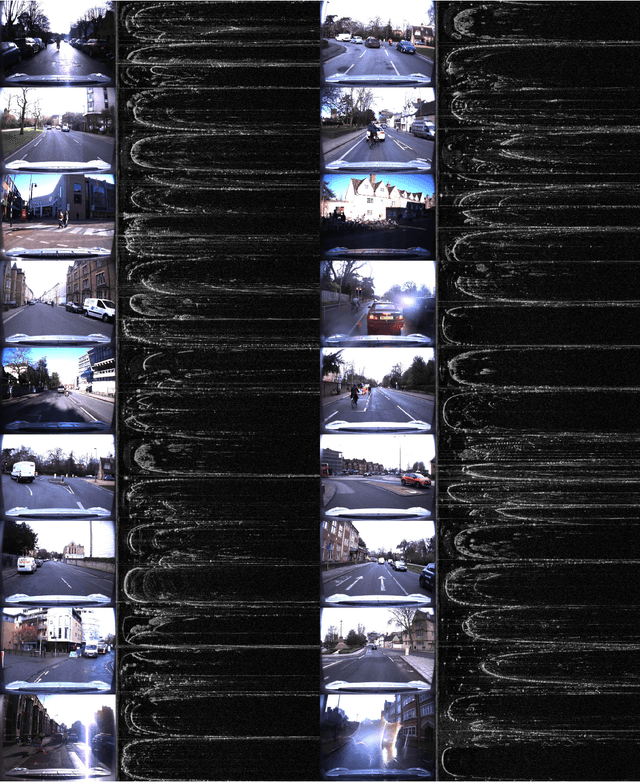

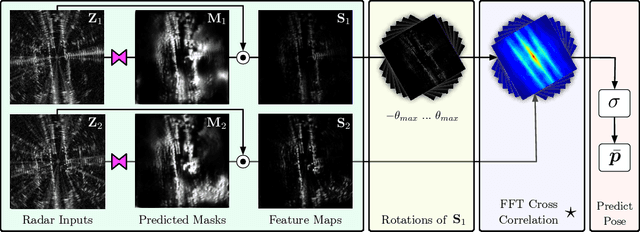

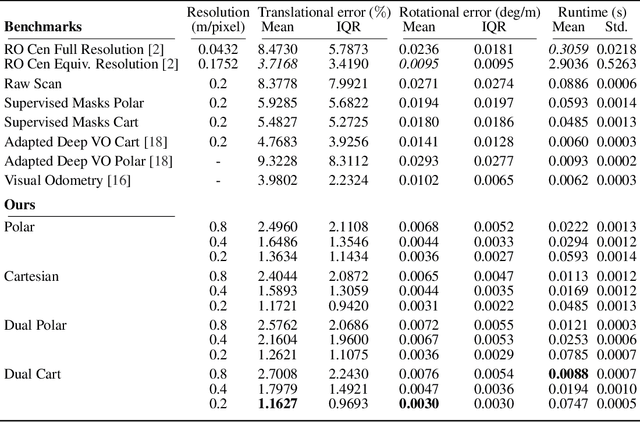

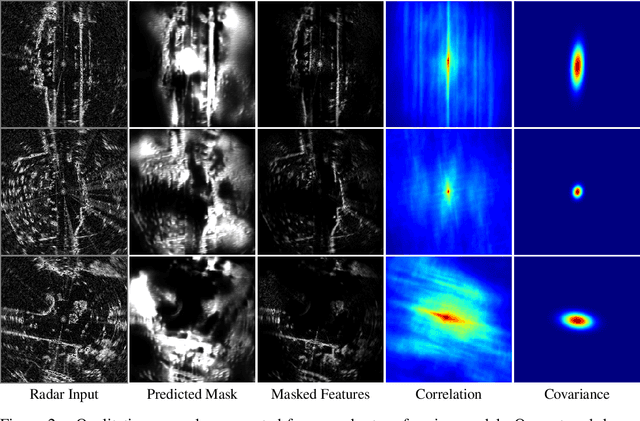

Masking by Moving: Learning Distraction-Free Radar Odometry from Pose Information

Sep 12, 2019

Abstract: This paper presents an end-to-end radar odometry system which delivers robust, real-time pose estimates based on a learned embedding space free of sensing artefacts and distractor objects. The system deploys a fully differentiable, correlation-based radar matching approach. This provides the same level of interpretability as established scan-matching methods and allows for a principled derivation of uncertainty estimates. The system is trained in a (self-)supervised way using only previously obtained pose information as a training signal. Using 280 km of urban driving data, we demonstrate that our approach outperforms the previous state-of-the-art in radar odometry by reducing errors by up to 68% whilst running an order of magnitude faster.
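The correlation-based matching idea can be sketched in a heavily simplified form: given two 2D scans that differ by a translation, the peak of their cross-correlation gives the shift. The example below computes a circular cross-correlation with FFTs and recovers integer shifts only. The function name `estimate_shift` is hypothetical, and the sketch ignores rotation, learned masking and the soft (differentiable) peak selection that an end-to-end system would need.

```python
import numpy as np

def estimate_shift(ref, moved):
    """Return integer (dy, dx) such that np.roll(ref, (dy, dx), axis=(0, 1)) best matches moved.

    Uses circular cross-correlation computed in the Fourier domain.
    Illustrative only: translation-only, integer-pixel, hard argmax.
    """
    # Correlation theorem: corr = IFFT( FFT(moved) * conj(FFT(ref)) ).
    corr = np.fft.ifft2(np.fft.fft2(moved) * np.conj(np.fft.fft2(ref))).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Map peak indices in [0, size) to signed shifts around zero.
    shift = [p if p <= s // 2 else p - s for p, s in zip(peak, corr.shape)]
    return tuple(int(v) for v in shift)
```

Replacing the hard `argmax` with a softmax-weighted average over the correlation volume is one common way to make such a matcher differentiable, though whether that matches the paper's formulation is not stated in the abstract.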

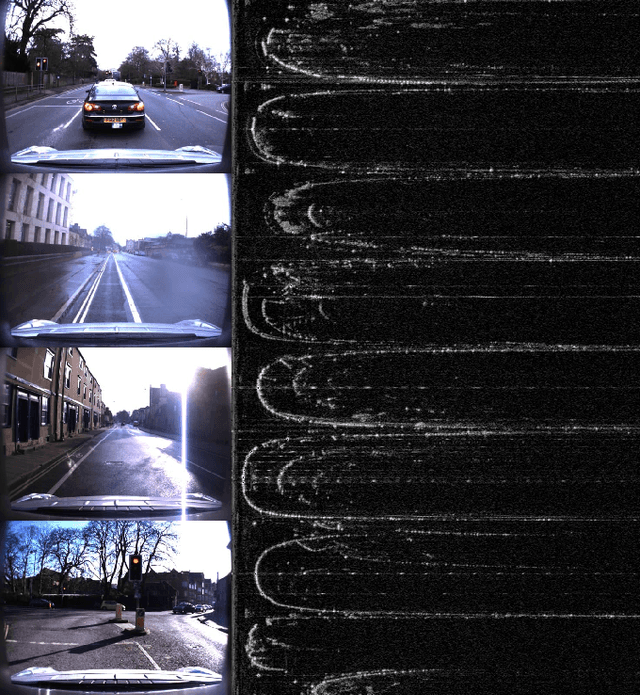

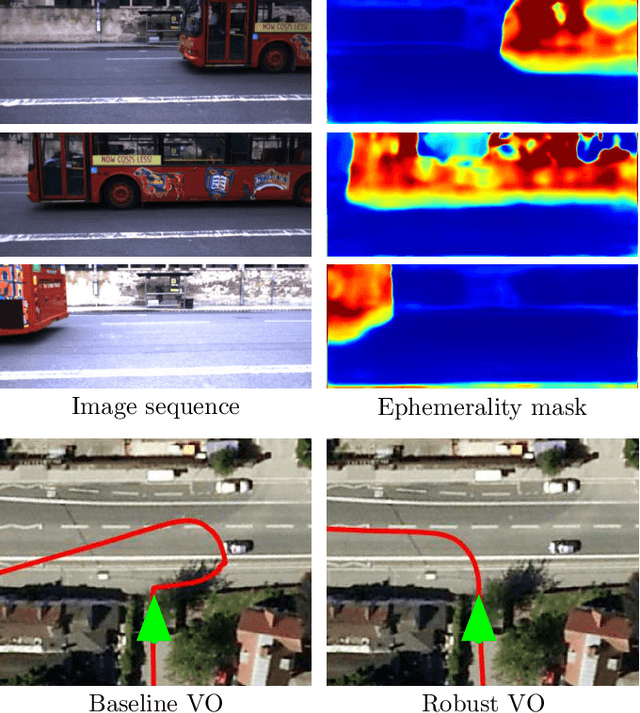

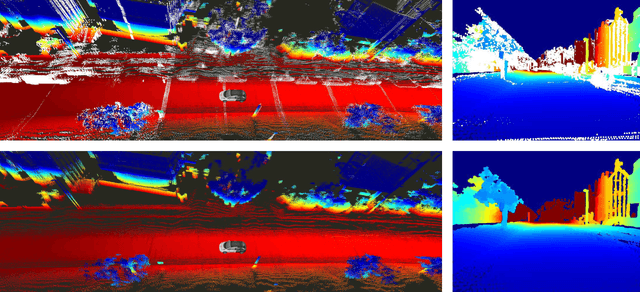

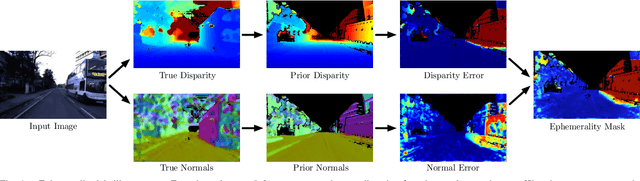

Driven to Distraction: Self-Supervised Distractor Learning for Robust Monocular Visual Odometry in Urban Environments

Mar 05, 2018

Abstract: We present a self-supervised approach to ignoring "distractors" in camera images for the purposes of robustly estimating vehicle motion in cluttered urban environments. We leverage offline multi-session mapping approaches to automatically generate a per-pixel ephemerality mask and depth map for each input image, which we use to train a deep convolutional network. At run-time we use the predicted ephemerality and depth as an input to a monocular visual odometry (VO) pipeline, using either sparse features or dense photometric matching. Our approach yields metric-scale VO using only a single camera and can recover the correct egomotion even when 90% of the image is obscured by dynamic, independently moving objects. We evaluate our robust VO methods on more than 400 km of driving from the Oxford RobotCar Dataset and demonstrate reduced odometry drift and significantly improved egomotion estimation in the presence of large moving vehicles in urban traffic.

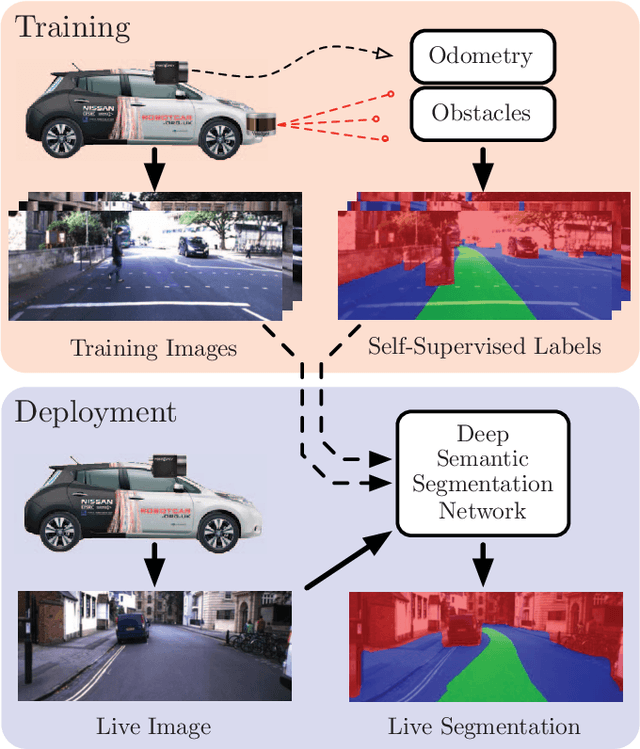

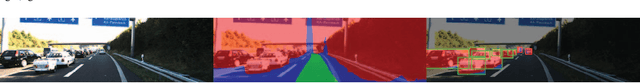

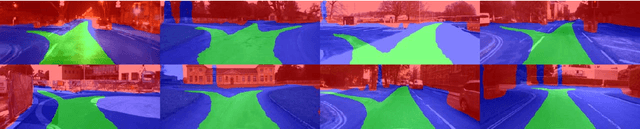

Find Your Own Way: Weakly-Supervised Segmentation of Path Proposals for Urban Autonomy

Nov 17, 2017

Abstract: We present a weakly-supervised approach to segmenting proposed drivable paths in images with the goal of autonomous driving in complex urban environments. Using recorded routes from a data collection vehicle, our proposed method generates vast quantities of labelled images containing proposed paths and obstacles without requiring manual annotation, which we then use to train a deep semantic segmentation network. With the trained network we can segment proposed paths and obstacles at run-time using a vehicle equipped with only a monocular camera without relying on explicit modelling of road or lane markings. We evaluate our method on the large-scale KITTI and Oxford RobotCar datasets and demonstrate reliable path proposal and obstacle segmentation in a wide variety of environments under a range of lighting, weather and traffic conditions. We illustrate how the method can generalise to multiple path proposals at intersections and outline plans to incorporate the system into a framework for autonomous urban driving.
