Rob Weston

There and Back Again: Learning to Simulate Radar Data for Real-World Applications

Nov 29, 2020

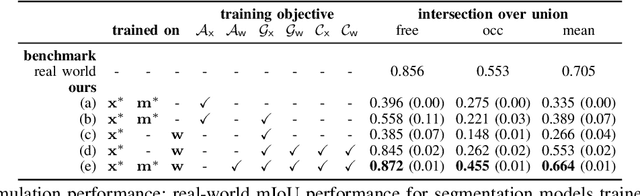

Abstract: Simulating realistic radar data has the potential to significantly accelerate the development of data-driven approaches to radar processing. However, it is fraught with difficulty due to the notoriously complex image formation process. Here we propose to learn a radar sensor model capable of synthesising faithful radar observations based on simulated elevation maps. In particular, we adopt an adversarial approach to learning a forward sensor model from unaligned radar examples. In addition, modelling the backward model encourages the output to remain aligned to the world state through a cyclical consistency criterion. The backward model is further constrained to predict elevation maps from real radar data that are grounded by partial measurements obtained from corresponding lidar scans. Both models are trained in a joint optimisation. We demonstrate the efficacy of our approach by evaluating a down-stream segmentation model trained purely on simulated data in a real-world deployment. This achieves performance within four percentage points of the same model trained entirely on real data.

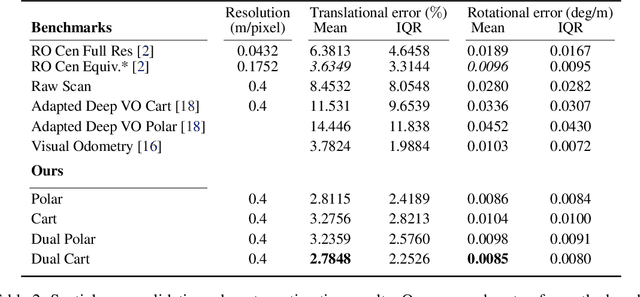

Masking by Moving: Learning Distraction-Free Radar Odometry from Pose Information

Sep 12, 2019

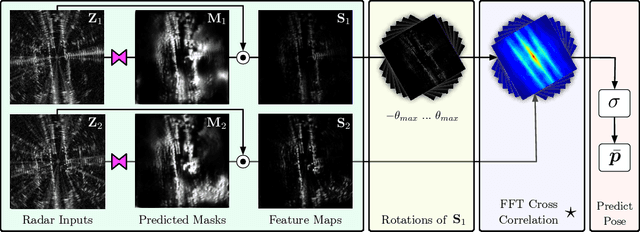

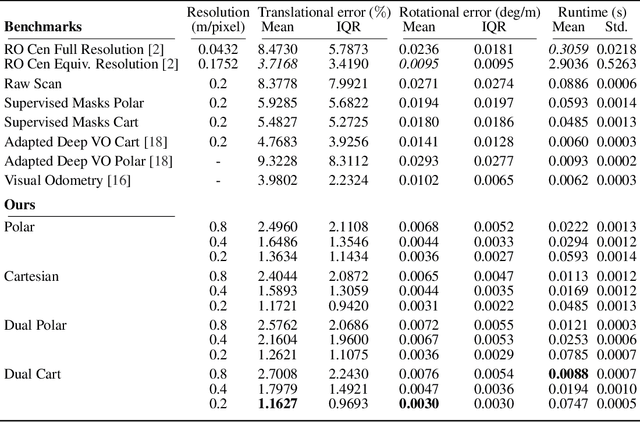

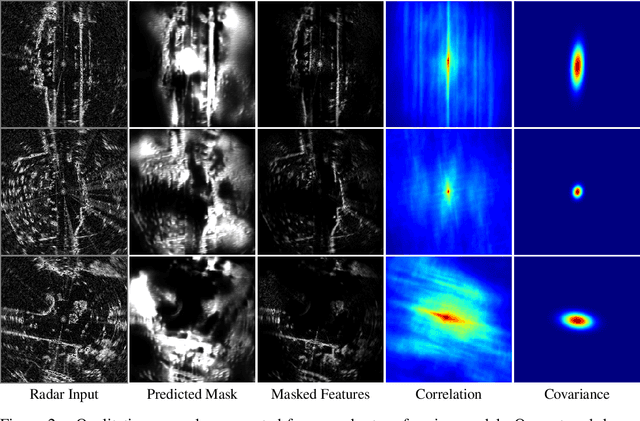

Abstract: This paper presents an end-to-end radar odometry system which delivers robust, real-time pose estimates based on a learned embedding space free of sensing artefacts and distractor objects. The system deploys a fully differentiable, correlation-based radar matching approach. This provides the same level of interpretability as established scan-matching methods and allows for a principled derivation of uncertainty estimates. The system is trained in a (self-)supervised way using only previously obtained pose information as a training signal. Using 280km of urban driving data, we demonstrate that our approach outperforms the previous state-of-the-art in radar odometry by reducing errors by up to 68% whilst running an order of magnitude faster.
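As a rough illustration of what correlation-based scan matching means in general, the sketch below scores every candidate translation between two small grids and keeps the best one. It is not the system described in the abstract: it is brute-force, translation-only, non-differentiable, and uses no learned masking. The function name `best_shift` and the parameter `max_shift` are hypothetical names chosen for this example.

```python
def best_shift(ref, cur, max_shift=2):
    """Return the (dy, dx) shift that maximises the correlation of ref with cur.

    ref and cur are equally sized 2D lists of floats (e.g. radar power grids).
    Illustrative only: exhaustive search over integer translations.
    """
    h, w = len(ref), len(ref[0])
    best, best_score = (0, 0), float("-inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            score = 0.0
            for y in range(h):
                for x in range(w):
                    yy, xx = y + dy, x + dx
                    # Only cells that stay inside the grid contribute.
                    if 0 <= yy < h and 0 <= xx < w:
                        score += ref[y][x] * cur[yy][xx]
            if score > best_score:
                best, best_score = (dy, dx), score
    return best


# Two cells of return power in ref, moved one row down and one column left in cur.
ref = [[0.0] * 6 for _ in range(6)]
cur = [[0.0] * 6 for _ in range(6)]
ref[2][2] = ref[2][3] = 1.0
cur[3][1] = cur[3][2] = 1.0
shift = best_shift(ref, cur)
```

Because the score for each candidate shift is explicit, the full correlation surface can be inspected, which is the kind of interpretability usually associated with scan-matching methods.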

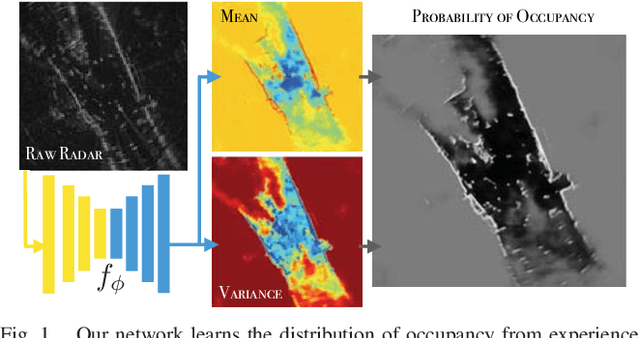

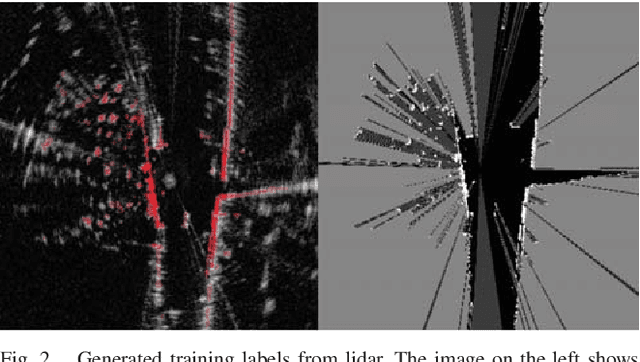

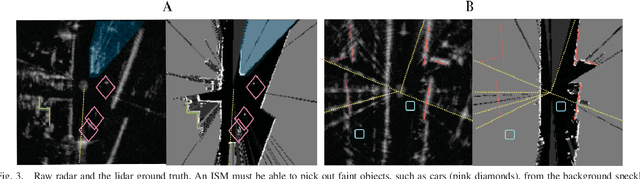

Probably Unknown: Deep Inverse Sensor Modelling In Radar

Oct 18, 2018

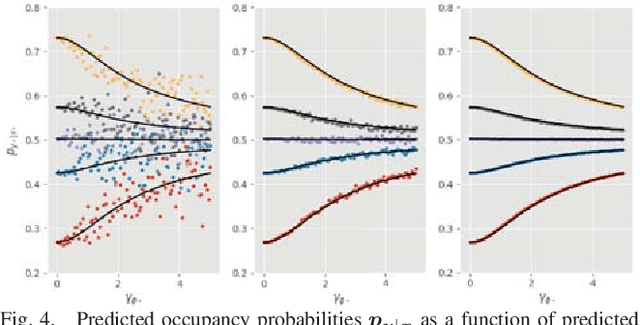

Abstract: Radar presents a promising alternative to lidar and vision in autonomous vehicle applications, being able to detect objects at long range under a variety of weather conditions. However, distinguishing between occupied and free space from a raw radar scan is notoriously difficult. We consider the challenge of learning an Inverse Sensor Model (ISM) mapping a raw radar observation to occupancy probabilities in a discretised space. We frame this problem as a segmentation task, utilising a deep neural network that is able to learn an inherently probabilistic ISM from raw sensor data while considering scene context. In doing so our approach explicitly accounts for the heteroscedastic aleatoric uncertainty for radar that arises due to complex interactions between occlusion and sensor noise. Our network is trained using only partial occupancy labels generated from lidar and is able to successfully distinguish between occupied and free space. We evaluate our approach on five hours of data recorded in a dynamic urban environment and show that it significantly outperforms classical constant false-alarm rate (CFAR) filtering approaches in light of challenging noise artefacts whilst identifying space that is inherently uncertain because of occlusion.
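For readers unfamiliar with the CFAR baseline mentioned above, here is a minimal sketch of one common variant, cell-averaging CFAR, applied to a single range profile. The abstract does not say which CFAR variant or settings were used for comparison, so the function name `ca_cfar` and the parameters `num_train`, `num_guard` and `scale` are assumptions made purely for illustration.

```python
def ca_cfar(power, num_train=4, num_guard=1, scale=3.0):
    """Cell-averaging CFAR over a 1D list of power values.

    A cell is flagged when its power exceeds `scale` times the mean of the
    surrounding training cells. Guard cells next to the cell under test are
    excluded so that a target does not inflate its own noise estimate.
    """
    detections = []
    for i in range(len(power)):
        left = list(power[max(0, i - num_guard - num_train):max(0, i - num_guard)])
        right = list(power[i + num_guard + 1:i + num_guard + 1 + num_train])
        train = left + right
        if not train:
            continue  # no neighbours available to estimate the noise level
        noise = sum(train) / len(train)
        if power[i] > scale * noise:
            detections.append(i)
    return detections


# Flat noise floor with one strong return at range bin 10.
profile = [1.0] * 20
profile[10] = 20.0
hits = ca_cfar(profile)
```

A filter of this kind thresholds each cell using only a local noise estimate, with no notion of scene context or occlusion, which is the limitation the learned inverse sensor model is contrasted against.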
