Damien Petit

Accelerating Robot Learning of Contact-Rich Manipulations: A Curriculum Learning Study

Apr 28, 2022

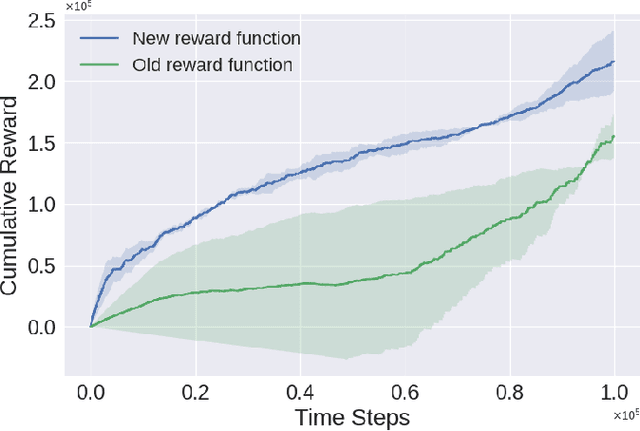

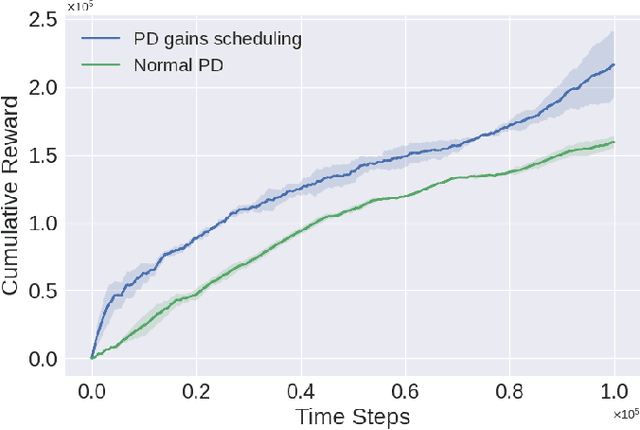

Abstract: The Reinforcement Learning (RL) paradigm has been an essential tool for automating robotic tasks. Despite the advances in RL, it is still not widely adopted in industry because it requires a large and expensive amount of robot interaction with the environment. Curriculum Learning (CL) has been proposed to expedite learning. However, most research works have only been evaluated in simulated environments, from video games to robotic toy tasks. This paper presents a study on accelerating robot learning of contact-rich manipulation tasks based on Curriculum Learning combined with Domain Randomization (DR). We tackle complex industrial assembly tasks with position-controlled robots, such as insertion tasks. We compare different curriculum designs and sampling approaches for DR. Based on this study, we propose a method that significantly outperforms previous work, which uses DR only (no CL), with less than a fifth of the training time (samples). Results also show that even when training only in simulation with toy tasks, our method can learn policies that can be transferred to the real-world robot. The learned policies achieved success rates of up to 86% on real-world complex industrial insertion tasks (with tolerances of $\pm 0.01~\mathrm{mm}$) not seen during training.
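As a rough illustration of how a curriculum can be combined with domain randomization, the sketch below widens the randomization range of a single environment parameter as a difficulty level grows, and adjusts that level from the recent success rate. The function names, thresholds, and step size are hypothetical choices made for this example; the abstract does not specify the curricula or sampling schemes that the paper compares.

```python
import random


def sample_randomized_param(nominal, max_deviation, difficulty, rng=random):
    """Sample a parameter uniformly around `nominal`.

    The half-width of the range is `max_deviation * difficulty`, so a
    difficulty of 0 always returns the nominal value and a difficulty of 1
    uses the full randomization range. (Illustrative scheme, not the paper's.)
    """
    if not 0.0 <= difficulty <= 1.0:
        raise ValueError("difficulty must lie in [0, 1]")
    half_width = max_deviation * difficulty
    return rng.uniform(nominal - half_width, nominal + half_width)


def update_difficulty(difficulty, success_rate,
                      step=0.1, raise_at=0.8, lower_at=0.4):
    """Raise difficulty when the agent succeeds often, lower it when it struggles.

    The thresholds and step size are assumed values for illustration only.
    """
    if success_rate >= raise_at:
        difficulty += step
    elif success_rate <= lower_at:
        difficulty -= step
    # Keep the difficulty inside the valid range.
    return min(1.0, max(0.0, difficulty))


if __name__ == "__main__":
    difficulty = 0.0
    # Hypothetical success rates observed over successive evaluation windows.
    for success_rate in [0.9, 0.85, 0.3, 0.95]:
        difficulty = update_difficulty(difficulty, success_rate)
        # Example parameter: hole position offset in millimetres (assumed).
        offset = sample_randomized_param(0.0, 5.0, difficulty)
        print(f"difficulty={difficulty:.1f}, sampled offset={offset:.3f} mm")
```

With DR only, the equivalent would be to call `sample_randomized_param` with `difficulty=1.0` from the first episode onward.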

Variable Compliance Control for Robotic Peg-in-Hole Assembly: A Deep Reinforcement Learning Approach

Sep 25, 2020

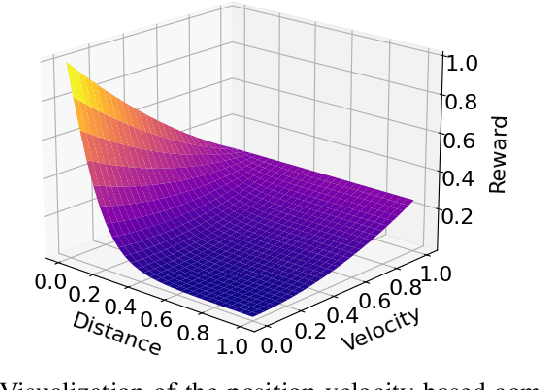

Abstract: Industrial robot manipulators are playing a more significant role in modern manufacturing industries. Though peg-in-hole assembly is a common industrial task which has been extensively researched, safely solving complex high-precision assembly in an unstructured environment remains an open problem. Reinforcement Learning (RL) methods have been proven successful in solving manipulation tasks autonomously. However, RL is still not widely adopted on real robotic systems because working with real hardware entails additional challenges, especially when using position-controlled manipulators. The main contribution of this work is a learning-based method to solve peg-in-hole tasks with position uncertainty of the hole. We propose the use of an off-policy, model-free reinforcement learning method and bootstrap the training speed by using several transfer learning techniques (sim2real) and domain randomization. Our proposed learning framework for position-controlled robots was extensively evaluated on contact-rich insertion tasks in a variety of environments.

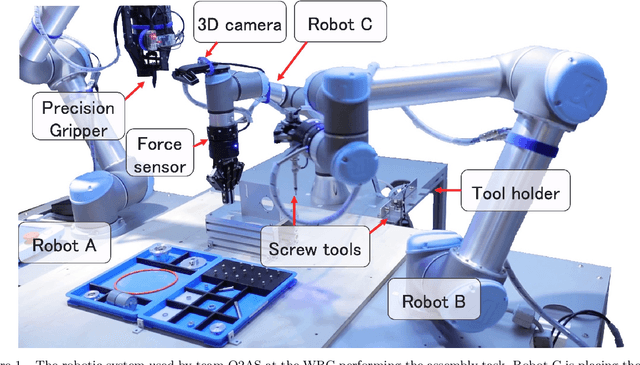

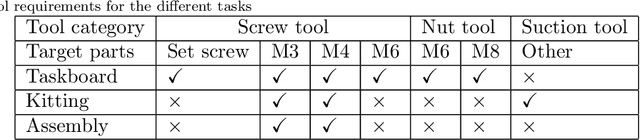

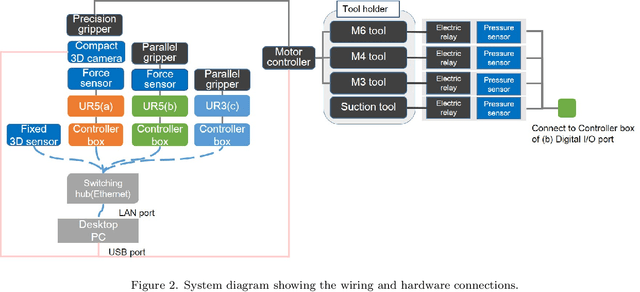

Team O2AS at the World Robot Summit 2018: An Approach to Robotic Kitting and Assembly Tasks using General Purpose Grippers and Tools

Mar 05, 2020

Abstract: We propose a versatile robotic system for kitting and assembly tasks which uses no jigs or commercial tool changers. Instead of specialized end effectors, it uses its two-finger grippers to grasp and hold tools to perform subtasks such as screwing and suctioning. A third gripper is used as a precision picking and centering tool, and uses in-built passive compliance to compensate for small position errors and uncertainty. A novel grasp point detection method for bin picking, using a single depth map, is described for the kitting task. Using the proposed system, we competed in the Assembly Challenge of the Industrial Robotics Category of the World Robot Challenge at the World Robot Summit 2018, obtaining 4th place and the SICE award for lean design and versatile tool use. We show the effectiveness of our approach through experiments performed during the competition.

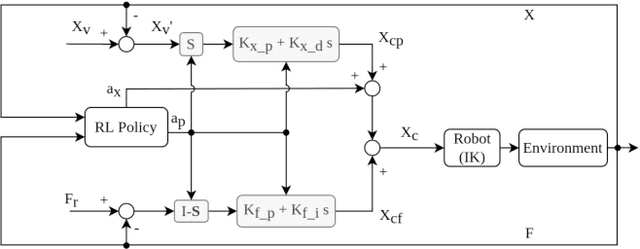

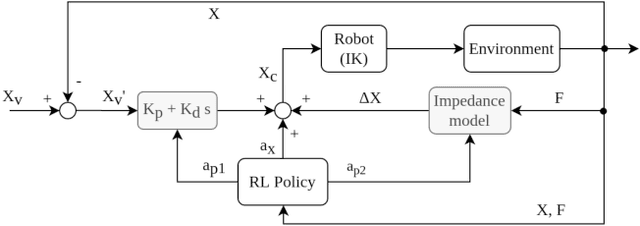

Learning Contact-Rich Manipulation Tasks with Rigid Position-Controlled Robots: Learning to Force Control

Mar 02, 2020

Abstract: To fully realize industrial automation, it is indispensable to give robot manipulators the ability to adapt by themselves to their surroundings and to learn to handle novel manipulation tasks. Reinforcement Learning (RL) methods have been proven successful in solving manipulation tasks autonomously. However, RL is still not widely adopted on real robotic systems because working with real hardware entails additional challenges, especially when using rigid position-controlled manipulators. These challenges include the need for a robust controller to avoid undesired behavior that risks damaging the robot and its environment, and the need for constant supervision from a human operator. The main contributions of this work are as follows. First, we propose a framework for safely training an RL agent on manipulation tasks using a rigid robot. Second, to enable a position-controlled manipulator to perform contact-rich manipulation tasks, we implemented two different force control schemes based on standard force feedback controllers: one is a modified hybrid position-force control, and the other is an impedance control. Third, we empirically study both control schemes when used as the action representation of an RL agent. We evaluate the trade-off between control complexity and performance by comparing several versions of the control schemes, each with a different number of force control parameters. The proposed methods are validated both in simulation and on a real robot, a UR3 e-series robotic arm, executing contact-rich manipulation tasks.
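To make the idea of force control on a position-controlled robot more concrete, the following is a minimal one-dimensional sketch of an admittance-style law, a common way to realize impedance behavior when only position commands can be sent. It is not taken from the paper: the function name, the mass-spring-damper model, and all gains are assumptions chosen for illustration. The measured external force drives a virtual mass-spring-damper, and the resulting position becomes the next position command.

```python
def admittance_step(x, v, x_ref, f_ext, mass, damping, stiffness, dt):
    """Advance a virtual mass-spring-damper by one time step.

    Model (assumed for illustration):
        mass * a + damping * v + stiffness * (x - x_ref) = f_ext
    Returns the next commanded position and velocity, integrated with
    semi-implicit Euler.
    """
    a = (f_ext - damping * v - stiffness * (x - x_ref)) / mass
    v_next = v + a * dt
    x_next = x + v_next * dt
    return x_next, v_next


if __name__ == "__main__":
    # Hypothetical gains; in an RL setting such parameters could be part
    # of the action chosen by the policy.
    mass, damping, stiffness, dt = 1.0, 20.0, 100.0, 0.001
    x, v, x_ref = 0.0, 0.0, 0.0
    for _ in range(5000):  # simulate 5 s under a constant 5 N contact force
        x, v = admittance_step(x, v, x_ref, 5.0, mass, damping, stiffness, dt)
    # At steady state the offset approaches f_ext / stiffness.
    print(f"commanded position after 5 s: {x:.4f} m")
```

Lower stiffness makes the commanded position yield more to contact forces, while higher damping slows the response, which is the kind of trade-off a learned policy could tune per task.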
