Dalcimar Casanova

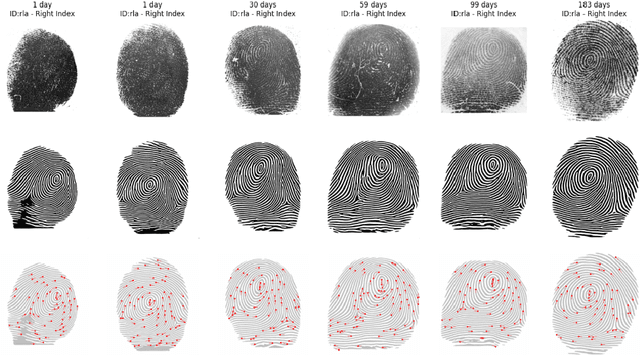

An on-production high-resolution longitudinal neonatal fingerprint database in Brazil

Apr 27, 2025

Abstract: The neonatal period is critical for survival, requiring accurate and early identification to enable timely interventions such as vaccinations, HIV treatment, and nutrition programs. Biometric solutions offer potential for child protection by helping to prevent baby swaps, locate missing children, and support national identity systems. However, developing effective biometric identification systems for newborns remains a major challenge due to the physiological variability caused by finger growth, weight changes, and skin texture alterations during early development. Current literature has attempted to address these issues by applying scaling factors to emulate growth-induced distortions in minutiae maps, but such approaches fail to capture the complex and non-linear growth patterns of infants. A key barrier to progress in this domain is the lack of comprehensive, longitudinal biometric datasets capturing the evolution of neonatal fingerprints over time. This study addresses this gap by focusing on designing and developing a high-quality biometric database of neonatal fingerprints, acquired at multiple early life stages. The dataset is intended to support the training and evaluation of machine learning models aimed at emulating the effects of growth on biometric features. We hypothesize that such a dataset will enable the development of more robust and accurate Deep Learning-based models, capable of predicting changes in the minutiae map with higher fidelity than conventional scaling-based methods. Ultimately, this effort lays the groundwork for more reliable biometric identification systems tailored to the unique developmental trajectory of newborns.

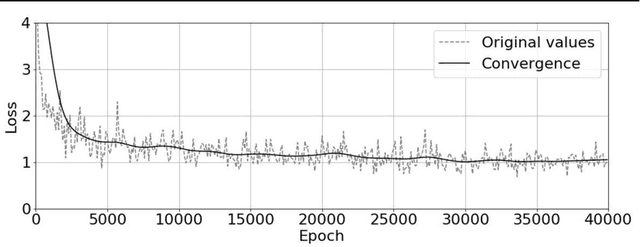

The use of Multi-domain Electroencephalogram Representations in the building of Models based on Convolutional and Recurrent Neural Networks for Epilepsy Detection

Apr 24, 2025

Abstract: Epilepsy, affecting approximately 50 million people globally, is characterized by abnormal brain activity and remains challenging to treat. The diagnosis of epilepsy relies heavily on electroencephalogram (EEG) data, where specialists manually analyze epileptiform patterns across pre-ictal, ictal, post-ictal, and interictal periods. However, the manual analysis of EEG signals is prone to variability between experts, emphasizing the need for automated solutions. Although previous studies have explored preprocessing techniques and machine learning approaches for seizure detection, there is a gap in understanding how the representation of EEG data (time, frequency, or time-frequency domains) impacts the predictive performance of deep learning models. This work addresses this gap by systematically comparing deep neural networks trained on EEG data in these three domains. Through the use of statistical tests, we identify the optimal data representation and model architecture for epileptic seizure detection. The results demonstrate that frequency-domain data achieves detection metrics exceeding 97%, providing a robust foundation for more accurate and reliable seizure detection systems.
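To make the three representations named in the abstract concrete, the following is a minimal sketch of how a single-channel segment could be turned into time, frequency, and time-frequency views. It is an illustration only: the function name `eeg_representations`, the window and hop sizes, the standardization step, and the synthetic 10 Hz test signal are all assumptions, not the preprocessing used in the paper.

```python
import numpy as np

def eeg_representations(signal, fs, win=128, hop=64):
    """Return time, frequency, and time-frequency views of a 1-D signal.

    Hypothetical helper; parameter choices are illustrative assumptions.
    """
    signal = np.asarray(signal, dtype=float)

    # Time domain: the raw samples, standardized to zero mean and unit variance.
    time_repr = (signal - signal.mean()) / (signal.std() + 1e-12)

    # Frequency domain: magnitude spectrum of the whole segment.
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    freq_repr = np.abs(np.fft.rfft(signal))

    # Time-frequency domain: magnitudes of a short-time Fourier transform
    # computed over Hann-windowed, overlapping frames.
    window = np.hanning(win)
    starts = range(0, signal.size - win + 1, hop)
    tf_repr = np.array(
        [np.abs(np.fft.rfft(signal[s:s + win] * window)) for s in starts]
    )
    return time_repr, (freqs, freq_repr), tf_repr

# Synthetic 10 Hz tone sampled at 256 Hz for 4 s (a stand-in, not real EEG).
fs = 256
t = np.arange(0, 4, 1 / fs)
demo = np.sin(2 * np.pi * 10 * t)
time_repr, (freqs, freq_repr), tf_repr = eeg_representations(demo, fs)
peak_hz = freqs[np.argmax(freq_repr)]
```

In such a setup, the 1-D arrays would typically feed a recurrent or 1-D convolutional model, while the 2-D time-frequency array could be treated like an image; which pairing the paper found best is stated only at the level of the abstract above.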

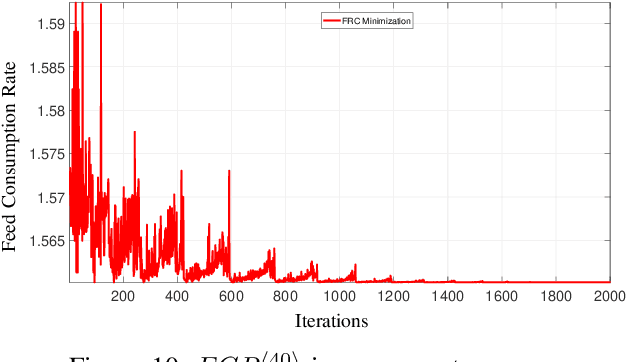

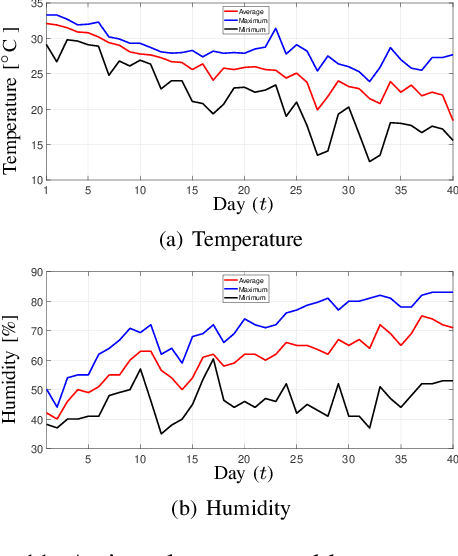

Estimating action plans for smart poultry houses

Aug 17, 2020

Abstract: In poultry farming, the systematic choice, update, and implementation of periodic (t) action plans define the feed conversion rate (FCR[t]), which is an accepted measure of successful production. Appropriate action plans provide tailored resources for broilers, allowing them to grow within the so-called thermal comfort zone, without waste or lack of resources. Although the implementation of an action plan is automatic, its configuration depends on the knowledge of the specialist, which tends to be inefficient and error-prone and results in a different FCR[t] for each poultry house. In this article, we claim that the specialist's perception can be reproduced, to some extent, by computational intelligence. By combining deep learning and genetic algorithm techniques, we show how action plans can adapt their performance over time, based on previous successful plans. We also implement a distributed network infrastructure that allows our method to be replicated over distributed poultry houses, for their smart, interconnected, and adaptive control. A supervision system is provided as an interface to users. Experiments conducted over real data show that our method improves by 5% on the performance of the most productive specialist, staying very close to the optimal FCR[t].

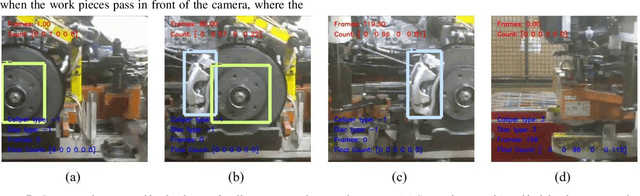

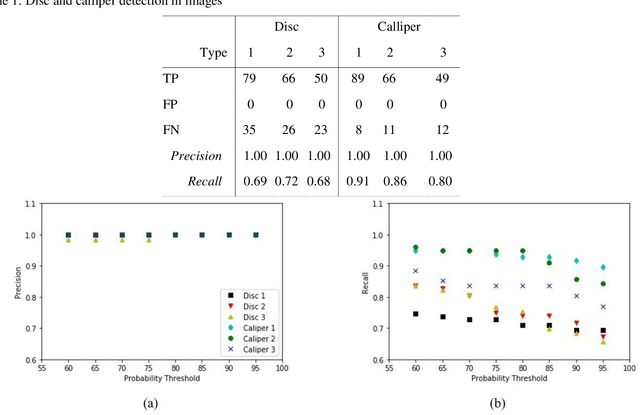

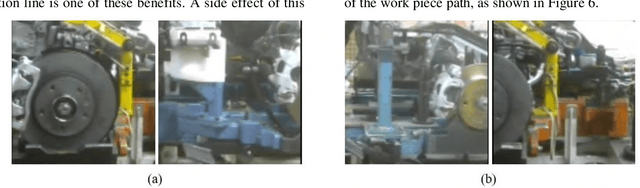

Deep Learning Models for Visual Inspection on Automotive Assembling Line

Jul 02, 2020

Abstract: Automotive manufacturing assembly tasks are built upon visual inspections such as scratch identification on machined surfaces, part identification and selection, etc., which guarantee product and process quality. These tasks can be related to more than one type of vehicle that is produced within the same manufacturing line. Visual inspection was essentially human-led but has recently been supplemented by the artificial perception provided by computer vision systems (CVSs). Despite their relevance, the accuracy of CVSs varies according to environmental settings such as lighting, enclosure, and quality of image acquisition. These issues entail costly solutions and override part of the benefits introduced by computer vision systems, mainly when they interfere with the operating cycle time of the factory. In this sense, this paper proposes the use of deep learning-based methodologies to assist in visual inspection tasks while leaving a very small footprint in the manufacturing environment, and explores them as an end-to-end tool to ease CVS setup. The proposed approach is illustrated by four proofs of concept in a real automotive assembly line based on models for object detection, semantic segmentation, and anomaly detection.

* arXiv admin note: text overlap with arXiv:1802.08717, arXiv:1703.05921 by other authors

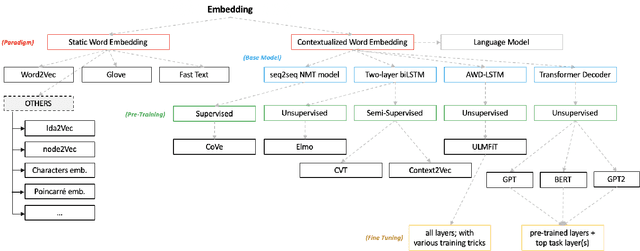

SE3M: A Model for Software Effort Estimation Using Pre-trained Embedding Models

Jun 30, 2020

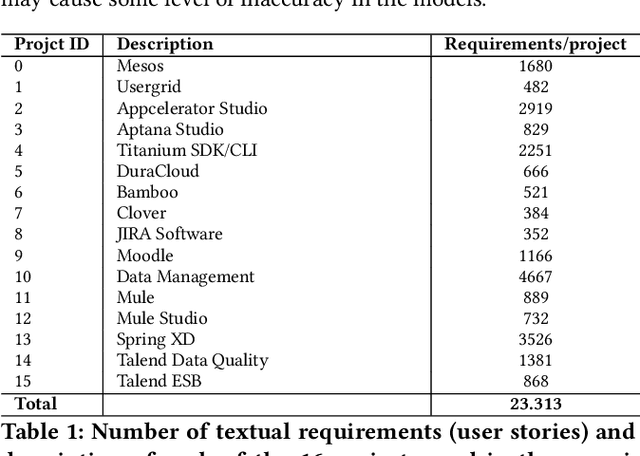

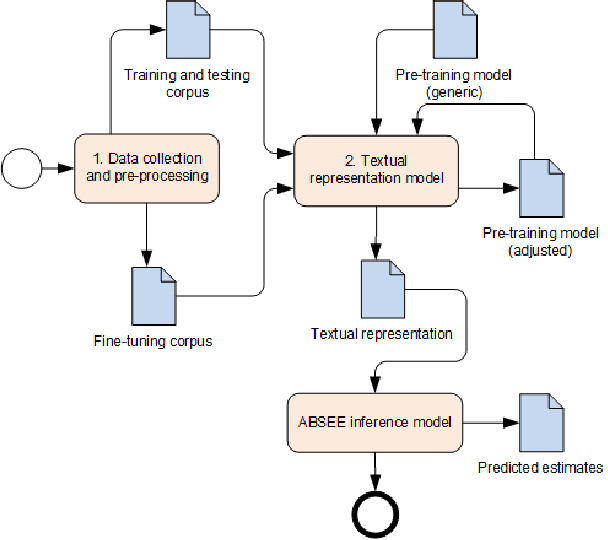

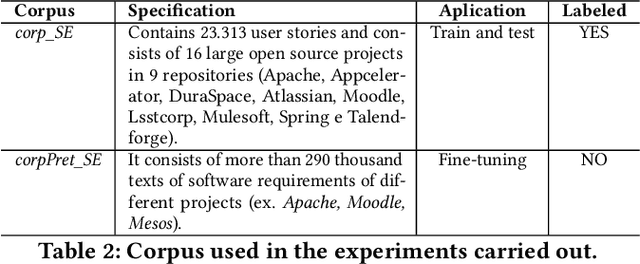

Abstract: Estimating effort based on requirement texts presents many challenges, especially in obtaining viable features to infer effort. Aiming to explore a more effective technique for representing textual requirements to infer effort estimates by analogy, this paper proposes to evaluate the effectiveness of pre-trained embedding models. For this, two embedding approaches, context-less and contextualized models, are used. Generic pre-trained models for both approaches went through a fine-tuning process. The generated models were used as input in the applied deep learning architecture, with linear output. The results were very promising, showing that pre-trained embedding models can be used to estimate software effort based only on requirement texts. We highlight the results obtained by applying the pre-trained BERT model with fine-tuning to a single project repository, which yields a Mean Absolute Error (MAE) of 4.25 with a standard deviation of only 0.17, a very positive result when compared to similar works. The main advantages of the proposed estimation method are reliability, the possibility of generalization, speed, and low computational cost provided by the fine-tuning process, and the possibility to infer new or existing requirements.

Concept and the implementation of a tool to convert industry 4.0 environments modeled as FSM to an OpenAI Gym wrapper

Jun 29, 2020

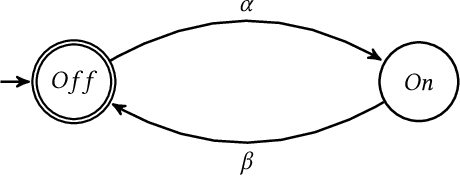

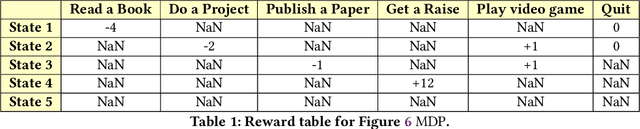

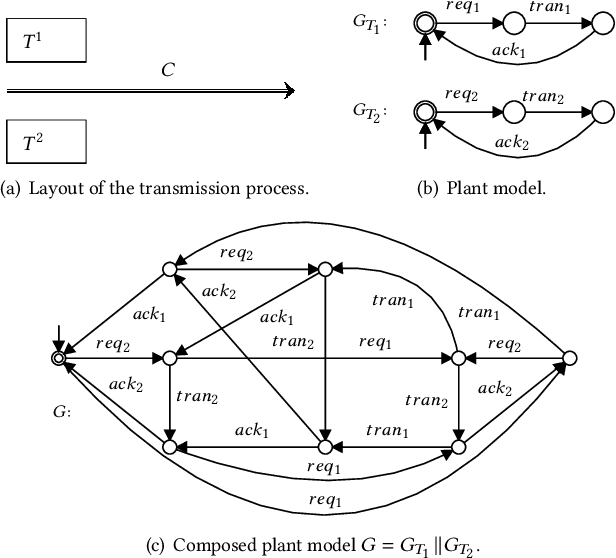

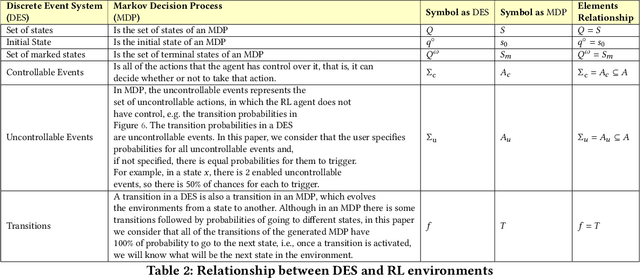

Abstract: Industry 4.0 systems have a high demand for optimization in their tasks, whether to minimize cost, maximize production, or even synchronize their actuators to finish or speed up the manufacture of a product. Those challenges make industrial environments a suitable scenario to apply all modern reinforcement learning (RL) concepts. The main difficulty, however, is the lack of such industrial environments. To address this, this work presents the concept and the implementation of a tool that allows us to convert any dynamic system modeled as an FSM to the open-source Gym wrapper. After that, it is possible to employ any RL method to optimize any desired task. In the first tests of the proposed tool, we show traditional Q-learning and Deep Q-learning methods running over two simple environments.
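The idea of exposing a finite-state machine through a Gym-style interface can be sketched in a few lines. The example below is not the authors' tool and does not import the Gym library; it only mimics the classic `reset`/`step` convention in plain Python. The class `FSMEnv`, the transition-table format, the penalty for undefined transitions, and the three-state machine are all hypothetical choices made for illustration, followed by a tabular Q-learning loop.

```python
import random

class FSMEnv:
    """Gym-style (reset/step) wrapper around a finite-state machine.

    `transitions` maps (state, action) -> (next_state, reward).
    Hypothetical format chosen for this sketch.
    """

    def __init__(self, transitions, initial, terminal):
        self.transitions = transitions
        self.initial = initial
        self.terminal = set(terminal)
        self.actions = sorted({action for (_, action) in transitions})
        self.state = initial

    def reset(self):
        self.state = self.initial
        return self.state

    def step(self, action):
        # Assumption: an undefined (state, action) pair leaves the state
        # unchanged and costs a small penalty.
        next_state, reward = self.transitions.get(
            (self.state, action), (self.state, -1.0)
        )
        self.state = next_state
        done = next_state in self.terminal
        return next_state, reward, done, {}

def q_learning(env, episodes=500, alpha=0.5, gamma=0.9,
               epsilon=0.2, max_steps=50, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.choice(env.actions)
            else:
                action = max(env.actions, key=lambda a: q.get((state, a), 0.0))
            next_state, reward, done, _ = env.step(action)
            best_next = 0.0 if done else max(
                q.get((next_state, a), 0.0) for a in env.actions
            )
            old = q.get((state, action), 0.0)
            q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
            state = next_state
            if done:
                break
    return q

# Hypothetical machine: a part is loaded, then processed.
transitions = {
    ("idle", "load"): ("loaded", 0.0),
    ("loaded", "process"): ("done", 10.0),
    ("loaded", "unload"): ("idle", 0.0),
}
env = FSMEnv(transitions, initial="idle", terminal=["done"])
q = q_learning(env)
policy = {
    s: max(env.actions, key=lambda a: q.get((s, a), 0.0))
    for s in ("idle", "loaded")
}
```

Keeping the environment behind `reset` and `step` is what lets the learning loop stay unaware of the FSM details, which is presumably the appeal of targeting the Gym interface in the first place.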

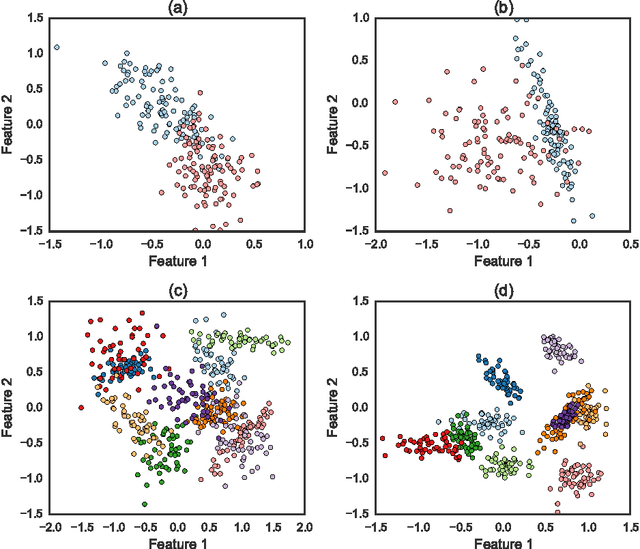

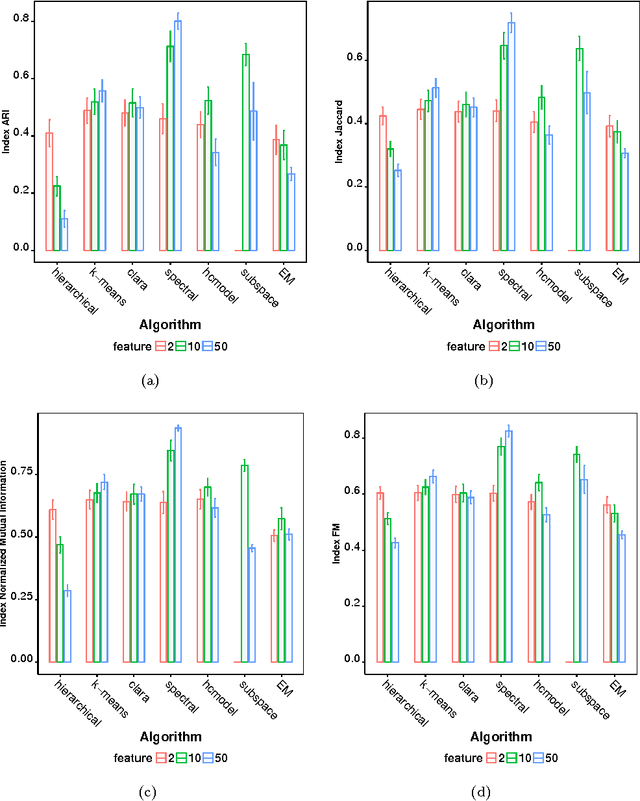

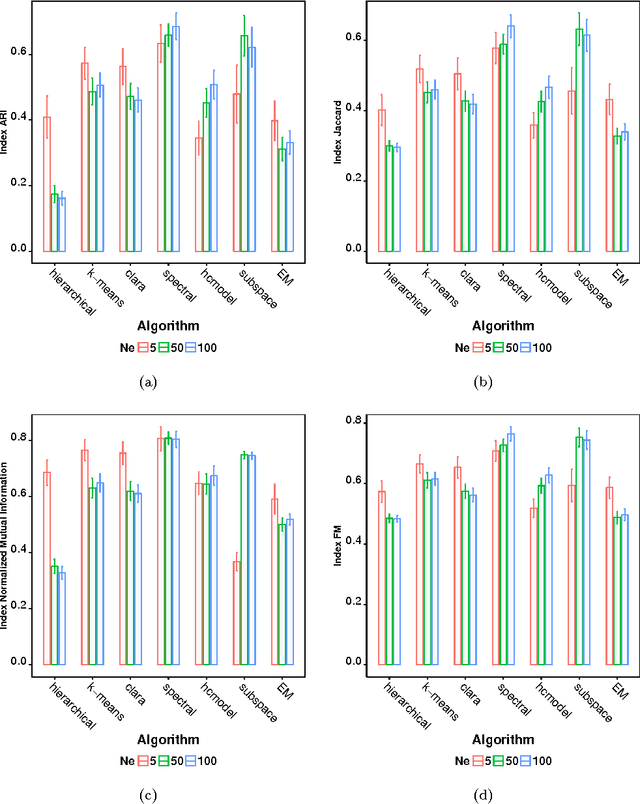

Clustering Algorithms: A Comparative Approach

Dec 26, 2016

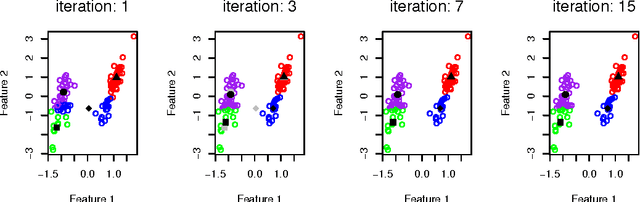

Abstract: Many real-world systems can be studied in terms of pattern recognition tasks, so that proper use (and understanding) of machine learning methods in practical applications becomes essential. While a myriad of classification methods have been proposed, there is no consensus on which methods are more suitable for a given dataset. As a consequence, it is important to comprehensively compare methods in many possible scenarios. In this context, we performed a systematic comparison of 7 well-known clustering methods available in the R language. In order to account for the many possible variations of data, we considered artificial datasets with several tunable properties (number of classes, separation between classes, etc.). In addition, we also evaluated the sensitivity of the clustering methods with regard to their parameter configuration. The results revealed that, when considering the default configurations of the adopted methods, the spectral approach usually outperformed the other clustering algorithms. We also found that the default configuration of the adopted implementations was not accurate. In these cases, a simple approach based on random selection of parameter values proved to be a good alternative to improve the performance. All in all, the reported approach provides support for guiding the choice of clustering algorithms.

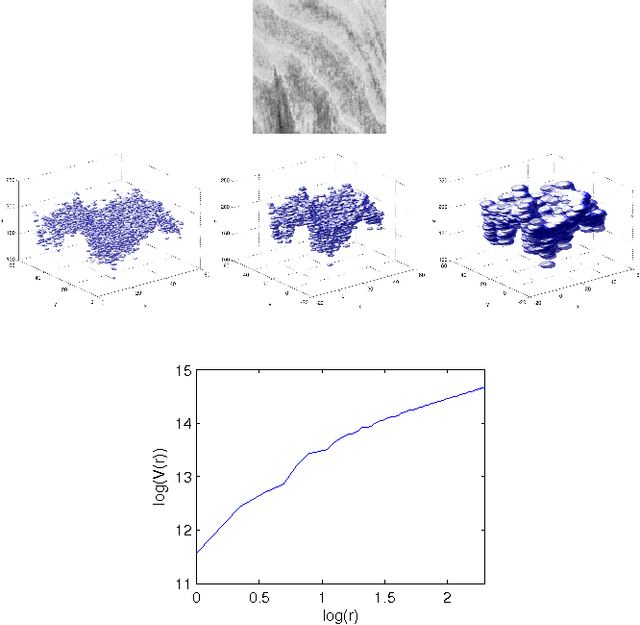

Enhancing fractal descriptors on images by combining boundary and interior of Minkowski dilation

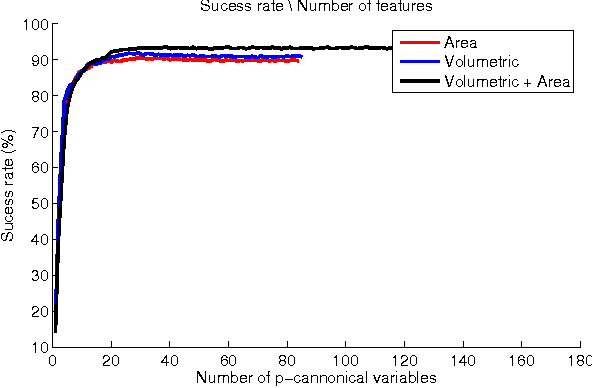

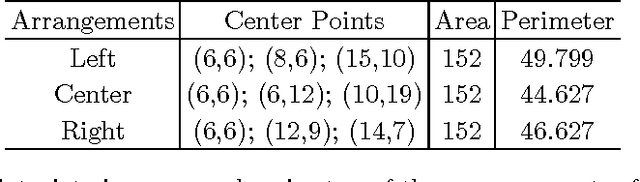

Dec 26, 2014

Abstract: This work proposes to obtain novel fractal descriptors from gray-level texture images by combining information from interior and boundary measures of the Minkowski dilation applied to the texture surface. First, the image is converted into a surface where the height of each point is the gray intensity of the respective pixel in that position in the image. Then, this surface is morphologically dilated by spheres. The radius of such spheres is varied within an interval, and the volume and the external area of the dilated structure are computed for each radius. The final descriptors are given by such measures concatenated and subjected to a canonical transform to reduce the dimensionality. The proposal is an enhancement to the classical Bouligand-Minkowski fractal descriptors, where only the volume (interior) information is considered. As different structures may have the same volume, but not the same area, the proposal yields richer descriptors, as confirmed by results on the classification of benchmark databases.
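The pipeline described in the abstract (image to surface, dilation by spheres of growing radius, one volume and one area measure per radius) can be sketched as follows. This is a rough illustration under stated assumptions, not the authors' implementation: the brute-force distance computation only suits tiny images, the "area" is approximated by the count of voxels in the outermost unit-thick shell, the logarithm is applied as is common for fractal descriptors, and the canonical transform mentioned in the abstract is omitted. The function name `minkowski_descriptors` is hypothetical.

```python
import numpy as np

def minkowski_descriptors(image, max_radius=3):
    """Log-volume and log-shell-size of a dilated gray-level surface.

    Hypothetical sketch; the shell count is a crude stand-in for the
    external area of the dilated structure.
    """
    image = np.asarray(image, dtype=int)
    h, w = image.shape

    # Surface points (x, y, z), with z equal to the gray level at (x, y).
    ys, xs = np.mgrid[0:h, 0:w]
    surface = np.stack([xs.ravel(), ys.ravel(), image.ravel()], axis=1)

    # Voxel grid padded by max_radius on every side of the surface.
    pad = max_radius
    gx = np.arange(-pad, w + pad)
    gy = np.arange(-pad, h + pad)
    gz = np.arange(image.min() - pad, image.max() + pad + 1)
    grid = np.stack(np.meshgrid(gx, gy, gz, indexing="ij"), axis=-1)
    grid = grid.reshape(-1, 3)

    # Brute-force Euclidean distance from each voxel to the nearest
    # surface point (fine for tiny images only).
    diff = grid[:, None, :] - surface[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)

    radii = np.arange(1, max_radius + 1)
    # Volume: voxels covered by the union of spheres of radius r.
    volume = np.array([(dist <= r).sum() for r in radii])
    # Area proxy: voxels in the outermost shell (r - 1, r].
    shell = np.array([((dist > r - 1) & (dist <= r)).sum() for r in radii])
    return np.concatenate([np.log(volume), np.log(shell)])

# Small random texture with 8 gray levels (synthetic, for illustration).
rng = np.random.default_rng(0)
texture = rng.integers(0, 8, size=(6, 6))
descriptors = minkowski_descriptors(texture, max_radius=3)
```

For images of realistic size, an exact Euclidean distance transform over the voxel grid would replace the brute-force distance step; the structure of the descriptor vector stays the same.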

* 6 pages, 3 figures

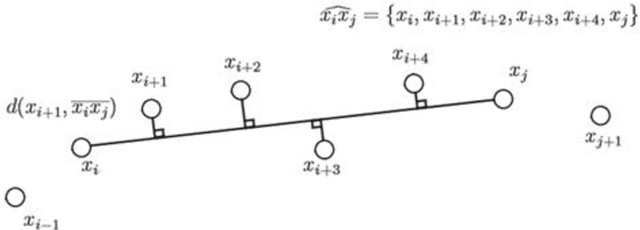

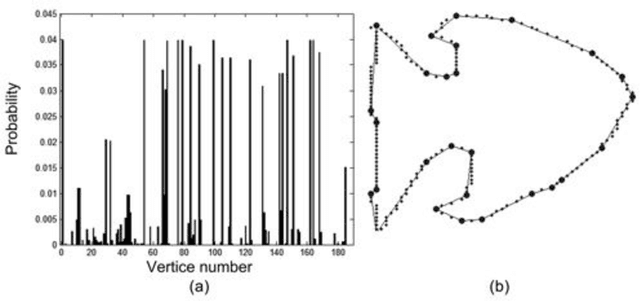

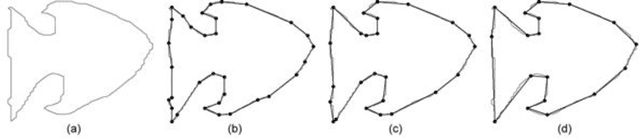

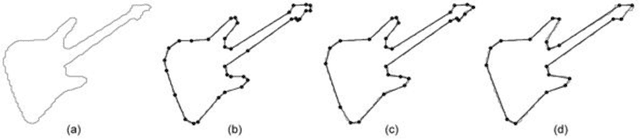

Contour polygonal approximation using shortest path in networks

Nov 18, 2013

Abstract: Contour polygonal approximation is a simplified representation of a contour by line segments, so that the main characteristics of the contour remain in a small number of line segments. This paper presents a novel method for polygonal approximation based on Complex Network theory. We convert each point of the contour into a vertex, so that we model a regular network. Then we transform this network into a Small-World Complex Network by applying some transformations over its edges. By analyzing network properties, especially the geodesic path, we compute the polygonal approximation. The paper presents the main characteristics of the method, as well as its functionality. We evaluate the proposed method using benchmark contours, and compare its results with other polygonal approximation methods.
