Odemir M. Bruno

Network classification through random walks

May 27, 2025

Abstract:Network models have been widely used to study diverse systems and analyze their dynamic behaviors. Given the structural variability of networks, an intriguing question arises: Can we infer the type of system represented by a network based on its structure? This classification problem involves extracting relevant features from the network. Existing literature has proposed various methods that combine structural measurements and dynamical processes for feature extraction. In this study, we introduce a novel approach to characterize networks using statistics from random walks, which can be particularly informative about network properties. We present the employed statistical metrics and compare their performance on multiple datasets with other state-of-the-art feature extraction methods. Our results demonstrate that the proposed method is effective in many cases, often outperforming existing approaches, although some limitations are observed across certain datasets.
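
As a rough illustration of how random-walk statistics can become network features, the sketch below simulates simple random walks and summarizes how much of the graph each walk covers. The abstract does not specify the statistics used in the paper, so the coverage measure, the function names `random_walk` and `walk_features`, and all parameter values are assumptions made for this example only.

```python
import random

def random_walk(adj, start, steps, rng):
    """Return the nodes visited by a simple (unbiased) random walk."""
    path = [start]
    node = start
    for _ in range(steps):
        neighbors = adj[node]
        if not neighbors:  # isolated node: the walk cannot move
            break
        node = rng.choice(neighbors)
        path.append(node)
    return path

def walk_features(adj, steps=20, walks_per_node=5, seed=0):
    """Mean and variance of the fraction of distinct nodes each walk visits.

    This coverage statistic is a hypothetical stand-in for the paper's metrics.
    """
    rng = random.Random(seed)
    coverages = []
    for start in adj:
        for _ in range(walks_per_node):
            path = random_walk(adj, start, steps, rng)
            coverages.append(len(set(path)) / len(adj))
    mean = sum(coverages) / len(coverages)
    var = sum((c - mean) ** 2 for c in coverages) / len(coverages)
    return [mean, var]

# Two toy graphs given as adjacency lists: a 6-node ring and a 6-node clique.
ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
complete = {i: [j for j in range(6) if j != i] for i in range(6)}
ring_features = walk_features(ring)
complete_features = walk_features(complete)
```

Feature vectors of this kind could then be passed to any standard classifier; which statistics discriminate best is what the paper evaluates.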

VORTEX: Challenging CNNs at Texture Recognition by using Vision Transformers with Orderless and Randomized Token Encodings

Mar 09, 2025

Abstract:Texture recognition has recently been dominated by ImageNet-pre-trained deep Convolutional Neural Networks (CNNs), with specialized modifications and feature engineering required to achieve state-of-the-art (SOTA) performance. However, although Vision Transformers (ViTs) were introduced a few years ago, little is known about their texture recognition ability. Therefore, in this work, we introduce VORTEX (ViTs with Orderless and Randomized Token Encodings for Texture Recognition), a novel method that enables the effective use of ViTs for texture analysis. VORTEX extracts multi-depth token embeddings from pre-trained ViT backbones and employs a lightweight module to aggregate hierarchical features and perform orderless encoding, obtaining a better image representation for texture recognition tasks. This approach allows seamless integration with any ViT with the common transformer architecture. Moreover, no fine-tuning of the backbone is performed, since they are used only as frozen feature extractors, and the features are fed to a linear SVM. We evaluate VORTEX on nine diverse texture datasets, demonstrating its ability to achieve or surpass SOTA performance in a variety of texture analysis scenarios. By bridging the gap between texture recognition with CNNs and transformer-based architectures, VORTEX paves the way for adopting emerging transformer foundation models. Furthermore, VORTEX demonstrates robust computational efficiency when coupled with ViT backbones compared to CNNs with similar costs. The method implementation and experimental scripts are publicly available in our online repository.

A Comparative Survey of Vision Transformers for Feature Extraction in Texture Analysis

Jun 10, 2024

Abstract:Texture, a significant visual attribute in images, has been extensively investigated across various image recognition applications. Convolutional Neural Networks (CNNs), which have been successful in many computer vision tasks, are currently among the best texture analysis approaches. On the other hand, Vision Transformers (ViTs) have been surpassing the performance of CNNs on tasks such as object recognition, causing a paradigm shift in the field. However, ViTs have so far not been scrutinized for texture recognition, hindering a proper appreciation of their potential in this specific setting. For this reason, this work explores various pre-trained ViT architectures when transferred to tasks that rely on textures. We review 21 different ViT variants and perform an extensive evaluation and comparison with CNNs and hand-engineered models on several tasks, such as assessing robustness to changes in texture rotation, scale, and illumination, and distinguishing color textures, material textures, and texture attributes. The goal is to understand the potential and differences among these models when directly applied to texture recognition, using pre-trained ViTs primarily for feature extraction and employing linear classifiers for evaluation. We also evaluate their efficiency, which is one of the main drawbacks in contrast to other methods. Our results show that ViTs generally outperform both CNNs and hand-engineered models, especially when using stronger pre-training and tasks involving in-the-wild textures (images from the internet). We highlight the following promising models: ViT-B with DINO pre-training, BeiTv2, and the Swin architecture, as well as the EfficientFormer as a low-cost alternative. In terms of efficiency, although having a higher number of GFLOPs and parameters, ViT-B and BeiT(v2) can achieve a lower feature extraction time on GPUs compared to ResNet50.

Advanced wood species identification based on multiple anatomical sections and using deep feature transfer and fusion

Apr 12, 2024

Abstract:In recent years, we have seen many advancements in wood species identification. Methods like DNA analysis, Near Infrared (NIR) spectroscopy, and Direct Analysis in Real Time (DART) mass spectrometry complement the long-established wood anatomical assessment of cell and tissue morphology. However, most of these methods have some limitations such as high costs, the need for skilled experts for data interpretation, and the lack of good datasets for professional reference. Therefore, most of these methods, and certainly the wood anatomical assessment, may benefit from tools based on Artificial Intelligence. In this paper, we apply two transfer learning techniques with Convolutional Neural Networks (CNNs) to a multi-view Congolese wood species dataset including sections from different orientations and viewed at different microscopic magnifications. We explore two feature extraction methods in detail, namely Global Average Pooling (GAP) and Random Encoding of Aggregated Deep Activation Maps (RADAM), for efficient and accurate wood species identification. Our results indicate superior accuracy on diverse datasets and anatomical sections, surpassing the results of other methods. Our proposal represents a significant advancement in wood species identification, offering a robust tool to support the conservation of forest ecosystems and promote sustainable forestry practices.

RADAM: Texture Recognition through Randomized Aggregated Encoding of Deep Activation Maps

Mar 08, 2023

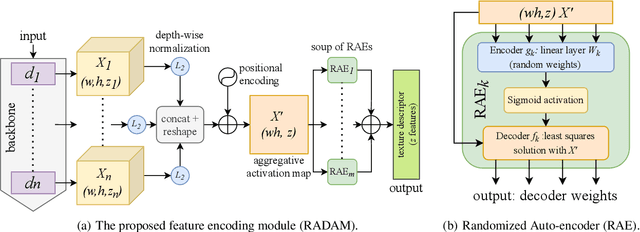

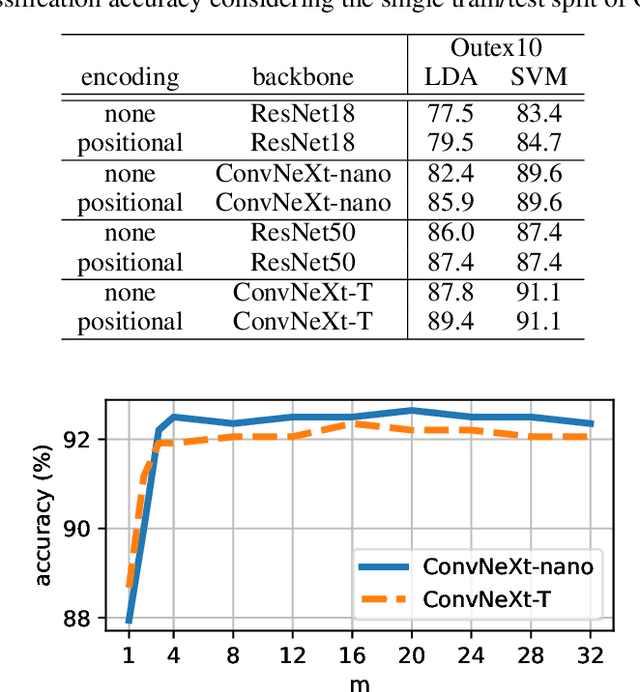

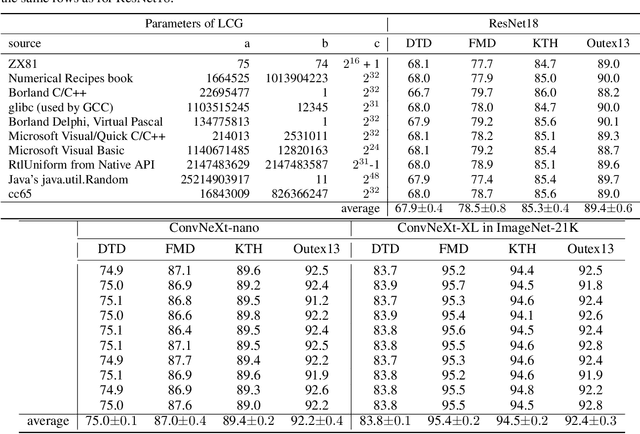

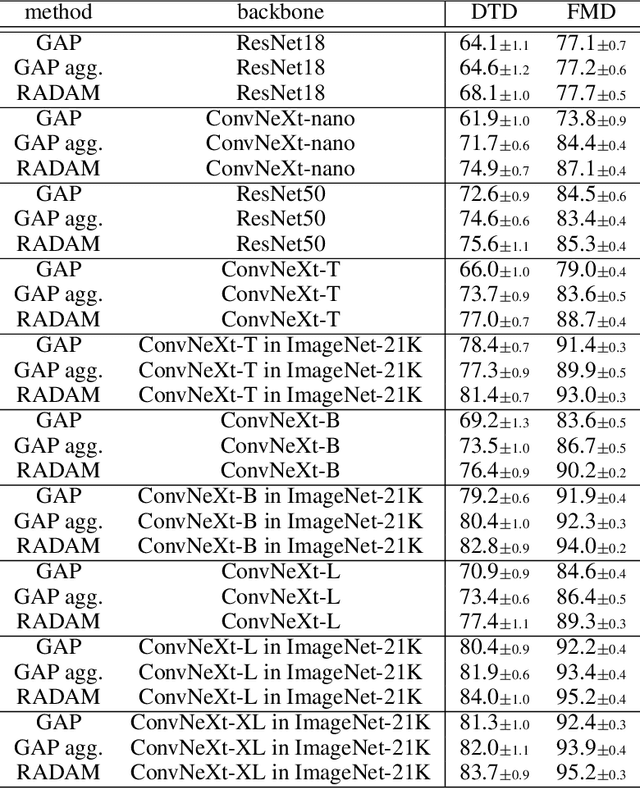

Abstract:Texture analysis is a classical yet challenging task in computer vision for which deep neural networks are actively being applied. Most approaches are based on building feature aggregation modules around a pre-trained backbone and then fine-tuning the new architecture on specific texture recognition tasks. Here we propose a new method named Random encoding of Aggregated Deep Activation Maps (RADAM) which extracts rich texture representations without ever changing the backbone. The technique consists of encoding the output at different depths of a pre-trained deep convolutional network using a Randomized Autoencoder (RAE). The RAE is trained locally to each image using a closed-form solution, and its decoder weights are used to compose a 1-dimensional texture representation that is fed into a linear SVM. This means that no fine-tuning or backpropagation is needed. We explore RADAM on several texture benchmarks and achieve state-of-the-art results with different computational budgets. Our results suggest that pre-trained backbones may not require additional fine-tuning for texture recognition if their learned representations are better encoded.
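
To make the idea of a randomized autoencoder trained in closed form more concrete, here is a minimal sketch using NumPy. It is not the RADAM implementation: the sigmoid activation, the ridge-regression solution, the hidden size, and the function name `rae_descriptor` are assumptions chosen for illustration, and a random array stands in for a real backbone activation map.

```python
import numpy as np

def rae_descriptor(activation_map, hidden=4, lam=1e-3, seed=0):
    """Encode one activation map (channels, height, width) as a 1-D vector.

    The encoder weights are random and fixed; only the decoder is fitted,
    using a ridge-regression closed form (no backpropagation).
    """
    z, h, w = activation_map.shape
    X = activation_map.reshape(z, h * w).T           # (pixels, channels)
    rng = np.random.default_rng(seed)
    W = rng.standard_normal((z, hidden))             # random encoder weights
    H = 1.0 / (1.0 + np.exp(-(X @ W)))               # hidden activations
    # Decoder weights minimizing ||H @ beta - X||^2 + lam * ||beta||^2
    beta = np.linalg.solve(H.T @ H + lam * np.eye(hidden), H.T @ X)
    return beta.ravel()                              # length hidden * channels

# Stand-in for a feature map taken from a frozen, pre-trained backbone.
fmap = np.random.default_rng(1).random((8, 5, 5))
descriptor = rae_descriptor(fmap)
```

Because the decoder is fitted separately for each image, the flattened weights act as an image-specific descriptor that a linear SVM could consume directly.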

Exploring ordered patterns in the adjacency matrix for improving machine learning on complex networks

Jan 20, 2023

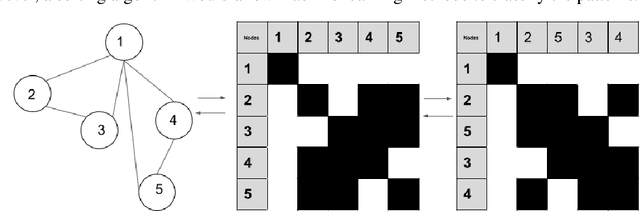

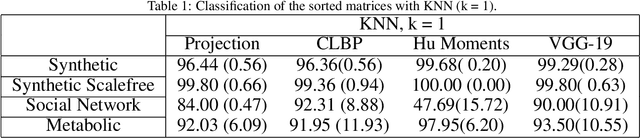

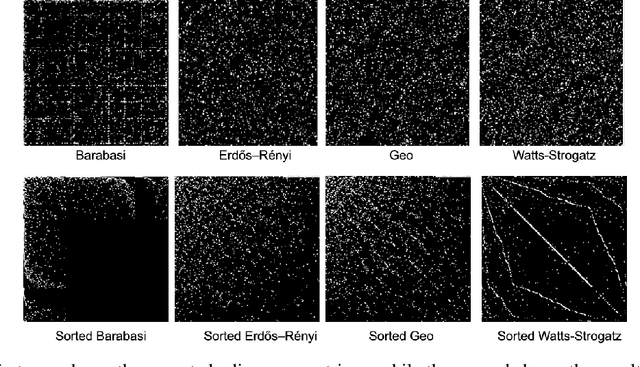

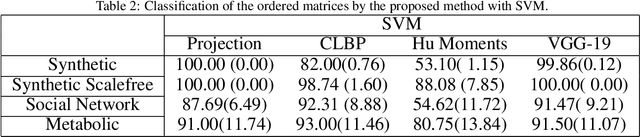

Abstract:The use of complex networks as a modern approach to understanding the world and its dynamics is well-established in the literature. The adjacency matrix, which provides a one-to-one representation of a complex network, can also yield several metrics of the graph. However, this representation is not unique, since permuting the rows and columns of the matrix yields a different matrix that represents the same graph. To address this issue, the proposed methodology employs a sorting algorithm to rearrange the elements of the adjacency matrix of a complex graph in a specific order. The resulting sorted adjacency matrix is then used as input for feature extraction and machine learning algorithms to classify the networks. The results indicate that the proposed methodology outperforms previous literature results on synthetic and real-world data.
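
The non-uniqueness of the adjacency matrix, and how a sorting step can reduce it, can be sketched as follows. The abstract does not say which ordering the paper uses, so sorting nodes by descending degree and the helper name `sort_by_degree` are assumptions. Note that degree sorting alone does not resolve ties in general; the star graph below is chosen because its tied nodes are interchangeable.

```python
import numpy as np

def sort_by_degree(A):
    """Reorder rows and columns so that nodes appear by descending degree."""
    order = np.argsort(-A.sum(axis=1), kind="stable")
    return A[np.ix_(order, order)]

# Star graph: node 0 is connected to nodes 1, 2 and 3.
A = np.array([[0, 1, 1, 1],
              [1, 0, 0, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 0]])

# Relabel the nodes: same graph, different adjacency matrix.
perm = [2, 0, 3, 1]
B = A[np.ix_(perm, perm)]

same_before = np.array_equal(A, B)
same_after = np.array_equal(sort_by_degree(A), sort_by_degree(B))
```

Here the two raw matrices differ while their sorted versions coincide, which is the property a downstream feature extractor would rely on.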

A Network Classification Method based on Density Time Evolution Patterns Extracted from Network Automata

Nov 18, 2022

Abstract:Network modeling has proven to be an efficient tool for many interdisciplinary areas, including social, biological, transport, and many other real-world complex systems. In addition, cellular automata (CA) are a formalism that has been studied in the last decades as a model for exploring patterns in the dynamic spatio-temporal behavior of these systems based on local rules. Some studies explore the use of cellular automata to analyze the dynamic behavior of networks, referring to them as network automata (NA). Recently, NA proved to be efficient for network classification, since they use a time-evolution pattern (TEP) for feature extraction. However, the TEPs explored by previous studies are composed of binary values, which do not capture detailed information about the analyzed network. Therefore, in this paper, we propose alternative sources of information to use as descriptors for the classification task, which we call density time-evolution pattern (D-TEP) and state density time-evolution pattern (SD-TEP). We explore the density of alive neighbors of each node, which is a continuous value, and compute feature vectors based on histograms of the TEPs. Our results show a significant improvement compared to previous studies on five synthetic network databases and seven real-world databases. Our proposed method is not only a good approach for pattern recognition in networks but also shows great potential for other kinds of data, such as images.
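
The density-based pattern described above can be sketched with NumPy as follows. The birth and survival intervals, the number of steps, and the function names `density_tep` and `histogram_features` are hypothetical choices for this example; the abstract does not state the rules or parameters used in the paper.

```python
import numpy as np

def density_tep(A, state, steps, born=(0.3, 0.6), survive=(0.2, 0.7)):
    """Record, at each step, the fraction of alive neighbors of every node.

    A is a 0/1 adjacency matrix and state a 0/1 vector (1 = alive).
    The life-like update rule below is an assumed example rule.
    """
    degree = np.maximum(A.sum(axis=1), 1)        # avoid division by zero
    rows = []
    for _ in range(steps):
        density = (A @ state) / degree           # continuous value in [0, 1]
        rows.append(density)
        alive = state == 1
        stays = alive & (density >= survive[0]) & (density <= survive[1])
        births = ~alive & (density >= born[0]) & (density <= born[1])
        state = (stays | births).astype(int)
    return np.array(rows)                        # shape (steps, nodes)

def histogram_features(tep, bins=10):
    """Normalized histogram of all density values in the pattern."""
    counts, _ = np.histogram(tep, bins=bins, range=(0.0, 1.0))
    return counts / counts.sum()

# Toy example: an 8-node ring with a random initial state.
n = 8
A = np.zeros((n, n), dtype=int)
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1
state0 = np.random.default_rng(0).integers(0, 2, size=n)
tep = density_tep(A, state0, steps=12)
features = histogram_features(tep)
```

Unlike a binary TEP, each entry of `tep` is a continuous density, which is why a histogram gives a richer summary than counting alive and dead states.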

Improving Deep Neural Network Random Initialization Through Neuronal Rewiring

Jul 17, 2022

Abstract:The deep learning literature is continuously updated with new architectures and training techniques. However, weight initialization is overlooked by most recent research, despite some intriguing findings regarding random weights. On the other hand, recent works have been approaching Network Science to understand the structure and dynamics of Artificial Neural Networks (ANNs) after training. Therefore, in this work, we analyze the centrality of neurons in randomly initialized networks. We show that a higher neuronal strength variance may decrease performance, while a lower neuronal strength variance usually improves it. A new method is then proposed to rewire neuronal connections according to a preferential attachment (PA) rule based on their strength, which significantly reduces the strength variance of layers initialized by common methods. In this sense, PA rewiring only reorganizes connections, while preserving the magnitude and distribution of the weights. We show through an extensive statistical analysis in image classification that performance is improved in most cases, both during training and testing, when using both simple and complex architectures and learning schedules. Our results show that, aside from the magnitude, the organization of the weights is also relevant for better initialization of deep ANNs.
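
The claim that reorganizing connections can change strength variance while leaving the weight distribution untouched can be checked numerically. The sketch below assumes that a neuron's strength is the sum of its incoming weights, and it uses a plain random shuffle as a stand-in for the paper's preferential attachment rule, whose details are not given in the abstract.

```python
import numpy as np

def strength_variance(W):
    """Variance of neuronal strength, taken here as the column sums of W."""
    return W.sum(axis=0).var()

rng = np.random.default_rng(0)
W = rng.normal(0.0, 0.1, size=(64, 32))      # (inputs, neurons), random init

# Reorganize connections without altering any weight value.
# NOTE: a random shuffle, not the preferential attachment rewiring itself.
rewired = rng.permutation(W.ravel()).reshape(W.shape)

same_distribution = np.array_equal(np.sort(W.ravel()), np.sort(rewired.ravel()))
variance_before = strength_variance(W)
variance_after = strength_variance(rewired)
```

A random shuffle changes the variance in no particular direction; the method in the paper chooses the reorganization so that the variance is reduced.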

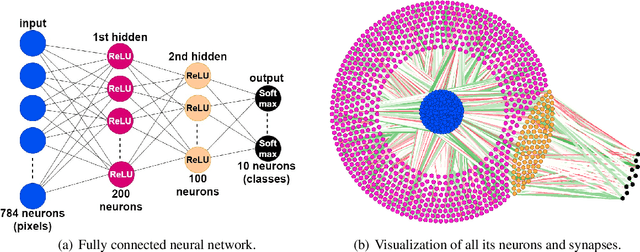

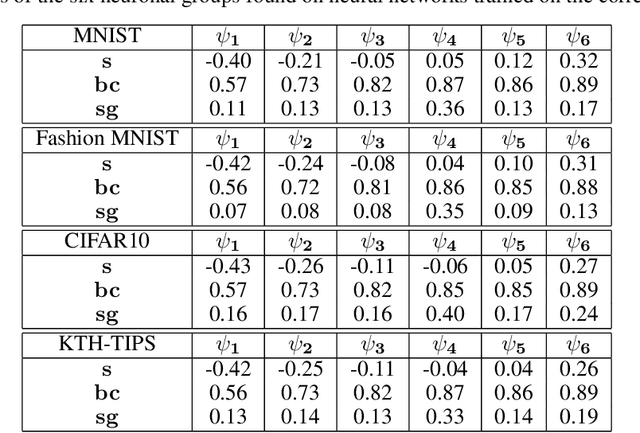

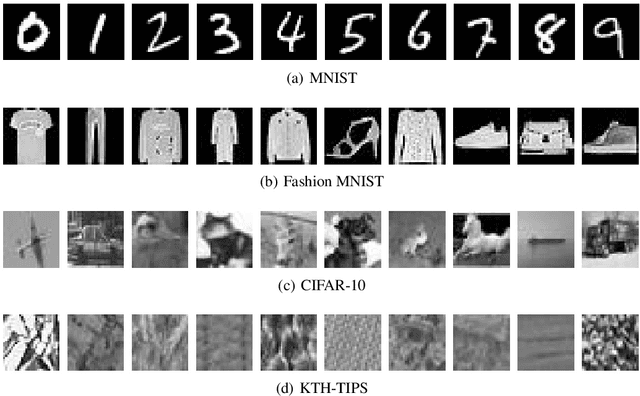

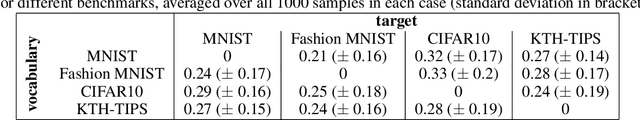

Structure and Performance of Fully Connected Neural Networks: Emerging Complex Network Properties

Jul 29, 2021

Abstract:Understanding the behavior of Artificial Neural Networks has recently become one of the main topics in the field, as black-box approaches have become common with the widespread adoption of deep learning. Such high-dimensional models may manifest instabilities and unusual properties that resemble complex systems. Therefore, we propose Complex Network (CN) techniques to analyze the structure and performance of fully connected neural networks. For that, we build a dataset with 4 thousand models and their respective CN properties. They are employed in a supervised classification setup considering four vision benchmarks. Each neural network is approached as a weighted and undirected graph of neurons and synapses, and centrality measures are computed after training. Results show that these measures are highly related to the network classification performance. We also propose the concept of Bag-Of-Neurons (BoN), a CN-based approach for finding topological signatures linking similar neurons. Results suggest that six neuronal types emerge in such networks, independently of the target domain, and are distributed differently according to classification accuracy. We also tackle specific CN properties related to performance, such as higher subgraph centrality on lower-performing models. Our findings suggest that CN properties play a critical role in the performance of fully connected neural networks, with topological patterns emerging independently across a wide range of models.

Learning Local Complex Features using Randomized Neural Networks for Texture Analysis

Jul 10, 2020

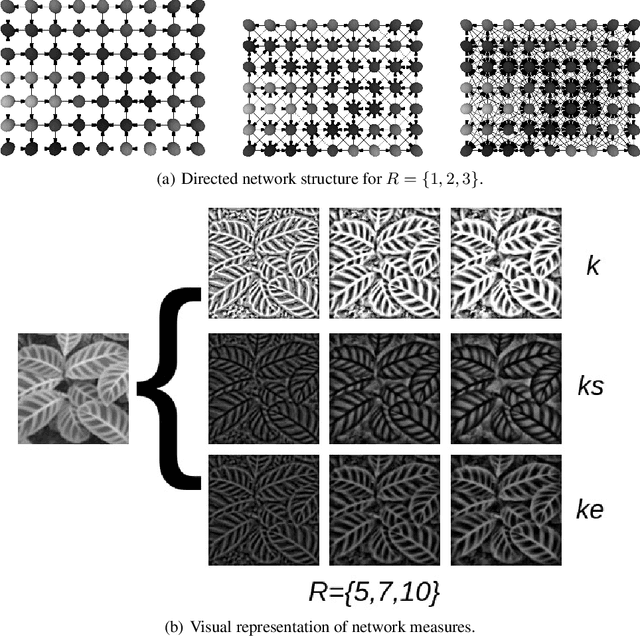

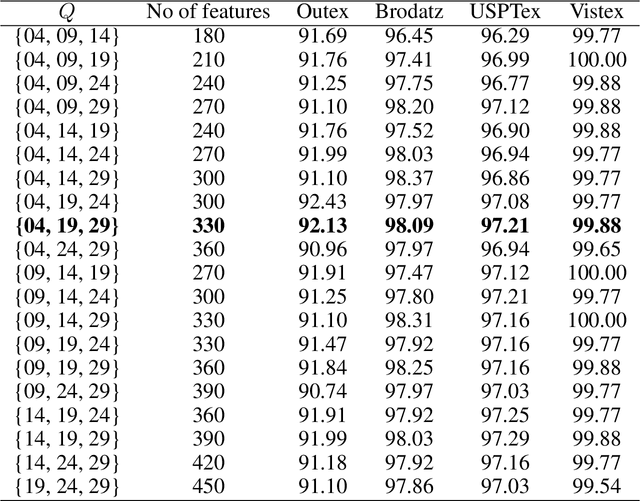

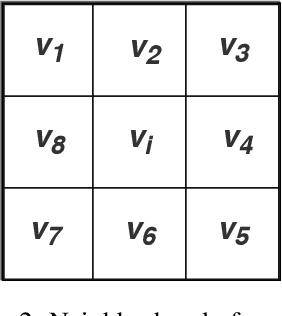

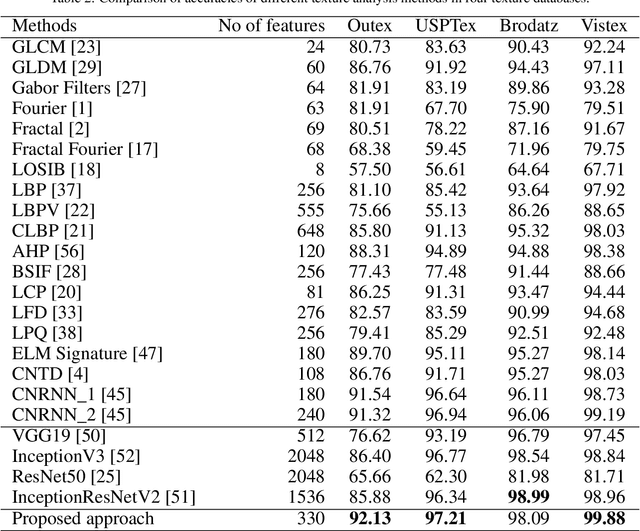

Abstract:Texture is a visual attribute widely used in many image analysis problems. Currently, many methods that use learning techniques have been proposed for texture discrimination, achieving improved performance over previous handcrafted methods. In this paper, we present a new approach that combines a learning technique and Complex Network (CN) theory for texture analysis. This method takes advantage of the representation capacity of CN to model a texture image as a directed network and uses the topological information of vertices to train a randomized neural network. This neural network has a single hidden layer and uses a fast learning algorithm, which is able to learn local CN patterns for texture characterization. Thus, we use the weights of the trained neural network to compose a feature vector. These feature vectors are evaluated in a classification experiment on four widely used image databases. Experimental results show a high classification performance of the proposed method when compared to other methods, indicating that our approach can be used in many image analysis problems.
