Kallil M. C. Zielinski

A Network Classification Method based on Density Time Evolution Patterns Extracted from Network Automata

Nov 18, 2022

Abstract: Network modeling has proven to be an efficient tool for many interdisciplinary areas, including social, biological, transport, and many other real-world complex systems. In addition, cellular automata (CA) are a formalism that has been studied in recent decades as a model for exploring patterns in the dynamic spatio-temporal behavior of these systems based on local rules. Some studies explore the use of cellular automata to analyze the dynamic behavior of networks, calling them network automata (NA). Recently, NA proved to be efficient for network classification, because they use a time-evolution pattern (TEP) for feature extraction. However, the TEPs explored by previous studies are composed of binary values, which do not capture detailed information about the network analyzed. Therefore, in this paper, we propose alternative sources of information to use as descriptors for the classification task, which we call the density time-evolution pattern (D-TEP) and the state density time-evolution pattern (SD-TEP). We explore the density of alive neighbors of each node, which is a continuous value, and compute feature vectors based on histograms of the TEPs. Our results show a significant improvement compared to previous studies on five synthetic network databases and seven real-world databases. Our proposed method not only demonstrates a good approach for pattern recognition in networks, but also shows great potential for other kinds of data, such as images.
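The idea of recording the density of alive neighbors instead of binary states can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `evolve` and `histogram`, the density-range update rule, and the bin count are all assumptions made for illustration only.

```python
from typing import List, Sequence, Tuple


def evolve(
    adj: Sequence[Sequence[int]],
    state: Sequence[int],
    steps: int,
    born_range: Tuple[float, float],
    survive_range: Tuple[float, float],
) -> Tuple[List[List[int]], List[List[float]]]:
    """Run a hypothetical life-like rule on a network.

    Returns (tep, dtep): the binary states and the alive-neighbor
    densities recorded at each time step.
    """
    current = list(state)
    tep: List[List[int]] = []
    dtep: List[List[float]] = []
    for _ in range(steps):
        # Density = fraction of a node's neighbors that are alive (0.0 if isolated).
        density = [
            sum(current[j] for j in nbrs) / len(nbrs) if nbrs else 0.0
            for nbrs in adj
        ]
        tep.append(list(current))
        dtep.append(density)
        # Assumed rule: a dead node is born, and an alive node survives,
        # when its density falls inside the corresponding closed range.
        nxt = []
        for s, d in zip(current, density):
            lo, hi = survive_range if s else born_range
            nxt.append(1 if lo <= d <= hi else 0)
        current = nxt
    return tep, dtep


def histogram(values: Sequence[float], bins: int) -> List[float]:
    """Normalized histogram of values in [0, 1], usable as a feature vector."""
    counts = [0] * bins
    for v in values:
        counts[min(int(v * bins), bins - 1)] += 1
    return [c / len(values) for c in counts]


# Usage: a 4-node cycle with one alive node.
adj = [[1, 3], [0, 2], [1, 3], [2, 0]]
tep, dtep = evolve(adj, [1, 0, 0, 0], steps=3,
                   born_range=(0.4, 0.6), survive_range=(0.4, 1.0))
features = histogram([d for row in dtep for d in row], bins=4)
```

Here `tep` holds the binary pattern and `dtep` the continuous one; the histogram over `dtep` is one possible way to turn the pattern into a fixed-length descriptor.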

Concept and the implementation of a tool to convert industry 4.0 environments modeled as FSM to an OpenAI Gym wrapper

Jun 29, 2020

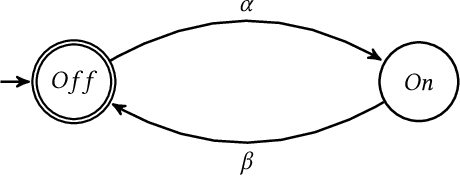

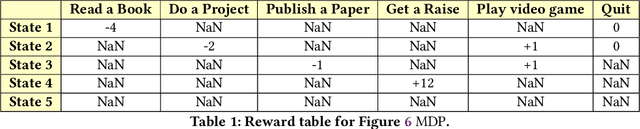

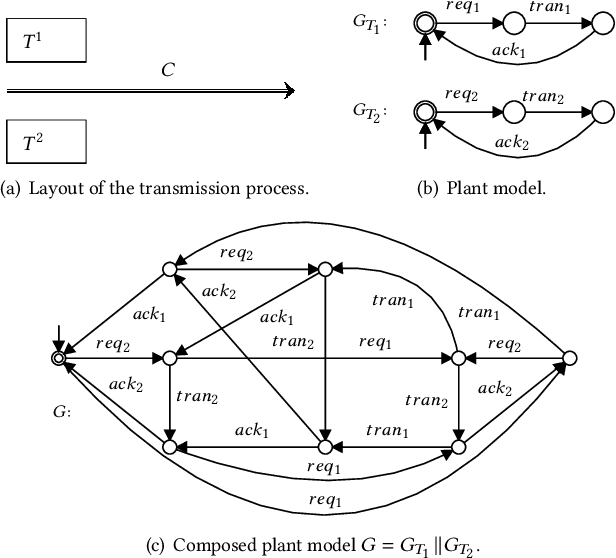

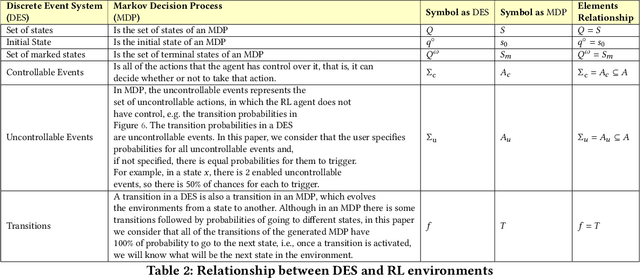

Abstract: Industry 4.0 systems have a high demand for optimization in their tasks, whether to minimize cost, maximize production, or synchronize their actuators to finish or speed up the manufacture of a product. These challenges make industrial environments a suitable scenario for applying modern reinforcement learning (RL) concepts. The main difficulty, however, is the lack of such industrial environments. To address this, this work presents the concept and the implementation of a tool that converts any dynamic system modeled as a finite-state machine (FSM) to the open-source Gym wrapper. After that, it is possible to employ any RL method to optimize any desired task. In the first tests of the proposed tool, we show traditional Q-learning and Deep Q-learning methods running over two simple environments.
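A minimal sketch of what wrapping an FSM in a Gym-style interface could look like is given below. It is not the tool described in the abstract: the class `FSMEnv`, its constructor arguments, and the invalid-action penalty are hypothetical, and the class only mimics the classic Gym `reset`/`step` convention without importing the `gym` package.

```python
from typing import Dict, Hashable, Set, Tuple

Transition = Tuple[Hashable, Hashable]  # (state, action)


class FSMEnv:
    """Hypothetical Gym-style environment built from an FSM transition table."""

    def __init__(
        self,
        transitions: Dict[Transition, Hashable],
        rewards: Dict[Transition, float],
        initial: Hashable,
        terminal: Set[Hashable],
        invalid_penalty: float = -1.0,
    ) -> None:
        self.transitions = transitions
        self.rewards = rewards
        self.initial = initial
        self.terminal = terminal
        self.invalid_penalty = invalid_penalty
        self.state = initial

    def reset(self) -> Hashable:
        """Return the FSM to its initial state and report it as the observation."""
        self.state = self.initial
        return self.state

    def step(self, action: Hashable):
        """Apply an action; returns (observation, reward, done, info)."""
        key = (self.state, action)
        if key not in self.transitions:
            # Assumption: an undefined transition leaves the state unchanged
            # and is penalized, so the agent learns to avoid it.
            return self.state, self.invalid_penalty, False, {"valid": False}
        self.state = self.transitions[key]
        reward = self.rewards.get(key, 0.0)
        done = self.state in self.terminal
        return self.state, reward, done, {"valid": True}


# Usage: a toy machine that must be started before it can finish a part.
env = FSMEnv(
    transitions={("idle", "start"): "working", ("working", "finish"): "done"},
    rewards={("working", "finish"): 10.0},
    initial="idle",
    terminal={"done"},
)
obs = env.reset()
obs, reward, done, info = env.step("start")
```

Because the interface returns the usual `(observation, reward, done, info)` tuple, a tabular Q-learning loop could interact with it in the same way it would with a standard Gym environment.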
