Dakai Zhu

Validating LLM-Generated Programs with Metamorphic Prompt Testing

Jun 11, 2024

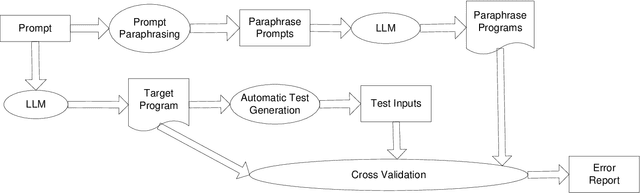

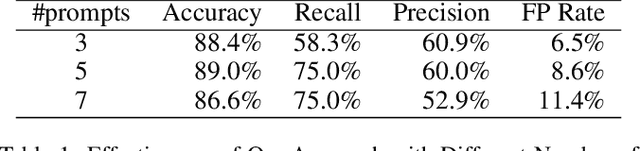

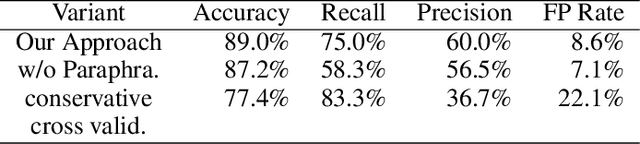

Abstract: The latest paradigm shift in software development brings the innovation and automation afforded by Large Language Models (LLMs), exemplified by the Generative Pre-trained Transformer (GPT), which has shown a remarkable capacity to generate code autonomously, significantly reducing the manual effort required for various programming tasks. Although the potential benefits of LLM-generated code are vast, most notably in efficiency and rapid prototyping, complex and multifaceted challenges arise as LLMs become increasingly integrated into the software development lifecycle, and hence the supply chain: the code generated by these models raises profound questions about quality and correctness. Research is required to comprehensively explore these critical concerns surrounding LLM-generated code. In this paper, we propose a novel solution called metamorphic prompt testing to address these challenges. Our intuitive observation is that intrinsic consistency always exists among correct code pieces but may not exist among flawed code pieces, so we can detect flaws in the code by detecting inconsistencies. Therefore, we can paraphrase a given prompt into multiple prompts, ask the LLM to generate a version of the code for each, and then cross-validate whether the semantic relations still hold among the acquired versions. Our evaluation on HumanEval shows that metamorphic prompt testing is able to detect 75 percent of the erroneous programs generated by GPT-4, with a false positive rate of 8.6 percent.
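The cross-validation idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify how paraphrases are produced or how agreement is scored, so the helper names (`outputs_on`, `flag_inconsistent`), the `min_agreement` threshold, and the hand-written stand-ins for LLM-generated programs are all assumptions made for illustration.

```python
from typing import Any, Callable, Iterable, List, Sequence


def outputs_on(program: Callable[[Any], Any], inputs: Iterable[Any]) -> List[Any]:
    """Run a program on each input; record an exception as a sentinel value."""
    results = []
    for x in inputs:
        try:
            results.append(program(x))
        except Exception as exc:  # a crash counts as an observable behavior
            results.append(("error", type(exc).__name__))
    return results


def flag_inconsistent(
    target: Callable[[Any], Any],
    variants: Sequence[Callable[[Any], Any]],
    inputs: Iterable[Any],
    min_agreement: float = 0.5,  # hypothetical threshold, not from the paper
) -> bool:
    """Return True if too few paraphrase-derived variants agree with the target."""
    if not variants:
        raise ValueError("need at least one variant program to cross-validate")
    inputs = list(inputs)
    expected = outputs_on(target, inputs)
    agreeing = sum(outputs_on(v, inputs) == expected for v in variants)
    return agreeing / len(variants) < min_agreement


# Stand-ins for programs an LLM might return for paraphrases of
# "return the sum of the even numbers in a list" (hypothetical task).
def sum_even_a(xs):
    return sum(x for x in xs if x % 2 == 0)


def sum_even_b(xs):
    total = 0
    for x in xs:
        if x % 2 == 0:
            total += x
    return total


def sum_even_buggy(xs):
    return sum(x for x in xs if x % 2 == 1)  # bug: sums the odd numbers


test_inputs = [[1, 2, 3, 4], [], [2, 2], [5, 7]]
print(flag_inconsistent(sum_even_buggy, [sum_even_a, sum_even_b], test_inputs))
print(flag_inconsistent(sum_even_a, [sum_even_b, sum_even_a], test_inputs))
```

In a real setting the variants would come from the LLM itself, and correlated mistakes across paraphrases would go undetected, which is consistent with the abstract reporting a detection rate below 100 percent.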

Testing learning-enabled cyber-physical systems with Large-Language Models: A Formal Approach

Nov 13, 2023

Abstract: The integration of machine learning (ML) into cyber-physical systems (CPS) offers significant benefits, including enhanced efficiency, predictive capabilities, real-time responsiveness, and the enabling of autonomous operations. This convergence has accelerated the development and deployment of a range of real-world applications, such as autonomous vehicles, delivery drones, service robots, and telemedicine procedures. However, the software development life cycle (SDLC) for AI-infused CPS diverges significantly from traditional approaches, featuring data and learning as two critical components. Existing verification and validation techniques are often inadequate for these new paradigms. In this study, we pinpoint the main challenges in ensuring formal safety for learning-enabled CPS. We begin by examining testing as the most pragmatic method for verification and validation, summarizing the current state-of-the-art methodologies. Recognizing the limitations in current testing approaches to provide formal safety guarantees, we propose a roadmap to transition from foundational probabilistic testing to a more rigorous approach capable of delivering formal assurance.

PPG-based Heart Rate Estimation with Efficient Sensor Sampling and Learning Models

Mar 23, 2023

Abstract: Recent studies showed that Photoplethysmography (PPG) sensors embedded in wearable devices can estimate heart rate (HR) with high accuracy. However, despite prior research efforts, applying PPG-sensor-based HR estimation to embedded devices still faces challenges due to the energy-intensive high-frequency PPG sampling and the resource-intensive machine-learning models. In this work, we aim to explore HR estimation techniques that are more suitable for lower-power and resource-constrained embedded devices. More specifically, we seek to design techniques that could provide high-accuracy HR estimation with low-frequency PPG sampling, small model size, and fast inference time. First, we show that by combining signal processing and ML, it is possible to reduce the PPG sampling frequency from 125 Hz to only 25 Hz while providing higher HR estimation accuracy. This combination also helps to reduce the ML model feature size, leading to smaller models. Additionally, we present a comprehensive analysis of different ML models and feature sizes to compare their accuracy, model size, and inference time. The models explored include Decision Tree (DT), Random Forest (RF), K-nearest neighbor (KNN), Support vector machines (SVM), and Multi-layer perceptron (MLP). Experiments were conducted using both a widely utilized dataset and our self-collected dataset. The experimental results show that our method, which combines signal processing and ML, had only 5% error for HR estimation using low-frequency PPG data. Moreover, our analysis showed that DT models with 10 to 20 input features usually have good accuracy, while being several orders of magnitude smaller in model size and faster in inference time.
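To make the signal-processing half of this idea concrete, the sketch below estimates HR from a synthetic signal sampled at 25 Hz by scanning a plausible heart-rate band for the strongest spectral component. It is an illustration only, not the paper's pipeline: the function name `estimate_hr_bpm`, the 30 to 210 bpm search band, and the synthetic test signal are assumptions, and the ML stage described in the abstract is omitted.

```python
import math


def estimate_hr_bpm(samples, fs_hz, lo_bpm=30, hi_bpm=210):
    """Return the bpm (1-bpm grid) with the largest spectral power in the band."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    best_bpm, best_power = lo_bpm, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        # Angular frequency in radians per sample for this candidate rate.
        w = 2.0 * math.pi * (bpm / 60.0) / fs_hz
        re = sum(c * math.cos(w * i) for i, c in enumerate(centered))
        im = sum(c * math.sin(w * i) for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic stand-in for PPG: a 1.2 Hz pulse (72 bpm) with a weaker second
# harmonic and a constant offset, sampled at 25 Hz for 30 seconds.
fs = 25
signal = [
    math.sin(2 * math.pi * 1.2 * i / fs)
    + 0.3 * math.sin(2 * math.pi * 2.4 * i / fs)
    + 0.5
    for i in range(30 * fs)
]
print(estimate_hr_bpm(signal, fs))
```

A spectral estimate like this could serve as one compact input feature for a small model such as a decision tree, which is one plausible reading of how signal processing can shrink the feature set; real PPG data would also need motion-artifact handling that this sketch does not attempt.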

Efficient and Direct Inference of Heart Rate Variability using Both Signal Processing and Machine Learning

Mar 23, 2023

Abstract: Heart Rate Variability (HRV) measures the variation of the time between consecutive heartbeats and is a major indicator of physical and mental health. Recent research has demonstrated that photoplethysmography (PPG) sensors can be used to infer HRV. However, many prior studies had high errors because they employed only signal processing or only machine learning (ML), because they inferred HRV indirectly, or because large training datasets were lacking. Many prior studies may also require large ML models. The low accuracy and large model sizes limit their applications to small embedded devices and potential future use in healthcare. To address the above issues, we first collected a large dataset of PPG signals and HRV ground truth. With this dataset, we developed HRV models that combine signal processing and ML to directly infer HRV. Evaluation results show that our method had errors between 3.5% and 25.7% and outperformed signal-processing-only and ML-only methods. We also explored different ML models, which showed that Decision Trees and Multi-layer Perceptrons have 13.0% and 9.1% errors on average, with model sizes of at most hundreds of KB and inference times under 1 ms. Hence, they are more suitable for small embedded devices and potentially enable the future use of PPG-based HRV monitoring in healthcare.
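As a concrete reading of "variation of the time between consecutive heartbeats", the sketch below computes two widely used time-domain HRV statistics, SDNN and RMSSD, from a list of inter-beat intervals. The abstract does not say which HRV metrics the authors infer, so the choice of these two, the function names, and the sample intervals are assumptions for illustration.

```python
import math
import statistics


def sdnn_ms(ibi_ms):
    """Sample standard deviation of the inter-beat intervals, in milliseconds."""
    return statistics.stdev(ibi_ms)


def rmssd_ms(ibi_ms):
    """Root mean square of successive interval differences, in milliseconds."""
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical inter-beat intervals in milliseconds (about 75 beats per minute).
intervals = [800, 810, 790, 805, 795]
print(round(sdnn_ms(intervals), 2), round(rmssd_ms(intervals), 2))
```

Both functions need at least two intervals. In a PPG setting the intervals themselves must first be recovered from detected pulse peaks, which is where estimation error typically enters.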
