Chuanwei Zhou

SGFeat: Salient Geometric Feature for Point Cloud Registration

Sep 12, 2023

Abstract: Point Cloud Registration (PCR) is a critical and challenging task in computer vision. One of the primary difficulties in PCR is identifying salient and meaningful points that exhibit consistent semantic and geometric properties across different scans. Previous methods have encountered challenges with ambiguous matching due to the similarity among patch blocks throughout the entire point cloud and the lack of consideration for efficient global geometric consistency. To address these issues, we propose a new framework that includes several novel techniques. Firstly, we introduce a semantic-aware geometric encoder that combines object-level and patch-level semantic information. This encoder significantly improves registration recall by reducing ambiguity in patch-level superpoint matching. Additionally, we incorporate a prior knowledge approach that utilizes an intrinsic shape signature to identify salient points. This enables us to extract the most salient superpoints and meaningful dense points in the scene. Secondly, we introduce an innovative transformer that encodes High-Order (HO) geometric features. These features are crucial for identifying salient points within initial overlap regions while considering global high-order geometric consistency. To optimize this high-order transformer further, we introduce an anchor node selection strategy. By encoding inter-frame triangle or polyhedron consistency features based on these anchor nodes, we can effectively learn high-order geometric features of salient superpoints. These high-order features are then propagated to dense points and utilized by a Sinkhorn matching module to identify key correspondences for successful registration. In our experiments conducted on well-known datasets such as 3DMatch/3DLoMatch and KITTI, our approach has shown promising results, highlighting the effectiveness of our novel method.
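The abstract mentions a Sinkhorn matching module but gives no details. As a rough illustration of the general idea only, the sketch below alternately normalizes the rows and columns of a square similarity matrix and then keeps mutual best matches. The function names, the temperature `eps`, the iteration count, and the mutual-best rule are assumptions made for this example; the sketch does not handle unmatched points and is not the SGFeat implementation.

```python
import math


def sinkhorn(scores, n_iters=50, eps=0.1):
    """Scale a square score matrix toward a doubly stochastic matrix.

    `eps` is a hypothetical temperature: smaller values give sharper matches.
    """
    # Turn scores into strictly positive weights (shifted for numerical stability).
    top = max(max(row) for row in scores)
    weights = [[math.exp((s - top) / eps) for s in row] for row in scores]
    for _ in range(n_iters):
        # Row normalization: every row sums to 1.
        weights = [[v / sum(row) for v in row] for row in weights]
        # Column normalization: every column sums to 1.
        col_sums = [sum(row[j] for row in weights) for j in range(len(weights[0]))]
        weights = [[row[j] / col_sums[j] for j in range(len(row))] for row in weights]
    return weights


def mutual_matches(plan):
    """Keep (i, j) pairs where i and j are each other's highest-weight partner."""
    row_best = [max(range(len(row)), key=row.__getitem__) for row in plan]
    col_best = [
        max(range(len(plan)), key=lambda i: plan[i][j]) for j in range(len(plan[0]))
    ]
    return [(i, j) for i, j in enumerate(row_best) if col_best[j] == i]
```

Calling `mutual_matches(sinkhorn(scores))` on a similarity matrix between two sets of point features returns index pairs that could serve as candidate correspondences. With a small `eps` the row sums converge slowly, so the result is only approximately doubly stochastic after a fixed number of iterations.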

Structure-Sensitive Graph Dictionary Embedding for Graph Classification

Jun 18, 2023

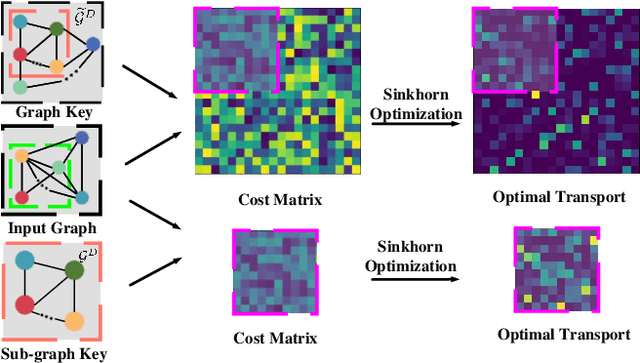

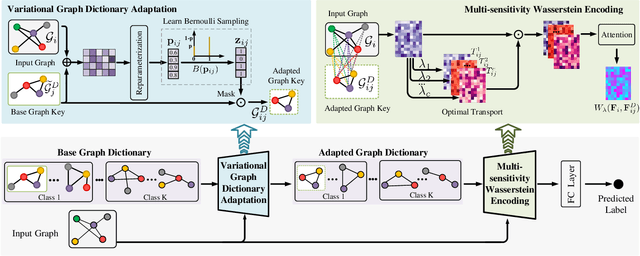

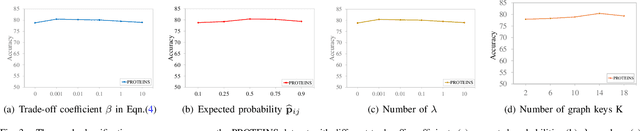

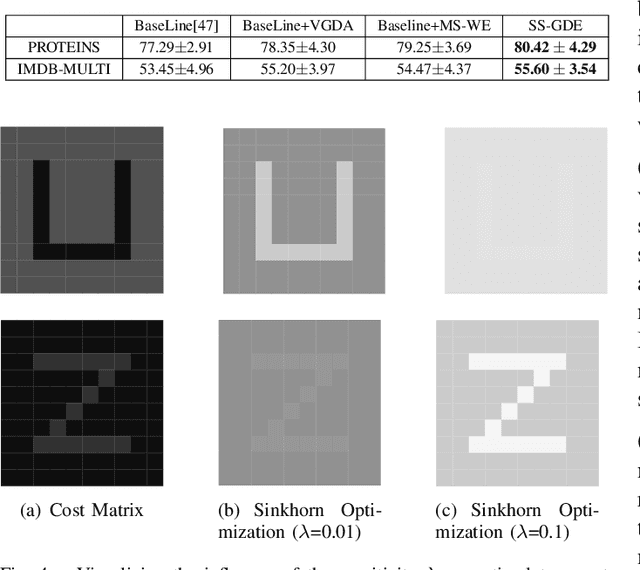

Abstract: Graph structure expression plays a vital role in distinguishing various graphs. In this work, we propose a Structure-Sensitive Graph Dictionary Embedding (SS-GDE) framework to transform input graphs into the embedding space of a graph dictionary for the graph classification task. Instead of using a base graph dictionary as-is, we propose variational graph dictionary adaptation (VGDA) to generate a personalized dictionary (named the adapted graph dictionary) that caters to each input graph. In particular, for the adaptation, Bernoulli sampling is introduced to adjust substructures of base graph keys according to each input, which greatly increases the expression capacity of the base dictionary. To make cross-graph measurement sensitive as well as stable, multi-sensitivity Wasserstein encoding is proposed to produce the embeddings by designing multi-scale attention on optimal transport. To optimize the framework, we introduce mutual information as the objective, which further leads to variational inference of the adapted graph dictionary. We evaluate SS-GDE on multiple graph classification datasets, and the experimental results demonstrate its effectiveness and superiority over state-of-the-art methods.
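To make the Bernoulli sampling step more concrete, here is a minimal sketch of one way a base graph key could be adapted: each node is kept with its own probability, and the induced subgraph is returned. The function name `adapt_graph_key`, the per-node `keep_probs`, and the choice to sample nodes rather than edges are all assumptions for illustration. In the described framework the probabilities would presumably depend on the input graph and be learned, which this sketch does not attempt.

```python
import random


def adapt_graph_key(edges, keep_probs, rng):
    """Sample a node mask and return the induced subgraph of a base graph key.

    edges:      list of (u, v) pairs describing the base graph key
    keep_probs: dict mapping node -> probability of keeping it (hypothetical input)
    rng:        a random.Random instance, passed in for reproducibility
    """
    # One independent Bernoulli draw per node.
    kept = {node for node, p in keep_probs.items() if rng.random() < p}
    # Keep only the edges whose two endpoints both survived the sampling.
    kept_edges = [(u, v) for u, v in edges if u in kept and v in kept]
    return kept, kept_edges


if __name__ == "__main__":
    base_edges = [(0, 1), (1, 2), (2, 3)]
    probs = {0: 0.9, 1: 0.9, 2: 0.2, 3: 0.9}
    print(adapt_graph_key(base_edges, probs, random.Random(0)))
```

Sampling a different mask for each input graph yields a different substructure of the same base key, which is one way to read the claim that adaptation enlarges the expression capacity of a fixed dictionary.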

Localizing Anomalies from Weakly-Labeled Videos

Aug 20, 2020

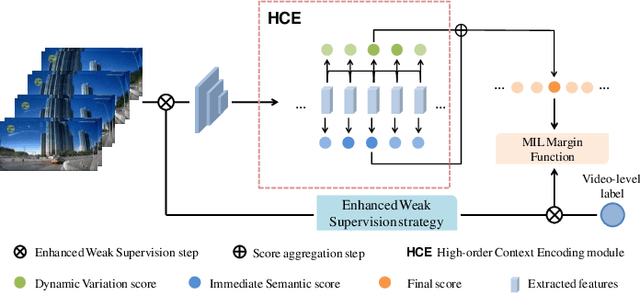

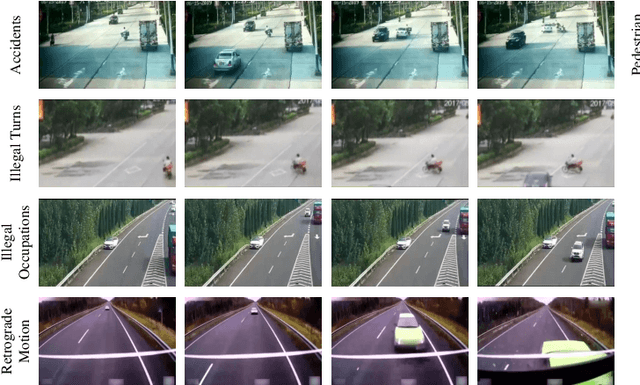

Abstract: Video anomaly detection under video-level labels is currently a challenging task. Previous works have made progress on discriminating whether a video sequence contains anomalies. However, most of them fail to accurately localize the anomalous events within videos in the temporal domain. In this paper, we propose a Weakly Supervised Anomaly Localization (WSAL) method focusing on temporally localizing anomalous segments within anomalous videos. Inspired by the appearance difference in anomalous videos, the evolution of adjacent temporal segments is evaluated for the localization of anomalous segments. To this end, a high-order context encoding model is proposed to not only extract semantic representations but also measure the dynamic variations so that the temporal context can be effectively utilized. In addition, in order to fully utilize the spatial context information, the immediate semantics are directly derived from the segment representations. The dynamic variations and the immediate semantics are efficiently aggregated to obtain the final anomaly scores. An enhancement strategy is further proposed to deal with noise interference and the absence of localization guidance in anomaly detection. Moreover, to meet the diversity requirement for anomaly detection benchmarks, we also collect a new traffic anomaly detection (TAD) dataset, which focuses on traffic conditions and differs greatly from the currently popular anomaly detection evaluation benchmarks. Extensive experiments are conducted to verify the effectiveness of different components, and our proposed method achieves new state-of-the-art performance on the UCF-Crime and TAD datasets.
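The idea of evaluating how adjacent temporal segments evolve can be illustrated with a toy example. The sketch below measures the L2 distance between consecutive segment feature vectors and flags segments where that distance exceeds a threshold. The function names, the distance measure, and the fixed threshold are assumptions for this example; it leaves out the learned high-order context encoding, the immediate semantics, and the enhancement strategy, so it is not the WSAL model.

```python
import math


def dynamic_variation(features):
    """L2 distance between each segment feature and the one before it."""
    variations = [0.0]  # the first segment has no predecessor
    for prev, cur in zip(features, features[1:]):
        variations.append(math.sqrt(sum((c - p) ** 2 for p, c in zip(prev, cur))))
    return variations


def localize(features, threshold):
    """Return indices of segments whose variation exceeds the threshold."""
    return [i for i, v in enumerate(dynamic_variation(features)) if v > threshold]


if __name__ == "__main__":
    # Four toy segment features; the content changes abruptly at segment 2.
    segments = [[0.0, 0.0], [0.0, 0.0], [3.0, 4.0], [3.0, 4.0]]
    print(dynamic_variation(segments))
    print(localize(segments, threshold=1.0))
```

A difference-based score like this only marks the onset of a change, which suggests why the abstract pairs dynamic variations with per-segment semantics when computing final anomaly scores.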
