Christopher Thierauf

FLEX: A Framework for Learning Robot-Agnostic Force-based Skills Involving Sustained Contact Object Manipulation

Mar 17, 2025

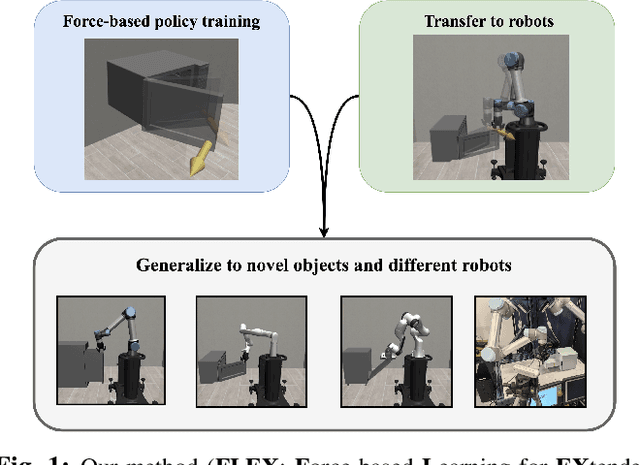

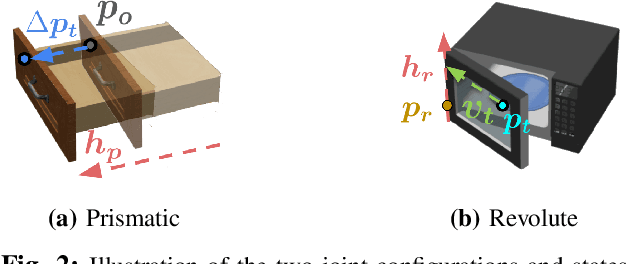

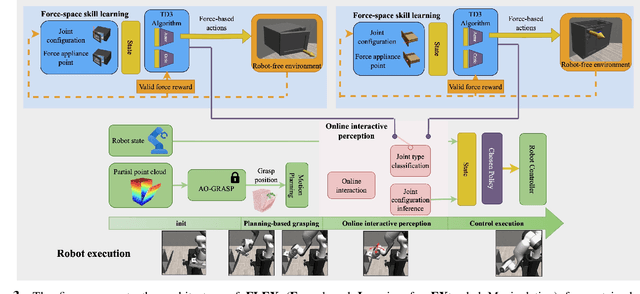

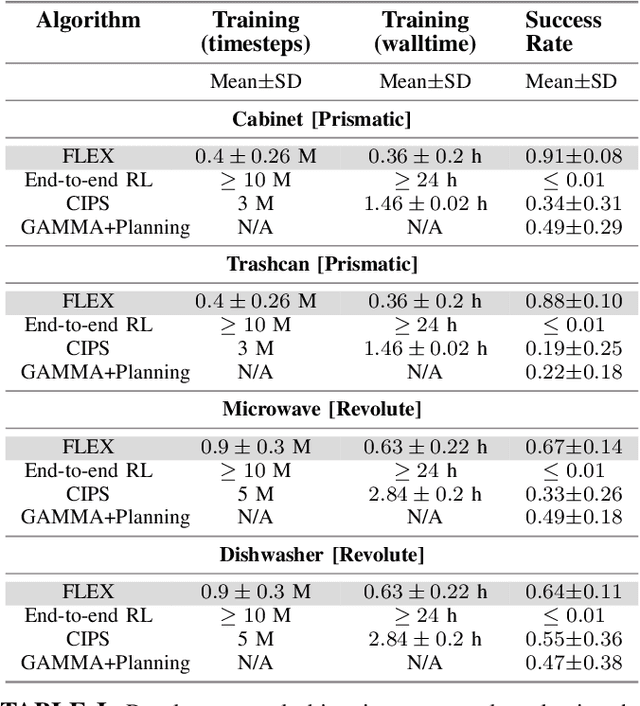

Abstract: Learning to manipulate objects efficiently, particularly those involving sustained contact (e.g., pushing, sliding) and articulated parts (e.g., drawers, doors), presents significant challenges. Traditional methods, such as robot-centric reinforcement learning (RL), imitation learning, and hybrid techniques, require massive training and often struggle to generalize across different objects and robot platforms. We propose a novel framework for learning object-centric manipulation policies in force space, decoupling the robot from the object. By directly applying forces to selected regions of the object, our method simplifies the action space, reduces unnecessary exploration, and decreases simulation overhead. This approach, trained in simulation on a small set of representative objects, captures object dynamics, such as joint configurations, allowing policies to generalize effectively to new, unseen objects. Decoupling these policies from robot-specific dynamics enables direct transfer to different robotic platforms (e.g., Kinova, Panda, UR5) without retraining. Our evaluations demonstrate that the method significantly outperforms baselines, achieving over an order of magnitude improvement in training efficiency compared to other state-of-the-art methods. Additionally, operating in force space enhances policy transferability across diverse robot platforms and object types. We further showcase the applicability of our method in a real-world robotic setting. For supplementary materials and videos, please visit: https://tufts-ai-robotics-group.github.io/FLEX/

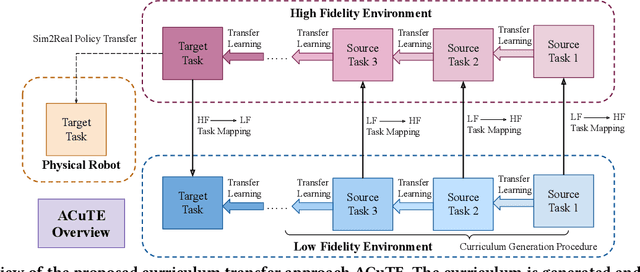

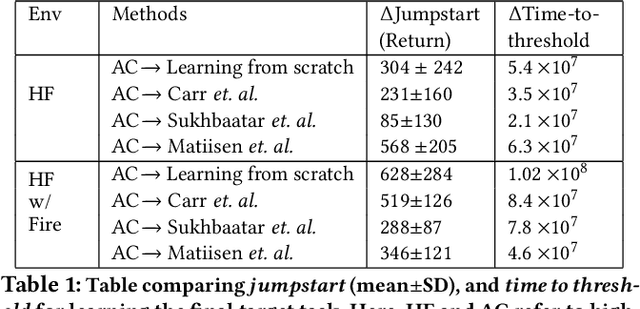

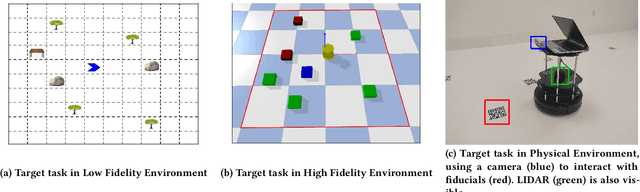

ACuTE: Automatic Curriculum Transfer from Simple to Complex Environments

Apr 11, 2022

Abstract: Despite recent advances in Reinforcement Learning (RL), many problems, especially real-world tasks, remain prohibitively expensive to learn. To address this issue, several lines of research have explored how tasks, or data samples themselves, can be sequenced into a curriculum to learn a problem that may otherwise be too difficult to learn from scratch. However, generating and optimizing a curriculum in a realistic scenario still requires extensive interactions with the environment. To address this challenge, we formulate the curriculum transfer problem, in which the schema of a curriculum optimized in a simpler, easy-to-solve environment (e.g., a grid world) is transferred to a complex, realistic scenario (e.g., a physics-based robotics simulation or the real world). We present "ACuTE", Automatic Curriculum Transfer from Simple to Complex Environments, a novel framework to solve this problem, and evaluate our proposed method by comparing it to other baseline approaches (e.g., domain adaptation) designed to speed up learning. We observe that our approach produces improved jumpstart and time-to-threshold performance even when adding task elements that further increase the difficulty of the realistic scenario. Finally, we demonstrate that our approach is independent of the learning algorithm used for curriculum generation, and is Sim2Real transferable to a real-world scenario using a physical robot.
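
For readers unfamiliar with the two metrics named in this abstract, the following is a minimal sketch of how jumpstart and time-to-threshold are commonly computed from learning curves. It is an illustration under stated assumptions, not the evaluation code used in the paper: the function names, the `window` parameter, and the example curves are all hypothetical.

```python
from typing import Optional, Sequence


def jumpstart(transfer: Sequence[float], baseline: Sequence[float],
              window: int = 1) -> float:
    """Difference in mean return over the first `window` episodes.

    A positive value means the transfer learner starts out better
    than the learner trained from scratch.
    """
    head_t = transfer[:window]
    head_b = baseline[:window]
    return sum(head_t) / len(head_t) - sum(head_b) / len(head_b)


def time_to_threshold(curve: Sequence[float],
                      threshold: float) -> Optional[int]:
    """Index of the first episode whose return reaches `threshold`.

    Returns None if the curve never reaches the threshold.
    """
    for episode, value in enumerate(curve):
        if value >= threshold:
            return episode
    return None


# Hypothetical per-episode returns, made up purely for illustration.
baseline_curve = [0.0, 0.1, 0.2, 0.4, 0.6, 0.8]
curriculum_curve = [0.3, 0.5, 0.8, 0.9, 0.9, 0.9]

js = jumpstart(curriculum_curve, baseline_curve)
ttt_baseline = time_to_threshold(baseline_curve, 0.8)
ttt_curriculum = time_to_threshold(curriculum_curve, 0.8)
```

In this made-up example, the curriculum learner starts with a higher return and reaches the 0.8 threshold in fewer episodes than the baseline, which is the kind of improvement the abstract refers to.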

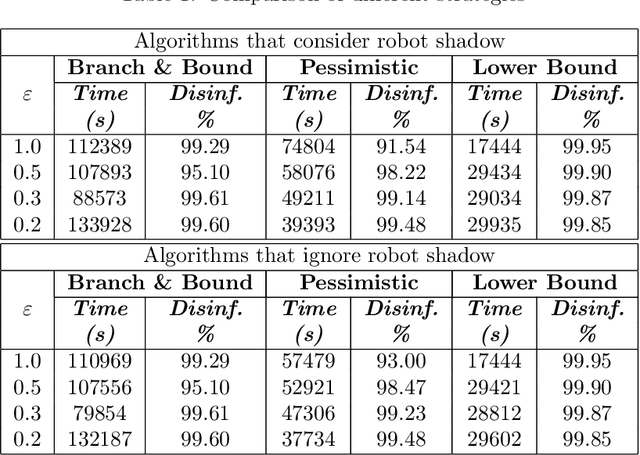

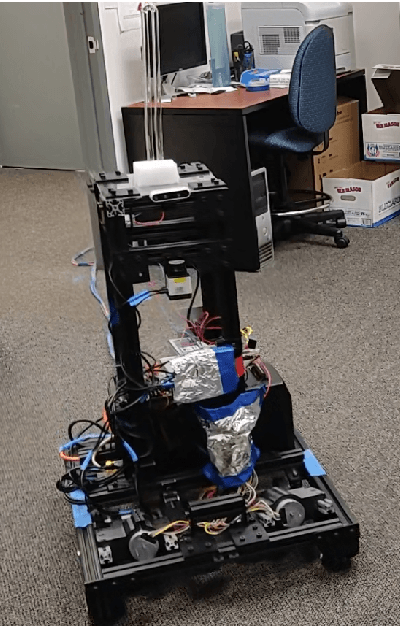

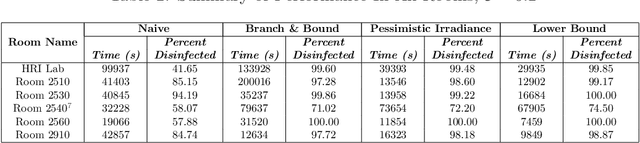

Robot Development and Path Planning for Indoor Ultraviolet Light Disinfection

Apr 12, 2021

Abstract: Regular irradiation of indoor environments with ultraviolet C (UVC) light has become a routine task for many indoor settings as a result of COVID-19, but current robotic systems attempting to automate it suffer from high costs and inefficient irradiation. In this paper, we propose a purpose-made, inexpensive robotic platform with off-the-shelf components and standard navigation software that, with a novel algorithm for finding optimal irradiation locations, addresses both shortcomings to offer affordable and efficient solutions for UVC irradiation. We demonstrate in simulations the efficacy of the algorithm and show a prototypical run of the autonomous integrated robotic system in an indoor environment. In our sample instances, our proposed algorithm reduces the time needed by roughly 30% and increases the coverage by 35% (when compared to the best possible placement of a static light).
