Christopher A. Strong

ZoPE: A Fast Optimizer for ReLU Networks with Low-Dimensional Inputs

Jun 09, 2021

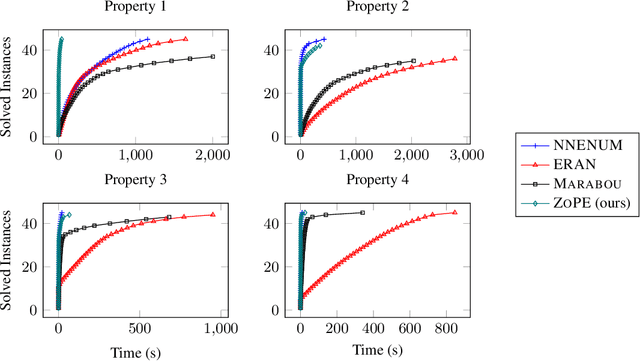

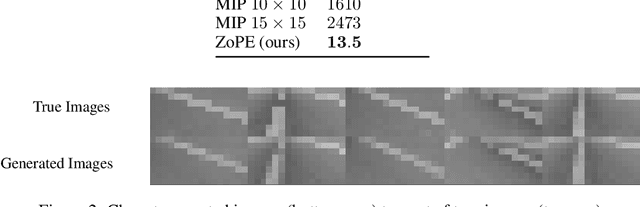

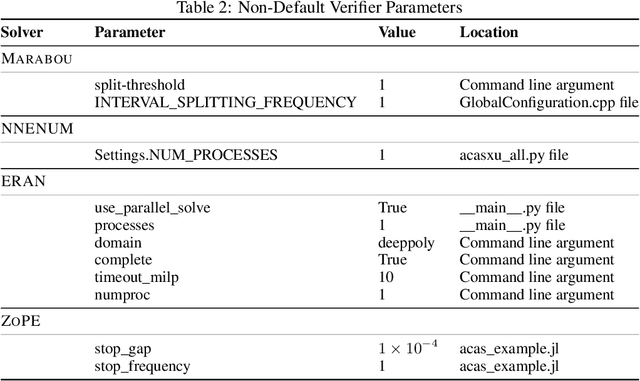

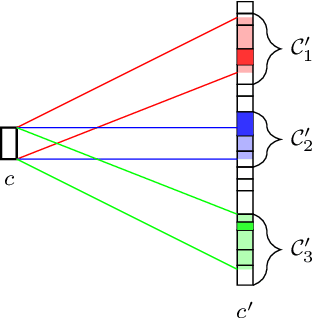

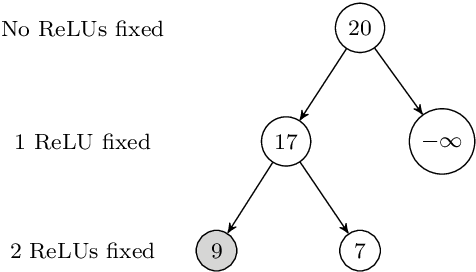

Abstract: Deep neural networks often lack the safety and robustness guarantees needed to be deployed in safety-critical systems. Formal verification techniques can be used to prove input-output safety properties of networks, but when properties are difficult to specify, we instead rely on the solutions to various optimization problems. In this work, we present an algorithm called ZoPE that solves optimization problems over the output of feedforward ReLU networks with low-dimensional inputs. The algorithm eagerly splits the input space, bounding the objective using zonotope propagation at each step, and improves computational efficiency compared to existing mixed-integer programming approaches. We demonstrate how to formulate and solve three types of optimization problems: (i) minimization of any convex function over the output space, (ii) minimization of a convex function over the output of two networks in series with an adversarial perturbation in the layer between them, and (iii) maximization of the difference in output between two networks. Using ZoPE, we observe a 25× speedup on property 1 of the ACAS Xu neural network verification benchmark and an 85× speedup on a set of linear optimization problems. We demonstrate the versatility of the optimizer in analyzing networks by projecting onto the range of a generative adversarial network and visualizing the differences between a compressed and an uncompressed network.
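The split-and-bound loop described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' ZoPE implementation, and several choices are assumptions made for brevity: the objective is linear (a · f(x)) rather than a general convex function, ReLUs are over-approximated with the standard DeepZ-style zonotope relaxation, the upper bound comes from evaluating each box midpoint, and the split always bisects the widest input dimension. All function names are hypothetical.

```python
import heapq
import numpy as np


def relu_net(layers, x):
    """Evaluate a feedforward ReLU network given as (W, b) pairs; no ReLU after the last layer."""
    for i, (W, b) in enumerate(layers):
        x = W @ x + b
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return x


def zonotope_relu(c, G):
    """Sound DeepZ-style ReLU relaxation of the zonotope {c + G e : e in [-1, 1]^m}."""
    c, G = np.array(c, dtype=float), np.array(G, dtype=float)
    r = np.abs(G).sum(axis=1)
    lo, hi = c - r, c + r
    new_cols = []
    for i in range(len(c)):
        if hi[i] <= 0:  # neuron provably inactive: output is exactly 0
            c[i] = 0.0
            G[i, :] = 0.0
        elif lo[i] < 0:  # neuron may switch: enclose ReLU between two parallel lines
            lam = hi[i] / (hi[i] - lo[i])
            mu = -lam * lo[i] / 2.0
            c[i] = lam * c[i] + mu
            G[i, :] *= lam
            col = np.zeros(len(c))
            col[i] = mu  # fresh generator covering the gap between the lines
            new_cols.append(col)
    if new_cols:
        G = np.hstack([G, np.column_stack(new_cols)])
    return c, G


def propagate(layers, lo, hi):
    """Push the input box [lo, hi] through the network as a zonotope (center, generators)."""
    c = (lo + hi) / 2.0
    G = np.diag((hi - lo) / 2.0)
    for i, (W, b) in enumerate(layers):
        c, G = W @ c + b, W @ G
        if i < len(layers) - 1:
            c, G = zonotope_relu(c, G)
    return c, G


def lower_bound(a, lo, hi, layers):
    """Lower bound of a @ f(x) over the box, read off the output zonotope."""
    c, G = propagate(layers, lo, hi)
    return a @ c - np.abs(a @ G).sum()


def minimize_linear(layers, a, lo, hi, tol=1e-3, max_splits=10_000):
    """Minimize a @ f(x) over the box [lo, hi] by eager input splitting (branch and bound)."""
    best_x = (lo + hi) / 2.0
    best_val = a @ relu_net(layers, best_x)
    heap = [(lower_bound(a, lo, hi, layers), 0, lo, hi)]
    tie = 1  # tie-breaker so heapq never has to compare numpy arrays
    for _ in range(max_splits):
        if not heap:
            break
        bound, _, blo, bhi = heapq.heappop(heap)
        if best_val - bound <= tol:  # smallest remaining lower bound is within tol
            break
        d = np.argmax(bhi - blo)  # bisect the widest input dimension
        mid = (blo[d] + bhi[d]) / 2.0
        left_hi, right_lo = bhi.copy(), blo.copy()
        left_hi[d], right_lo[d] = mid, mid
        for clo, chi in ((blo, left_hi), (right_lo, bhi)):
            x = (clo + chi) / 2.0
            val = a @ relu_net(layers, x)
            if val < best_val:
                best_val, best_x = val, x
            child_bound = lower_bound(a, clo, chi, layers)
            if child_bound < best_val - tol:  # otherwise this piece cannot improve enough
                heapq.heappush(heap, (child_bound, tie, clo, chi))
                tie += 1
    return best_val, best_x
```

For example, for a two-layer network computing f(x) = |x| on [-1, 2], the zonotope bound over the whole box is -1/3, and repeated splitting drives the gap between the best sampled value and the smallest remaining bound below `tol`. The priority queue always refines the box with the weakest bound first, which is one simple way to realize "eager" splitting.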

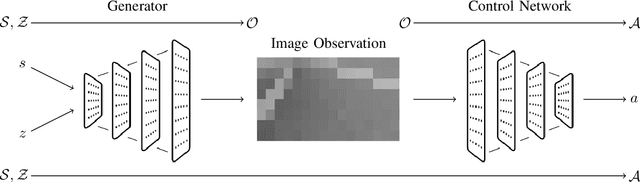

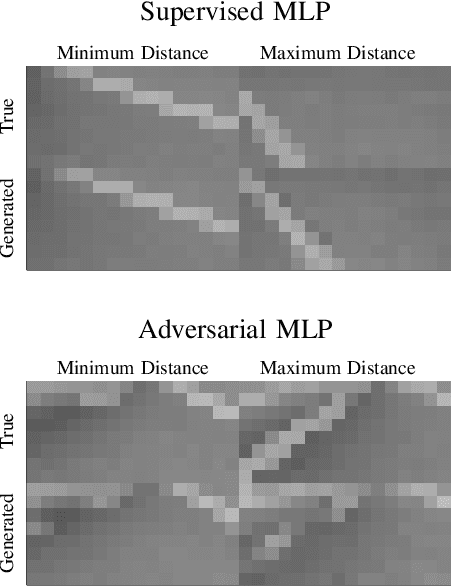

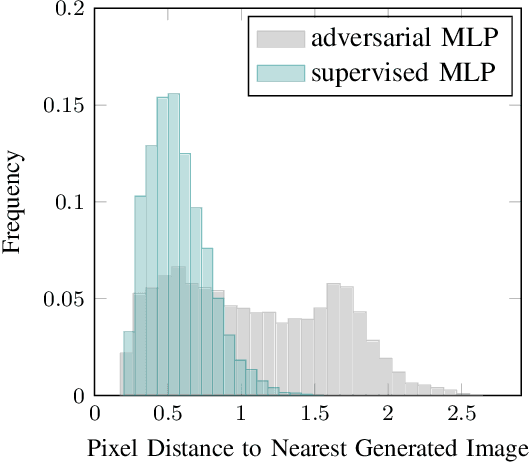

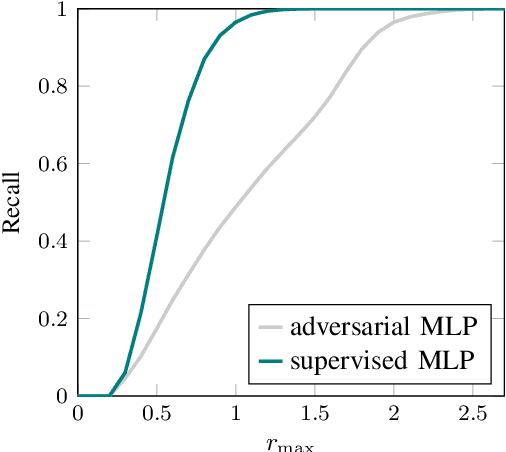

Verification of Image-based Neural Network Controllers Using Generative Models

May 14, 2021

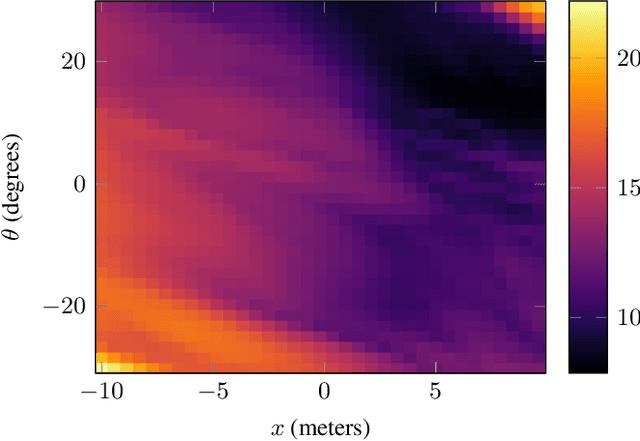

Abstract: Neural networks are often used to process information from image-based sensors to produce control actions. While they are effective for this task, the complex nature of neural networks makes their output difficult to verify and predict, limiting their use in safety-critical systems. For this reason, recent work has focused on combining techniques in formal methods and reachability analysis to obtain guarantees on the closed-loop performance of neural network controllers. However, these techniques do not scale to the high-dimensional and complicated input space of image-based neural network controllers. In this work, we propose a method to address these challenges by training a generative adversarial network (GAN) to map states to plausible input images. By concatenating the generator network with the control network, we obtain a network with a low-dimensional input space. This insight allows us to use existing closed-loop verification tools to obtain formal guarantees on the performance of image-based controllers. We apply our approach to provide safety guarantees for an image-based neural network controller for an autonomous aircraft taxi problem. We guarantee that the controller will keep the aircraft on the runway and guide the aircraft towards the center of the runway. The guarantees we provide are with respect to the set of input images modeled by our generator network, so we provide a recall metric to evaluate how well the generator captures the space of plausible images.
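The concatenation step can be sketched in Python for the case where both networks are plain feedforward ReLU networks stored as lists of (W, b) pairs. Two assumptions are not taken from the abstract: the generator's output layer is purely affine (no tanh or sigmoid), and random weights stand in for a trained GAN and controller. Under those assumptions the generator's last affine layer and the controller's first affine layer fuse into one, so the result is again a single ReLU network whose input is the low-dimensional state. The helper names are hypothetical.

```python
import numpy as np


def relu_net(layers, x):
    """Evaluate a ReLU network given as (W, b) pairs; no ReLU after the last layer."""
    for i, (W, b) in enumerate(layers):
        x = W @ x + b
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return x


def concatenate(gen_layers, ctrl_layers):
    """Return one ReLU network computing ctrl(gen(z)).

    Assumes the generator's final layer is affine, so the two affine maps at the
    seam fuse: W_c (W_g h + b_g) + b_c = (W_c W_g) h + (W_c b_g + b_c)."""
    W_g, b_g = gen_layers[-1]
    W_c, b_c = ctrl_layers[0]
    fused = (W_c @ W_g, W_c @ b_g + b_c)
    return gen_layers[:-1] + [fused] + ctrl_layers[1:]


def random_net(sizes, rng):
    """Random weights standing in for trained networks (illustration only)."""
    return [(rng.standard_normal((m, n)), rng.standard_normal(m))
            for n, m in zip(sizes[:-1], sizes[1:])]


rng = np.random.default_rng(0)
gen = random_net([2, 16, 64], rng)   # 2-D state -> 64-pixel "image"
ctrl = random_net([64, 32, 1], rng)  # image -> control action
combined = concatenate(gen, ctrl)
print(combined[0][0].shape)  # (16, 2): the combined network takes the 2-D state as input
```

Because the combined object is an ordinary ReLU network over the state, any tool that accepts such networks (for instance a reachability analysis over a box of states) can be applied to it directly; the guarantee then covers only the images the generator can produce.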

Generating Probabilistic Safety Guarantees for Neural Network Controllers

Mar 01, 2021

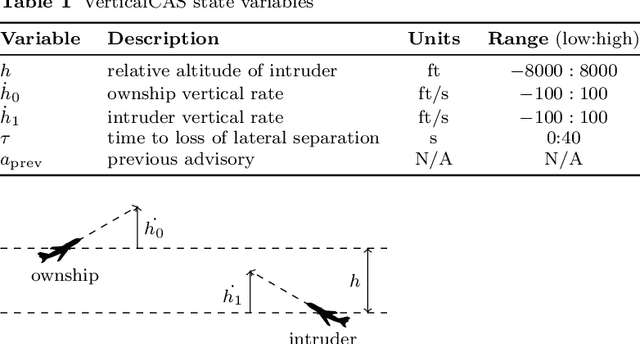

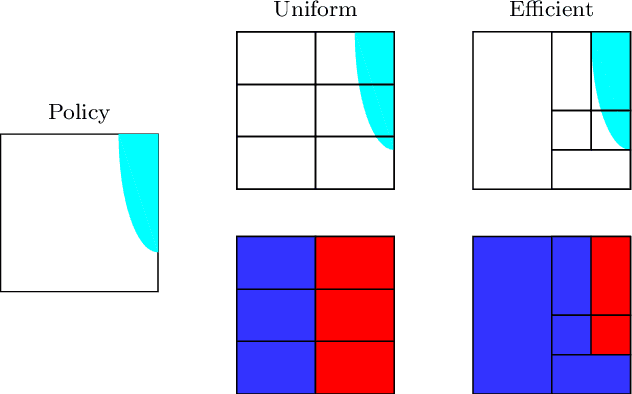

Abstract: Neural networks serve as effective controllers in a variety of complex settings due to their ability to represent expressive policies. The complex nature of neural networks, however, makes their output difficult to verify and predict, which limits their use in safety-critical applications. While simulations provide insight into the performance of neural network controllers, they are not enough to guarantee that the controller will perform safely in all scenarios. To address this problem, recent work has focused on formal methods to verify properties of neural network outputs. For neural network controllers, we can use a dynamics model to determine the output properties that must hold for the controller to operate safely. In this work, we develop a method to use the results from neural network verification tools to provide probabilistic safety guarantees on a neural network controller. We develop an adaptive verification approach to efficiently generate an overapproximation of the neural network policy. Next, we modify the traditional formulation of Markov decision process (MDP) model checking to provide guarantees on the overapproximated policy given a stochastic dynamics model. Finally, we incorporate techniques in state abstraction to reduce overapproximation error during the model checking process. We show that our method is able to generate meaningful probabilistic safety guarantees for aircraft collision avoidance neural networks that are loosely inspired by Airborne Collision Avoidance System X (ACAS X), a family of collision avoidance systems that formulates the problem as a partially observable Markov decision process (POMDP).
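One way to picture model checking over an overapproximated policy: if verification only tells us the set of actions the network might output in each state, a sound upper bound on the probability of failure takes the worst action in that set at every step. The Python sketch below is an illustration under stated assumptions, not the paper's formulation: it assumes a finite MDP with a known transition tensor, absorbing failure states, and a finite horizon, and it omits state abstraction. The function name and the three-state toy problem are hypothetical.

```python
import numpy as np


def max_failure_probability(T, allowed, fail, horizon):
    """Upper bound on P(reach a failure state within `horizon` steps) per start state.

    T:       array (S, A, S) of transition probabilities T[s, a, s'].
    allowed: allowed[s] is a non-empty list of actions the overapproximated
             policy may output in state s.
    fail:    boolean array (S,) marking failure states (treated as absorbing)."""
    P = fail.astype(float)
    for _ in range(horizon):
        Q = T @ P  # Q[s, a] = sum over s' of T[s, a, s'] * P[s']
        P_new = np.array([Q[s, allowed[s]].max() for s in range(len(P))])
        P = np.where(fail, 1.0, P_new)  # failure states stay failed
    return P


# Hypothetical toy MDP: state 0 = failure, state 1 = decision point, state 2 = safe goal.
T = np.zeros((3, 2, 3))
T[0, :, 0] = 1.0           # failure is absorbing
T[2, :, 2] = 1.0           # goal is absorbing
T[1, 0] = [0.9, 0.1, 0.0]  # action 0 from state 1: usually fails
T[1, 1] = [0.1, 0.0, 0.9]  # action 1 from state 1: usually reaches the goal
fail = np.array([True, False, False])

exact = max_failure_probability(T, [[0], [1], [0]], fail, horizon=2)
loose = max_failure_probability(T, [[0], [0, 1], [0]], fail, horizon=2)
```

In this toy problem the bound from state 1 is 0.1 when the overapproximation pins down action 1, and rises to about 0.99 when it admits both actions, which shows why reducing overapproximation error matters for obtaining meaningful guarantees.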

Global Optimization of Objective Functions Represented by ReLU Networks

Oct 08, 2020

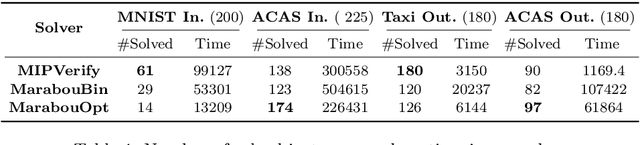

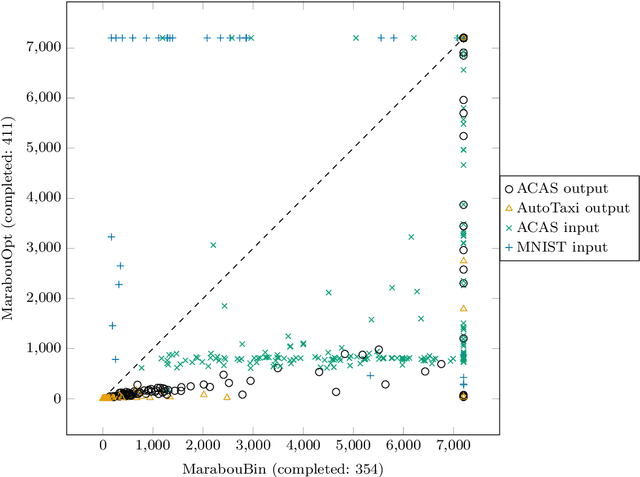

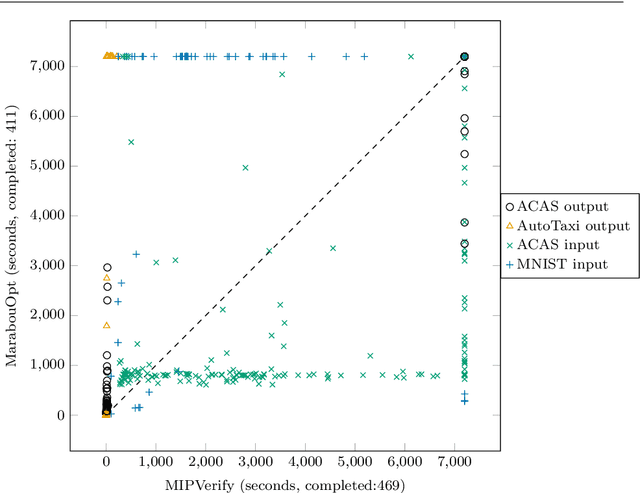

Abstract: Neural networks (NNs) learn complex non-convex functions, making them desirable solutions in many contexts. Applying NNs to safety-critical tasks demands formal guarantees about their behavior. Recently, a myriad of verification solutions for NNs have emerged using reachability, optimization, and search-based techniques. Particularly interesting are adversarial examples, which reveal ways the network can fail. They are widely generated using incomplete methods, such as local optimization, which cannot guarantee optimality. We propose strategies to extend existing verifiers to provide provably optimal adversarial examples. Naive approaches combine bisection search with an off-the-shelf verifier, resulting in many expensive calls to the verifier. Instead, our proposed approach yields tightly integrated optimizers, achieving better runtime performance. We extend Marabou, an SMT-based verifier, and compare it with the bisection-based approach and MIPVerify, an optimization-based verifier.
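The naive baseline mentioned in this abstract, bisection search wrapped around an off-the-shelf verifier, can be sketched in Python. Here `verify` is a hypothetical callable standing in for a real verifier query (it is not Marabou's or MIPVerify's API): it is assumed to return True exactly when the verifier proves that the objective is at least t over the whole input set. The sketch also counts queries, since each one is a full verification call.

```python
def bisection_minimize(verify, lo, hi, tol):
    """Bracket the optimum of a minimization problem to within tol using a decision oracle.

    verify(t) -> True iff the objective is provably >= t on the entire input set.
    [lo, hi] must initially bracket the optimum.
    Returns (lower, upper, number_of_verifier_calls)."""
    calls = 0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        calls += 1
        if verify(mid):
            lo = mid  # property holds: the optimum is at least mid
        else:
            hi = mid  # a counterexample exists: the optimum is below mid
    return lo, hi, calls


# Stand-in oracle for illustration only: pretend the true optimum is 0.37.
lower, upper, calls = bisection_minimize(lambda t: 0.37 >= t, -10.0, 10.0, 1e-3)
print(calls)  # 15
```

With an initial bracket of width 20 and `tol = 1e-3`, the loop needs 15 verifier calls, one per halving; the number of calls grows with the logarithm of the bracket width divided by the tolerance, and every call repeats the verifier's search from scratch.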