Anthony L. Corso

SAVME: Efficient Safety Validation for Autonomous Systems Using Meta-Learning

Sep 30, 2023

Abstract: Discovering potential failures of an autonomous system is important prior to deployment. Falsification-based methods are often used to assess the safety of such systems, but the cost of running many accurate simulations can be high. The validation can be accelerated by identifying critical failure scenarios for the system under test and by reducing the simulation runtime. We propose a Bayesian approach that integrates meta-learning strategies with a multi-armed bandit framework. Our method involves learning distributions over scenario parameters that are prone to triggering failures in the system under test, as well as a distribution over fidelity settings that enable fast and accurate simulations. In the spirit of meta-learning, we also assess whether the learned fidelity settings distribution facilitates faster learning of the scenario parameter distributions for new scenarios. We showcase our methodology using a cutting-edge 3D driving simulator, incorporating 16 fidelity settings for an autonomous vehicle stack that includes camera and lidar sensors. We evaluate various scenarios based on an autonomous vehicle pre-crash typology. As a result, our approach achieves a significant speedup, up to 18 times faster compared to traditional methods that solely rely on a high-fidelity simulator.
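The bandit idea in this abstract can be illustrated with a minimal sketch. It is not the SAVME algorithm: it assumes a Beta-Bernoulli Thompson sampler over a handful of discrete scenario settings, and it omits the fidelity-setting distribution and the meta-learning step entirely. The arm names, the `toy_simulator` function, and its failure rates are all hypothetical.

```python
import random


def thompson_failure_search(arms, simulate, n_rounds, seed=0):
    """Beta-Bernoulli Thompson sampling over discrete scenario settings.

    Each arm keeps a Beta posterior over its (unknown) failure probability.
    Arms that fail more often are sampled more often over time.
    """
    rng = random.Random(seed)
    # Beta(1, 1) prior per arm, stored as [alpha, beta].
    posterior = {arm: [1.0, 1.0] for arm in arms}
    for _ in range(n_rounds):
        # Draw one plausible failure rate per arm and run the most promising arm.
        sampled = {arm: rng.betavariate(a, b) for arm, (a, b) in posterior.items()}
        chosen = max(sampled, key=sampled.get)
        if simulate(chosen, rng):
            posterior[chosen][0] += 1.0  # observed a failure
        else:
            posterior[chosen][1] += 1.0  # observed a safe run
    return posterior


def toy_simulator(arm, rng):
    """Hypothetical stand-in for an expensive simulation; True means failure."""
    true_failure_rate = {"clear": 0.02, "rain": 0.10, "fog": 0.60}
    return rng.random() < true_failure_rate[arm]


posterior = thompson_failure_search(["clear", "rain", "fog"], toy_simulator, n_rounds=500)
# Number of simulations spent on each arm (posterior counts minus the prior).
pulls = {arm: a + b - 2 for arm, (a, b) in posterior.items()}
```

In this toy setting most of the simulation budget ends up on the failure-prone arm, which is the sense in which a learned distribution over scenario parameters can speed up falsification.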

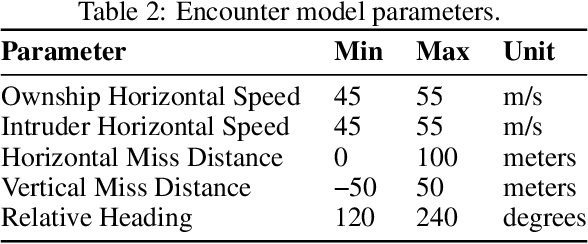

Efficient Determination of Safety Requirements for Perception Systems

Jul 03, 2023

Abstract: Perception systems operate as a subcomponent of the general autonomy stack, and perception system designers often need to optimize performance characteristics while maintaining safety with respect to the overall closed-loop system. For this reason, it is useful to distill high-level safety requirements into component-level requirements on the perception system. In this work, we focus on efficiently determining sets of safe perception system performance characteristics given a black-box simulator of the fully-integrated, closed-loop system. We combine the advantages of common black-box estimation techniques such as Gaussian processes and threshold bandits to develop a new estimation method, which we call smoothing bandits. We demonstrate our method on a vision-based aircraft collision avoidance problem and show improvements in terms of both accuracy and efficiency over the Gaussian process and threshold bandit baselines.

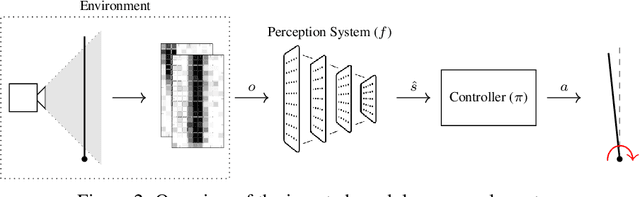

AVOIDDS: Aircraft Vision-based Intruder Detection Dataset and Simulator

Jun 19, 2023

Abstract: Designing robust machine learning systems remains an open problem, and there is a need for benchmark problems that cover both environmental changes and evaluation on a downstream task. In this work, we introduce AVOIDDS, a realistic object detection benchmark for the vision-based aircraft detect-and-avoid problem. We provide a labeled dataset consisting of 72,000 photorealistic images of intruder aircraft with various lighting conditions, weather conditions, relative geometries, and geographic locations. We also provide an interface that evaluates trained models on slices of this dataset to identify changes in performance with respect to changing environmental conditions. Finally, we implement a fully-integrated, closed-loop simulator of the vision-based detect-and-avoid problem to evaluate trained models with respect to the downstream collision avoidance task. This benchmark will enable further research in the design of robust machine learning systems for use in safety-critical applications. The AVOIDDS dataset and code are publicly available at https://purl.stanford.edu/hj293cv5980 and https://github.com/sisl/VisionBasedAircraftDAA, respectively.

Experience Filter: Using Past Experiences on Unseen Tasks or Environments

May 29, 2023

Abstract: One of the bottlenecks of training autonomous vehicle (AV) agents is the variability of training environments. Since learning optimal policies for unseen environments is often very costly and requires substantial data collection, it becomes computationally intractable to train the agent on every possible environment or task the AV may encounter. This paper introduces a zero-shot filtering approach to interpolate learned policies of past experiences to generalize to unseen ones. We use an experience kernel to correlate environments. These correlations are then exploited to produce policies for new tasks or environments from learned policies. We demonstrate our methods on an autonomous vehicle driving through T-intersections with different characteristics, where its behavior is modeled as a partially observable Markov decision process (POMDP). We first construct compact representations of learned policies for POMDPs with unknown transition functions given a dataset of sequential actions and observations. Then, we filter parameterized policies of previously visited environments to generate policies for new, unseen environments. We demonstrate our approaches on both an actual AV and a high-fidelity simulator. Results indicate that our experience filter offers a fast, low-effort, and near-optimal solution to create policies for tasks or environments never seen before. Furthermore, the generated new policies outperform the policy learned using the entire data collected from past environments, suggesting that the correlation among different environments can be exploited and irrelevant ones can be filtered out.
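A rough sketch of what kernel-based interpolation of policy parameters could look like is given below. The abstract does not specify the form of the experience kernel or of the filter, so everything here is an assumption: a squared-exponential kernel over hypothetical environment feature vectors, and a normalized kernel-weighted average of policy parameter vectors.

```python
import math


def rbf_kernel(env_a, env_b, length_scale=1.0):
    """Squared-exponential similarity between two environment feature vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(env_a, env_b))
    return math.exp(-squared_distance / (2.0 * length_scale ** 2))


def interpolate_policy(new_env, past_envs, past_params, length_scale=1.0):
    """Kernel-weighted average of the parameter vectors of past policies.

    Past environments that resemble `new_env` get larger weights, so
    dissimilar experiences contribute little to the resulting policy.
    """
    weights = [rbf_kernel(new_env, env, length_scale) for env in past_envs]
    total = sum(weights)
    dim = len(past_params[0])
    return [
        sum(w * params[i] for w, params in zip(weights, past_params)) / total
        for i in range(dim)
    ]


# Two previously visited environments described by one hypothetical feature,
# each with a two-parameter policy.
past_envs = [[0.0], [2.0]]
past_params = [[1.0, 0.0], [3.0, 4.0]]

# A new environment halfway between the two gets the average of both policies.
new_params = interpolate_policy([1.0], past_envs, past_params)
```

Shrinking `length_scale` makes the weights more selective, which mimics filtering out environments that are irrelevant to the new one.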

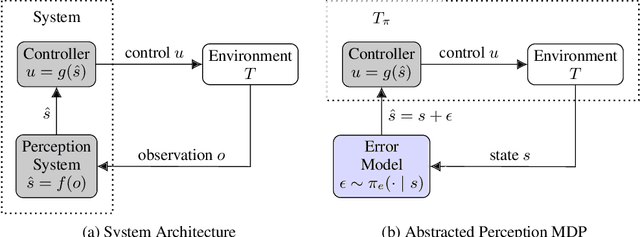

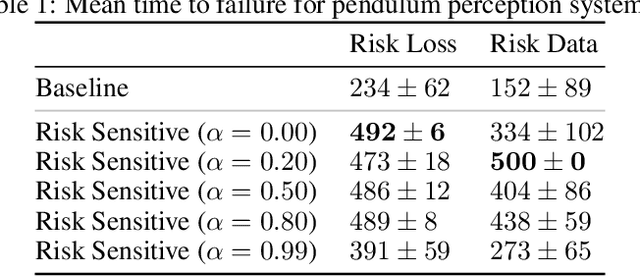

Risk-Driven Design of Perception Systems

May 21, 2022

Abstract: Modern autonomous systems rely on perception modules to process complex sensor measurements into state estimates. These estimates are then passed to a controller, which uses them to make safety-critical decisions. It is therefore important that we design perception systems to minimize errors that reduce the overall safety of the system. We develop a risk-driven approach to designing perception systems that accounts for the effect of perceptual errors on the performance of the fully-integrated, closed-loop system. We formulate a risk function to quantify the effect of a given perceptual error on overall safety, and show how we can use it to design safer perception systems by including a risk-dependent term in the loss function and generating training data in risk-sensitive regions. We evaluate our techniques on a realistic vision-based aircraft detect-and-avoid application and show that risk-driven design reduces collision risk by 37% over a baseline system.
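One simple way to picture a risk-dependent term in a loss function is a risk-weighted squared error, sketched below. The abstract does not give the actual form of the loss, so the multiplicative weighting, the `risk_weight` parameter, and the per-sample `risks` input are assumptions made for illustration.

```python
def risk_weighted_mse(predictions, targets, risks, risk_weight=1.0):
    """Mean squared error in which each sample is scaled by (1 + risk_weight * risk).

    `risks` holds one non-negative score per sample describing how much a
    perception error at that sample would hurt closed-loop safety.
    """
    total = 0.0
    for pred, target, risk in zip(predictions, targets, risks):
        total += (1.0 + risk_weight * risk) * (pred - target) ** 2
    return total / len(predictions)


# The second sample has the larger error and sits in a risky region,
# so it dominates the weighted loss.
plain = risk_weighted_mse([1.0, 2.0], [0.0, 0.0], [0.0, 1.0], risk_weight=0.0)
weighted = risk_weighted_mse([1.0, 2.0], [0.0, 0.0], [0.0, 1.0], risk_weight=1.0)
```

Setting `risk_weight` to zero recovers the ordinary mean squared error, which makes it easy to compare a risk-driven model against a baseline trained on the same data.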

ZoPE: A Fast Optimizer for ReLU Networks with Low-Dimensional Inputs

Jun 09, 2021

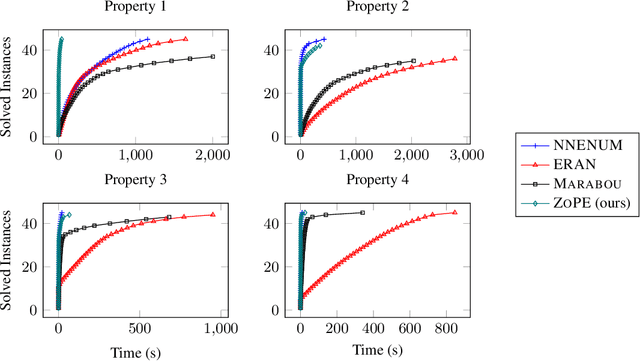

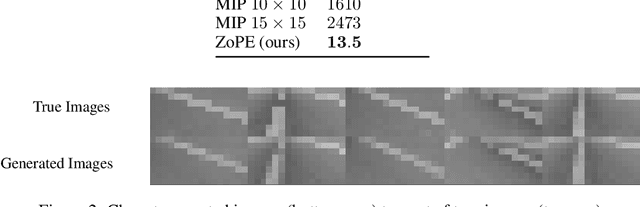

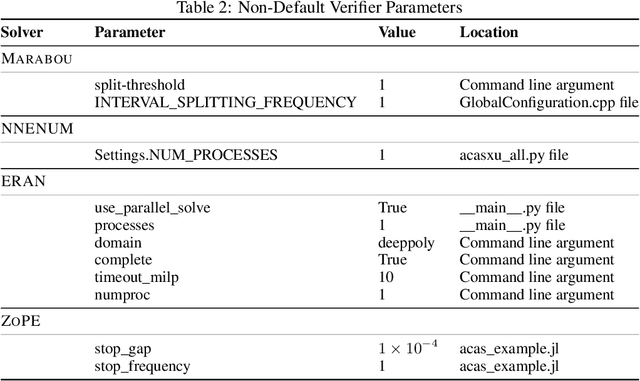

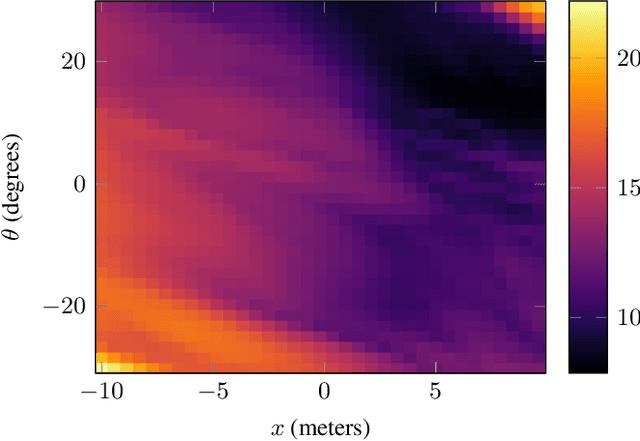

Abstract: Deep neural networks often lack the safety and robustness guarantees needed to be deployed in safety-critical systems. Formal verification techniques can be used to prove input-output safety properties of networks, but when properties are difficult to specify, we rely on the solution to various optimization problems. In this work, we present an algorithm called ZoPE that solves optimization problems over the output of feedforward ReLU networks with low-dimensional inputs. The algorithm eagerly splits the input space, bounding the objective using zonotope propagation at each step, and improves computational efficiency compared to existing mixed integer programming approaches. We demonstrate how to formulate and solve three types of optimization problems: (i) minimization of any convex function over the output space, (ii) minimization of a convex function over the output of two networks in series with an adversarial perturbation in the layer between them, and (iii) maximization of the difference in output between two networks. Using ZoPE, we observe a $25\times$ speedup on property 1 of the ACAS Xu neural network verification benchmark and an $85\times$ speedup on a set of linear optimization problems. We demonstrate the versatility of the optimizer in analyzing networks by projecting onto the range of a generative adversarial network and visualizing the differences between a compressed and uncompressed network.
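The split-and-bound structure described in this abstract can be sketched with a small branch-and-bound loop. This is not ZoPE: it swaps zonotope propagation for plain interval arithmetic (a looser bound), only minimizes a scalar network output, and uses a hypothetical two-neuron network that computes |x - 0.3|. All function names are illustrative.

```python
import heapq


def forward(layers, x):
    """Evaluate a feedforward ReLU network given as [(weights, bias), ...]."""
    for k, (weights, bias) in enumerate(layers):
        x = [b + sum(w * xj for w, xj in zip(row, x)) for row, b in zip(weights, bias)]
        if k < len(layers) - 1:  # no ReLU after the final layer
            x = [max(0.0, v) for v in x]
    return x


def output_lower_bound(layers, lo, hi):
    """Interval-arithmetic lower bound on the scalar output over the box [lo, hi]."""
    for k, (weights, bias) in enumerate(layers):
        new_lo, new_hi = [], []
        for row, b in zip(weights, bias):
            new_lo.append(b + sum(w * (lo[j] if w >= 0 else hi[j]) for j, w in enumerate(row)))
            new_hi.append(b + sum(w * (hi[j] if w >= 0 else lo[j]) for j, w in enumerate(row)))
        lo, hi = new_lo, new_hi
        if k < len(layers) - 1:
            lo = [max(0.0, v) for v in lo]
            hi = [max(0.0, v) for v in hi]
    return lo[0]


def minimize_output(layers, lo, hi, tol=1e-3):
    """Best-first branch and bound; the result is within `tol` of the true minimum."""
    center = [(l + h) / 2 for l, h in zip(lo, hi)]
    best_x, best_val = center, forward(layers, center)[0]
    heap = [(output_lower_bound(layers, lo, hi), lo, hi)]
    while heap:
        bound, lo, hi = heapq.heappop(heap)
        if bound >= best_val - tol:
            break  # no remaining box can improve the incumbent by more than tol
        d = max(range(len(lo)), key=lambda j: hi[j] - lo[j])  # split the widest dimension
        mid = (lo[d] + hi[d]) / 2
        for d_lo, d_hi in ((lo[d], mid), (mid, hi[d])):
            c_lo = lo[:d] + [d_lo] + lo[d + 1:]
            c_hi = hi[:d] + [d_hi] + hi[d + 1:]
            center = [(l + h) / 2 for l, h in zip(c_lo, c_hi)]
            val = forward(layers, center)[0]
            if val < best_val:
                best_x, best_val = center, val
            child_bound = output_lower_bound(layers, c_lo, c_hi)
            if child_bound < best_val - tol:
                heapq.heappush(heap, (child_bound, c_lo, c_hi))
    return best_x, best_val


# Hypothetical network computing |x - 0.3| = relu(x - 0.3) + relu(0.3 - x).
layers = [([[1.0], [-1.0]], [-0.3, 0.3]), ([[1.0, 1.0]], [0.0])]
best_x, best_val = minimize_output(layers, [-1.0], [1.0])
```

Input splitting of this kind is practical only when the input dimension is low, because the number of boxes can grow quickly with dimension; that matches the low-dimensional setting the abstract targets.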

Verification of Image-based Neural Network Controllers Using Generative Models

May 14, 2021

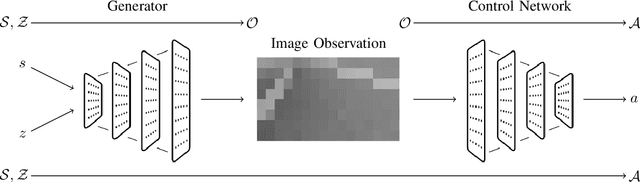

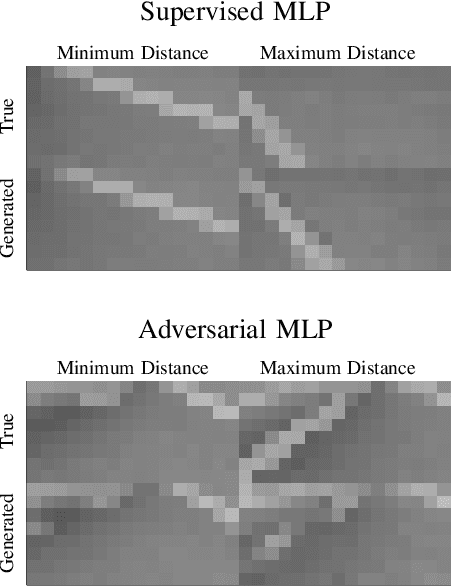

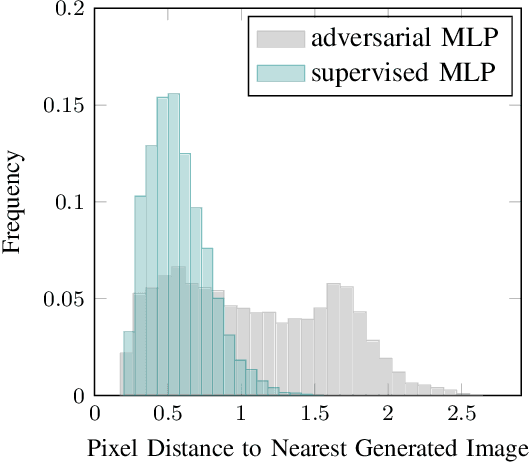

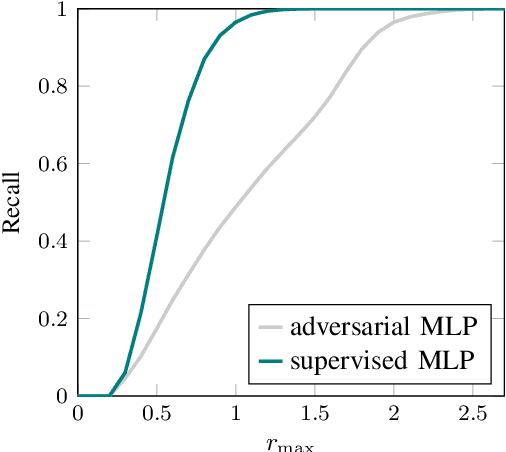

Abstract: Neural networks are often used to process information from image-based sensors to produce control actions. While they are effective for this task, the complex nature of neural networks makes their output difficult to verify and predict, limiting their use in safety-critical systems. For this reason, recent work has focused on combining techniques in formal methods and reachability analysis to obtain guarantees on the closed-loop performance of neural network controllers. However, these techniques do not scale to the high-dimensional and complicated input space of image-based neural network controllers. In this work, we propose a method to address these challenges by training a generative adversarial network (GAN) to map states to plausible input images. By concatenating the generator network with the control network, we obtain a network with a low-dimensional input space. This insight allows us to use existing closed-loop verification tools to obtain formal guarantees on the performance of image-based controllers. We apply our approach to provide safety guarantees for an image-based neural network controller for an autonomous aircraft taxi problem. We guarantee that the controller will keep the aircraft on the runway and guide the aircraft towards the center of the runway. The guarantees we provide are with respect to the set of input images modeled by our generator network, so we provide a recall metric to evaluate how well the generator captures the space of plausible images.
