Global Optimization of Objective Functions Represented by ReLU Networks

Paper and Code

Oct 08, 2020

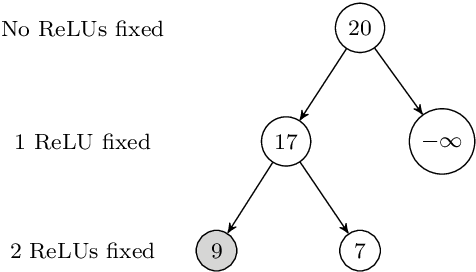

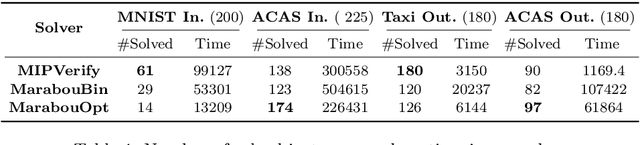

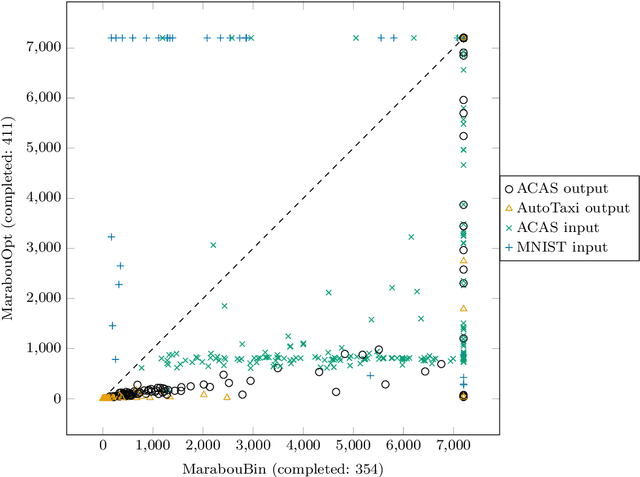

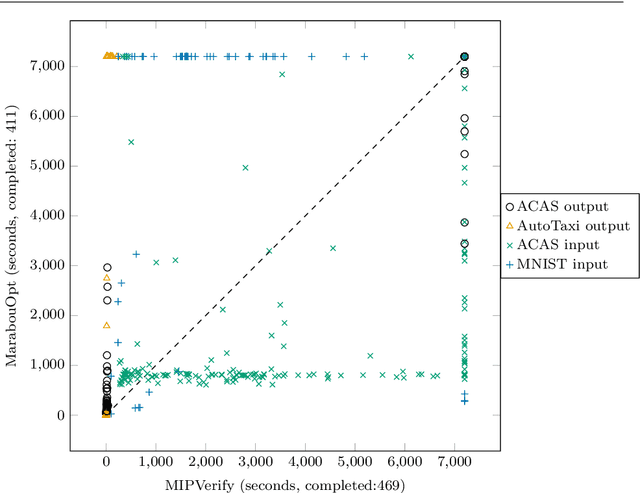

Neural networks (NNs) learn complex non-convex functions, making them desirable solutions in many contexts. Applying NNs to safety-critical tasks demands formal guarantees about their behavior. Recently, a myriad of verification solutions for NNs have emerged, using reachability, optimization, and search-based techniques. Particularly interesting are adversarial examples, which reveal ways the network can fail. They are widely generated using incomplete methods, such as local optimization, which cannot guarantee optimality. We propose strategies to extend existing verifiers to provide provably optimal adversarial examples. Naive approaches combine bisection search with an off-the-shelf verifier, resulting in many expensive calls to the verifier. Instead, our proposed approach yields tightly integrated optimizers, achieving better runtime performance. We extend Marabou, an SMT-based verifier, and compare it with the bisection-based approach and MIPVerify, an optimization-based verifier.
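The naive baseline mentioned in the abstract, bisection search wrapped around a verifier, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `toy_network` is a hypothetical one-dimensional ReLU network, and `toy_verifier` is a stand-in oracle that answers "is there an input in the interval whose output reaches this threshold?" by checking the endpoints and kinks of the piecewise-linear function. It is not the Marabou or MIPVerify API, and it is not the paper's integrated optimizer.

```python
from typing import Callable, Optional, Tuple


def relu(z: float) -> float:
    return max(0.0, z)


def toy_network(x: float) -> float:
    # Hypothetical 1-D ReLU network with two hidden units (kinks at x=1 and x=2).
    return relu(x - 1.0) - 2.0 * relu(x - 2.0)


def toy_verifier(threshold: float, lo: float, hi: float) -> Optional[float]:
    """Stand-in for a real verifier query.

    Returns a witness x in [lo, hi] with toy_network(x) >= threshold,
    or None if no such input exists. A 1-D piecewise-linear function
    attains its maximum at an endpoint or a kink, so checking those
    candidates is exact for this toy network only.
    """
    candidates = [lo, hi] + [k for k in (1.0, 2.0) if lo < k < hi]
    best = max(candidates, key=toy_network)
    return best if toy_network(best) >= threshold else None


def bisection_maximize(
    verifier: Callable[[float], Optional[float]],
    lower: float,
    upper: float,
    tol: float = 1e-6,
) -> Tuple[float, Optional[float], int]:
    """Bisection over the objective value.

    `lower` must be a value known to be achievable and `upper` a known
    upper bound. Each iteration costs one verifier call.
    """
    witness = None
    calls = 0
    while upper - lower > tol:
        mid = 0.5 * (lower + upper)
        calls += 1
        x = verifier(mid)
        if x is not None:
            lower, witness = mid, x  # threshold reachable: raise the lower bound
        else:
            upper = mid  # threshold unreachable: lower the upper bound
    return lower, witness, calls


# Maximize the toy network over the input interval [0, 3].
value, witness, calls = bisection_maximize(
    lambda t: toy_verifier(t, 0.0, 3.0), lower=0.0, upper=4.0
)
print(value, witness, calls)
```

The number of verifier calls grows with the logarithm of the initial gap divided by the tolerance. With a real verifier each such call is expensive, which is the cost the abstract attributes to the bisection-based approach.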
