Christin Katharina Kreutz

Sim4IA-Bench: A User Simulation Benchmark Suite for Next Query and Utterance Prediction

Nov 12, 2025

Abstract:Validating user simulation is a difficult task due to the lack of established measures and benchmarks, which makes it challenging to assess whether a simulator accurately reflects real user behavior. As part of the Sim4IA Micro-Shared Task at the Sim4IA Workshop, SIGIR 2025, we present Sim4IA-Bench, a simulation benchmark suite for the prediction of the next queries and utterances, the first of its kind in the IR community. The dataset included in the suite comprises 160 real-world search sessions from the CORE search engine. For 70 of these sessions, up to 62 simulator runs are available, divided into Task A and Task B, in which different approaches predicted users' next search queries or utterances. Sim4IA-Bench provides a basis for evaluating and comparing user simulation approaches and for developing new measures of simulator validity. Although modest in size, the suite represents the first publicly available benchmark that links real search sessions with simulated next-query predictions. In addition to serving as a testbed for next query prediction, it also enables exploratory studies on query reformulation behavior, intent drift, and interaction-aware retrieval evaluation. We also introduce a new measure for evaluating next-query predictions in this task. By making the suite publicly available, we aim to promote reproducible research and stimulate further work on realistic and explainable user simulation for information access: https://github.com/irgroup/Sim4IA-Bench.

Second SIGIR Workshop on Simulations for Information Access (Sim4IA 2025)

May 16, 2025

Abstract:Simulations in information access (IA) have recently gained interest, as shown by various tutorials and workshops around that topic. Simulations can be key contributors to central IA research and evaluation questions, especially around interactive settings when real users are unavailable, or their participation is impossible due to ethical reasons. In addition, simulations in IA can help contribute to a better understanding of users, reduce complexity of evaluation experiments, and improve reproducibility. Building on recent developments in methods and toolkits, the second iteration of our Sim4IA workshop aims to again bring together researchers and practitioners to form an interactive and engaging forum for discussions on the future perspectives of the field. An additional aim is to plan an upcoming TREC/CLEF campaign.

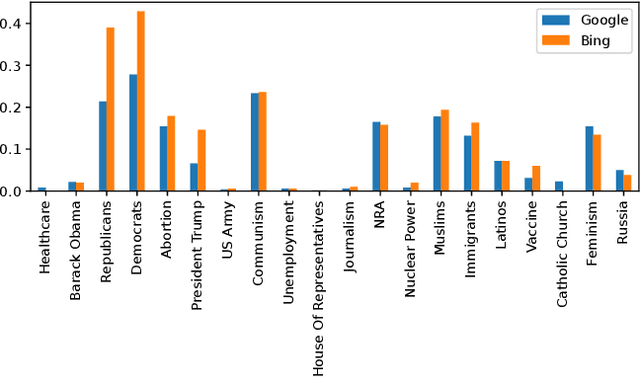

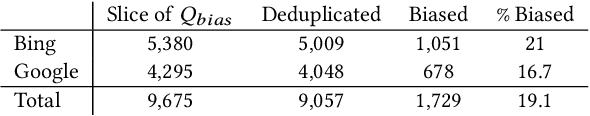

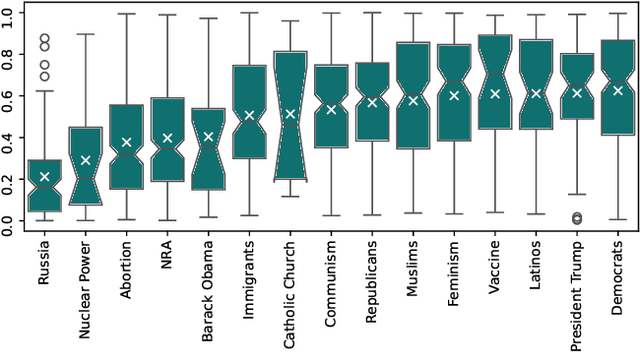

Investigating Bias in Political Search Query Suggestions by Relative Comparison with LLMs

Oct 31, 2024

Abstract:Search query suggestions affect users' interactions with search engines, which then influences the information they encounter. Thus, bias in search query suggestions can lead to exposure to biased search results and can impact opinion formation. This is especially critical in the political domain. Detecting and quantifying bias in web search engines is difficult due to its topic dependency, complexity, and subjectivity. The lack of context and phrasality of query suggestions emphasizes this problem. In a multi-step approach, we combine the benefits of large language models, pairwise comparison, and Elo-based scoring to identify and quantify bias in English search query suggestions. We apply our approach to the U.S. political news domain and compare bias in Google and Bing.

Report on the Workshop on Simulations for Information Access (Sim4IA 2024) at SIGIR 2024

Sep 26, 2024

Abstract:This paper is a report on the Workshop on Simulations for Information Access (Sim4IA) at SIGIR 2024. The workshop had two keynotes, a panel discussion, nine lightning talks, and two breakout sessions. Key takeaways were user simulation's importance in academia and industry, the possible bridging of online and offline evaluation, and the issues of organizing a companion shared task around user simulations for information access. We report on how we organized the workshop, provide a brief overview of what happened at the workshop, and summarize its main topics, findings, and future work.

Text Simplification of Scientific Texts for Non-Expert Readers

Jul 07, 2023

Abstract:Reading levels are highly individual and can depend on a text's language, a person's cognitive abilities, or knowledge on a topic. Text simplification is the task of rephrasing a text to better cater to the abilities of a specific target reader group. Simplification of scientific abstracts helps non-experts to access the core information by bypassing formulations that require domain or expert knowledge. This is especially relevant for, e.g., cancer patients reading about novel treatment options. The SimpleText lab hosts the simplification of scientific abstracts for non-experts (Task 3) to advance this field. We contribute three runs employing out-of-the-box summarization models (two based on T5, one based on PEGASUS) and one run using ChatGPT with complex phrase identification.

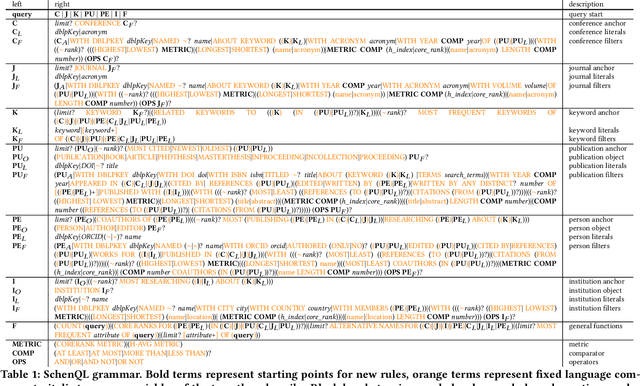

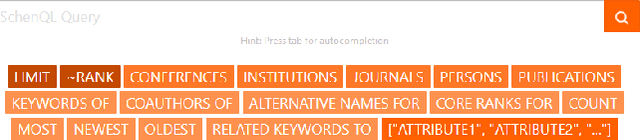

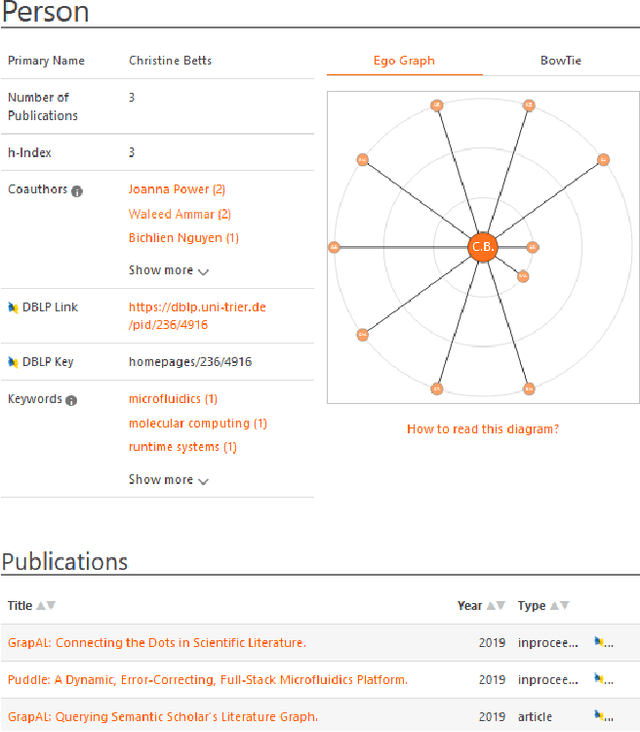

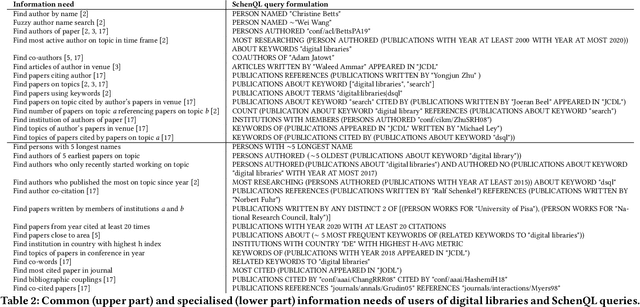

SchenQL: A query language for bibliographic data with aggregations and domain-specific functions

May 13, 2022

Abstract:Current search interfaces of digital libraries are not suitable to satisfy complex or convoluted information needs directly, when it comes to cases such as "Find authors who only recently started working on a topic". They might offer possibilities to obtain this information only by requiring vast user interaction. We present SchenQL, a web interface of a domain-specific query language on bibliographic metadata, which offers information search and exploration by query formulation and navigation in the system. Our system focuses on supporting aggregation of data and providing specialised domain dependent functions while being suitable for domain experts as well as casual users of digital libraries.
