Chris Lin

SurrogateSHAP: Training-Free Contributor Attribution for Text-to-Image (T2I) Models

Jan 29, 2026Abstract:As Text-to-Image (T2I) diffusion models are increasingly used in real-world creative workflows, a principled framework for valuing contributors who provide a collection of data is essential for fair compensation and sustainable data marketplaces. While the Shapley value offers a theoretically grounded approach to attribution, it faces a dual computational bottleneck: (i) the prohibitive cost of exhaustive model retraining for each sampled subset of players (i.e., data contributors) and (ii) the combinatorial number of subsets needed to estimate marginal contributions due to contributor interactions. To this end, we propose SurrogateSHAP, a retraining-free framework that approximates the expensive retraining game through inference from a pretrained model. To further improve efficiency, we employ a gradient-boosted tree to approximate the utility function and derive Shapley values analytically from the tree-based model. We evaluate SurrogateSHAP across three diverse attribution tasks: (i) image quality for DDPM-CFG on CIFAR-20, (ii) aesthetics for Stable Diffusion on Post-Impressionist artworks, and (iii) product diversity for FLUX.1 on Fashion-Product data. Across settings, SurrogateSHAP outperforms prior methods while substantially reducing computational overhead, consistently identifying influential contributors across multiple utility metrics. Finally, we demonstrate that SurrogateSHAP effectively localizes data sources responsible for spurious correlations in clinical images, providing a scalable path toward auditing safety-critical generative models.

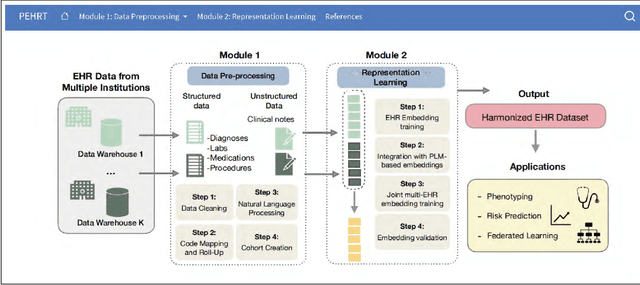

PEHRT: A Common Pipeline for Harmonizing Electronic Health Record data for Translational Research

Sep 10, 2025

Abstract:Integrative analysis of multi-institutional Electronic Health Record (EHR) data enhances the reliability and generalizability of translational research by leveraging larger, more diverse patient cohorts and incorporating multiple data modalities. However, harmonizing EHR data across institutions poses major challenges due to data heterogeneity, semantic differences, and privacy concerns. To address these challenges, we introduce $\textit{PEHRT}$, a standardized pipeline for efficient EHR data harmonization consisting of two core modules: (1) data pre-processing and (2) representation learning. PEHRT maps EHR data to standard coding systems and uses advanced machine learning to generate research-ready datasets without requiring individual-level data sharing. Our pipeline is also data model agnostic and designed for streamlined execution across institutions based on our extensive real-world experience. We provide a complete suite of open source software, accompanied by a user-friendly tutorial, and demonstrate the utility of PEHRT in a variety of tasks using data from diverse healthcare systems.

Ensembling Sparse Autoencoders

May 21, 2025Abstract:Sparse autoencoders (SAEs) are used to decompose neural network activations into human-interpretable features. Typically, features learned by a single SAE are used for downstream applications. However, it has recently been shown that SAEs trained with different initial weights can learn different features, demonstrating that a single SAE captures only a limited subset of features that can be extracted from the activation space. Motivated by this limitation, we propose to ensemble multiple SAEs through naive bagging and boosting. Specifically, SAEs trained with different weight initializations are ensembled in naive bagging, whereas SAEs sequentially trained to minimize the residual error are ensembled in boosting. We evaluate our ensemble approaches with three settings of language models and SAE architectures. Our empirical results demonstrate that ensembling SAEs can improve the reconstruction of language model activations, diversity of features, and SAE stability. Furthermore, ensembling SAEs performs better than applying a single SAE on downstream tasks such as concept detection and spurious correlation removal, showing improved practical utility.

On the Robustness of Removal-Based Feature Attributions

Jun 12, 2023

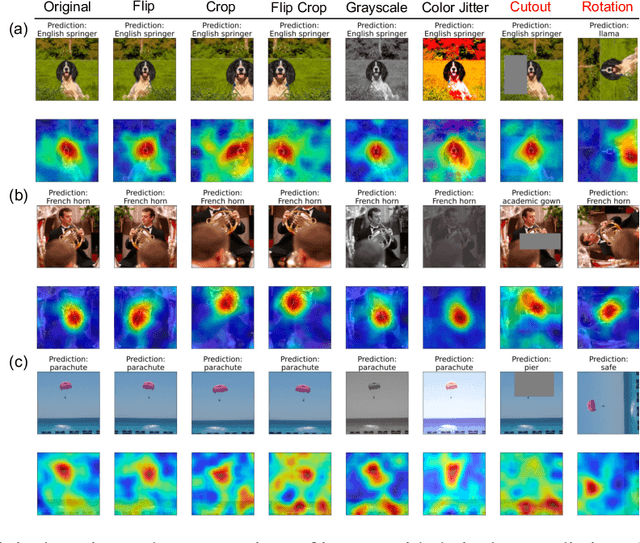

Abstract:To explain complex models based on their inputs, many feature attribution methods have been developed that assign importance scores to input features. However, some recent work challenges the robustness of feature attributions by showing that these methods are sensitive to input and model perturbations, while other work addresses this robustness issue by proposing robust attribution methods and model modifications. Nevertheless, previous work on attribution robustness has focused primarily on gradient-based feature attributions. In contrast, the robustness properties of removal-based attribution methods are not comprehensively well understood. To bridge this gap, we theoretically characterize the robustness of removal-based feature attributions. Specifically, we provide a unified analysis of such methods and prove upper bounds for the difference between intact and perturbed attributions, under settings of both input and model perturbations. Our empirical experiments on synthetic and real-world data validate our theoretical results and demonstrate their practical implications.

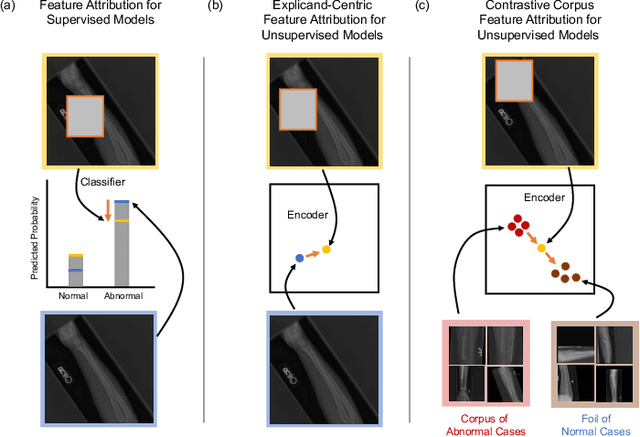

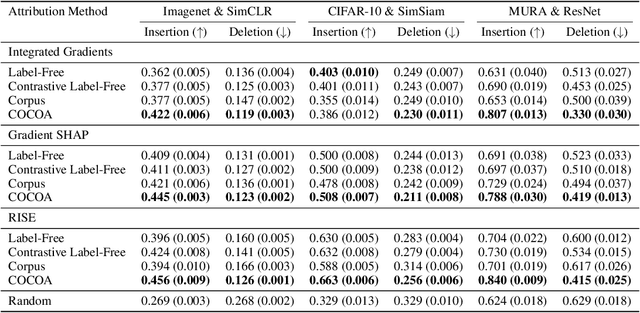

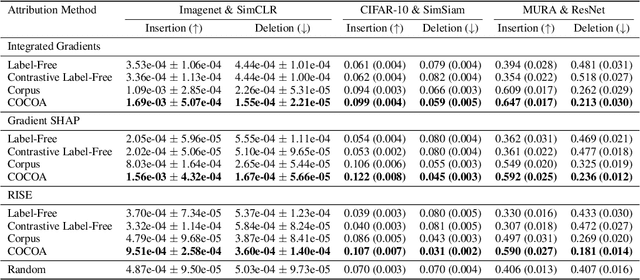

Contrastive Corpus Attribution for Explaining Representations

Sep 30, 2022

Abstract:Despite the widespread use of unsupervised models, very few methods are designed to explain them. Most explanation methods explain a scalar model output. However, unsupervised models output representation vectors, the elements of which are not good candidates to explain because they lack semantic meaning. To bridge this gap, recent works defined a scalar explanation output: a dot product-based similarity in the representation space to the sample being explained (i.e., an explicand). Although this enabled explanations of unsupervised models, the interpretation of this approach can still be opaque because similarity to the explicand's representation may not be meaningful to humans. To address this, we propose contrastive corpus similarity, a novel and semantically meaningful scalar explanation output based on a reference corpus and a contrasting foil set of samples. We demonstrate that contrastive corpus similarity is compatible with many post-hoc feature attribution methods to generate COntrastive COrpus Attributions (COCOA) and quantitatively verify that features important to the corpus are identified. We showcase the utility of COCOA in two ways: (i) we draw insights by explaining augmentations of the same image in a contrastive learning setting (SimCLR); and (ii) we perform zero-shot object localization by explaining the similarity of image representations to jointly learned text representations (CLIP).

Graph Neural Networks Including Sparse Interpretability

Jun 30, 2020

Abstract:Graph Neural Networks (GNNs) are versatile, powerful machine learning methods that enable graph structure and feature representation learning, and have applications across many domains. For applications critically requiring interpretation, attention-based GNNs have been leveraged. However, these approaches either rely on specific model architectures or lack a joint consideration of graph structure and node features in their interpretation. Here we present a model-agnostic framework for interpreting important graph structure and node features, Graph neural networks Including SparSe inTerpretability (GISST). With any GNN model, GISST combines an attention mechanism and sparsity regularization to yield an important subgraph and node feature subset related to any graph-based task. Through a single self-attention layer, a GISST model learns an importance probability for each node feature and edge in the input graph. By including these importance probabilities in the model loss function, the probabilities are optimized end-to-end and tied to the task-specific performance. Furthermore, GISST sparsifies these importance probabilities with entropy and L1 regularization to reduce noise in the input graph topology and node features. Our GISST models achieve superior node feature and edge explanation precision in synthetic datasets, as compared to alternative interpretation approaches. Moreover, our GISST models are able to identify important graph structure in real-world datasets. We demonstrate in theory that edge feature importance and multiple edge types can be considered by incorporating them into the GISST edge probability computation. By jointly accounting for topology, node features, and edge features, GISST inherently provides simple and relevant interpretations for any GNN models and tasks.

Predicting Inpatient Discharge Prioritization With Electronic Health Records

Dec 02, 2018

Abstract:Identifying patients who will be discharged within 24 hours can improve hospital resource management and quality of care. We studied this problem using eight years of Electronic Health Records (EHR) data from Stanford Hospital. We fit models to predict 24 hour discharge across the entire inpatient population. The best performing models achieved an area under the receiver-operator characteristic curve (AUROC) of 0.85 and an AUPRC of 0.53 on a held out test set. This model was also well calibrated. Finally, we analyzed the utility of this model in a decision theoretic framework to identify regions of ROC space in which using the model increases expected utility compared to the trivial always negative or always positive classifiers.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge