Chris Hankin

Certified Federated Adversarial Training

Dec 20, 2021

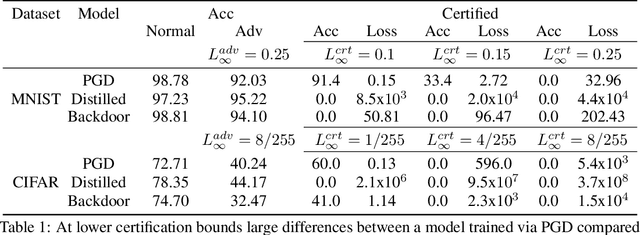

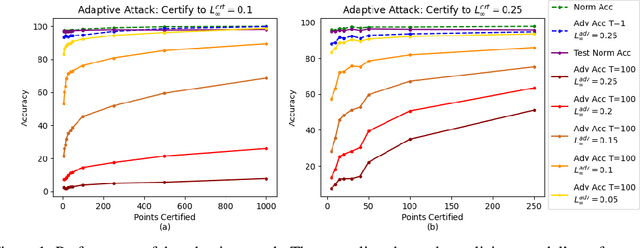

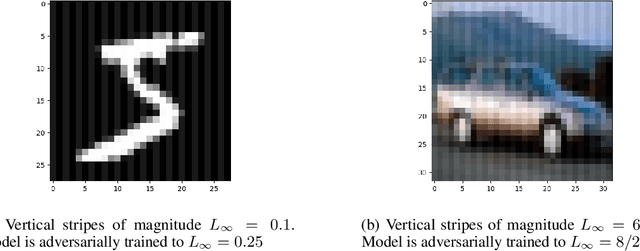

Abstract: In federated learning (FL), robust aggregation schemes have been developed to protect against malicious clients. Many robust aggregation schemes rely on a certain number of benign clients being present in a quorum of workers. This can be hard to guarantee when clients can join at will, or join based on factors such as idle system status and being connected to power and WiFi. We tackle the scenario of securing FL systems conducting adversarial training when a quorum of workers could be completely malicious. We model an attacker who poisons the model to insert a weakness into the adversarial training such that the model displays apparent adversarial robustness, while the attacker can exploit the inserted weakness to bypass the adversarial training and force the model to misclassify adversarial examples. We use abstract interpretation techniques to detect such stealthy attacks and block the corrupted model updates. We show that this defence can preserve adversarial robustness even against an adaptive attacker.

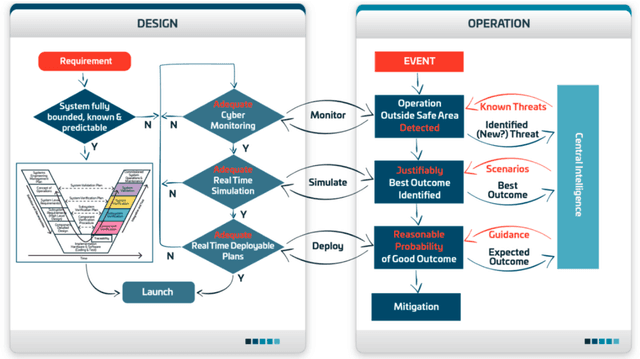

CyRes -- Avoiding Catastrophic Failure in Connected and Autonomous Vehicles (Extended Abstract)

Jul 03, 2020

Abstract: Existing approaches to cyber security and regulation in the automotive sector cannot achieve the quality of outcome necessary to ensure the safe mass deployment of advanced vehicle technologies and smart mobility systems. Without sustainable resilience, hard-fought public trust will evaporate, derailing emerging global initiatives to improve the efficiency, safety and environmental impact of future transport. This paper introduces an operational cyber resilience methodology, CyRes, that is suitable for standardisation. The CyRes methodology itself is capable of being tested in court or by publicly appointed regulators. It is designed so that operators understand what evidence should be produced by it and are able to measure the quality of that evidence. The evidence produced is capable of being tested in court or by publicly appointed regulators. Thus, the real-world system to which the CyRes methodology has been applied is capable of operating at all times and in all places with a legally and socially acceptable value of negative consequence.

Fault Tree Analysis: Identifying Maximum Probability Minimal Cut Sets with MaxSAT

May 05, 2020

Abstract: In this paper, we present a novel MaxSAT-based technique to compute Maximum Probability Minimal Cut Sets (MPMCSs) in fault trees. We model the MPMCS problem as a Weighted Partial MaxSAT problem and solve it using a parallel SAT-solving architecture. The results obtained with our open-source tool indicate that the approach is effective and efficient.
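To make the MPMCS objective concrete, the following is a minimal brute-force sketch, not the MaxSAT encoding or the tool described in the abstract. The fault tree, event names, and probabilities are hypothetical. It assumes independent basic events and a monotone tree of AND/OR gates, and it scores each cut set by the sum of negative log-probabilities, which is one common way to turn a product of probabilities into additive weights of the kind a weighted solver would minimise.

```python
from itertools import combinations
from math import log, prod

# Hypothetical fault tree: TOP = (A AND B) OR C
TREE = ("or", ("and", "A", "B"), "C")
# Hypothetical, independent basic-event failure probabilities
PROB = {"A": 0.2, "B": 0.5, "C": 0.05}


def fails(node, failed):
    """Return True if the (sub)tree fails when the events in `failed` occur."""
    if isinstance(node, str):
        return node in failed
    gate, *children = node
    results = [fails(child, failed) for child in children]
    return all(results) if gate == "and" else any(results)


def is_minimal_cut_set(tree, events):
    """A cut set triggers the top event; it is minimal if removing any
    single event stops it doing so (sufficient for monotone AND/OR trees)."""
    if not fails(tree, events):
        return False
    return all(not fails(tree, events - {e}) for e in events)


def mpmcs(tree, prob):
    """Exhaustively search for the minimal cut set with maximum probability."""
    best, best_cost = None, float("inf")
    names = sorted(prob)
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            events = frozenset(combo)
            if not is_minimal_cut_set(tree, events):
                continue
            # Minimising the summed -log(p) maximises the product of p
            cost = sum(-log(prob[e]) for e in events)
            if cost < best_cost:
                best, best_cost = events, cost
    if best is None:
        return None, 0.0
    return best, prod(prob[e] for e in best)


best_set, best_prob = mpmcs(TREE, PROB)
print(sorted(best_set), best_prob)
```

In this toy tree the minimal cut sets are {A, B} and {C}; the first has the higher joint probability. Exhaustive search is exponential in the number of basic events, which is the motivation for delegating the search to a solver.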

Intrusion Detection for Industrial Control Systems: Evaluation Analysis and Adversarial Attacks

Nov 08, 2019

Abstract: Neural networks are increasingly used in security applications for intrusion detection on industrial control systems. In this work we examine two areas that must be considered for their effective use. The first is their vulnerability to adversarial attacks when used in a time series setting. The second is the potential over-estimation of performance arising from data leakage artefacts. To investigate these areas we implement a long short-term memory (LSTM) based intrusion detection system (IDS) which effectively detects cyber-physical attacks on a water treatment testbed, providing a strong baseline IDS. To investigate adversarial attacks we model two different white box attackers. The first attacker is able to manipulate sensor readings on a subset of the Secure Water Treatment (SWaT) system. By creating a stream of adversarial data the attacker is able to hide the cyber-physical attacks from the IDS. For the cyber-physical attacks which are detected by the IDS, the attacker needed to compromise on average 2.48 of the 12 sensors to hide the attacks from the IDS. The second attacker model we explore is an $L_{\infty}$ bounded attacker who can send fake readings to the IDS but, to remain imperceptible, limits their perturbations to the smallest $L_{\infty}$ value needed. Additionally, we examine data leakage problems arising from tuning for $F_1$ score on the whole SWaT attack set and propose a method to tune detection parameters that does not utilise any attack data. If attack after-effects are accounted for, then our new parameter tuning method achieved an $F_1$ score of $0.811 \pm 0.0103$.
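As an illustration of what an $L_{\infty}$ bound means for sensor readings, the sketch below clips each spoofed reading so that it differs from the true reading by at most a budget `eps`. It is not the attack from the paper; the function names, readings, and budget are hypothetical.

```python
def linf_distance(a, b):
    """Largest absolute per-sensor difference between two reading vectors."""
    return max(abs(x - y) for x, y in zip(a, b))


def project_linf(original, candidate, eps):
    """Clip `candidate` so every reading stays within `eps` of `original`."""
    return [
        o + max(-eps, min(eps, c - o))
        for o, c in zip(original, candidate)
    ]


# Hypothetical true readings and the readings an attacker would like to send
true_readings = [1.0, 2.0, 3.0]
desired_fake = [1.5, 1.0, 3.05]

bounded_fake = project_linf(true_readings, desired_fake, eps=0.1)
print(bounded_fake, linf_distance(true_readings, bounded_fake))
```

Readings that already lie within the budget pass through unchanged; the others are moved to the nearest value on the boundary of the allowed range.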

Deep Latent Defence

Oct 09, 2019

Abstract: Deep learning methods have shown state-of-the-art performance in a range of tasks from computer vision to natural language processing. However, it is well known that such systems are vulnerable to attackers who craft inputs in order to cause misclassification. The level of perturbation an attacker needs to introduce in order to cause such a misclassification can be extremely small, and often imperceptible. This is of significant security concern, particularly where misclassification can cause harm to humans. We thus propose Deep Latent Defence, an architecture which seeks to combine adversarial training with a detection system. At its core Deep Latent Defence has an adversarially trained neural network. A series of encoders take the intermediate layer representation of data as it passes through the network and project it to a latent space which we use for detecting adversarial samples via a $k$-NN classifier. We present results using both grey and white box attackers, as well as an adaptive $L_{\infty}$ bounded attack which was constructed specifically to try to evade our defence. We find that even under the strongest attacker model that we have investigated our defence is able to offer significant defensive benefits.
