Chi-Yang Hsu

Thought Graph: Generating Thought Process for Biological Reasoning

Mar 11, 2024

Abstract: We present the Thought Graph, a novel framework to support complex reasoning, and use gene set analysis as an example to uncover semantic relationships between biological processes. Our framework stands out for its ability to provide a deeper understanding of gene sets, surpassing GSEA by 40.28% and LLM baselines by 5.38% based on cosine similarity to human annotations. Our analysis further provides insights into future directions for naming biological processes and implications for bioinformatics and precision medicine.
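The abstract reports its results in terms of cosine similarity to human annotations. As a minimal sketch of that metric only, assuming the generated process name and the human annotation have each already been mapped to a numeric embedding vector (the vectors and the function name `cosine_similarity` below are hypothetical and not taken from the paper):

```python
import math


def cosine_similarity(u, v):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Convention chosen for this sketch: a zero vector has similarity 0.
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Hypothetical embeddings, used for illustration only.
generated_embedding = [0.2, 0.7, 0.1]
annotation_embedding = [0.25, 0.6, 0.15]

score = cosine_similarity(generated_embedding, annotation_embedding)
print(f"cosine similarity: {score:.3f}")
```

A score close to 1 means the two vectors point in nearly the same direction. How the paper produces its embeddings and aggregates scores across gene sets is not stated in the abstract.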

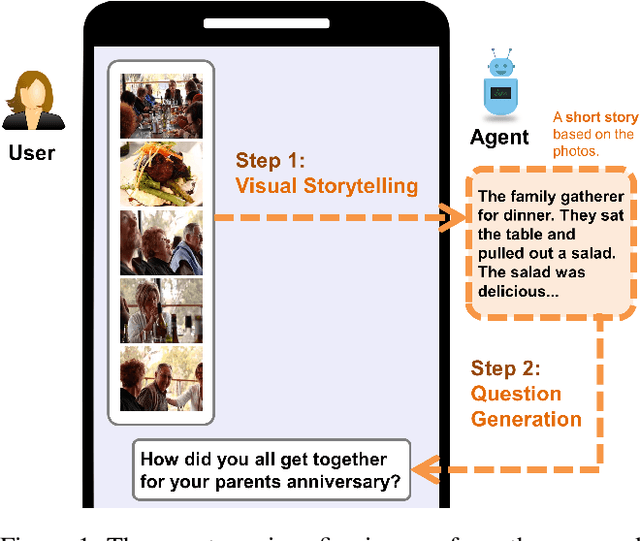

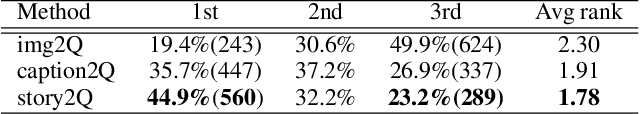

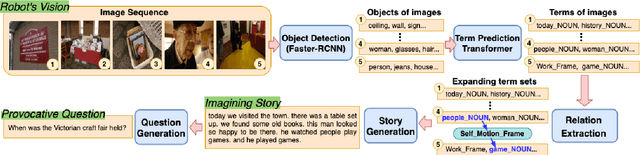

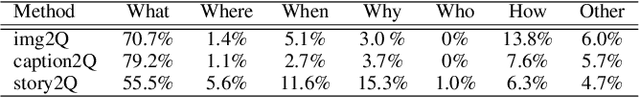

Let's Talk! Striking Up Conversations via Conversational Visual Question Generation

May 19, 2022

Abstract: An engaging and provocative question can open up a great conversation. In this work, we explore a novel scenario: a conversation agent views a set of the user's photos (for example, from social media platforms) and asks an engaging question to initiate a conversation with the user. Existing vision-to-question models mostly generate tedious and obvious questions, which might not be ideal conversation starters. This paper introduces a two-phase framework that first generates a visual story for the photo set and then uses the story to produce an interesting question. The human evaluation shows that our framework generates more response-provoking questions for starting conversations than other vision-to-question baselines.

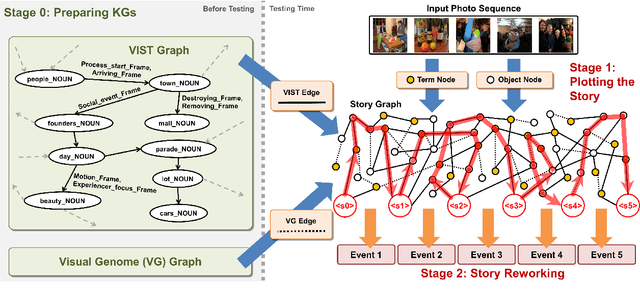

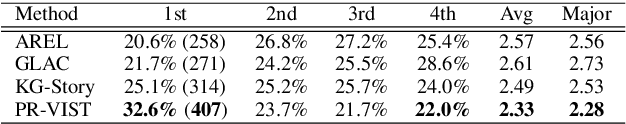

Plot and Rework: Modeling Storylines for Visual Storytelling

May 23, 2021

Abstract: Writing a coherent and engaging story is not easy. Creative writers use their knowledge and worldview to put disjointed elements together to form a coherent storyline, and work and rework iteratively toward perfection. Automated visual storytelling (VIST) models, however, make poor use of external knowledge and iterative generation when attempting to create stories. This paper introduces PR-VIST, a framework that represents the input image sequence as a story graph in which it finds the best path to form a storyline. PR-VIST then takes this path and learns to generate the final story via an iterative training process. This framework produces stories that are superior in terms of diversity, coherence, and humanness, per both automatic and human evaluations. An ablation study shows that both plotting and reworking contribute to the model's superiority.

A `Sourceful' Twist: Emoji Prediction Based on Sentiment, Hashtags and Application Source

Mar 14, 2021

Abstract: We widely use emojis in social networking to heighten, mitigate, or negate the sentiment of the text. Emoji suggestions already exist in many cross-platform applications, but an emoji is predicted based solely on a few prominent words rather than on an understanding of the subject and substance of the text. In this paper, we showcase the importance of using Twitter features to help the model understand the sentiment involved and hence predict the most suitable emoji for the text. Hashtags and application sources (such as Android) are two features that we found to be important yet underused in emoji prediction and in Twitter sentiment analysis as a whole. To address this shortcoming and to further understand emoji behavioral patterns, we propose a more balanced dataset built by crawling additional Twitter data, including timestamps, hashtags, and application sources, which act as additional attributes of the tweet. Our data analysis and neural network model performance evaluations show that using hashtags and application sources as features allows the model to encode different information and is effective in emoji prediction.

Knowledge-Enriched Visual Storytelling

Dec 03, 2019

Abstract: Stories are diverse and highly personalized, resulting in a large possible output space for story generation. Existing end-to-end approaches produce monotonous stories because they are limited to the vocabulary and knowledge in a single training dataset. This paper introduces KG-Story, a three-stage framework that allows the story generation model to take advantage of external knowledge graphs to produce interesting stories. KG-Story distills a set of representative words from the input prompts, enriches the word set using external knowledge graphs, and finally generates stories based on the enriched word set. This distill-enrich-generate framework allows the use of external resources not only for the enrichment phase, but also for the distillation and generation phases. In this paper, we show the superiority of KG-Story for visual storytelling, where the input prompt is a sequence of five photos and the output is a short story. Per the human ranking evaluation, stories generated by KG-Story are on average ranked better than those of the state-of-the-art systems. Our code and output stories are available at https://github.com/zychen423/KE-VIST.
