Chi-Man Wong

PKGM: A Pre-trained Knowledge Graph Model for E-commerce Application

Mar 02, 2022

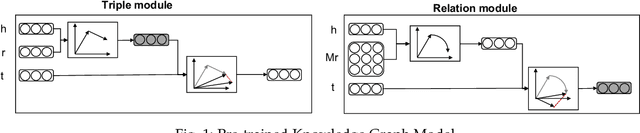

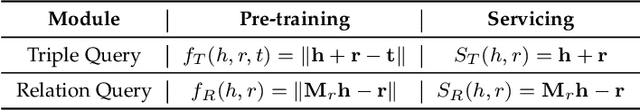

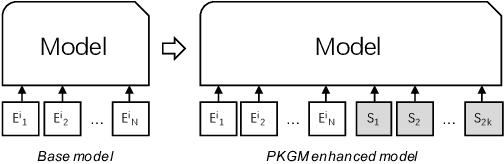

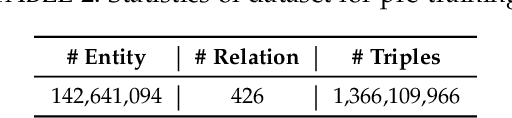

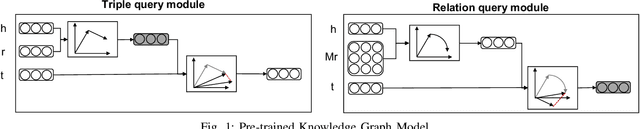

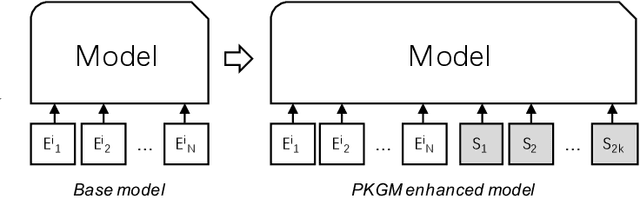

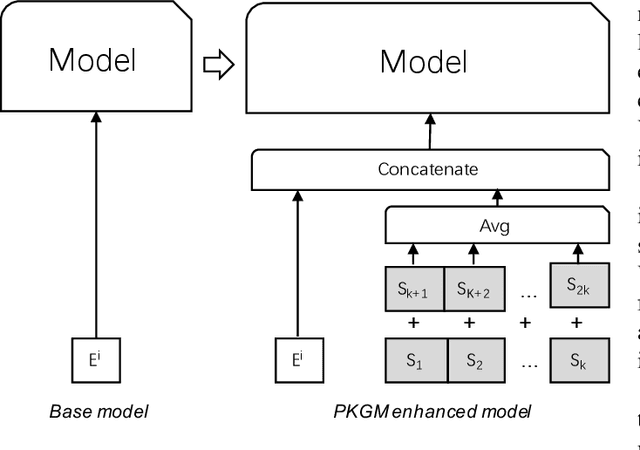

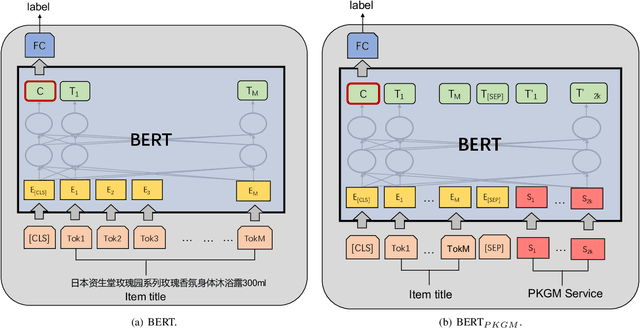

Abstract: In recent years, knowledge graphs have been widely applied as a uniform way to organize data and have enhanced many tasks that require knowledge. On the online shopping platform Taobao, we built a billion-scale e-commerce product knowledge graph. It organizes data uniformly and provides item knowledge services for various tasks such as item recommendation. Usually, such knowledge services are provided through triple data, but this implementation involves (1) tedious data selection work on the product knowledge graph and (2) task model design work to infuse the triple knowledge. More importantly, the product knowledge graph is far from complete, resulting in error propagation to knowledge-enhanced tasks. To avoid these problems, we propose a Pre-trained Knowledge Graph Model (PKGM) for the billion-scale product knowledge graph. On the one hand, it can provide item knowledge services in a uniform way, through service vectors, for embedding-based and item-knowledge-related task models without accessing triple data. On the other hand, its service is based on an implicitly completed product knowledge graph, overcoming the common incompleteness issue. We also propose two general ways to integrate the service vectors from PKGM into downstream task models. We test PKGM on five knowledge-related tasks: item classification, item resolution, item recommendation, scene detection, and sequential recommendation. Experimental results show that PKGM introduces significant performance gains on these tasks, illustrating the usefulness of service vectors from PKGM.

Billion-scale Pre-trained E-commerce Product Knowledge Graph Model

May 02, 2021

Abstract: In recent years, knowledge graphs have been widely applied to organize data in a uniform way and to enhance many tasks that require knowledge, for example, online shopping, which has greatly facilitated people's lives. To serve as a backbone for online shopping platforms, we built a billion-scale e-commerce product knowledge graph for various item knowledge services such as item recommendation. However, such knowledge services usually involve tedious data selection and model design for knowledge infusion, which might bring inappropriate results. To avoid this problem, we propose a Pre-trained Knowledge Graph Model (PKGM) for our billion-scale e-commerce product knowledge graph, providing item knowledge services in a uniform way for embedding-based models without accessing triple data in the knowledge graph. Notably, PKGM can also complete knowledge graphs while serving, thereby overcoming the common incompleteness issue in knowledge graphs. We test PKGM on three knowledge-related tasks: item classification, same-item identification, and recommendation. Experimental results show that PKGM successfully improves the performance of each task.

Improving Conversational Recommendation System by Pretraining on Billions Scale of Knowledge Graph

Apr 30, 2021

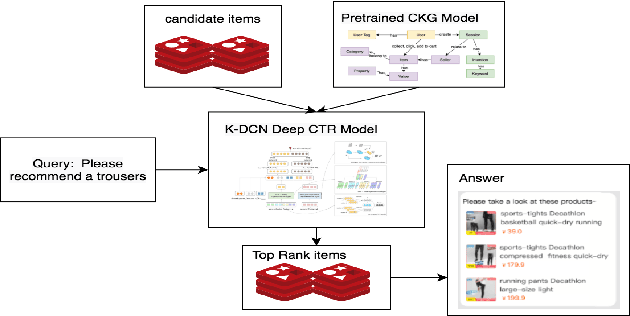

Abstract: Conversational Recommender Systems (CRSs) on e-commerce platforms aim to recommend items to users via multiple conversational interactions. Click-through rate (CTR) prediction models are commonly used for ranking candidate items. However, most CRSs suffer from data scarcity and sparseness. To address this issue, we propose a novel knowledge-enhanced deep cross network (K-DCN), a two-step (pretrain and fine-tune) CTR prediction model for recommending items. We first construct a billion-scale conversation knowledge graph (CKG) from information about users, items, and conversations, and then pretrain on the CKG by introducing a knowledge graph embedding method and a graph convolution network to encode semantic and structural information, respectively. To make the CTR prediction model aware of the current state of users and the relationship between dialogues and items, we introduce user-state and dialogue-interaction representations based on the pre-trained CKG and propose K-DCN. In K-DCN, we fuse the user-state representation, the dialogue-interaction representation, and other normal feature representations via a deep cross network, which produces the ranking of candidate items to be recommended. We show experimentally that our proposal significantly outperforms baselines, and we show its real-world application in Alime.
