Changming Zhao

Semi-Supervised Transfer Boosting (SS-TrBoosting)

Dec 04, 2024

Abstract:Semi-supervised domain adaptation (SSDA) aims at training a high-performance model for a target domain using few labeled target data, many unlabeled target data, and plenty of auxiliary data from a source domain. Previous works in SSDA mainly focused on learning transferable representations across domains. However, it is difficult to find a feature space where the source and target domains share the same conditional probability distribution. Additionally, there is no flexible and effective strategy extending existing unsupervised domain adaptation (UDA) approaches to SSDA settings. In order to solve the above two challenges, we propose a novel fine-tuning framework, semi-supervised transfer boosting (SS-TrBoosting). Given a well-trained deep learning-based UDA or SSDA model, we use it as the initial model, generate additional base learners by boosting, and then use all of them as an ensemble. More specifically, half of the base learners are generated by supervised domain adaptation, and half by semi-supervised learning. Furthermore, for more efficient data transmission and better data privacy protection, we propose a source data generation approach to extend SS-TrBoosting to semi-supervised source-free domain adaptation (SS-SFDA). Extensive experiments showed that SS-TrBoosting can be applied to a variety of existing UDA, SSDA and SFDA approaches to further improve their performance.

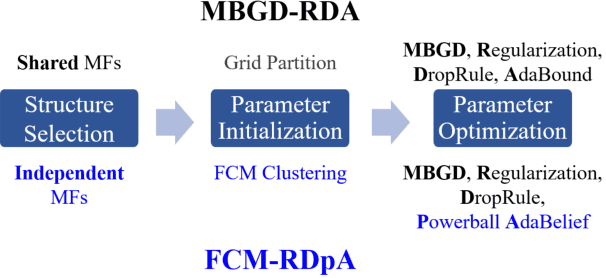

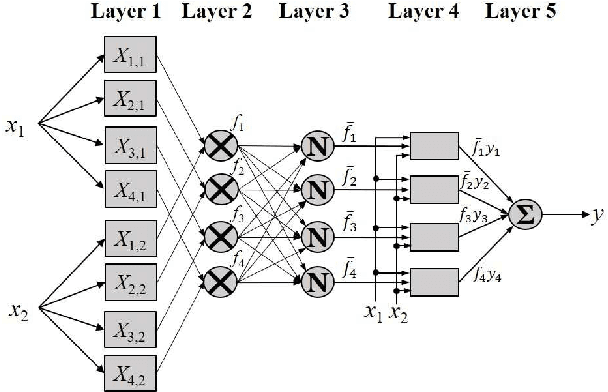

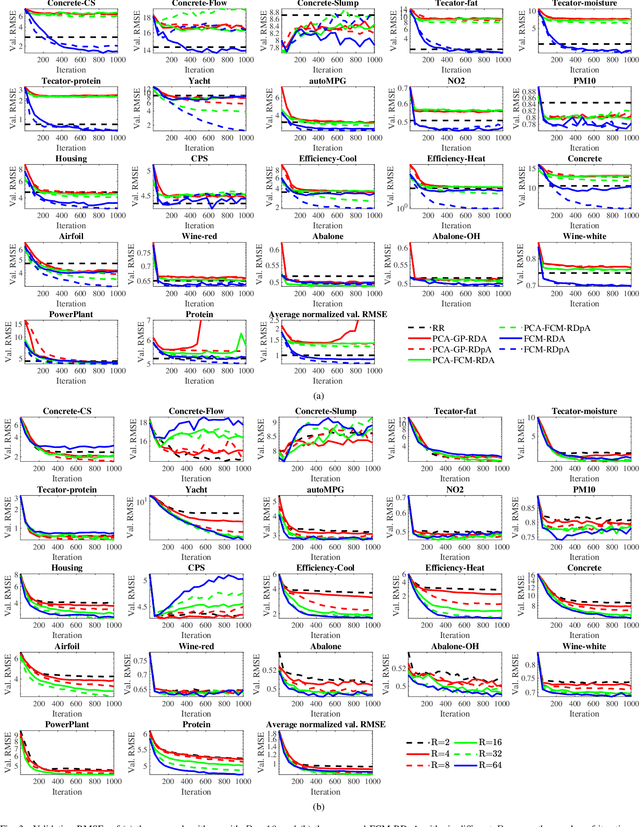

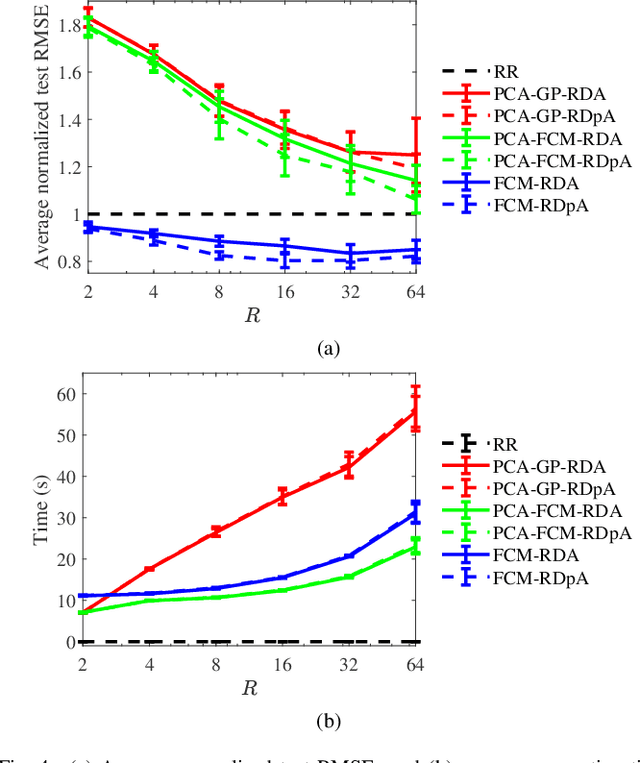

FCM-RDpA: TSK Fuzzy Regression Model Construction Using Fuzzy C-Means Clustering, Regularization, DropRule, and Powerball AdaBelief

Nov 30, 2020

Abstract:To effectively optimize Takagi-Sugeno-Kang (TSK) fuzzy systems for regression problems, a mini-batch gradient descent with regularization, DropRule, and AdaBound (MBGD-RDA) algorithm was recently proposed. This paper further proposes FCM-RDpA, which improves MBGD-RDA by replacing the grid partition approach in rule initialization by fuzzy c-means clustering, and AdaBound by Powerball AdaBelief, which integrates recently proposed Powerball gradient and AdaBelief to further expedite and stabilize parameter optimization. Extensive experiments on 22 regression datasets with various sizes and dimensionalities validated the superiority of FCM-RDpA over MBGD-RDA, especially when the feature dimensionality is higher. We also propose an additional approach, FCM-RDpAx, that further improves FCM-RDpA by using augmented features in both the antecedents and consequents of the rules.
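As a rough illustration of the optimizer named in this abstract, the sketch below applies the Powerball transform, sign(g)·|g|^γ, to each gradient component and then feeds the result into an AdaBelief-style update. This ordering, the function names `powerball` and `powerball_adabelief_step`, and all default hyperparameters are assumptions made for illustration; the paper's exact update rule may differ.

```python
import math

def powerball(grad, gamma=0.5):
    """Element-wise Powerball transform: sign(g) * |g|**gamma (gamma assumed in [0, 1))."""
    return [math.copysign(abs(g) ** gamma, g) for g in grad]

def powerball_adabelief_step(theta, grad, m, s, t, lr=0.01, gamma=0.5,
                             beta1=0.9, beta2=0.999, eps=1e-8):
    """One hypothetical Powerball AdaBelief step on plain Python lists.

    theta, grad, m, s are equal-length lists; t is the 1-based step count.
    Returns the updated (theta, m, s).
    """
    g = powerball(grad, gamma)  # assumption: transform the raw gradient first
    new_theta, new_m, new_s = [], [], []
    for th, gi, mi, si in zip(theta, g, m, s):
        mi = beta1 * mi + (1 - beta1) * gi                    # first moment
        si = beta2 * si + (1 - beta2) * (gi - mi) ** 2 + eps  # "belief" in the gradient
        m_hat = mi / (1 - beta1 ** t)                         # bias corrections
        s_hat = si / (1 - beta2 ** t)
        new_theta.append(th - lr * m_hat / (math.sqrt(s_hat) + eps))
        new_m.append(mi)
        new_s.append(si)
    return new_theta, new_m, new_s

# Toy usage: one step on f(theta) = theta**2, whose gradient is 2 * theta.
theta, m, s = [1.0], [0.0], [0.0]
theta, m, s = powerball_adabelief_step(theta, [2 * theta[0]], m, s, t=1)
```

With γ < 1 the transform amplifies small gradient components and damps large ones, which is the usual motivation given for Powerball-style methods.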

BoostTree and BoostForest for Ensemble Learning

Mar 21, 2020

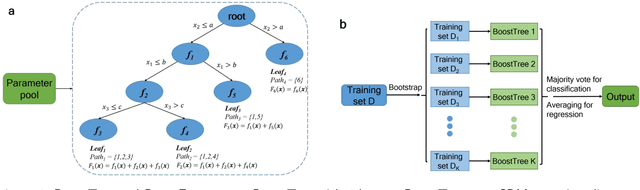

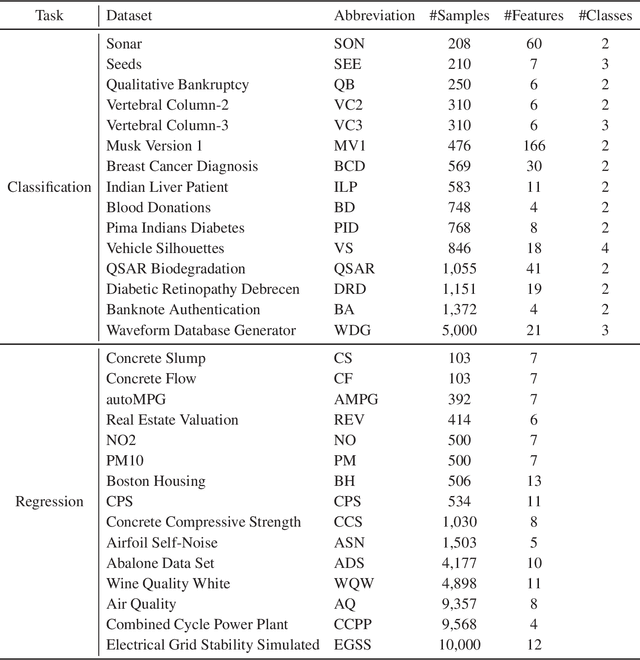

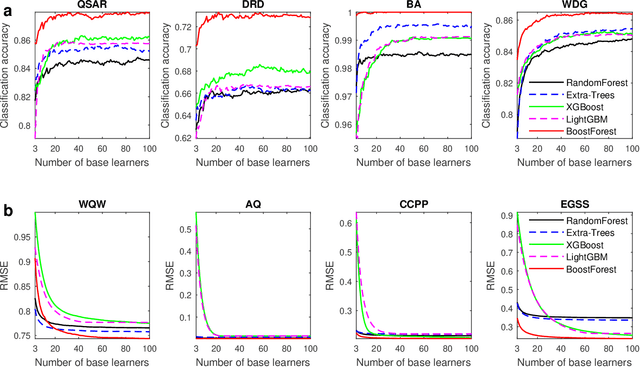

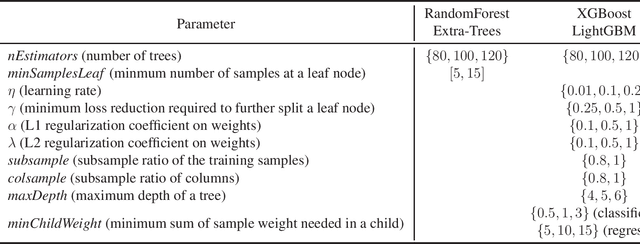

Abstract:Bootstrap aggregation (Bagging) and boosting are two popular ensemble learning approaches, which combine multiple base learners to generate a composite learner. This article proposes BoostForest, which is an ensemble learning approach using BoostTree as base learners and can be used for both classification and regression. BoostTree constructs a tree by gradient boosting, which trains a linear or nonlinear model at each node. When a new sample comes in, BoostTree first sorts it down to a leaf, then computes the final prediction by summing up the outputs of all models along the path from the root node to that leaf. BoostTree achieves high randomness (diversity) by sampling its parameters randomly from a parameter pool, and selecting a subset of features randomly at node splitting. BoostForest further increases the randomness by bootstrapping the training data in constructing different BoostTrees. BoostForest is compared with four classical ensemble learning approaches on 30 classification and regression datasets, demonstrating that it can generate more accurate and more robust composite learners.
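The prediction rule described in this abstract (sort the sample down to a leaf, then sum the outputs of every node model on the root-to-leaf path) can be sketched as follows. The `Node` class, its field names, and the threshold-based split rule are hypothetical choices for illustration, not the authors' implementation, and the node models here are hand-written rather than fitted by gradient boosting.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

@dataclass
class Node:
    model: Callable[[Sequence[float]], float]  # model attached to this node
    feature: int = 0                           # split feature (unused at leaves)
    threshold: float = 0.0                     # split threshold (unused at leaves)
    left: Optional["Node"] = None
    right: Optional["Node"] = None

    def is_leaf(self) -> bool:
        return self.left is None and self.right is None

def predict(root: Node, x: Sequence[float]) -> float:
    """Sum the outputs of all node models on the path from the root to x's leaf."""
    total = 0.0
    node = root
    while node is not None:
        total += node.model(x)
        if node.is_leaf():
            break
        # Assumed split rule: go left when the feature value is <= threshold.
        node = node.left if x[node.feature] <= node.threshold else node.right
    return total

# Toy tree: a constant model at the root, a linear model and a constant at the leaves.
root = Node(
    model=lambda x: 1.0,
    feature=0,
    threshold=0.0,
    left=Node(model=lambda x: 0.5 * x[0]),
    right=Node(model=lambda x: -2.0),
)

left_pred = predict(root, [-2.0])   # 1.0 + 0.5 * (-2.0) = 0.0
right_pred = predict(root, [3.0])   # 1.0 + (-2.0) = -1.0
```

Because each node contributes additively, a deeper path refines the prediction of its ancestors, which mirrors how gradient boosting adds successive learners.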

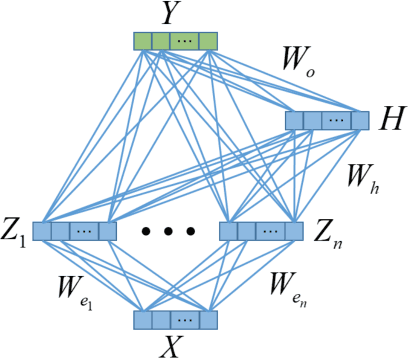

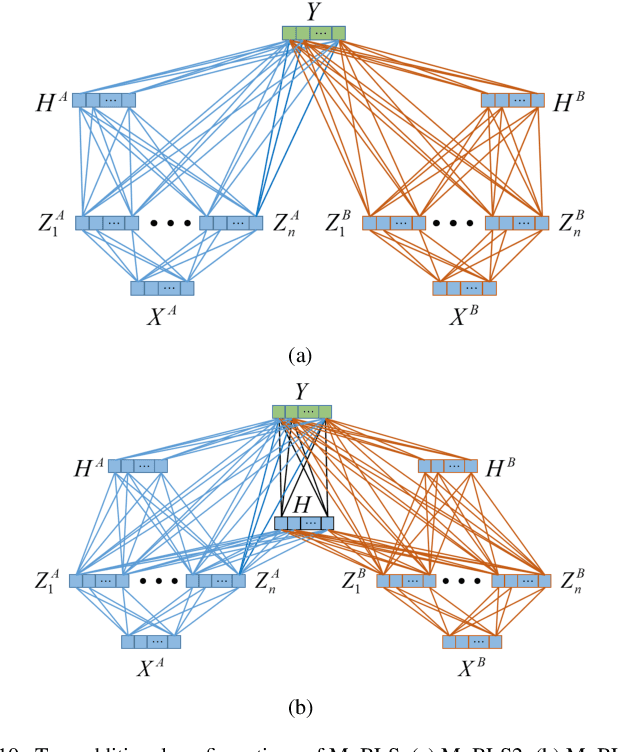

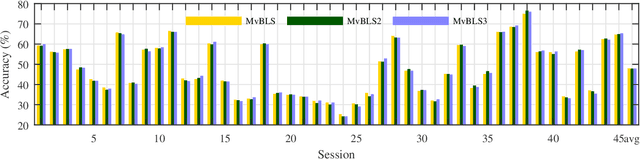

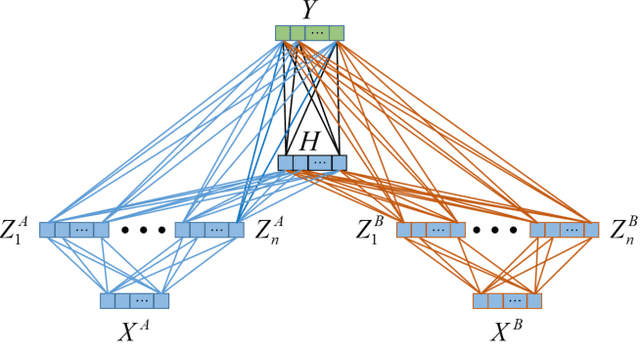

Multi-View Broad Learning System for Primate Oculomotor Decision Decoding

Aug 16, 2019

Abstract:Multi-view learning improves the learning performance by utilizing multi-view data: data collected from multiple sources, or feature sets extracted from the same data source. This approach is suitable for primate brain state decoding using cortical neural signals, because the complementary components of simultaneously recorded neural signals, local field potentials (LFPs) and action potentials (spikes), can be treated as two views. In this paper, we extended the broad learning system (BLS), a recently proposed wide neural network architecture, from single-view learning to multi-view learning, and validated its performance in monkey oculomotor decision decoding from medial frontal LFPs and spikes. We demonstrated that medial frontal LFPs and spikes in non-human primates do contain complementary information about the oculomotor decision, and that the proposed multi-view BLS classifies the oculomotor decision more effectively than several classical and state-of-the-art single-view and multi-view learning approaches.
