BoostTree and BoostForest for Ensemble Learning

Mar 21, 2020

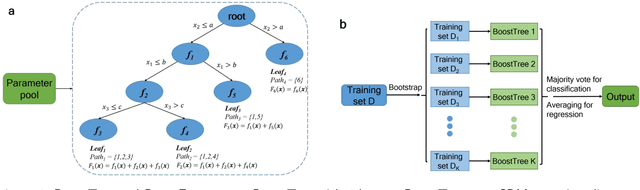

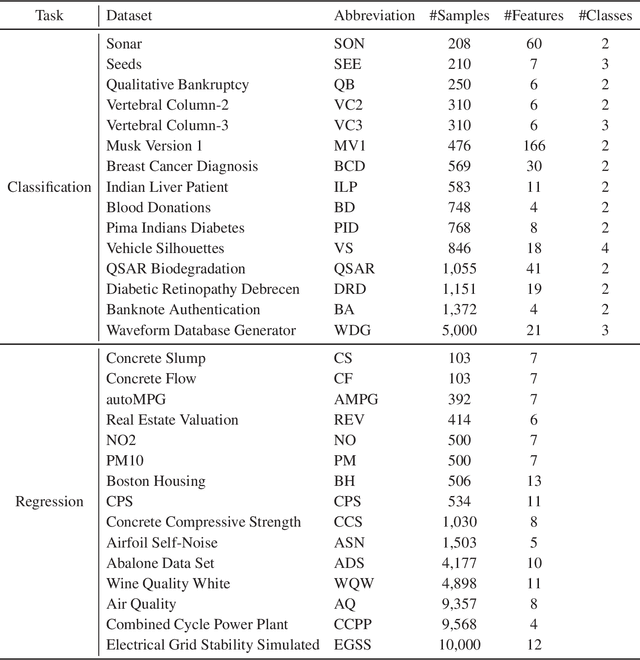

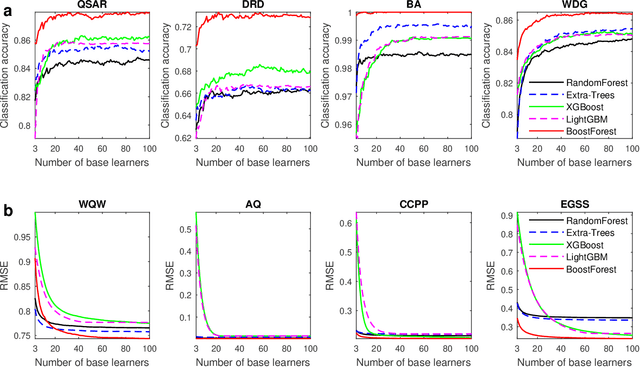

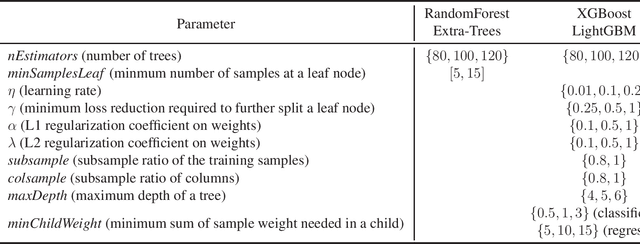

Bootstrap aggregation (bagging) and boosting are two popular ensemble learning approaches that combine multiple base learners into a composite learner. This article proposes BoostForest, an ensemble learning approach that uses BoostTrees as base learners and can be applied to both classification and regression. BoostTree constructs a tree by gradient boosting, training a linear or nonlinear model at each node. Given a new sample, BoostTree first sorts it down to a leaf, then computes the final prediction by summing the outputs of all models along the path from the root node to that leaf. BoostTree achieves high randomness (diversity) by sampling its parameters randomly from a parameter pool and by randomly selecting a subset of features at each node split. BoostForest further increases the randomness by bootstrapping the training data when constructing different BoostTrees. BoostForest is compared with four classical ensemble learning approaches on 30 classification and regression datasets, and the results show that it generates more accurate and more robust composite learners.
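The mechanism described in the abstract can be illustrated with a minimal regression sketch. It is not the authors' implementation, and several details are assumptions made for illustration only: each node holds a ridge regression model fitted to the residuals left by its ancestors (gradient boosting under squared loss), the parameter pool is a small hypothetical set of ridge penalties, splits are taken at the median of a randomly chosen feature subset, and the forest averages its trees. All function and parameter names (`build`, `predict_one`, `fit_forest`, `lams`, `min_leaf`) are invented for this example.

```python
import numpy as np


class Node:
    def __init__(self, w, b):
        self.w, self.b = w, b      # linear model stored at this node
        self.feature = None        # split feature index (None for a leaf)
        self.threshold = None
        self.left = self.right = None

    def output(self, X):
        return X @ self.w + self.b


def fit_ridge(X, r, lam):
    """Ridge regression of residuals r on X; the intercept is not penalized."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    reg = lam * np.eye(Xb.shape[1])
    reg[-1, -1] = 0.0
    coef = np.linalg.solve(Xb.T @ Xb + reg, Xb.T @ r)
    return coef[:-1], coef[-1]


def build(X, residual, depth, rng, lams=(0.1, 1.0, 10.0), min_leaf=5):
    lam = rng.choice(lams)                      # parameter drawn from a pool (assumed pool)
    node = Node(*fit_ridge(X, residual, lam))
    residual = residual - node.output(X)        # children fit what this node leaves over
    if depth == 0 or len(X) < 2 * min_leaf:
        return node
    k = max(1, int(np.sqrt(X.shape[1])))        # size of the random feature subset
    best = None
    for j in rng.choice(X.shape[1], size=k, replace=False):
        t = np.median(X[:, j])                  # assumed split rule: median threshold
        mask = X[:, j] <= t
        n_left = mask.sum()
        if min_leaf <= n_left <= len(X) - min_leaf:
            sse = residual[mask].var() * n_left + residual[~mask].var() * (len(X) - n_left)
            if best is None or sse < best[0]:
                best = (sse, j, t, mask)
    if best is None:
        return node
    _, node.feature, node.threshold, mask = best
    node.left = build(X[mask], residual[mask], depth - 1, rng, lams, min_leaf)
    node.right = build(X[~mask], residual[~mask], depth - 1, rng, lams, min_leaf)
    return node


def predict_one(root, x):
    """Sort x down to a leaf, summing the outputs of every model on the path."""
    total, node = 0.0, root
    while True:
        total += node.output(x)
        if node.feature is None:
            return total
        node = node.left if x[node.feature] <= node.threshold else node.right


def fit_forest(X, y, n_trees=20, depth=3, seed=0):
    rng = np.random.default_rng(seed)
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, len(X), size=len(X))   # bootstrap replicate
        trees.append(build(X[idx], y[idx], depth, rng))
    return trees


def predict_forest(trees, X):
    # Averaging the trees is an assumed aggregation rule for regression.
    return np.array([np.mean([predict_one(t, x) for t in trees]) for x in X])
```

Calling `fit_forest(X, y)` on a NumPy feature matrix and target vector returns a list of trees, and `predict_forest(trees, X_new)` returns one prediction per row. The three sources of diversity named in the abstract appear as the `rng.choice(lams)` draw, the random feature subset inside `build`, and the bootstrap indices in `fit_forest`.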
