Chak Shing Lee

Free-RBF-KAN: Kolmogorov-Arnold Networks with Adaptive Radial Basis Functions for Efficient Function Learning

Jan 13, 2026

Abstract: Kolmogorov-Arnold Networks (KANs) have shown strong potential for efficiently approximating complex nonlinear functions. However, the original KAN formulation relies on B-spline basis functions, which incur substantial computational overhead due to De Boor's algorithm. To address this limitation, recent work has explored alternative basis functions such as radial basis functions (RBFs) that can improve computational efficiency and flexibility. Yet, standard RBF-KANs often sacrifice accuracy relative to the original KAN design. In this work, we propose Free-RBF-KAN, an RBF-based KAN architecture that incorporates adaptive learning grids and trainable smoothness to close this performance gap. Our method employs freely learnable RBF shapes that dynamically align grid representations with activation patterns, enabling expressive and adaptive function approximation. Additionally, we treat smoothness as a kernel parameter optimized jointly with network weights, without increasing computational complexity. We provide a general universality proof for RBF-KANs, which encompasses our Free-RBF-KAN formulation. Through a broad set of experiments, including multiscale function approximation, physics-informed machine learning, and PDE solution operator learning, Free-RBF-KAN achieves accuracy comparable to the original B-spline-based KAN while delivering faster training and inference. These results highlight Free-RBF-KAN as a compelling balance between computational efficiency and adaptive resolution, particularly for high-dimensional structured modeling tasks.
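The idea of an RBF-based KAN layer with movable grid points and a per-basis shape parameter can be illustrated with a small forward pass. This is only a sketch under stated assumptions, not the paper's implementation: the Gaussian kernel, the function name `rbf_kan_layer`, and the parameter names `centers`, `log_widths`, and `coeffs` are all hypothetical, and the abstract does not say which kernel family or parameterization Free-RBF-KAN uses. In an autodiff framework, all three arrays would be trained jointly.

```python
import numpy as np

def rbf_kan_layer(x, centers, log_widths, coeffs):
    """Forward pass of one RBF-based KAN layer (illustrative assumption only).

    x:          (batch, d_in) inputs
    centers:    (d_in, n_basis) grid points; trainable in an adaptive-grid variant
    log_widths: (d_in, n_basis) log of each basis width; a trainable shape parameter
    coeffs:     (d_in, n_basis, d_out) linear coefficients of each edge function
    """
    widths = np.exp(log_widths)  # exponentiate so widths stay positive
    # Scaled distance of every input coordinate to every center: (batch, d_in, n_basis)
    z = (x[:, :, None] - centers[None]) / widths[None]
    phi = np.exp(-z ** 2)  # Gaussian RBF features (assumed kernel)
    # Sum the univariate edge functions over input dimensions and basis functions.
    return np.einsum("bik,iko->bo", phi, coeffs)

rng = np.random.default_rng(0)
d_in, n_basis, d_out = 3, 8, 2
centers = np.tile(np.linspace(-1.0, 1.0, n_basis), (d_in, 1))  # uniform initial grid
log_widths = np.full((d_in, n_basis), np.log(0.3))
coeffs = rng.normal(size=(d_in, n_basis, d_out))
x = rng.uniform(-1.0, 1.0, size=(5, d_in))
y = rbf_kan_layer(x, centers, log_widths, coeffs)  # shape (5, 2)
```

Because the features are closed-form exponentials, the layer avoids the recursive B-spline evaluation that the abstract identifies as the cost of the original KAN.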

Deep Symbolic Optimization: Reinforcement Learning for Symbolic Mathematics

May 16, 2025

Abstract: Deep Symbolic Optimization (DSO) is a novel computational framework that enables symbolic optimization for scientific discovery, particularly in applications involving the search for intricate symbolic structures. One notable example is equation discovery, which aims to automatically derive mathematical models expressed in symbolic form. In DSO, the discovery process is formulated as a sequential decision-making task. A generative neural network learns a probabilistic model over a vast space of candidate symbolic expressions, while reinforcement learning strategies guide the search toward the most promising regions. This approach integrates gradient-based optimization with evolutionary and local search techniques, and it incorporates in-situ constraints, domain-specific priors, and advanced policy optimization methods. The result is a robust framework capable of efficiently exploring extensive search spaces to identify interpretable and physically meaningful models. Extensive evaluations on benchmark problems have demonstrated that DSO achieves state-of-the-art performance in both accuracy and interpretability. In this chapter, we provide a comprehensive overview of the DSO framework and illustrate its transformative potential for automating symbolic optimization in scientific discovery.
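The framing of equation discovery as a sequential decision-making task can be sketched as token-by-token sampling of an expression in prefix notation, followed by scoring against data. The sketch below is an assumption-laden illustration, not the DSO codebase: the token library, the length constraint, the negative mean-squared-error reward, and the fixed uniform token probabilities are all hypothetical stand-ins. In DSO a neural network would produce the token probabilities and be updated by reinforcement learning; here the sampler is never trained.

```python
import numpy as np

# Hypothetical token library: name -> (arity, function)
LIBRARY = {
    "add": (2, np.add),
    "mul": (2, np.multiply),
    "sin": (1, np.sin),
    "x": (0, None),
    "1": (0, None),
}
TOKENS = list(LIBRARY)

def sample_expression(probs, rng, max_len=15):
    """Sample a prefix-notation expression one token at a time."""
    tokens, open_slots = [], 1  # open_slots counts operands still to be filled
    while open_slots > 0:
        # Constraint applied during sampling: mask tokens that would
        # make the finished expression longer than max_len.
        mask = np.array([len(tokens) + open_slots + LIBRARY[t][0] <= max_len
                         for t in TOKENS])
        p = np.asarray(probs, dtype=float) * mask
        p /= p.sum()
        tok = TOKENS[rng.choice(len(TOKENS), p=p)]
        tokens.append(tok)
        open_slots += LIBRARY[tok][0] - 1
    return tokens

def evaluate(tokens, x):
    """Evaluate a prefix-notation expression on the array x."""
    def rec(i):
        tok = tokens[i]
        arity, fn = LIBRARY[tok]
        if arity == 0:
            return (x if tok == "x" else np.ones_like(x)), i + 1
        args, i = [], i + 1
        for _ in range(arity):
            val, i = rec(i)
            args.append(val)
        return fn(*args), i
    return rec(0)[0]

rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 50)
target = np.sin(x) + x
probs = np.ones(len(TOKENS)) / len(TOKENS)  # untrained, uniform policy
batch = [sample_expression(probs, rng) for _ in range(200)]
rewards = [-np.mean((evaluate(t, x) - target) ** 2) for t in batch]
best = batch[int(np.argmax(rewards))]  # highest-reward candidate in the batch
```

A learning loop would feed `rewards` back into the sampler so that later batches concentrate on higher-reward expressions.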

DisCo-DSO: Coupling Discrete and Continuous Optimization for Efficient Generative Design in Hybrid Spaces

Dec 15, 2024

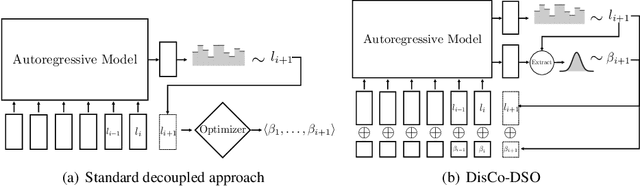

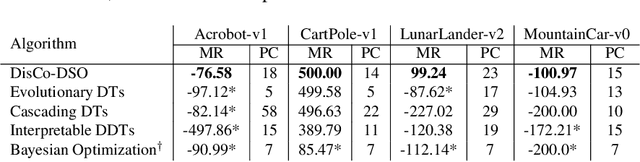

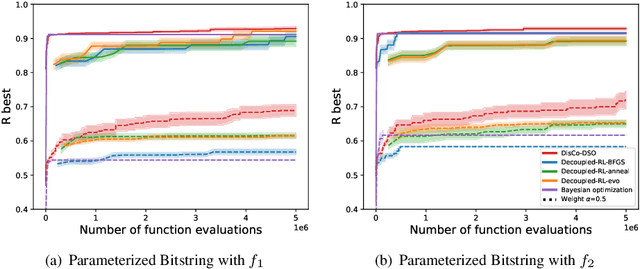

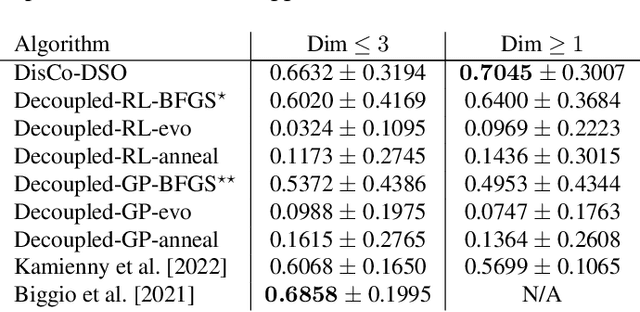

Abstract: We consider the challenge of black-box optimization within hybrid discrete-continuous and variable-length spaces, a problem that arises in various applications, such as decision tree learning and symbolic regression. We propose DisCo-DSO (Discrete-Continuous Deep Symbolic Optimization), a novel approach that uses a generative model to learn a joint distribution over discrete and continuous design variables to sample new hybrid designs. In contrast to standard decoupled approaches, in which the discrete and continuous variables are optimized separately, our joint optimization approach uses fewer objective function evaluations, is robust against non-differentiable objectives, and learns from prior samples to guide the search, leading to significant improvement in performance and sample efficiency. Our experiments on a diverse set of optimization tasks demonstrate that the advantages of DisCo-DSO become increasingly evident as the complexity of the problem increases. In particular, we illustrate DisCo-DSO's superiority over the state-of-the-art methods for interpretable reinforcement learning with decision trees.
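Sampling from a joint distribution over discrete and continuous design variables can be illustrated by drawing each discrete token together with a continuous value conditioned on it, and accumulating a single joint log-probability. This is a rough sketch under assumptions that are not from the paper: the function `sample_hybrid_design`, the categorical-plus-Gaussian parameterization, and the fixed sequence length are hypothetical (the abstract describes variable-length designs and does not specify the distribution family).

```python
import numpy as np

def sample_hybrid_design(logits, means, log_stds, rng, length=4):
    """Sample (discrete token, continuous value) pairs and their joint log-probability."""
    probs = np.exp(logits - logits.max())  # numerically stable softmax
    probs /= probs.sum()
    design, log_prob = [], 0.0
    for _ in range(length):
        k = rng.choice(len(probs), p=probs)    # discrete choice
        std = np.exp(log_stds[k])
        value = rng.normal(means[k], std)      # continuous value conditioned on k
        log_prob += np.log(probs[k])           # categorical term
        log_prob += (-0.5 * ((value - means[k]) / std) ** 2
                     - np.log(std) - 0.5 * np.log(2.0 * np.pi))  # Gaussian term
        design.append((int(k), float(value)))
    return design, float(log_prob)

rng = np.random.default_rng(0)
logits = np.zeros(3)                     # e.g. three candidate features to split on
means = np.array([0.0, 1.0, -1.0])       # e.g. per-feature threshold means
log_stds = np.log(np.array([0.5, 0.5, 0.5]))
design, log_prob = sample_hybrid_design(logits, means, log_stds, rng)
```

Because one log-probability covers both kinds of variable, a single reward signal from the black-box objective could update the discrete and continuous parts together, rather than running a separate inner optimization over the continuous values for each discrete candidate.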
